A Comparison of Results from a Spanish and English Mail Survey: Effects of Instruction Placement on Item Missingness

Wang, K., & Sha, M. (2013). A Comparison of Results from a Spanish and English Mail Survey: Effects of Instruction Placement on Item Missingness. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=1741

© the authors 2013. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Few research studies compare results from self-administered bilingual paper questionnaires on how the positioning of skip instructions may affect the respondent’s ability to follow skip patterns. Using data from the 2004 and 2005 administrations of the Phase 5 Pregnancy Risk Assessment Monitoring System (PRAMS) mail survey questionnaire, this paper attempts to fill this gap. We examined whether the positioning of skip instructions can produce differences in item nonresponse rates in subsequent items and how results compare between English and Spanish language questionnaires. These results will be of interest to designers of bilingual self-administered survey questionnaires in guiding respondents through the intended navigational path with skip patterns.

Keywords

item missingness, mail survey, PRAMS, Spanish

Acknowledgement

We would like to thank Jeremy Aldworth, Kathy Perham-Hester and Andy Peytchev for comments and statistical advice on earlier versions of this paper. We would also like to acknowledge the PRAMS Working Group: Alabama: Albert Woolbright, PhD; Alaska: Kathy Perham-Hester, MS, MPH; Arkansas: Mary McGehee, PhD; Colorado: Alyson Shupe, PhD; Delaware: George Yocher, MS; Florida: Aruna Surendera Babu; Georgia: Carol Hoban, PhD, MS, MPH; Hawaii: Mark Eshima, MA; Illinois: Theresa Sandidge, MA; Louisiana: Joan Wightkin, DrPH; Maine: Tom Patenaude; Maryland: Diana Cheng, MD; Massachusetts: Hafsatou Diop, MD, MPH; Michigan: Violanda Grigorescu, MD, MSPH; Minnesota: Judy Punyko, PhD, MPH; Mississippi: Marilyn Jones, M.Ed; Missouri: Venkata Garikapaty, MSc, MS, PhD, MPH; Montana: JoAnn Dotson; Nebraska: Brenda Coufal; New Jersey: Lakota Kruse, MD; New Mexico: Eirian Coronado, MA; New York State: Anne Radigan-Garcia; New York City: Candace Mulready-Ward, MPH; North Carolina: Kathleen Jones-Vessey, MS; North Dakota: Sandra Anset; Ohio: Connie Geidenberger, PhD; Oklahoma: Alicia Lincoln, MSW, MSPH; Oregon: Kenneth Rosenberg, MD; Pennsylvania: Tony Norwood; Rhode Island: Sam Viner-Brown, PhD; South Carolina: Mike Smith; South Dakota Tribal: Jennifer Irving, MPH; Texas: Kate Sullivan, PhD; Tennessee: David Law, PhD; Utah: Laurie Baksh; Vermont: Peggy Brozicevic; Virginia: Marilyn Wenner; Washington: Linda Lohdefinck; West Virginia: Melissa Baker, MA; Wisconsin: Katherine Kvale, PhD; Wyoming: Angi Crotsenberg; CDC PRAMS Team, Applied Sciences Branch, Division of Reproductive Health.

Introduction

One challenge that questionnaire designers face is how to make sure respondents answer questions they are supposed to answer and avoid answering questions they are not supposed to answer. Failure to answer questions (errors of omission) leads to missing data or item nonresponse. It is well known that item nonresponse rates are higher on surveys with skip patterns than on surveys without skip patterns (Turner, Lessler, George, Hubbard, & Witt, 1992; Featherston & Moy, 1990). Errors of commission, on the other hand, are errors in which the respondent provides a response to a question they should have skipped according to the questionnaire design. While such erroneously provided responses can be deleted after data collection, respondents have still taken the time to answer these questions. The respondent may have exerted considerable cognitive effort to answer questions that were difficult to answer because they were not meant to be answered. Thus, errors of commission may increase the respondent’s perception of burden and in turn increase the likelihood of terminating the interview before completion in an interviewer-administered setting or failing to complete and return a self-administered questionnaire.

For surveys with skip patterns, computer assisted interviewing (CAI) methods and well-trained interviewers can direct respondents through the correct navigational path of a questionnaire. In contrast, designers of mail questionnaires (and other self-administered paper questionnaires) face a challenge in how to guide respondents through the questionnaire using only visual means on paper.

Some research has been carried out on how question attributes such as positioning of a question on a page may affect skip pattern compliance (e.g. Dillman, Redline, & Carley-Baxter, 1999). However, very little, if any, research has been carried out on how the positioning of skip instructions may affect the respondent’s ability to follow skip patterns in self-administered paper surveys. In this paper, we use data from the first two years (2004-2005) of the Phase 5 Pregnancy Risk Assessment Monitoring System (PRAMS) survey to examine whether the positioning of question skip instructions can produce differences in item nonresponse rates in subsequent items. For 2004 and 2005, the PRAMS survey was administered in 30 vital records registry areas, consisting of 29 states and New York City.[1] Each registry area had its own survey instrument in both mail and phone administration modes. Our research focuses on the mail questionnaire.

While some survey items were common in all of the registry areas, each area could select its own questions of interest. This allowed for differences in the presentation of skip pattern instructions for similar items across the surveys. Our goal is to see if differences in how skip patterns were displayed were associated with differences in item nonresponse in subsequent questions.

Background

In an experiment involving university students, Dillman et al. (1999) examined the effects of eight question attributes on errors of omission (the respondent fails to answer items they are supposed to) and errors of commission (the respondent fails to skip items they are supposed to). They found that placement of a question at the bottom of a page was associated with an increase in item nonresponse for the subsequent item. Placement at the bottom of a page was also associated with an increase in errors of commission for the next item. Dillman et al. (1999) reasoned that questions at the bottom of a page interrupt the respondent’s attention to the questionnaire and such an interruption increases the likelihood that the respondent will make an error in following any skip instructions.

While Dillman et al. (1999) examined how question positioning (as well as other question attributes) can affect item nonresponse in subsequent items, a related issue is whether the positioning of skip instructions can affect item nonresponse. Considerable research has been carried out on using symbols and simple instructions to indicate straightforward skip patterns in self-administered paper questionnaire forms. For example, Redline, Dillman, Dajani and Scaggs (2003) report on an experiment conducted during the 2000 Decennial Census that tested different methods of displaying branching instructions. However, in some cases, a separate branching instruction is required because the skip pattern is more complicated. For example, suppose a question consists of a series of items that are each answered in a “yes/no” format, and the desired skip pattern is that if any of these items is answered with a “yes”, the respondent should go to the next question. If all of the items in the series are answered with “no”, the next question should be skipped. In this case, it is not possible to provide a visual guide for determining the next question. Instead, the survey designer must introduce a skip pattern instruction using text to indicate the next question. As previously noted, the placement of a question at the bottom of a page can interrupt respondent attention and lead to errors in following a skip pattern. We hypothesize that differences in the placement of skip instructions can also affect the respondent’s ability to follow the navigational path of a questionnaire.

Data and Methods

Our analysis utilized PRAMS data for 2004 and 2005 for all states (registry areas) from mail questionnaires. Sponsored by the Centers for Disease Control and Prevention (CDC) and state health departments, PRAMS is an on-going surveillance project of women who have recently given birth. The purpose of the project is to improve the health and well-being of mothers and infants by collecting information about maternal experiences before, during and after pregnancy. The PRAMS sample consists of stratified, systematic samples of 100 to 250 new mothers each month from each participating state’s frame of birth certificates, yielding state level sample sizes of about 1,000 to 3,400 in each year. The survey relies upon data collection through mail and telephone. In the mail phase, sampled mothers are contacted through varied follow-up attempts. After the last follow-up attempt by mail, those who have not responded to the mail questionnaire are followed up using computer assisted telephone interviewing (CATI). For this analysis, we only use cases from those completing the mail survey.

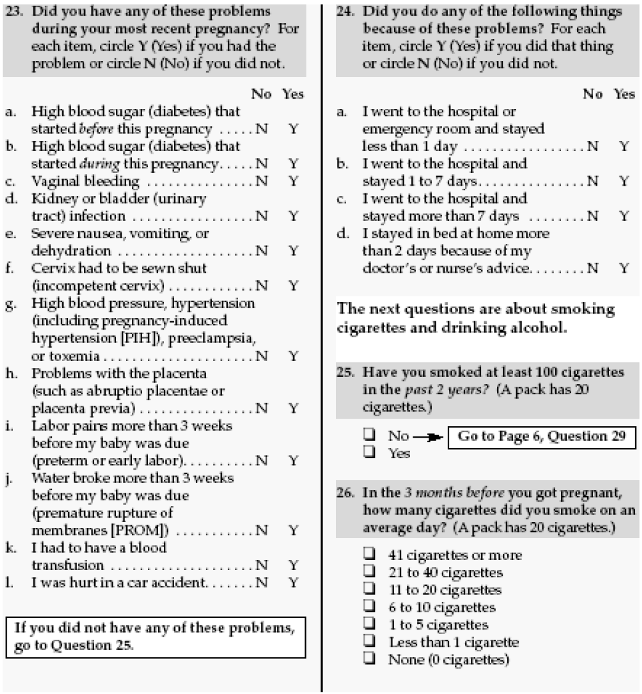

For this analysis, we take advantage of variation in how each state displayed the skip instruction between two items found on all the surveys. Core question Q22 of the survey consisted of a series of 12 “yes/no” items on problems experienced during the most recent pregnancy. If any of these items was answered with a “yes”, the respondent was supposed to answer core question Q23, which is a series of four “yes/no” items on trips to the hospital or emergency room, hospital stays or bed rest due to the problems reported in Q22. If all of the items in Q22 were answered “no”, the respondent was instructed to skip past item Q23. An example of how Q22 and Q23 were displayed for Mississippi in the 2004 and 2005 PRAMS is shown in Figure 1. In the Mississippi questionnaire, item 23 is core question Q22 and item 24 is core question Q23. For the 2004 and 2005 PRAMS, there were five different placements for the skip instruction between questions Q22 and Q23.

- Type 1: Instruction appears below Q22; Q23 appears on the same page

- Type 2: Instruction appears below Q22; Q23 appears on the next page, facing

- Type 3: Instruction appears below Q22; Q23 appears on the next page, not facing

- Type 4: Instruction appears at the top of the next column; Q23 appears on the same page

- Type 5: Instruction appears on the next page

We have ordered these types by the perceived level of interruption that the placement of the skip instruction is expected to have on respondents skipping all of the Q23 items. When the instruction does not appear immediately after Q22 (e.g. it is in the next column or on the next page, or a page must be turned to get to the next question), this represents a break in the path the respondent must follow, which may increase the likelihood that the instruction is missed. In addition, even if the instruction is seen, it may not be interpreted correctly if the respondent did not realize that “these problems” referred to the items in Q22.

Figure 1: Example of Type 1 Skip Pattern Instruction for Core Items 22 and 23, 2004 PRAMS – Mississippi Survey

We did not use cases from Maryland and Arkansas for 2004 because of issues with these data that may have produced misleading results for the analyses in this paper.[2] The data issues for these states in 2004 did not occur in 2005, so cases from 2005 are included in the analysis.

Our main outcome measure of interest is whether or not the respondent left all four items in Q23 blank. Because there was no explicit option for “don’t know” or “refuse” on the mail questionnaires, we cannot know for sure if items are being left blank due to having made a mistake in following the skip instruction or because the respondent would have chosen “don’t know” or “refuse” if these had been offered as response options. All analyses were conducted using SUDAAN version 10.0 (RTI International, 2008). Standard errors were computed based on the Taylor series approximation method accounting for the features of the PRAMS sample design. The weights used in the analysis reflect different probabilities of selection, and do not include adjustments for nonresponse and poststratification.[3]
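The outcome definition above can be sketched in code. The following is a minimal Python illustration and not the authors' SUDAAN procedure: the record layout, the field names (`q22`, `q23`, `weight`) and the three example records are hypothetical. A case is treated as eligible for Q23 if any Q22 item is "yes", and as an error of omission if all four Q23 items are blank.

```python
from typing import Optional, Sequence

Answer = Optional[str]  # "yes", "no", or None when the item was left blank


def eligible_for_q23(q22: Sequence[Answer]) -> bool:
    """A respondent should answer Q23 if any Q22 item was answered "yes"."""
    return any(a == "yes" for a in q22)


def all_q23_blank(q23: Sequence[Answer]) -> bool:
    """True when every Q23 item was left unanswered."""
    return all(a is None for a in q23)


def weighted_pct_all_blank(records) -> float:
    """Weighted percent of eligible respondents who left all Q23 items blank."""
    eligible = [r for r in records if eligible_for_q23(r["q22"])]
    total = sum(r["weight"] for r in eligible)
    blank = sum(r["weight"] for r in eligible if all_q23_blank(r["q23"]))
    return 100.0 * blank / total


# Hypothetical records, invented for illustration only.
records = [
    {"q22": ["no"] * 11 + ["yes"], "q23": [None] * 4, "weight": 1.0},
    {"q22": ["yes"] + ["no"] * 11, "q23": ["no", "yes", "no", "no"], "weight": 3.0},
    {"q22": ["no"] * 12, "q23": [None] * 4, "weight": 2.0},  # correctly skipped
]
pct = weighted_pct_all_blank(records)
```

This sketch produces only a weighted point estimate. Design-based standard errors of the kind reported in the paper would additionally require the stratification information and a Taylor series (linearization) variance estimator.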

Results

English Language Forms

Table 1 presents the percentages of respondents (among those eligible for the item Q23) who failed to answer all of the items in Q23 by each type of skip instruction position. The clearest pattern that emerges is that when the skip instruction does not appear below Q22 but instead in the next column (Type 4 – 10.2 percent) or next page (Type 5 – 10.8 percent), respondents are more likely to skip Q23 than when the instruction is placed immediately below item Q22. We speculate that respondents may have seen the instruction but not understood the reference to “these problems” in the skip instruction. If the instruction had been missed altogether, the respondent may have gone to the correct question but skipped over the question since it asks “Did you do any of the following because of these problems?”. In either case, referring to “these problems” in the skip instruction or the question itself may have been sufficiently ambiguous to cause respondents to skip Q23.

Table 1: Percent Leaving All Q23 Items Blank (Errors of Omission) by Skip Instruction Position – English, 2004 and 2005 PRAMS Mail Respondents, 30 Registry Areas

| Instruction Position | States | Percent All Q23 Blank | Standard Error |
|---|---|---|---|
| Type 1 – Instruction at bottom; Q23 on same page | AK, MD, ME, MS, NC, NY, SC, WV | 7.1 | 0.4 |
| Type 2 – Instruction at bottom; Q23 on next page (facing) | FL, GA, RI | 4.1 | 0.5 |
| Type 3 – Instruction at bottom; Q23 on next page (not facing) | AL, AR, HI, NJ, NYC, OH, OR, TX, WA | 8.0 | 0.4 |
| Type 4 – Instruction in next column; Q23 on same page | IL, LA, NE, OK, VT | 10.2 | 0.6 |
| Type 5 – Instruction on different page | CO, MI, MN, NM, UT | 10.8 | 0.5 |

All pairwise differences in the percentages of respondents leaving all items in Q23 blank are statistically significant at the .005 level, with two exceptions.[4] Differences in the percent leaving all items blank in Q23 between Type 1 and Type 3 and between Type 4 and Type 5 are not statistically significant. Among the three instruction placement types where the instruction was below Q22 (Types 1, 2 and 3), we expected rates of missing item Q23 to vary positively with “distance” between items Q22 and Q23. That is, we expected Type 1 to have the lowest rate of missingness and Type 3 to have the highest rate of missingness, with Type 2 somewhere in the middle. Instead, we find that Type 2 has the lowest rate at which all Q23 items are skipped, although this may be an artifact of having only a small number of states in this category.
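As a rough check on the pairwise comparisons, the Bonferroni-adjusted tests can be approximated from the published values in Table 1. The sketch below is an assumption-laden illustration and not the authors' SUDAAN contrasts: it treats the five placement groups as independent (they are made up of different states), uses a normal approximation, and works from the rounded percentages and standard errors.

```python
import math
from itertools import combinations

# Percent leaving all Q23 items blank and its standard error, copied from Table 1.
TABLE1 = {1: (7.1, 0.4), 2: (4.1, 0.5), 3: (8.0, 0.4), 4: (10.2, 0.6), 5: (10.8, 0.5)}


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


pairs = list(combinations(sorted(TABLE1), 2))  # 10 pairwise comparisons
alpha_adjusted = 0.05 / len(pairs)             # Bonferroni: .05 / 10 = .005

not_significant = []
for a, b in pairs:
    (pct_a, se_a), (pct_b, se_b) = TABLE1[a], TABLE1[b]
    # Assumes independent groups, so the variances of the two estimates add.
    z = (pct_a - pct_b) / math.sqrt(se_a**2 + se_b**2)
    if two_sided_p(z) >= alpha_adjusted:
        not_significant.append((a, b))
```

Under these assumptions, `not_significant` should contain only the Type 1 versus Type 3 and Type 4 versus Type 5 comparisons, in line with the exceptions noted in the text.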

Earlier, we noted that the placement of the instruction on the next page was associated with the highest rate of omission errors (Type 5 placement group). For four out of the five states in this group, Q22 appears on an even numbered page, so the skip instruction appears on the facing (odd numbered) page. For the fifth, Minnesota, Q22 appears on an odd numbered page, so the skip instruction is not visible until the respondent turns the page over. Perhaps not coincidentally, Minnesota has the highest rate of respondents failing to answer all of the items in Q23 (12.3 percent).

Since the placement of skip instructions was not randomly assigned for individual respondents or at the state level, differences in the characteristics of individual respondents or in characteristics at the state level may be confounding the relationships between skip instruction placement and errors of omission for item Q23. In order to address this potential confounding, we carried out a logistic regression analysis (among cases that were supposed to have answered Q23) in which whether or not all of the Q23 items were skipped was regressed on four dummy variables representing the five types of skip instruction. We included as covariates variables for the respondent’s level of education (0-8 years, 9-11 years, 12 years, 13-15 years, 16+ years), race (White, Black, Asian, Other), age (less than 20 years old, 20 to 29, 30 to 39, 40 and older), and year of the survey (to control for possible changes over time).[5] Aside from these demographic variables at the person level, we considered one other variable that might differ by state and could confound the relationship between skip instruction type and item nonresponse: the respondent’s propensity to have completed the survey, as approximated by the nonresponse weighting adjustment factor. Results from the regression are shown in Table 2. We provide predictive margins for each value of each predictor in order to facilitate interpretation of the effects of each variable. The predictive margin for a given level of a predictor is the average predicted response in the dependent variable if all sample cases had that value of the predictor (Graubard & Korn, 1999).
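To make the definition of a predictive margin concrete, the following Python sketch computes one for each instruction placement type. It is an illustration only: the coefficients are copied from Table 2, but the four respondents and their weights are hypothetical, and race, year and the nonresponse adjustment factor are left out, so the output will not reproduce the published margins.

```python
import math

# Log-odds coefficients copied from Table 2.
INTERCEPT = -2.998
TYPE = {1: 0.000, 2: -0.569, 3: 0.016, 4: 0.377, 5: 0.422}
EDUC = {"0-8": 0.587, "9-11": -0.016, "12": -0.016, "13-15": 0.098, "16+": 0.000}
AGE = {"<20": 0.000, "20-29": 0.055, "30-39": 0.196, "40+": 0.437}

# Hypothetical respondents (education, age, sampling weight), invented for illustration.
SAMPLE = [
    ("12", "20-29", 1.0),
    ("16+", "30-39", 1.5),
    ("0-8", "<20", 0.8),
    ("13-15", "40+", 1.2),
]


def inv_logit(x: float) -> float:
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def predictive_margin(instr_type: int, sample=SAMPLE) -> float:
    """Weighted mean predicted probability with every case set to instr_type."""
    num = den = 0.0
    for educ, age, weight in sample:
        eta = INTERCEPT + TYPE[instr_type] + EDUC[educ] + AGE[age]
        num += weight * inv_logit(eta)
        den += weight
    return num / den


margins = {t: predictive_margin(t) for t in TYPE}
```

Each respondent keeps their own education and age while the placement type is set to the same value for everyone. The resulting margins therefore differ only because of the placement coefficient, averaged over the covariate distribution of the sample.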

Figure 2: Weighted Percentage Distribution of Item Nonresponse on Q23 by State, 2004 and 2005 PRAMS Mail Respondents (who gave at least one answer of “yes” to Q22), 30 Registry Areas (Error Bars indicate 95 percent confidence intervals)

Figure 2 displays the percentages of respondents who left all items in Q23 blank by state with the states grouped by placement type. The percentage of eligible respondents who leave all of the items in Q23 unanswered is used as an imperfect indicator of whether the skip instruction was followed since a respondent could have followed the instruction and failed to provide a response for some other reason, such as answering “don’t know” or refusing to answer the items in Q23.

Comparing the effects and predictive margins in Table 2 for each of the instruction placement types with the unadjusted percentages in Table 1, we see that the Type 4 and Type 5 instructions continue to have the highest rates of nonresponse on all the Q23 items. In addition, for reasons not clear to us, the Type 2 instruction (appears below Q22 and Q23 is on the next facing page) continues to show the lowest rate of nonresponse for all Q23 items (predictive margin = 4.3 percent).

Table 2: Logistic Regression of Missing All Q23 Items, 2004 and 2005 PRAMS, English Language, Mail Respondents, 27 Registry Areas

| Variable | Coefficient | Standard Error of Coefficient | P-value | Predictive Margin | Standard Error of Predictive Margin |
|---|---|---|---|---|---|
| Intercept | -2.998 | 0.243 | < 0.001 | 0.080 | 0.002 |
| Instruction Placement Type | | | | | |
| Type 1 | 0.000 | 0.000 | – | 0.074 | 0.005 |
| Type 2 | -0.569 | 0.147 | < 0.001 | 0.043 | 0.005 |
| Type 3 | 0.016 | 0.099 | 0.872 | 0.075 | 0.005 |
| Type 4 | 0.377 | 0.096 | < 0.001 | 0.104 | 0.006 |
| Type 5 | 0.422 | 0.088 | < 0.001 | 0.108 | 0.005 |
| Education | | | | | |
| 0 to 8 years | 0.587 | 0.250 | 0.019 | 0.131 | 0.027 |
| 9 to 11 years | -0.016 | 0.132 | 0.904 | 0.076 | 0.007 |
| 12 years | -0.016 | 0.098 | 0.874 | 0.076 | 0.005 |
| 13 to 15 years | 0.098 | 0.089 | 0.271 | 0.085 | 0.005 |
| 16 or more years | 0.000 | 0.000 | – | 0.078 | 0.005 |
| Race | | | | | |
| White | 0.000 | 0.000 | – | 0.079 | 0.003 |
| Black | -0.093 | 0.107 | 0.385 | 0.073 | 0.006 |
| Asian | 0.353 | 0.146 | 0.016 | 0.109 | 0.013 |
| Other | 0.177 | 0.186 | 0.342 | 0.093 | 0.015 |
| Age | | | | | |
| Under 20 | 0.000 | 0.000 | – | 0.072 | 0.008 |
| 20 to 29 | 0.055 | 0.126 | 0.660 | 0.076 | 0.003 |
| 30 to 39 | 0.196 | 0.136 | 0.152 | 0.086 | 0.004 |
| 40 and over | 0.437 | 0.209 | 0.037 | 0.107 | 0.016 |
| Year | | | | | |
| 2004 | 0.000 | 0.000 | – | 0.075 | 0.003 |
| 2005 | 0.127 | 0.065 | 0.050 | 0.085 | 0.004 |

Respondents with the fewest years of education (0 to 8 years; predictive margin = 13.1 percent) are more likely to have skipped item Q23 than those with 16 or more years of schooling (the difference in the predictive margins between those with 0 to 8 years and those with 13 to 15 years is not statistically significant). Furthermore, respondents aged 40 and older are more likely to have skipped item Q23 than those in the youngest age group. The difference in the predictive margin between those in the 20 to 29 year old group and those 40 and older is not quite statistically significant (contrast = 3.10 percent, p = .0569). Finally, Asian respondents appear to have skipped answering item Q23 more often than White or Black respondents.[6]

Spanish Language Forms

Table 3 presents the percentages of respondents leaving all Q23 items blank by language of interview and Hispanic origin. Among respondents completing the questionnaire in Spanish, 26.1 percent left all of the Q23 items blank. This is considerably higher than the rate of missingness on Q23 for Non-Hispanics (7.9 percent) and Hispanics who completed the English language instrument (9.3 percent). The difference in the percentage leaving Q23 blank between Hispanics using the English language form and Non-Hispanics using the English language form is not statistically significant (contrast = 1.4 percent, p = 0.136).

Table 3: Percent Leaving All Q23 Items Blank by Hispanic Origin and Language, 2004 and 2005 PRAMS Mail Respondents, 27 Registry Areas [7]

| Hispanic Origin – Language | Sample size | Percent All Q23 Blank | Standard Error |
|---|---|---|---|
| Hispanic – Spanish Language | 2,799 | 25.9 | 1.6 |
| Hispanic – English Language | 3,259 | 9.3 | 0.9 |
| Non-Hispanic – English Language | 37,000 | 7.9 | 0.2 |

Table 4 shows the percentages of respondents using the Spanish language form who did not provide responses to any of the items in Q23 (among those eligible to answer Q23). These results should be viewed cautiously due to both the small numbers of cases overall and the small numbers of states in some of the groups. Only the difference in the percentages leaving all Q23 items blank between the Type 1 and Type 3 instructions is close to being statistically significant (contrast = 4.9, p = .057). Curiously, this is in the opposite direction from our prediction as well as from the results of the analyses using the English language form.

Table 4: Percent Leaving All Q23 Items Blank by Skip Instruction Position – Spanish, 2004 and 2005 PRAMS Mail Respondents, 22 Registry Areas

| Instruction Position | States | Percent All Q23 Blank | Standard Error |
|---|---|---|---|
| Type 1 – Instruction at bottom; Q23 on same page | AL, CO, FL, GA, IL, NE, OR, WA, NYC | 26.0 | 1.9 |
| Type 2 – Instruction at bottom; Q23 on next page (facing) | MD, MN, TX | 27.9 | 3.5 |
| Type 3 – Instruction at bottom; Q23 on next page (not facing) | ME, NC, NJ, NM, NY, OK, RI, SC, UT | 21.1 | 1.7 |
| Type 4 – Instruction in next column; Q23 on same page | AR | 23.4 | 6.4 |

Discussion

In order to maximize respondent participation, some questionnaire designers for mail surveys may try to reduce the respondents’ perceptions of burden by limiting the number of pages in the questionnaire but without sacrificing content. The cost implications of printing a mail survey may sometimes become a practical consideration affecting the number of pages. However, keeping the number of pages to a minimum can result in questions and instructions being positioned as close to each other as possible. While the ideal solution for any mail survey is not to present a compressed questionnaire to the respondents, a pragmatic approach may be to control the placement of the skip instructions so that they do not mislead the respondents about the intended navigational path. This is particularly relevant for mail surveys that have been fielded continuously, such as the PRAMS mail survey. In our analysis, we find that instruction placement affected item missingness. Differences between states in the placement of skip instructions were associated with respondents failing to provide answers to a multi-item subsequent question. Furthermore, such errors of omission generally varied with the severity of the interruptions in ways consistent with the notion that interruptions in the respondent’s cognitive processing of visual elements can affect item nonresponse.

The logistic regression model on nonresponse to all items in Q23 supports the notion that instruction placement affected item missingness. Education and age also had effects, but these appear only between the most extreme education groups (8 years or less versus 16 years or more) and age groups (under 20 versus 40 or more). We reason that respondents with little or no formal schooling may be less likely to successfully follow skip patterns or carry out the cognitive work needed to discern the intended navigational path. They may be candidates for telephone follow-ups. In addition, research on question design features, including order effects (Knäuper, 1999) and other elements of visual design (Stern et al., 2007), provides some evidence that differences in design features have larger effects among older respondents than younger ones. However, these findings have been based on larger differences in age groups (e.g. comparing those 60 and older with those under 60) than the ones observed in our analysis.

When data collected through the Spanish questionnaires are examined, there is higher data missingness as compared to the English questionnaires that were completed by both Hispanics and non-Hispanics. In addition, the type of skip instruction placement has more of an effect on item nonresponse in the English version than in the Spanish version. Almost 60 percent of those completing in Spanish have fewer than 12 years of education as compared to 29.2 percent of Hispanics who completed the English language form, suggesting that some of the difference in item missingness on Q23 is due to lower levels of education among Hispanic respondents who answered using the Spanish form rather than the English form. These findings may be explained by comparing the results with a PRAMS field test in December 2007.[8] The respondents who completed a Spanish questionnaire spoke little or no English. They tended to leave an item blank rather than marking the “no” response choice, resulting in data missingness. In terms of following skip instructions, with the exception of two respondents who completed more than 12 years of schooling, almost every respondent answered the questionnaire as if there were no skip instructions at all. During debriefing, those respondents explained that they did not know what the skip instructions meant. In other words, the placement of the skip instructions probably had little effect because these respondents were not familiar with this questionnaire convention to begin with. Our current analyses suggest a potential need to regularly monitor the data quality of the Spanish language forms and possibly consider using the interviewer-assisted mode exclusively for this population. In addition, although the layout of the Spanish questionnaire mirrors the English version as closely as possible, written Spanish requires more space because of the grammatical structure of the language. Teasing out the effects of possible layout differences and the characteristics of respondents who choose to answer the questionnaire in Spanish (e.g. education and form literacy) is clearly an area for future research.

There are several limitations for findings that emerge from this study. First, the analysis is restricted to those who responded to the PRAMS by mail. The telephone sample cannot be used as a basis for comparison due to the self-selecting nature of response by mail or telephone. Thus, the results from this analysis may not hold for telephone respondents if they were not offered a choice to respond by telephone and could only respond by mail.

Second, as we noted earlier, the analysis cannot distinguish between different types of item nonresponse. Because the PRAMS data are from a mail questionnaire, we cannot tell the difference between a respondent who follows the skip pattern correctly but refuses to answer an item (or series of items) and a respondent who has not followed the skip pattern correctly (unless an explicit don’t know or refuse option is offered). We note that almost 6 percent of respondents who should have answered Q23 gave responses to at least one of the items in Q23.

Finally, our findings are limited to errors of omission because the data on errors of commission need to be compiled separately from files of marginal comments. Due to the volume and format of the qualitative marginal comments, detailed analysis was beyond the scope of the study. We are not able to analyze the effects of instruction placement on errors of commission because the data entry software was programmed to blank out responses to questions that should not have been answered. Recommendations about steps taken to reduce errors of omission need to take into account that these steps may also reduce errors of commission, but they may increase such errors as well.

[1] For Louisiana and Mississippi, the PRAMS was only conducted in 2004.

[2] For Maryland, the skip instruction placement for item Q23 differed between the English (Type 1) and Spanish (Type 2) questionnaires. The variable indicating whether data was collected in the English or Spanish mail questionnaire was missing for over half of the cases. For Arkansas, the 2004 data did not show any respondents who skipped Q23 when they should not have. In fact, all respondents in Arkansas who were to have answered Q23 gave complete responses to all four items in Q23.

[3] Results using 1) weights adjusted for nonresponse and 2) weights adjusted for nonresponse and undercoverage are similar to the ones presented in this paper.

[4] The .005 level of significance is based on a Bonferroni adjustment for multiple comparisons. The adjusted critical significance level is .05/n where n is the number of pairwise comparisons.

[5] At the time these analyses were carried out, three states/registry areas, Alabama, Maryland and New York City, had not granted approval to use data from the birth certificate files so these cases were removed from our multivariate analyses.

[6] We also estimated a model which did not include state regressors. Overall, regression coefficients and significance test results were similar to those shown in the paper, with one notable exception. In the model that omits state regressors, there is no difference between the regression coefficients and predictive margins between Types 4 and 5.

[7] For mail respondents who are eligible to answer Q23, regardless of skip instruction position (2004 data for AR excluded as were all data for MD, AL and New York City, see the Data and Methods section).

[8] The field test was conducted in both English and Spanish and included ten mothers who completed a Spanish questionnaire, which was a partial PRAMS survey and in several versions (Sha, 2008).

References

1. Dillman, D. A., Redline, C. D., & Carley-Baxter, L. R. (1999). Influence of Type of Question on Skip Pattern Compliance in Self-Administered Questionnaires. Proceedings of the Section on Survey Research Methods, American Statistical Association.

2. Featherston, F., & Moy, L. (1990). Item Nonresponse in Mail Surveys. Proceedings of the Section on Survey Research Methods, American Statistical Association.

3. Graubard, B. I., & Korn, E. L. (1999). Predictive margins with survey data. Biometrics, 55, 652-659.

4. Knäuper, B. (1999). The impact of age and education on response order effects in attitude measurement. Public Opinion Quarterly, 63(3), 347-370.

5. Redline, C., Dillman, D. A., Dajani, A. N., & Scaggs, M. A. (2003). Improving Navigational Performance in U.S. Census 2000 by Altering the Visually Administered Languages of Branching Instructions. Journal of Official Statistics, 19(4), 403-419.

6. RTI International. (2004). SUDAAN® language manual, Release 9.0. Research Triangle Park, NC: RTI International.

7. Sha, M. (2008). Phase VI Field Testing of Spanish Questionnaires Summary Findings. Unpublished manuscript.

8. Stern, M. J., Dillman, D. A., & Smyth, J. D. (2007). Visual design, order effects and respondent characteristics in a self-administered survey. Survey Methods Research, 1(3), 121-138.

9. Turner, C. F., Lessler, J. T., George, B. J., Hubbard, M. L., & Witt, M. B. (1992). Effects of Mode of Administration and Wording on Data Quality. In C. F. Turner, J. T. Lessler & J. C. Gfroerer (Eds.), Survey Measurement of Drug Use: Methodological Studies (DHHS Publication No. ADM 92-1929, pp. 221-244). Rockville, MD: National Institute on Drug Abuse.