Effects of a sequential mixed-mode design on participation, contact and sample composition – Results of the pilot study “IMOA – Improving Health Monitoring in Old Age”

Gaertner B. & Lüdtke D. et al. (2019). Effects of a sequential mixed-mode design on participation, contact and sample composition – Results of the pilot study “IMOA – Improving Health Monitoring in Old Age”. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=10841

© the authors 2019. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Existing health survey data on individuals aged 65 years or older are limited because the oldest old and physically or cognitively impaired individuals are often excluded. This study aimed to assess the effects of a sequential mixed-mode design on (1) contact and response rates, (2) sample composition and (3) non-response bias. A register-based random sample of 2,000 individuals aged 65 years or older was initially contacted by mail and asked to answer a health questionnaire. Random subgroups of initial non-responders were further contacted by telephone or home visit. Participation by interview or by proxy was possible. After postal contact only, the contact and response rates were 51.7% and 37.8%, respectively. After all contact steps, the contact and response rates increased to 71.1% and 44.6%, respectively. Including the participants recruited in these later steps changed the sample composition with regard to sociodemographic characteristics (e.g., older individuals) and health characteristics (e.g., worse self-rated health, more functional impairments). Ill health was the second most frequent reason for non-participation. Personal contact modes are important for increasing contact and response rates in population-based health studies and for including hard-to-reach groups such as the oldest or physically impaired individuals. However, non-response bias persisted.

Keywords

contact mode, data collection mode, non-response bias, older individuals, reasons for non-participation, sequential mixed-mode design

Acknowledgement

The authors wish to thank Ralf Block, Hans Peter Dircks, Volker Heß, Christian Haatz and Monika Buske for data collection; Barbara Wess, Julian Graef and Stefan Meisegeier for programming the participant management software; Sophie Schertell for support with data entry and data cleansing; Patrick Schmich for ongoing advice and support for the project; Dr Ronny Kuhnert for statistical advice; all other colleagues at the Robert Koch Institute who supported our study; and the study participants as well as their proxies for taking the time to participate. This work was supported by the Robert Bosch Foundation (Grant Number: 11.5.G410.0001.0). The contents of this publication are solely the responsibility of the authors.

Introduction

In Germany, 21% of the population is currently 65 years or older, and 6% is 80 years or older [1]. The proportion aged 65 years or older is projected to rise to up to 30% within the next two decades [2]. Mapping the health status of the older and oldest population is therefore gaining importance for health policy planning, which creates an urgent need for valid health data on this population.

However, nation-wide data on the health status and health needs of older adults are scarce for several reasons. The first is the systematic exclusion of older or functionally impaired individuals from most population-based research studies. For example, age limits for study participation are common in general-population surveys [3-7]. In addition, the exclusion criteria of health studies often include health-related problems such as mobility problems, institutionalization or cognitive impairment [8-10]. Low response rates are a second reason for data gaps in this group [e.g., 11, 12, 13]. Methodological studies have observed this phenomenon for all data collection modes, such as mailed questionnaires [14, 15], face-to-face interviews [14] and telephone interviews [16]. Low response rates in this group have been attributed to difficulties in gaining access (i.e., non-contactability) rather than to refusal [17]. In addition, increasing rates of physical and/or mental disability may hamper study participation in this age group. For example, a 2005 German household survey of adults 65 years or older found that 1.5% of persons under 70 years of age and 3.3% of persons aged 70 years or older were not able to participate [18].

Although low response rates do not necessarily imply poor data quality, the underrepresentation of older age groups in health surveys can have severe consequences for data quality [17]. Non-participation for health-related reasons (e.g., physical or mental disabilities, hospitalisation during the field period) biases survey estimates of health-related outcomes [19, 20]. In general, higher morbidity and mortality rates are reported for non-participants than for participants [15, 20-26].

Health surveys including older persons should therefore avoid systematic exclusion criteria and implement strategies to minimise non-response bias. Investing special effort and extra cost to enhance response rates is particularly worthwhile if it reduces non-response due to physical or mental disabilities. For this purpose, and as shown in previous work by our group and by other researchers [20, 22, 27, 28], more insight into the reasons for survey refusal in this age group is needed. Moreover, the suitability of different contact and data collection modes for this age group should be examined. Results from population-based studies of older individuals in the US, England, Ireland and Japan have shown that home visits help to include older or disabled respondents in population-based health research [29-31]. However, home visits as a single mode are very cost-intensive, whereas mixed-mode designs provide “an opportunity to compensate for the weaknesses of each individual mode at affordable cost” [32, p. 235]. The term “mixed-mode design” refers to mixtures of data collection modes, mixtures of contact modes and combinations of both [32-34]. Mixed-mode designs are assumed to help minimise coverage error and non-response error and to enhance the quality of answers [32-34]. One specific type is the sequential mixed-mode approach, in which data collection starts with an inexpensive mode for the whole sample and is followed by a more expensive mode for the initial non-responders [32]. A sequential mixed-mode design may substantially improve response rates while reducing costs [35]. Indeed, offering more than one mode of data collection also seems to increase the probability of response among older individuals [36-39].

A sequential mixed-mode design using different contact modes and offering different data collection modes could therefore help to identify the preferences of this target group. Moreover, offering proxy interviews could help to include physically or mentally impaired individuals [19, 40].

The Health Monitoring conducted at the Robert Koch Institute used telephone interviews in its first surveys and switched to online and, in particular, postal questionnaires in recent surveys [41]. Building on this survey design, the present study aimed to pilot a sequential mixed-mode design with different contact and data collection modes in population health surveys of people aged 65 years or older living in private households or in nursing homes in Germany. We specifically asked: (1) Can contact, cooperation and response rates be improved compared with postal contact only? (2) What proportion of participants choose the mode of contact as the mode of data collection, and what proportion switch to the alternative data collection modes offered? (3) Do late participants included via subsequent recruitment efforts change the sample composition? (4) What sources of non-response bias remain?

Methods

Study design

The present pilot study was conducted between September 2017 and April 2018 at the Robert Koch Institute in Berlin, Germany, as part of the “IMOA – Improving Health Monitoring in Old Age” project, funded by the Robert Bosch Foundation (Grant Number: 11.5.G410.0001.0). The objective of this project was to develop a conceptual framework for a public health monitoring of the population aged 65 years or older.

The study was approved by the ethics committee of the Berlin Chamber of Physicians (German: Berliner Ärztekammer, Eth-22/17) and was conducted in compliance with data protection and privacy regulations, as required by the Federal Commissioner for Data Protection and Freedom of Information. Our data protection concept covered the use of register-based information, the linkage with publicly available data for non-responder analyses, and the search for telephone numbers to contact individuals by telephone. Written informed consent was obtained from all participants who completed a questionnaire or had a face-to-face interview. Oral consent was obtained from participants who had a telephone interview. For proxy participation, written informed consent was required either from the invited individual or from their legal representative.

Sampling procedures

A two-stage sampling approach was used, comprising (1) the selection of primary sampling units (PSUs) and (2) the drawing of random samples from the population registers within the PSUs. Due to limited resources, two PSUs close to the Robert Koch Institute site were chosen for the recruitment of study participants. One PSU was urban (≥100,000 inhabitants), the other rural (<10,000 inhabitants). More details on the selection of PSUs are provided elsewhere [42]. On September 11, 2017, a random sample of 1,000 individuals aged 65 years or older was drawn from the population register of each of the two municipalities (within each PSU, proportionally to the general population), yielding a total random sample of 2,000 individuals. Inclusion criteria were permanent residence in the sampled community, living in a private household or nursing home, and age 65 years or older. Individuals who had died or moved outside the study area before the field period started, as well as those with insufficient German language skills, were considered ineligible for study participation.
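The second sampling stage can be pictured as an independent simple random sample without replacement from each municipal register. The following is only a minimal sketch: the record layout (an `age` key), the function names `draw_psu_sample` and `draw_total_sample` and the use of Python's `random.sample` are our assumptions, and the proportional allocation mentioned above is not modelled.

```python
import random


def draw_psu_sample(register, n=1000, min_age=65, seed=None):
    """Simple random sample (without replacement) of n persons aged >= min_age.

    `register` is a hypothetical list of dicts with an 'age' key (age on the
    sampling date). Eligibility checks (death, relocation, language) happen later.
    """
    rng = random.Random(seed)
    frame = [person for person in register if person["age"] >= min_age]
    if len(frame) < n:
        raise ValueError("sampling frame smaller than requested sample size")
    return rng.sample(frame, n)


def draw_total_sample(registers, n_per_psu=1000, seed=None):
    """Second sampling stage: one independent draw per selected PSU."""
    rng = random.Random(seed)
    sample = []
    for psu, register in registers.items():
        for person in draw_psu_sample(register, n_per_psu, seed=rng.randrange(2**32)):
            sample.append({**person, "psu": psu})  # tag each person with their PSU
    return sample
```

With two registers this yields the 2 × 1,000 design described above; the PSUs themselves were chosen purposively, so no code is needed for the first stage.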

Sequential mixed-mode design

Contact of sampled individuals and data collection followed the three steps of a sequential mixed-mode design (Figure 1) and were conducted between October and December 2017 by experienced research assistants. In recruitment step 1, all 2,000 sampled individuals were initially contacted by postal mail. Although prior observational studies and the very few experimental studies on the effectiveness of different contact modes among individuals 65 years or older suggest that contacting older individuals personally, and especially face-to-face, might be promising [43-46], we decided to start with postal contact because it saves resources and served as the first contact in past waves of the Health Monitoring at the Robert Koch Institute, which is based on random samples from population registers [6, 47]. We therefore aimed to evaluate the benefits of additional or alternative contact modes for survey data quality.

A personalised invitation letter (step 1a) included a study description, a health questionnaire, a consent form, a prepaid envelope for returning the questionnaire and an unconditional incentive (a pack of flower seeds). The study description stated that address data would be linked to public sources to obtain a telephone number and aggregated socioeconomic data. In addition, the number of a toll-free telephone hotline was provided, and individuals were informed that they could call from Monday to Friday between 9 a.m. and 12 noon and between 2 and 4 p.m. for further information, to refuse survey participation or to express specific preferences or needs for participation. Additional offers facilitating participation were described in the letter, including appointments for a telephone or face-to-face interview with or without further assistance as well as proxy-only interviews/questionnaires. The questionnaire/interview included a total of 54 questions covering demographics, physical and mental health, difficulties in activities of daily living, falls, health care use, loneliness and social participation. After 16 days, initial non-responders received a personalised reminder letter that also announced possible further contacts by telephone or home visit if they did not respond to the reminder (step 1b).

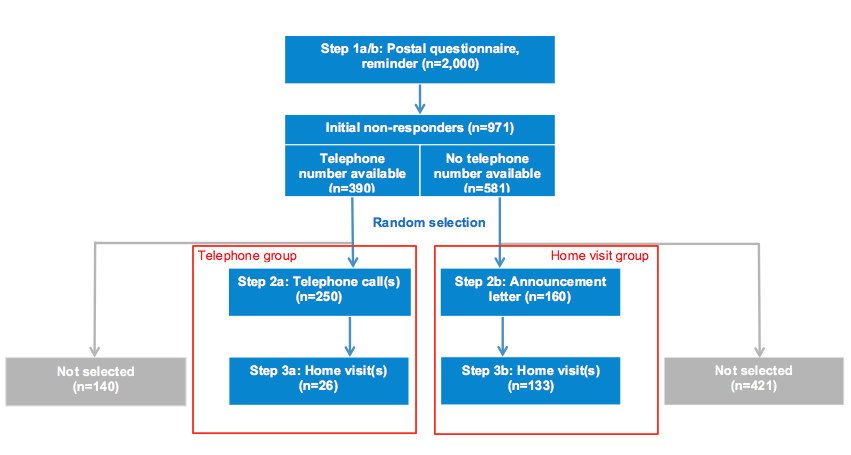

Figure 1. Study flow and the three steps of the sequential mixed-mode design

Two weeks later, remaining initial non-responders were either eligible for telephone contact if a telephone number was provided by a commercial provider (telephone group) or face-to-face contact if no telephone number was available (home visit group; Figure 1). Due to limited resources, both staff-intensive recruitment efforts were applied only to random subsamples of the telephone and the home visit groups, respectively. However, initial non-responders not sampled for further contact attempts could still send back the questionnaire or call the telephone hotline. Indeed, some late responses of these non-selected individuals were received after further contact attempts within random samples of initial non-responders had started.
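The allocation rule for initial non-responders, combined with the per-PSU random subsampling reported in the Statistical analyses section (125 per PSU in the telephone group, 80 per PSU in the home visit group), might be sketched as follows. The function and field names (`has_phone`, `psu`) are hypothetical; the study's participant management software is not described in the article.

```python
import random
from collections import defaultdict


def allocate_nonresponders(nonresponders, n_telephone=125, n_home_visit=80, seed=None):
    """Split initial non-responders into telephone / home-visit strata per PSU
    and draw a random subsample from each stratum for further contact attempts.

    Each person is a hypothetical dict with 'psu' and 'has_phone' keys.
    Returns (strata, selected), both keyed by (psu, group).
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in nonresponders:
        # telephone group if the commercial provider found a number, else home visit group
        group = "telephone" if person["has_phone"] else "home_visit"
        strata[(person["psu"], group)].append(person)

    selected = {}
    for (psu, group), members in strata.items():
        k = n_telephone if group == "telephone" else n_home_visit
        selected[(psu, group)] = rng.sample(members, min(k, len(members)))
    return dict(strata), selected
```

Non-selected persons stay in `strata` but outside `selected`, which mirrors the text: they received no further contact attempts but could still respond on their own.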

Among persons sampled for the telephone group, up to ten telephone contact attempts were made on different days from Monday to Friday at varying times (step 2a). If no telephone contact could be established, two further attempts were made by unannounced home visits (step 3a; Figure 1). Persons sampled for the home visit group received a letter announcing a home visit by trained Robert Koch Institute (RKI) staff (step 2b). This letter specified that the home visit(s) would take place within 16 days and included a photograph of the research assistants, one of whom would carry out the visit. The team of RKI staff members consisted of four experienced persons, all male and aged 40 years or older. For identification, research assistants carried an official RKI employee identification card. Up to two contact attempts by home visit were undertaken among individuals who did not react to the announcement letter (step 3b). Throughout these recruitment steps, individuals could still express specific needs for participation, e.g. individuals in the telephone group could send back the completed health questionnaire. All questionnaires returned until the end of the field period (i.e., February 2018) were taken into account. Telephone and face-to-face interviews were possible until December 20, 2017.
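The escalation rule for the telephone group (up to ten calls, then up to two unannounced home visits) can be written as a small function. This is an illustrative sketch only: attempt outcomes are modelled as hypothetical lists of booleans, and the name `first_successful_contact` is ours.

```python
def first_successful_contact(call_outcomes, visit_outcomes, max_calls=10, max_visits=2):
    """Telephone-group protocol: up to `max_calls` calls (step 2a), then up to
    `max_visits` unannounced home visits (step 3a) if nobody was reached.

    `call_outcomes` / `visit_outcomes` are hypothetical lists of booleans, one per
    attempt, True if contact was established. Returns (step, attempt_number)
    or None for a final non-contact.
    """
    for attempt, reached in enumerate(call_outcomes[:max_calls], start=1):
        if reached:
            return ("2a", attempt)
    # only persons never reached by telephone escalate to home visits
    for attempt, reached in enumerate(visit_outcomes[:max_visits], start=1):
        if reached:
            return ("3a", attempt)
    return None
```

For example, `first_successful_contact([False] * 10, [False, True])` returns `("3a", 2)`: contact was first made on the second home visit.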

Individuals who participated after having been contacted by post only (step 1) were considered “early participants”. Participants who were recruited in subsequent recruitment efforts (steps 2 and 3) or who were initial non-responders not selected for the telephone or home visit group were defined as “late participants”.

Non-responder questionnaire

To further characterise non-responders, an additional short non-responder questionnaire was used to obtain at least minimal information on this subgroup of the sample. The non-responder questionnaire comprised nine questions on health and demographics, in line with the main study questionnaire, as well as reasons for non-participation. Individuals who refused study participation by phone or during face-to-face contact were asked to answer this non-responder questionnaire. Moreover, after the end of the field period (i.e., February 2018), the non-responder questionnaire was sent to non-contacts, to individuals without a final result regarding study participation and to individuals whose invitation letters had been returned as undeliverable. In addition, individuals who declined to complete the non-responder questionnaire by telephone or during home visits were at least asked to state their reasons for non-participation.

Measures

Contact, cooperation and response rates

Final disposition codes were assigned according to the standards of the American Association for Public Opinion Research (AAPOR) [48]. The final disposition code “interview” was assigned to all complete questionnaires/interviews (at least 80% of the applicable questions answered) and to partial questionnaires/interviews (at least 50% of the applicable questions answered). A case was defined as an “eligible non-interview” if the sample person refused a questionnaire/interview, broke off an interview, moved outside the PSU or died during the field period, did not provide a decision regarding participation or refusal during a personal contact, or did not provide written informed consent, or if the questionnaire was lost. The final disposition code “unknown eligibility, non-interview” was assigned to non-contacts, i.e. if no mailed questionnaire was ever returned, no contact could be established by phone or home visit, or nothing was known about the sample person or the address. The final disposition code “not eligible” was assigned to persons who met the exclusion criteria, i.e. individuals who had died or moved outside the study area before the field period started and those with insufficient German language skills.
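The assignment rules above can be condensed into a decision function. This is a simplified sketch, not the study's coding procedure: the dictionary keys are hypothetical, and treating a returned questionnaire with fewer than 50% of applicable questions answered as an “eligible non-interview” (break-off) is our assumption.

```python
def final_disposition(case):
    """Assign a simplified AAPOR-style final disposition code to one case.

    `case` is a hypothetical dict:
      'ineligible'                 -- died/moved before the field period or
                                      insufficient German language skills
      'answered_share'             -- share of applicable questions answered,
                                      or None if no questionnaire/interview exists
      'known_eligible_nonresponse' -- refusal, break-off, death or move during
                                      the field period, missing consent, lost form
    """
    if case.get("ineligible"):
        return "not eligible"
    share = case.get("answered_share")
    if share is not None and share >= 0.8:
        return "interview (complete)"
    if share is not None and share >= 0.5:
        return "interview (partial)"
    if case.get("known_eligible_nonresponse") or share is not None:
        # assumption: a returned form below the 50% threshold counts as a break-off
        return "eligible non-interview"
    return "unknown eligibility, non-interview"
```

Both interview variants count as “interview” in the rate formulas that follow.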

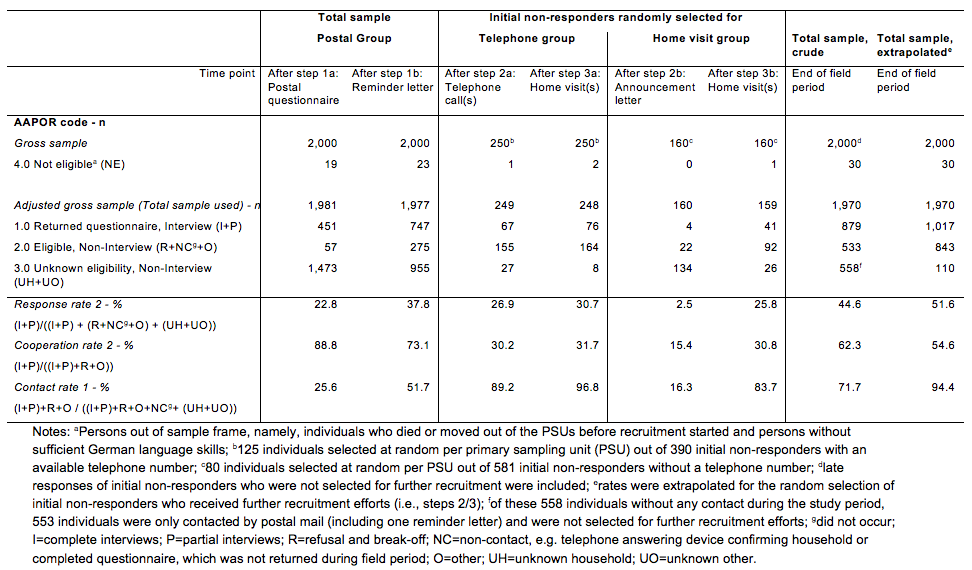

Contact, cooperation and response rates were also calculated according to AAPOR standards [48]. “Not eligible” cases were excluded from these calculations, so the total sample used was the sum of all cases with the final disposition code “interview”, “eligible non-interview” or “unknown eligibility, non-interview”. Because we were interested in the number of individuals who were willing to provide any information at all, we chose formulas that count partial as well as complete questionnaires/interviews. We therefore calculated contact rate 1 (i.e., the number of all “interview” and “eligible non-interview” cases divided by the total sample used), cooperation rate 2 (i.e., the number of all “interview” cases divided by the number of “interview” plus “eligible non-interview” cases) and response rate 2 (i.e., the number of all “interview” cases divided by the total sample used). Formulas are shown in Table 2.
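Because all three rates are ratios of the three disposition groups, they can be sketched in a few lines. The function name `survey_rates` is ours; the worked example uses the step 1a counts reported in the Results (451 interviews and 508 contacted cases in an adjusted gross sample of 1,981).

```python
def survey_rates(interviews, eligible_non_interviews, unknown_eligibility):
    """Contact rate 1, cooperation rate 2 and response rate 2 as defined above.

    `interviews` includes complete and partial questionnaires/interviews;
    'not eligible' cases must already be excluded.
    """
    total_used = interviews + eligible_non_interviews + unknown_eligibility
    contacted = interviews + eligible_non_interviews
    return {
        "contact_rate_1": contacted / total_used,
        "cooperation_rate_2": interviews / contacted,
        "response_rate_2": interviews / total_used,
    }


# Step 1a: 451 interviews, 508 - 451 = 57 eligible non-interviews,
# 1,981 - 508 = 1,473 cases of unknown eligibility
rates = survey_rates(451, 57, 1473)
print({name: round(100 * value, 1) for name, value in rates.items()})
# {'contact_rate_1': 25.6, 'cooperation_rate_2': 88.8, 'response_rate_2': 22.8}
```

Note that response rate 2 always equals contact rate 1 multiplied by cooperation rate 2, which offers a quick consistency check on reported figures.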

Descriptives of the study population based on information obtained from population registers and the use of external data sources

Information obtained from the population registers included sex, date of birth, postal address, marital status, citizenship and, if applicable, the existence of an information release ban. An information release ban should be assigned to all institutionalized persons in Germany according to § 52 Federal Act on Registration (German: bedingter Sperrvermerk nach § 52 Bundesmeldegesetz) [49]. Marital status was categorised as being married or living in a registered partnership (yes/no). Citizenship was categorised into German and non-German.

To identify nursing home residents in the sample, postal addresses were compared with the addresses of nursing homes within the PSUs, obtained either from our own internet search or from a commercial provider [42]. In addition, we assumed that an information release ban applied exclusively to nursing home residents in our sample of individuals 65 years or older. Individuals identified as nursing home residents by at least one of these strategies were classified as nursing home residents (yes/no).
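The “at least one source” rule is a logical OR over address matches and the release ban. A minimal sketch, assuming hypothetical `address` and `release_ban` fields and a deliberately crude address normalisation (real address matching would need more robust standardisation):

```python
def normalise_address(address):
    """Case- and whitespace-insensitive address key (a simplification)."""
    return " ".join(address.lower().split())


def is_nursing_home_resident(person, *nursing_home_address_lists):
    """Flag a person as a nursing home resident if ANY source identifies them.

    `person` is a hypothetical dict with 'address' and 'release_ban' keys;
    each address list comes from one source (e.g. own web search, commercial provider).
    """
    known = {normalise_address(a) for source in nursing_home_address_lists for a in source}
    return normalise_address(person["address"]) in known or bool(person["release_ban"])
```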

One commercial provider (“Deutsche Post Direkt GmbH”) was commissioned to check the postal addresses and surnames of all individuals after sample drawing in order to identify telephone numbers for potential telephone contact during the data collection period. This information was coded as the availability of a telephone number (yes/no).

Information on purchasing power by postal address was provided by “Growth from Knowledge” (GfK) and was defined as the sum of the net income of the population living in the respective street segment. Net income included income from employment and self-employment, investments and state transfer payments (e.g., unemployment benefit, child benefit and pensions). We used the standardised purchasing power index, whose German mean is 100; all values below this mean were defined as low purchasing power.
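The dichotomisation amounts to standardising against the national mean and applying a cut-off at 100. A minimal sketch, assuming per-capita segment values; GfK's exact standardisation procedure is not described in the article, and both function names are hypothetical.

```python
def purchasing_power_index(segment_value, national_mean):
    """Standardise a street-segment purchasing-power value so the German mean = 100."""
    return 100.0 * segment_value / national_mean


def low_purchasing_power(index):
    """Values below the national mean (index < 100) count as low purchasing power."""
    return index < 100.0
```

A segment exactly at the national mean (index 100) is therefore not classified as low.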

Self- or proxy-reported information on sociodemographic and health characteristics and reasons for non-participation

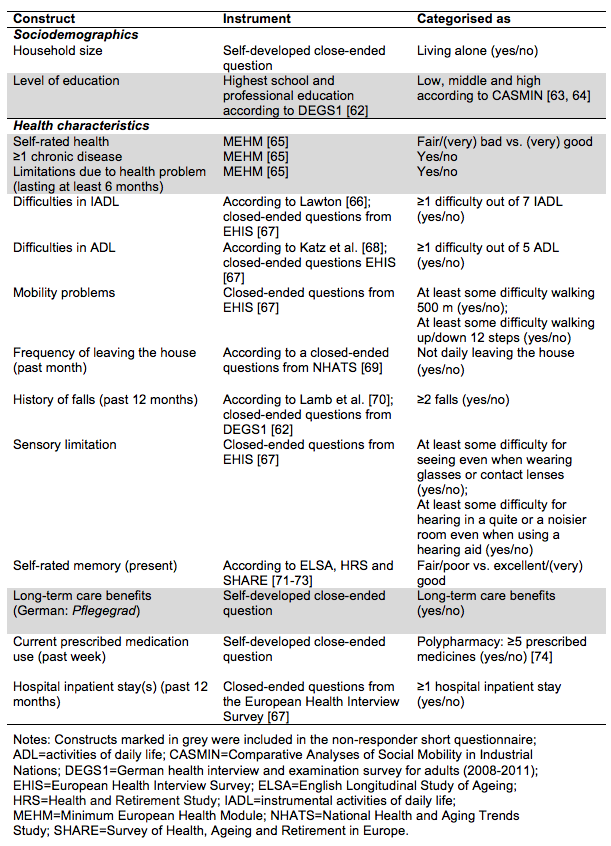

Table 1 provides an overview of all sociodemographic and health characteristics assessed by self- or proxy report via questionnaire or interview that were used in the analyses. A full list of all measures and questionnaires used in IMOA can be obtained from the authors on request.

Proxy participation (yes/no) was defined if a proxy questionnaire/interview was completed or if proxy participation was indicated in the questionnaire.

Table 1. Self- or proxy reported information on sociodemographic and health characteristics

The non-responder short questionnaire included the constructs marked in Table 1. We used the term “soft refuser” [50] for all sample persons who did not participate in the main study but completed the non-responder questionnaire. Persons who did not return the non-responder questionnaire, refused participation in both the main study and the non-responder short questionnaire, or could not be contacted at any time were defined as “final non-responders”.

Finally, seven reasons for non-participation could be chosen in the non-responder short questionnaire or stated during a refusal by personal contact (multiple answers possible): absence (e.g., because of longer holidays, hospitalisation or rehabilitation), too ill, lack of time, lack of interest in the study, providing nursing care for family members, concerns about the study, and other reasons. Other reasons could be specified in open-ended fields. Reviewing these open-ended responses showed that statements such as “not being able to participate”, “cannot participate” or “cannot provide information” were frequently mentioned. Hence, “not being able” was defined as a separate reason for non-participation.
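The authors reviewed the open-ended answers manually. Purely as an illustration of the resulting recode, the sketch below uses a hypothetical keyword list to move “other” answers that express inability into the new category and then tallies multiple-choice reasons. The phrase list, the reason labels and the choice to drop “other” once recoded are all our assumptions.

```python
from collections import Counter

PRESET_REASONS = {
    "absence", "too ill", "lack of time", "lack of interest",
    "providing nursing care", "concerns about the study", "other",
}

# Hypothetical phrases standing in for the manual review of open-ended answers
NOT_ABLE_PHRASES = ("not being able", "cannot participate", "cannot provide information", "not able")


def recode_reasons(ticked, open_text=""):
    """Return the set of reasons for one person (multiple answers allowed).

    'other' answers whose free text expresses inability are recoded to 'not being able'.
    """
    unknown = set(ticked) - PRESET_REASONS
    if unknown:
        raise ValueError(f"unexpected reason codes: {unknown}")
    reasons = set(ticked)
    text = open_text.lower()
    if "other" in reasons and any(phrase in text for phrase in NOT_ABLE_PHRASES):
        reasons.discard("other")
        reasons.add("not being able")
    return reasons


def tally(responses):
    """Count how often each reason was given; `responses` holds (ticked, open_text) pairs."""
    return Counter(r for ticked, text in responses for r in recode_reasons(ticked, text))
```

Because answers are multiple choice, the counts can sum to more than the number of non-participants.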

Statistical analyses

Descriptive statistics were calculated to describe the total sample and different subgroups: (a) individuals reached by the different sequential steps, (b) early vs. late participants, (c) participants vs. non-participants, and (d) soft refusers vs. final non-responders. We report proportions with 95% confidence intervals (CIs) to allow comparisons between subgroups. Non-overlapping CIs were interpreted as significant differences between subgroups. Descriptive statistics were also used to summarise the reasons for non-participation.
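The article does not state which CI method was used, so the sketch below assumes the Wilson score interval for a single proportion, together with the non-overlap check used as the comparison criterion. Function names are ours.

```python
import math

Z_95 = 1.959963984540054  # standard normal 97.5% quantile


def wilson_ci(successes, n, z=Z_95):
    """95% Wilson score interval for a proportion (method assumed, not taken from the study)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


def cis_overlap(ci_a, ci_b):
    """True if two intervals share at least one point."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]
```

Non-overlap of two 95% CIs is a conservative criterion: intervals can overlap even when a direct test of the difference would be significant at the 5% level.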

We analysed the effects of the sequential contact steps on sample composition by calculating the deviation of each of the six subsamples of participants from the gross sample. For each of the eight register-based sociodemographic characteristics, we calculated the absolute deviation between the subsample and the gross sample; these absolute deviations were then summed and divided by the number of variables (n=8).
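The deviation measure is a mean absolute difference in percentage points across characteristics. A minimal sketch; the function name and the toy values in the usage line are ours, not study data.

```python
def deviation_from_gross(subsample_pct, gross_pct):
    """Mean absolute deviation (in percentage points) of a participant subsample
    from the gross sample across register-based characteristics."""
    if subsample_pct.keys() != gross_pct.keys():
        raise ValueError("both samples need the same characteristics")
    total = sum(abs(subsample_pct[k] - gross_pct[k]) for k in gross_pct)
    return total / len(gross_pct)


# Toy values (not study data): |46 - 50| and |22 - 20| average to 3.0
print(deviation_from_gross({"female": 46.0, "aged_80_plus": 22.0},
                           {"female": 50.0, "aged_80_plus": 20.0}))
```

Because signs are discarded, a subsample can move closer to the gross sample on one variable and further away on another while keeping the same score.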

The impact of sociodemographic characteristics (age, sex, marital status, citizenship, nursing home resident, rural PSU, availability of telephone number, low purchasing power) on the chance of being a participant (complement: non-participant) was estimated by a binary logistic regression model [51]. Moreover, the impact of sociodemographic characteristics and basic health characteristics on the chance of being a participant (complement: soft refuser) was estimated by a second binary logistic regression model. Odds ratios (OR) are reported and p-values <.05 were considered statistically significant.
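The models were fitted in Stata, where the `logistic` command reports odds ratios directly. For readers reproducing the logic elsewhere, here is a self-contained Newton-Raphson sketch in NumPy; it is not the authors' code, and the coding (1 = participant, dummy-coded covariates plus an intercept column) is our assumption.

```python
import math

import numpy as np


def fit_logit(X, y, max_iter=50, tol=1e-10):
    """Binary logistic regression by Newton-Raphson.

    X: (n, k) design matrix including a column of ones for the intercept.
    y: 0/1 outcome (1 = participant).
    Returns coefficients, odds ratios exp(beta) and two-sided Wald p-values.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        hessian = X.T @ (X * (p * (1.0 - p))[:, None])  # Fisher information
        step = np.linalg.solve(hessian, X.T @ (y - p))  # Newton step on the score
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    cov = np.linalg.inv(X.T @ (X * (p * (1.0 - p))[:, None]))
    z = beta / np.sqrt(np.diag(cov))
    p_values = np.array([math.erfc(abs(v) / math.sqrt(2.0)) for v in z])
    return beta, np.exp(beta), p_values
```

An OR above 1 (e.g. for being married) means higher odds of participating than the reference group; an OR below 1 (e.g. for nursing home residence) means lower odds.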

Further recruitment of initial non-responders was carried out only among random subgroups, i.e. 125 individuals of each PSU with an available telephone number were randomly selected for the telephone group (total: 250 out of 390), and 80 individuals of each PSU without an available telephone number were randomly selected for the home visit group (total: 160 out of 581). A total of 561 initial non-responders were therefore not selected for further recruitment efforts. An extrapolation factor was calculated to obtain estimates of what could have been expected if the further recruitment steps had been applied to all initial non-responders. The extrapolation factor accounted for the disproportionate selection probability depending on PSU and availability of a telephone number, i.e., it was the inverse of an individual's inclusion probability. All statistical analyses were conducted using Stata/SE 15.1 [52].
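The extrapolation factor can be sketched as a design weight: the stratum size divided by the number drawn (the inverse inclusion probability) for selected initial non-responders, and 1 for cases resolved by postal contact. Giving non-selected initial non-responders a weight of 0, because the selected members of their stratum represent them, is our assumption; the article does not spell out how their late responses were handled. All field names are hypothetical.

```python
from collections import Counter


def extrapolation_weights(cases):
    """Weights that scale the randomly selected initial non-responders up to
    their stratum (PSU x telephone availability).

    Each case is a hypothetical dict with:
      'initial_nonresponder' -- still no decision after the reminder letter
      'stratum'              -- e.g. ('urban', 'telephone')
      'selected'             -- drawn for further contact attempts
    """
    pool = Counter(c["stratum"] for c in cases if c["initial_nonresponder"])
    drawn = Counter(c["stratum"] for c in cases if c["initial_nonresponder"] and c["selected"])
    weights = []
    for c in cases:
        if not c["initial_nonresponder"]:
            weights.append(1.0)  # resolved by postal contact (step 1)
        elif c["selected"]:
            weights.append(pool[c["stratum"]] / drawn[c["stratum"]])  # 1 / inclusion probability
        else:
            weights.append(0.0)  # assumption: represented by selected stratum members
    return weights


def weighted_share(flags, weights):
    """Weighted proportion, e.g. an extrapolated response rate."""
    return sum(w for flag, w in zip(flags, weights) if flag) / sum(weights)
```

By construction the weights sum to the number of cases, so extrapolated rates keep the same denominator as the crude rates.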

Results

Sample characteristics and study flow

Of the total gross sample (n=2,000), 57.1% were female, 30.6% were 80 years of age or older, 57.4% were married or lived in a registered partnership, 4.7% had a non-German citizenship, 5.2% lived in a nursing home, 50.0% lived in the rural PSU, 65.0% lived in an area with low purchasing power, and for 40.9% a telephone number could be identified by the commercial provider.

Of the total gross sample (n=2,000), 1,473 persons had not provided a final decision regarding study participation after the initial postal contact (i.e., invitation letter plus postal questionnaire, step 1a), and reminder letters were sent to them (step 1b). After that step, a total of 971 individuals had not responded (Figure 1). For 390 of these initial non-responders, a telephone number was available; of those, 125 individuals of each PSU were randomly selected for the telephone group. After ten telephone contact attempts, 26 individuals had still not been reached, so two contact attempts by home visit were conducted for them. Of the 581 initial non-responders without an available telephone number, 80 individuals of each PSU were randomly selected for the home visit group and received a postal announcement of the home visit. In response to this letter, 27 individuals provided a final decision regarding study participation. For the remaining 133 individuals, up to two contact attempts by home visit were undertaken. Of the 561 initial non-responders who were not randomly selected for either the telephone or the home visit group, 30 individuals provided a final decision regarding study participation by the end of the field period.

Survey design effects on contact, cooperation and response

After recruitment step 1a, 19 individuals of the total gross sample (n=2,000) were classified as ineligible for study participation and were excluded from the calculation of contact, cooperation and response rates (Table 2). Of the remaining adjusted gross sample after step 1a (n=1,981), 508 individuals were successfully contacted, i.e. they had received the final disposition code “interview” or “eligible non-interview”. The initial contact rate was therefore 25.6% (508 out of 1,981). As 451 of these 508 contacted individuals were participants, the cooperation rate was 88.8%. The initial response rate, based on the adjusted gross sample, was 22.8% (451 out of 1,981). After the reminder letter (step 1b), a contact rate of 51.7%, a cooperation rate of 73.1% and a response rate of 37.8% were achieved. In the random subsamples allocated for further contact attempts, high contact rates were achieved by the end of the field period (96.8% in the telephone group after step 3a vs. 83.7% in the home visit group after step 3b). In the telephone group, 10.8% could not be contacted by telephone. In the home visit group, 16.3% responded to the letter announcing a home visit. Cooperation rates for these further contact attempts ranged between 15.4% (after step 2b) and 31.7% (after step 3a) and were lower than after step 1a. In these random groups, response rates of 30.7% and 25.8% were achieved for the telephone group and the home visit group, respectively.

After all steps had been completed, a total final response rate of 44.6%, a cooperation rate of 62.3% and a contact rate of 71.7% were achieved. Taking the random selection process into account, extrapolation resulted in a response rate of 51.6%, a cooperation rate of 54.6% and a contact rate of 94.4%.

Table 2. Final disposition codes according to American Association for Public Opinion Research (AAPOR) standards for mixed-mode surveys and response, cooperation and contact rates stratified by the steps of the sequential mixed-mode design and total

Specific offers facilitating participation: Choice of data collection mode, proxy participation and informed consent by legal representatives

Among study participants, the data collection mode was closely related to the contact mode (Table 3). Thus, 96.8% of early participants, who were contacted by post only, participated by returning the questionnaire. Likewise, the majority (61.8%) of participants in the telephone group were interviewed by telephone, and 53.7% of participants in the home visit group were interviewed face-to-face. However, the offer to choose a data collection mode different from the contact mode was used in all participant groups.

Of all participants, 88.2% participated by questionnaire, 8.2% by telephone interview and 3.6% by face-to-face interview. If rates were extrapolated for the random selection, 79.3% of the participants would have participated by postal questionnaire, 11.5% by telephone interview and 9.3% by face-to-face interview.

Proxy participation and informed consent provided by legal representatives were mainly obtained through face-to-face contact among late participants (i.e., steps 2/3b). In total, 3.8% of participants took part by proxy, and for 1.4% consent was provided by a legal representative. Extrapolated rates were slightly higher (proxy participation: 3.9%; consent by legal representative: 2.0%).

In total, at least one of the three specific offers (i.e., choice of data collection mode, proxy participation or informed consent by a legal representative) was used most often by participants in the home visit group (43.9%) and the telephone group (36.8%), compared with participants contacted by post only (6.2%). Accordingly, using ≥1 specific offer increased the response rate by 11.3% in the telephone and home visit groups, compared with an increase of 2.3% in the postal group. Using ≥1 specific offer increased the crude total response rate by 4.8% and the extrapolated total response rate by 7.9%.

Table 3. Specific offers facilitating participation: Choice of data collection mode, proxy participation and consent by legal representative among participants and the effect on response rate

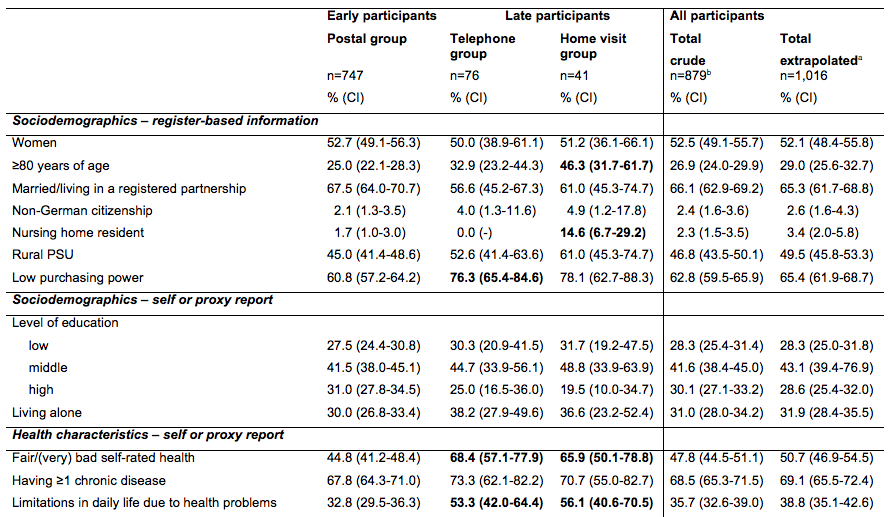

Survey design effects on sample composition: Comparison of early vs. late participants

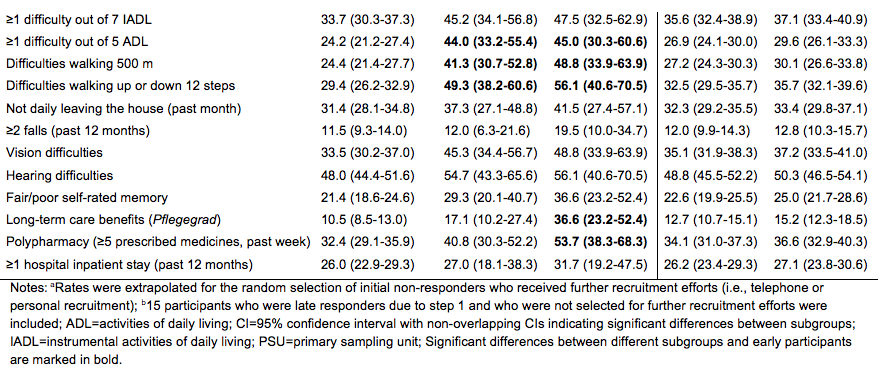

An overview of the differences in sociodemographic and health characteristics between early and late participants is provided in Table 4. Early participants recruited through postal contact only (step 1, n=747) were younger, better educated, less often nursing home residents, less often lived in areas with low purchasing power and generally less often reported health problems or functional limitations than late participants recruited in subsequent recruitment efforts. Compared to proportions calculated for the early participants (n=747), proportions of nearly all health problems increased when all 132 late participants were included in the analyses (crude total: n=879). This effect was even more marked when proportions were extrapolated for the random selection process.

Table 4. Differences in sociodemographic and health variables between early and late participants

Table 5 displays the sample composition of the adjusted gross sample and of the subsamples of participants after each sequential contact step. The deviation from the adjusted gross sample was highest after the first step of the sequential mixed-mode design (step 1a: deviation factor=5.5). After the reminder letter (step 1b), the deviation decreased to 4.4 but was not further affected by step 2. The final step 3 (i.e., home visit(s)) reduced the deviation from the gross sample markedly (deviation factor=3.9), particularly in the extrapolated sample (deviation factor=2.5). Thus, this last step notably improved the sample composition.
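The deviation factor itself is defined in the methods section, which is not part of this excerpt. Purely to illustrate how such an index can summarise the distance between a participant subsample and the gross sample, the sketch below uses a hypothetical definition, the mean absolute percentage-point difference across categories; it is not necessarily the authors' formula, and all percentages are invented.

```python
def deviation_index(gross: dict[str, float], sample: dict[str, float]) -> float:
    """Mean absolute percentage-point difference between a subsample and the gross sample.

    HYPOTHETICAL definition for illustration only. Keys are category labels,
    values are percentages in the gross sample and in the participant subsample.
    """
    if gross.keys() != sample.keys():
        raise ValueError("both distributions must cover the same categories")
    return sum(abs(sample[k] - gross[k]) for k in gross) / len(gross)


# Invented percentages for three sociodemographic categories
gross = {"80+ years": 40.0, "nursing home": 6.0, "married": 50.0}
after_step1 = {"80+ years": 30.0, "nursing home": 2.0, "married": 58.0}
after_step3 = {"80+ years": 35.0, "nursing home": 4.0, "married": 54.0}

print(f"step 1: {deviation_index(gross, after_step1):.2f}")
print(f"step 3: {deviation_index(gross, after_step3):.2f}")
```

Under any index of this kind, a smaller value after a later step means the realised sample has moved closer to the gross sample, which is how the reduction from 5.5 to 3.9 (and 2.5 extrapolated) should be read.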

Table 5. Deviation of subsamples of participants from the adjusted gross sample in sociodemographic characteristics

Non-response bias: Comparison of participants and non-participants

In our study, 879 individuals participated and 1,091 did not. Of the non-participants, 129 completed the non-responder short questionnaire (soft refusers) and 962 were classified as final non-responders. Descriptively, non-participants were more often female, aged 80 years or older and nursing home residents, were less often married or living in a registered partnership and more often had non-German citizenship than participants (Table 6). Non-participants more often lived in the rural PSU, and the provider was less often able to identify a telephone number for them. A logistic regression model of participation (vs. non-participation) on the sociodemographic characteristics available for both participants and non-participants showed that being married or living in a registered partnership (OR=1.7) and having German citizenship (OR=2.9) were significantly and positively associated with participation (p≤.001), while living in a nursing home (OR=0.4) and living in a rural area (OR=0.7) were significantly and negatively associated with participation (p≤.001), compared to their respective reference groups. No other significant associations remained in this logistic regression model.
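The odds ratios above come from a multivariable logistic regression, so each is adjusted for the other characteristics in the model (OR = exp(β) for the corresponding coefficient β). For intuition about what an odds ratio measures, a crude (unadjusted) OR and its Woolf 95% confidence interval can be computed from a 2×2 table. The sketch uses invented counts, not the study's data, and a crude OR will generally differ from the adjusted ORs reported in the text.

```python
import math


def crude_odds_ratio(a: int, b: int, c: int, d: int, z: float = 1.96) -> tuple[float, float, float]:
    """Crude odds ratio with a Woolf (log-scale) confidence interval.

    2x2 layout:
                      participant   non-participant
        exposed            a               b
        unexposed          c               d
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: Woolf interval undefined without a continuity correction")
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(OR)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# Invented counts: married vs. not married among 879 participants and 1,091 non-participants
or_, lo, hi = crude_odds_ratio(a=500, b=400, c=379, d=691)
print(f"crude OR {or_:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

An OR above 1 means higher odds of participation in the exposed group; an OR below 1 (e.g., nursing home residence, OR=0.4 in the adjusted model) means lower odds.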

Descriptively, soft refusers were more often aged 80 years or older and less often married or living in a registered partnership than participants, and the provider was less often able to identify a telephone number for them (Table 6). The non-responder short questionnaire also allowed a descriptive comparison of soft refusers and participants regarding basic health characteristics, level of education and proxy participation. Compared to participants, soft refusers more often participated by proxy and more often had a low level of education. Regarding health-related factors, soft refusers more often reported fair, bad or very bad self-rated health and limitations in daily life due to health problems. We estimated a logistic regression model to assess the association of participation (vs. soft refusal) with all sociodemographic and health-related characteristics available for both participants and soft refusers. Being married or living in a registered partnership (OR=1.7) and having an available telephone number (OR=1.8) were significantly and positively associated with participation (p≤.05), while using the offer of proxy participation (OR=0.4) was significantly and negatively associated with participation (p≤.01). No other significant associations remained in this logistic regression model.

Table 6. Characteristics of total gross sample, participants, and non-participants at end of field period

Reasons for non-participation

Of all 1,091 non-participants, 36.1% (n=393) stated at least one reason for non-participation (range: 1 to 4 reasons). Among these 393 individuals, the most frequently mentioned reason was a lack of interest in the study (42.4%), followed by illness (20.3%), concerns about the study (16.2%; e.g., regarding data protection), time constraints (12.2%), long absence during the field period (9.1%), having to provide nursing care for family members (4.1%) and being unable to participate (3.8%). A further 16.8% mentioned other reasons, such as the death of a family member or a fundamental opposition to study participation.
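Because non-participants could give up to four reasons, the percentages above are shares of the 393 individuals who stated at least one reason, and together they exceed 100% (124.9%). A minimal sketch of such a multiple-response tabulation; the answers below are invented and the function name is illustrative:

```python
from collections import Counter


def reason_shares(answers: list[set[str]]) -> dict[str, float]:
    """Percentage of respondents naming each reason (multiple answers allowed).

    Respondents who gave no reason are excluded from the denominator,
    mirroring an 'among those who stated >=1 reason' base.
    """
    with_reason = [a for a in answers if a]
    counts = Counter(reason for a in with_reason for reason in a)
    return {reason: 100 * n / len(with_reason) for reason, n in counts.most_common()}


# Invented answers from five non-participants (one gave no reason)
answers = [
    {"lack of interest"},
    {"illness", "lack of interest"},
    {"time constraints"},
    set(),
    {"illness", "concerns about the study"},
]
shares = reason_shares(answers)
print(shares)
print(f"sum of shares: {sum(shares.values()):.1f}%")  # exceeds 100% with multiple answers
```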

Discussion

As a main result, the sequential mixed-mode design applied in the IMOA pilot study improved contact and response rates compared to recruitment by postal contact only (contact rate: 71.7% vs. 51.7%; response rate: 44.6% vs. 37.8%). This is in line with other studies that combined postal contact with other contact modes and thereby improved their response rates [28, 36-39]. As further contact steps in our study were only offered to random subgroups of initial non-responders, rates were extrapolated, yielding a projected contact rate of 94.4% and a projected response rate of 51.6%. Thus, with more resources, even more individuals could have been reached with the applied survey design. The achieved response rates exceed those reported in previous national general-population health surveys of the Robert Koch Institute among individuals aged 65 years or older, which have varied between 20% for those aged 75 years or older and 33% for those aged 65 to 74 years in a health interview survey offering online and postal questionnaires [47], and between 38% for those aged 70 to 79 years and 47% for those aged 60 to 69 years in a health examination survey [6]. Our response rate also exceeds the 23% achieved in a previous epidemiological cohort study of the population aged 65 years or older in Berlin, which used comprehensive recruitment methods and applied only few exclusion criteria [53].
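Extrapolating the rates amounts to letting each randomly selected non-responder stand for all initial non-responders, i.e., weighting the outcomes of the follow-up subsample by the inverse of its sampling fraction. A minimal sketch for a single random follow-up subsample, assuming this simple double-sampling weighting (the study's exact procedure, with separate telephone and home visit groups, is described in its methods section); function names and counts are hypothetical:

```python
def extrapolated_rate(n_eligible: int, n_early: int, n_late_in_subsample: int,
                      sampling_fraction: float) -> float:
    """Extrapolated rate (%) when later contact steps reach only a random subsample.

    n_eligible: eligible persons in the gross sample
    n_early: persons contacted (contact rate) or participating (response rate) after postal contact
    n_late_in_subsample: additional persons contacted/participating in the follow-up subsample
    sampling_fraction: share of initial non-responders randomly selected for follow-up
    """
    if not 0 < sampling_fraction <= 1:
        raise ValueError("sampling_fraction must be in (0, 1]")
    # ASSUMPTION: each selected non-responder represents 1 / sampling_fraction non-responders
    projected_late = n_late_in_subsample / sampling_fraction
    return 100 * (n_early + projected_late) / n_eligible


def crude_rate(n_eligible: int, n_early: int, n_late_in_subsample: int) -> float:
    """Crude rate (%): late cases counted once, as observed."""
    return 100 * (n_early + n_late_in_subsample) / n_eligible


# Hypothetical: 1,000 eligible, 400 early participants; 25% of the 600 initial
# non-responders (150 persons) are followed up and 30 of them participate.
crude = crude_rate(1000, 400, 30)                       # 43.0
extrapolated = extrapolated_rate(1000, 400, 30, 0.25)   # 52.0
print(f"crude {crude:.1f}%, extrapolated {extrapolated:.1f}%")
```

The gap between the two figures grows as the sampling fraction shrinks, which is why the projected rates in the study are considerably higher than the crude ones.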

The final crude and extrapolated cooperation rates were lower than the rate after initial postal contact (62.3% and 54.6%, respectively, vs. 88.8%). With each additional step of the sequential mixed-mode design, the large initial gap between response and cooperation rates (22.8% vs. 88.8%) narrowed, as response rates increased and cooperation rates decreased. This can be explained by the marked reduction in the proportion of non-contacts. However, compared to the initial postal contact, a lower proportion of the contacts made during later steps resulted in study participation, which reduced cooperation rates. In sum, Health Monitoring at the Robert Koch Institute should benefit from using contact modes in addition to the standard postal contact when surveying older individuals, as these increase contact and response rates.
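The opposite movement of response and cooperation rates follows from their denominators: the response rate includes non-contacts, whereas the cooperation rate only includes contacted eligible cases. A minimal sketch using the AAPOR RR1 and COOP1 formulas [48]; which AAPOR variants the study used is not stated in this excerpt, and all counts are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Dispositions:
    """Final case counts in AAPOR terms (hypothetical numbers)."""
    complete: int      # I: complete interviews
    partial: int       # P: partial interviews
    refusal: int       # R: refusals and break-offs
    non_contact: int   # NC: non-contacts
    other: int         # O: other eligible non-interviews
    unknown: int = 0   # UH + UO: cases of unknown eligibility

    def rr1(self) -> float:
        """AAPOR Response Rate 1 (%): I / ((I+P) + (R+NC+O) + (UH+UO))."""
        denom = (self.complete + self.partial + self.refusal + self.non_contact
                 + self.other + self.unknown)
        return 100 * self.complete / denom

    def coop1(self) -> float:
        """AAPOR Cooperation Rate 1 (%): I / ((I+P) + R + O), contacted cases only."""
        denom = self.complete + self.partial + self.refusal + self.other
        return 100 * self.complete / denom


# After postal contact, most non-participants are non-contacts ...
postal = Dispositions(complete=400, partial=0, refusal=50, non_contact=550, other=0)
# ... later steps turn many non-contacts into contacts, but many of them refuse
final = Dispositions(complete=450, partial=0, refusal=250, non_contact=300, other=0)

for label, d in [("postal", postal), ("final", final)]:
    print(f"{label}: RR1 {d.rr1():.1f}%, COOP1 {d.coop1():.1f}%")
```

In this invented example, RR1 rises from 40.0% to 45.0% while COOP1 falls from 88.9% to 64.3%, the same qualitative pattern as in the study.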

In general, participants of the telephone and home visit groups used at least one additional offer to facilitate participation more often than participants recruited by postal contact only (36.8% and 43.9% vs. 6.2%). These offers increased the extrapolated response rate by 7.9%. Among the offers, the choice of data collection mode was used most often, but mainly by initial non-responders: only 3.2% of the early participants recruited by postal contact only chose a telephone or face-to-face interview. In the total sample, proxy participation and informed consent by legal representatives occurred rarely (3.8% and 1.4%), and mostly in the home visit group. Among individuals aged 65 years or older, having a legal representative becomes steadily more common with increasing age [54]. Assuming that a substantial proportion of older adults with a legal representative have limited ability to consent to study participation, we suspect that we missed potential study participants who could have been reached via their legal representatives. Future studies will have to develop better means of contacting and including legal representatives.

In addition, owing to the later contact steps, the deviation of the realised sample from the gross sample with respect to sociodemographic characteristics diminished notably, especially after the reminder letter (step 1b) and the home visit(s) (step 3). In contrast, sample composition did not benefit from the second step (telephone group: up to 10 telephone calls; home visit group: announcement letter). This was not surprising for two reasons. First, the increase in participants after step 2 is mainly explained by the telephone contacts in the telephone group (step 2a), whereas the announcement of a home visit hardly affected the response rate (step 2b). Second, having an available telephone number was also included in the calculation of the deviation factor; since a known telephone number was a prerequisite for telephone contact, the proportion of persons with an identifiable telephone number is much higher in this subgroup of participants.

Consistent with other studies [30, 39, 55], late participants recruited via telephone or face-to-face contact were older, less well educated and more often lived in areas with low purchasing power, i.e., groups that are generally hard to reach in surveys [14, 56]. Like most other studies that compared early with late participants [30, 39, 55, 57-59], we found that late participants reported poorer health than early participants. This seemed to be especially true for participants of the home visit group, who were contacted via the most expensive mode, i.e., face-to-face. In line with a recent systematic review [31], this contact mode also yielded the highest proportion of the oldest old (i.e., 80 years or older). In addition, these individuals had the highest proportions of morbidity and of limitations in physical functioning in our study. Thus, health surveys among individuals aged 65 years or older should use more than one contact mode and more than one data collection mode [31, 39], as estimates of health characteristics, and the estimates of health care needs derived from them, could otherwise be clearly biased.

Although non-response bias was reduced, it still occurred in our study. Comparing participants and non-participants, we found patterns that are well known from the literature [20, 25, 27, 28]: non-participants were more often unmarried, nursing home residents or of non-German citizenship, and they more often lived in the rural PSU. Unlike others who reported that non-participants more often lived in socially deprived areas [20, 27], we observed no differences between participants and non-participants regarding low purchasing power of their living area.

The follow-up non-responder questionnaire helped gather at least minimal information from the oldest old, unmarried individuals, persons with low levels of education, those who made use of proxy interviews and persons without an available telephone number. Moreover, in line with previous studies [15, 22, 23, 25, 28], including soft refusers in the survey affected the sample composition with regard to health-related outcomes: soft refusers more often reported limitations in daily life due to health problems, and their self-rated health was more often fair, poor or very poor than that of participants. However, not all of these univariate associations remained significant in the multivariable logistic regression model, especially those with health-related outcomes. The analysis of reasons for non-participation also indicated that non-participation in our study was associated with poorer health: as in other studies [20, 22, 27, 28], illness was one of the main reasons for non-participation. However, the relatively small numbers have to be taken into account.

Strengths and limitations

The main strengths of our study are that only a few exclusion criteria were applied, and that neither older age nor physical or mental disability was among them. In addition, some of our non-response analyses are based on register-based sociodemographic data available for the total sample.

However, the study has a number of limitations. First, the different contact modes in our sequential mixed-mode design were not randomly allocated; hence, the differences presented cannot be attributed entirely to the contact mode. Additional analyses (not presented) showed that, in the total sample, individuals with an available telephone number differed significantly from those without one regarding sociodemographic characteristics: they were more often married and more often lived in the rural PSU, and they were less often of non-German citizenship or nursing home residents. Therefore, nursing home residents in our study could only be reached by face-to-face contact when postal contact failed [42]. Thus, if a survey design does not provide face-to-face contact, this will be one reason why hard-to-reach groups such as nursing home residents and migrants are underrepresented among study participants. Second, due to limited financial resources, further recruitment efforts had to be restricted to random subgroups of initial non-responders. This resulted in rather small numbers of participants in the telephone and home visit groups. Consequently, the reported 95% confidence intervals were rather wide in the analyses comparing these groups, which precluded multivariable analyses of differences between early and late participants. Nonetheless, it was possible to detect some differences between participants according to contact mode. In addition, we extrapolated the effects of our study design to those initial non-responders not selected for further recruitment, to obtain at least some estimates of what could have been expected had these steps been applied to the total sample. Third, the present study was a pilot study based on only two PSUs. Our main focus was to apply a sequential mixed-mode design and to understand and describe potential difficulties. The study was therefore primarily a feasibility study and was not aimed at providing population-based prevalence estimates. Accordingly, we did not apply population weights, and our estimates of health characteristics can only be interpreted as proportions rather than as prevalence estimates for the selected regions. Fourth, sequential mixed-mode designs have “a potential for measurement error as the modes used may cause measurement differences” [32, p. 241]; we did not control for potential measurement differences in our analyses. Fifth and finally, as our results rely on only two PSUs, they cannot be generalised.

Practical implications

In sum, a sequential mixed-mode design that starts with an inexpensive, resource-saving contact mode such as postal contact, followed by more expensive contact modes, can contribute to higher response rates among older individuals while saving costs. Moreover, offering different data collection modes improves the composition of samples of older individuals, as in the IMOA pilot study. Furthermore, differences in sociodemographic characteristics between the total sample and the realised sample could be reduced by the additional contact modes. Age-adapted design features such as proxy participation and choice of data collection mode were used infrequently in our study. Nevertheless, they are considered of paramount importance for integrating specific subgroups of older individuals who could otherwise not participate. However, illness as a reason for non-participation was still very common in the IMOA pilot study. Thus, more research is needed to develop age-specific design features that allow individuals with cognitive and/or physical impairments to participate in surveys.

The experiences of the IMOA pilot study will inform the design of the next national health interview and examination survey for adults in Germany, which aims to reduce participation barriers for older people with functional impairments. One arm of this survey will explicitly be based on a two-stage cluster random sample of adults aged 65 years or older residing in Germany. It will thus provide a better data set for subgroup analyses that were beyond the scope of the present explorative study.

Conclusion

To conclude, the sequential mixed-mode design of the IMOA pilot study appears to have been successful in improving contact and response rates and in achieving a more heterogeneous sample composition that better resembles the gross sample in a general-population health study among individuals aged 65 years or older. However, register-based and external data as well as the integrated non-responder analysis indicated that some non-response bias remained in our study. Moreover, applying this survey design in future health surveys will lead to higher recruitment costs, especially as only a small proportion of our total sample had an available telephone number. Therefore, face-to-face contact will most often be the only alternative contact option when postal contact fails. One cost-saving possibility could be the integration of supplemental methods such as linkage to geographic information [60, 61], medical records or other registers [e.g. 22]. This approach could minimise the burden on participants, the duration of data collection, missing data and non-response bias. In addition, health research campaigns are urgently needed to improve the image of health studies among the general public. To further improve participation in health studies, it seems essential to increase knowledge about, raise interest in and address concerns about health studies among individuals aged 65 years or older and their social environment, e.g. through broader mass media campaigns.

References

- GeroStat – Deutsches Zentrum für Altersfragen. Bevölkerung Deutschlands nach demographischen Merkmalen. Deutschland, Jahresende, nach Altersgruppen, 2016. [cited 25.01. 2019]; Available from: https://www.gerostat.de/scheduler2.py?Att_1=REGION&Att_1=D&Att_1=D&Att_2=GESCHLECHT&Att_2=I&Att_3=FSTAND&Att_3=I&Att_4=STAATSAN&Att_4=BI&Att_5=STAND&Att_5=JE&Att_2=I&Att_3=I&Att_4=BI&Att_5=JE&Att_6=ALTERSGR&Att_6=I&Att_6=I&Att_6=65-%3C00&Att_6=80-%3C00&Att_7=JAHR&Att_7=2016&Att_7=2016&VALUE_=D2%28QCode%2CFormat%2CWert%29&RESTRICT=YES&SUBMIT=Anfrage&TABLE_=BE_FO_2__DE.

- Destatis. 13. koordinierte Bevölkerungsvorausberechnung in Deutschland. 2018 [cited 19.09. 2018]; Available from: https://service.destatis.de/bevoelkerungspyramide/#!y=2038&a=65,80&g.

- German National Cohort (GNC) Consortium, The German National Cohort: aims, study design and organization. European Journal of Epidemiology, 2014. 29(5): p. 371-382.

- Holle, R., et al., KORA – A Research Platform for Population Based Health Research. Gesundheitswesen, 2005. 67(S 01): p. 19-25.

- John, U., et al., Study of Health in Pomerania (SHIP): A health examination survey in an east German region: Objectives and design. Sozial- und Präventivmedizin, 2001. 46(3): p. 186-194.

- Kamtsiuris, P., et al., [The first wave of the German Health Interview and Examination Survey for Adults (DEGS1): sample design, response, weighting and representativeness]. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz, 2013. 56(5-6): p. 620-30.

- Bayer, A. and W. Tadd, Unjustified exclusion of elderly people from studies submitted to research ethics committee for approval: descriptive study. BMJ, 2000. 321(7267): p. 992-3.

- Fried, L.P., et al., The Cardiovascular Health Study: design and rationale. Ann Epidemiol, 1991. 1(3): p. 263-76.

- Newman, A.B., et al., Strength and muscle quality in a well-functioning cohort of older adults: the Health, Aging and Body Composition Study. J Am Geriatr Soc, 2003. 51(3): p. 323-30.

- Zipf, G., et al., National Health and Nutrition Examination Survey: Plan and operations, 1999–2010 in Vital Health Stat. 2013, National Center for Health Statistics: Hyattsville, Maryland.

- infas Institut für angewandte Sozialwissenschaften GmbH, Alterssurvey 2008 – Die zweite Lebenshälfte- Durchführung der 3. Befragungswelle. Methodenbericht. 2009, infas Institut für angewandte Sozialwissenschaften GmbH: Bonn.

- Santourian, A. and S. Kitromilidou, Quality report of the second wave of the European Health Interview survey. 2018, Publications Office of the European Union: Luxembourg.

- Green, E., et al., Exploring patterns of response across the lifespan: the Cambridge Centre for Ageing and Neuroscience (Cam-CAN) study. BMC Public Health, 2018. 18(1): p. 1-7, art. no. 760.

- Groves, R.M. and M.P. Couper, Nonresponse in Household Interview Surveys. 1998, New York: John Wiley & Sons.

- Kelfve, S., et al., Do postal health surveys capture morbidity and mortality in respondents aged 65 years and older? A register-based validation study. Scand J Public Health, 2015. 43(4): p. 348-55.

- Herzog, R.A., W.L. Rodgers, and R.A. Kulka, Interviewing Older Adults: A Comparison of Telephone and Face-to-Face Modalities. Public Opinion Quarterly, 1983. 47: p. 405-418.

- Mody, L., et al., Recruitment and Retention of Older Adults in Aging Research. J Am Geriatr Soc, 2008. 56(12): p. 2340–2348.

- Schneekloth, U. and H.W. Wahl, eds. Möglichkeiten und Grenzen selbständiger Lebensführung in privaten Haushalten (MuG III). Repräsentativbefunde und Vertiefungsstudien zu häuslichen Pflegearrangements, Demenz und professionellen Versorgungsangeboten. 2005, Integrierter Abschlussbericht im Auftrag des Bundesministeriums für Familie, Senioren, Frauen und Jugend: München.

- Kelfve, S., Underestimated Health Inequalities Among Older People-A Consequence of Excluding the Most Disabled and Disadvantaged. J Gerontol B Psychol Sci Soc Sci, 2017. 0(0): p. 1-10.

- Gaertner, B., et al., Baseline participation in a health examination survey of the population 65 years and older: who is missed and why? BMC Geriatr, 2016. 16: p. 1-12, art. no. 21.

- de Souto Barreto, P., Participation bias in postal surveys among older adults: the role played by self-reported health, physical functional decline and frailty. Arch Gerontol Geriatr, 2012. 55(3): p. 592-8.

- Langhammer, A., et al., The HUNT study: participation is associated with survival and depends on socioeconomic status, diseases and symptoms. BMC Med Res Methodol, 2012. 12: p. 1-14, art. no. 143.

- Launer, L.J., A.W. Wind, and D.J. Deeg, Nonresponse pattern and bias in a community-based cross-sectional study of cognitive functioning among the elderly. Am J Epidemiol, 1994. 139(8): p. 803-12.

- Minder, C.E., et al., Subgroups of refusers in a disability prevention trial in older adults: baseline and follow-up analysis. Am J Public Health, 2002. 92(3): p. 445-50.

- Nummela, O., et al., Register-based data indicated nonparticipation bias in a health study among aging people. J Clin Epidemiol, 2011. 64(12): p. 1418-25.

- Wihlborg, A., K. Akesson, and P. Gerdhem, External validity of a population-based study on osteoporosis and fracture. Acta Orthop, 2014. 85(4): p. 433-7.

- Gao, L., et al., Changing non-participation in epidemiological studies of older people: evidence from the Cognitive Function and Ageing Study I and II. Age Ageing, 2015. 44(5): p. 867-73.

- Stang, A., et al., Baseline recruitment and analyses of nonresponse of the Heinz Nixdorf Recall Study: identifiability of phone numbers as the major determinant of response. Eur J Epidemiol, 2005. 20(6): p. 489-96.

- Kearney, P.M., et al., Comparison of centre and home-based health assessments: early experience from the Irish Longitudinal Study on Ageing (TILDA). Age Ageing, 2010. 40(1): p. 85-90.

- Noguchi-Shinohara, M., et al., Differences in the prevalence of dementia and mild cognitive impairment and cognitive functions between early and delayed responders in a community-based study of the elderly. J Alzheimers Dis, 2013. 37(4): p. 691-8.

- Liljas, A.E.M., et al., Strategies to improve engagement of ‘hard to reach’ older people in research on health promotion: a systematic review. BMC Public Health, 2017. 17(1): p. 1-12, art. no. 349.

- De Leeuw, E.D., To Mix or Not to Mix Data Collection Modes in Surveys. Journal of Official Statistics, 2005. 21(2): p. 233-255.

- de Leeuw, E.D., J.J. Hox, and D.A. Dillman, Mixed-mode surveys: when and why in International Handbook of Survey Methodology, E.D. de Leeuw, J.J. Hox, and D.A. Dillman, Editors. 2008, Lawrence Erlbaum Assoc Inc: New York. p. 299-316.

- Roberts, C., Mixing modes of data collection in surveys: A methodological review. ESRC National Centre for Research Methods, NCRM Methods Review Papers, NCRM/008. 2007.

- Hochstim, J.R., A Critical Comparison of Three Strategies of Collecting Data from Households. Journal of the American Statistical Association, 1967. 62(319): p. 976-989.

- Burkhart, Q., et al., How Much Do Additional Mailings and Telephone Calls Contribute to Response Rates in a Survey of Medicare Beneficiaries? Field Methods, 2015. 27(4): p. 409-425.

- Harris, T.J., et al., Optimising recruitment into a study of physical activity in older people: a randomised controlled trial of different approaches. Age Ageing, 2008. 37(6): p. 659-65.

- Ives, D.G., et al., Comparison of recruitment strategies and associated disease prevalence for health promotion in rural elderly. Prev Med, 1992. 21(5): p. 582-91.

- Norton, M.C., et al., Characteristics of nonresponders in a community survey of the elderly. J Am Geriatr Soc, 1994. 42(12): p. 1252-6.

- Kelfve, S., M. Thorslund, and C. Lennartsson, Sampling and non-response bias on health-outcomes in surveys of the oldest old. Eur J Ageing, 2013. 10(3): p. 237-245.

- Robert Koch-Institut, ed. Pilotstudie zur Durchführung von Mixed-Mode-Gesundheitsbefragungen in der Erwachsenenbevölkerung (Projektstudie GEDA 2.0). Beiträge zur Gesundheitsberichterstattung des Bundes 2015, Robert Koch-Institut: Berlin.

- Gaertner, B., et al., Including nursing home residents in a general population health survey in Germany. Survey Methods: Insights from the Field, accepted.

- Hawranik, P. and V. Pangman, Recruitment of community-dwelling older adults for nursing research: a challenging process. Can J Nurs Res, 2002. 33(4): p. 171-84.

- Samelson, E.J., et al., Issues in conducting epidemiologic research among elders: lessons from the MOBILIZE Boston Study. Am J Epidemiol, 2008. 168(12): p. 1444-51.

- Auster, J. and M. Janda, Recruiting older adults to health research studies: A systematic review. Australas J Ageing, 2009. 28(3): p. 149-51.

- Lacey, R.J., et al., Evidence for strategies that improve recruitment and retention of adults aged 65 years and over in randomised trials and observational studies: a systematic review. Age Ageing, 2017. 46(6): p. 895-903.

- Lange, C., et al., Implementation of the European health interview survey (EHIS) into the German health update (GEDA). Arch Public Health, 2017. 75: p. 1-14, art. no. 40.

- The American Association for Public Opinion Research (AAPOR), Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys, 9th edition. 2016, AAPOR.

- Federal Ministry of the Interior. Federal Act on Registration of 3 May 2013 (Federal Law Gazette I p. 1084), as last amended by Article 11 of the Act of 18 July 2017 (Federal Law Gazette I p. 2745). 2017 [cited 14.05. 2018]; Available from: https://www.gesetze-im-internet.de/englisch_bmg/englisch_bmg.html.

- Dutwin, D., et al., Current Knowledge and Considerations Regarding Survey Refusals: Executive Summary of the AAPOR Task Force Report on Survey Refusals. Public Opinion Quarterly, 2014. 79(2): p. 411–419.

- Long, S.J., Regression Models for Categorical and Limited Dependent Variables. 1997, Thousand Oaks: Sage.

- StataCorp., Stata Statistical Software: Release 15. 2018, StataCorp LLC: College Station, TX.

- Holzhausen, M., et al., Operationalizing multimorbidity and autonomy for health services research in aging populations–the OMAHA study. BMC Health Serv Res, 2011. 11: p. 1-11, art. no. 47.

- Bundesministerium für Familie Senioren Frauen und Jugend. Die Lebenslage älterer Menschen mit rechtlicher Betreuung: Abschlussbericht zum Forschungs- und Praxisprojekt der Akademie für öffentliches Gesundheitswesen in Düsseldorf. 2004 [cited 15.08. 2018]; Available from: https://www.bmfsfj.de/blob/78932/459d4a01148316eba579d64cae9e1604/abschlussbericht-rechtliche-betreuung-data.pdf.

- Paganini-Hill, A., et al., Comparison of early and late respondents to a postal health survey questionnaire. Epidemiology, 1993. 4(4): p. 375-9.

- Dillman, D.A., Mail and Telephone Surveys. The Total Design Method. 1978, New York: John Wiley & Sons.

- Miyamoto, M., et al., Dementia and mild cognitive impairment among non-responders to a community survey. J Clin Neurosci, 2009. 16(2): p. 270-6.

- Selmer, R., et al., The Oslo Health Study: Reminding the non-responders – effects on prevalence estimates. Norsk Epidemiologi, 2003. 13(1): p. 89-94.

- Bootsma-van der Wiel, A., et al., A high response is not essential to prevent selection bias: results from the Leiden 85-plus study. J Clin Epidemiol, 2002. 55(11): p. 1119-25.

- Wright, K., et al., Geocoding to Manage Missing Data in a Secondary Analysis of Community-Dwelling, Low-Income Older Adults. Res Gerontol Nurs, 2017. 10(4): p. 155-161.

- Thissen, M., et al., [What potential do geographic information systems have for population-wide health monitoring in Germany? : Perspectives and challenges for the health monitoring of the Robert Koch Institute]. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz, 2017. 60(12): p. 1440-1452.

- Scheidt-Nave, C., et al., German health interview and examination survey for adults (DEGS) – design, objectives and implementation of the first data collection wave. BMC Public Health, 2012. 12: p. 1-16, art. no. 730.

- Brauns, H. and S. Steinmann, Educational Reform in France, West-Germany and the United Kingdom: Updating the CASMIN Educational Classification. ZUMA-Nachrichten, 1999. 23: p. 7-44.

- Lampert, T., et al., [Measurement of socioeconomic status in the German Health Interview and Examination Survey for Adults (DEGS1)]. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz, 2013. 56(5-6): p. 631-6.

- Cox, B., et al., The reliability of the Minimum European Health Module. Int J Public Health, 2009. 54(2): p. 55-60.

- Lawton, M.P., The functional assessment of elderly people. J Am Geriatr Soc, 1971. 19(6): p. 465-81.

- European Union, European Health Interview Survey (EHIS wave 2): Methodological manual. 2013, Luxembourg: Publications Office of the European Union.

- Katz, S., et al., Progress in development of the index of ADL. Gerontologist, 1970. 10(1): p. 20-30.

- National Health and Aging Trends Study (NHATS). Round 5 Data Collection Instrument Sections: Mobility. 2015 [cited 04.07. 2018]; Available from: https://www.nhats.org/scripts/instruments/018_MO_Round5.pdf.

- Lamb, S.E., et al., Development of a common outcome data set for fall injury prevention trials: the Prevention of Falls Network Europe consensus. J Am Geriatr Soc, 2005. 53(9): p. 1618-22.

- Lourenco, J., et al., Cardiovascular Risk Factors Are Correlated with Low Cognitive Function among Older Adults Across Europe Based on The SHARE Database. Aging Dis, 2018. 9(1): p. 90-101.

- d’Orsi, E., et al., Socioeconomic and lifestyle factors related to instrumental activity of daily living dynamics: results from the English Longitudinal Study of Ageing. J Am Geriatr Soc, 2014. 62(9): p. 1630-9.

- Sheffrin, M., I. Stijacic Cenzer, and M.A. Steinman, Desire for predictive testing for Alzheimer’s disease and impact on advance care planning: a cross-sectional study. Alzheimers Res Ther, 2016. 8(1): p. 1-7, art. no. 55.

- Knopf, H. and D. Grams, [Medication use of adults in Germany: results of the German Health Interview and Examination Survey for Adults (DEGS1)]. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz, 2013. 56(5-6): p. 868-77.