Investigating Respondent Multitasking and Distraction Using Self-reports and Interviewers’ Observations in a Dual-frame Telephone Survey

E. Aizpurua, E. O. Heiden, K. H. Park, J. Wittrock & M. E. Losch (2018), Investigating Respondent Multitasking and Distraction Using Self-reports and Interviewers’ Observations in a Dual-frame Telephone Survey. Survey Insights: Methods from the Field. Retrieved from https://surveyinsights.org/?p=10945

© the authors 2018. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Previous research has shown that people often engage in other activities while responding to surveys and that respondents’ multitasking generally has no effect on indicators of data quality (e.g., item non-response, non-differentiation). One of the limitations of these studies is that they have mostly used self-reported measures of respondents’ multitasking. We build on prior research by combining self-reported measures of multitasking with interviewers' observations of respondents' distractions recorded after each interview. The dataset comes from a statewide dual-frame random digit dial telephone survey of adults in a Midwestern state (n = 1,006) who were queried on topics related to awareness of and attitudes toward STEM education. We found that multitasking was frequent (45.6%) and that respondents who reported engaging in other activities were described as distracted twice as often as those who did not report multitasking (38.3% versus 19.0%). In terms of data quality, respondents who were multitasking provided less accurate responses to a knowledge question. However, we found no evidence that distractions, assessed by interviewers, compromised data quality. The implications of the results for survey practices are discussed.

Keywords

data quality, distraction, dual-frame, interviewers’ observations, multitasking, self-reports, telephone survey

Acknowledgement

The STEM Attitudes Survey was funded by the Iowa Governor’s STEM Advisory Council. We thank them for allowing CSBR to conduct additional methodological research using this project. The opinions, findings, and conclusions expressed in this presentation are those of the authors and not necessarily those of the Governor of Iowa, the Iowa Governor’s STEM Advisory Council, or the University of Northern Iowa.


Introduction

Recent studies have shown how often people engage in two or more tasks simultaneously in daily life (Voorveld, Segijn, Ketelaar, & Smith, 2014). Although most studies have focused on media multitasking, there is a growing body of research indicating that a high proportion of respondents engage in other activities while answering surveys. Estimates of its prevalence vary according to the operationalisation of multitasking, survey mode, and survey length. In the case of telephone surveys, prevalence rates range from 17% (Pew Research Center, 2006) to 55% (Heiden, Wittrock, Aizpurua, Park, & Losch, 2017). Studies that have used self-reported measures of multitasking in random digit dialing (RDD) dual-frame and cellphone samples in the United States have found multitasking rates ranging between 50% and 55% (Aizpurua, Park, Heiden, Wittrock, & Losch, 2017; Heiden et al., 2017; Lavrakas, Tompson, Benford, & Fleury, 2010). However, the Pew Research study (2006), which reported a significantly lower incidence, was based on interviewers’ observations of respondents’ multitasking. This study found a slightly higher incidence of multitasking among cellphone respondents (20%) when compared to landline respondents (17%). Nevertheless, two recent studies have analysed the correlates of multitasking using multivariate models and found that the type of device (landline versus cellphone) is not significant in predicting the probability of multitasking after controlling for other factors (Aizpurua et al., 2017; Heiden et al., 2017).

The study by Pew Research (2006) also showed that the prevalence of distracted respondents, as measured by interviewers’ observations immediately after the interviews, was lower than that of multitasking. While the interviewers recorded that 20% of cellphone respondents and 17% of landline respondents were engaged in other activities during the interviews, only about 10% were identified as somewhat or very distracted (8% of those using cellphones and 11% of those using landlines). To our knowledge, no study has combined self-reported measures of multitasking with interviewers’ observations of distractions to assess whether multitaskers are more often perceived as distracted. A few studies, however, have examined the effect of self-reported multitasking on the quality of responses. Using a national RDD dual-frame telephone survey, Kennedy (2010) found no evidence that self-reported multitasking affected item non-response, the length of responses to open-ended questions, non-differentiation, or response order effects. Nevertheless, it was found that respondents who were eating and/or drinking had difficulties with question comprehension although not with other response quality indicators. Lavrakas and colleagues (2010), using a cellphone sample, indicated that multitasking was not associated with answers to sensitive questions or non-differentiation. They did find, however, that multitaskers provided a higher number of non-substantive responses. Two recent statewide RDD dual-frame telephone surveys have examined the relationship between multitasking and data quality providing, in general, no indication that self-reported multitasking reduced the quality of responses (Aizpurua et al., 2017; Heiden et al., 2017). There was no evidence that multitaskers and non-multitaskers differed in their completion times, number of non-substantive responses and rounded numerical responses, or in their non-differentiation scores. Despite this, Heiden and colleagues (2017) found that multitasking was associated with significantly lower awareness of STEM (science, technology, engineering, and mathematics).

The work reported here builds on previous research by combining the use of self-reported measures of multitasking with interviewers’ observations of distractions to assess whether multitaskers are more often perceived as distracted. In addition, we analyse the relationship that multitasking and distraction might have with data quality by examining six different indicators. In particular, we aim to address the following research questions:

- How frequent are multitasking and distraction among survey respondents?

- What are the predictors of multitasking and distraction?

- Is there a relationship between multitasking and distraction?

- What, if any, are the effects of multitasking and distraction on data quality?

Based on prior research, we hypothesize that the prevalence of distraction will be lower than the prevalence of multitasking, although these concepts will be related. Finally, we anticipate that multitasking and distraction will have negative effects on data quality.

Methods

Data

Data were collected between June 19 and August 31, 2017, as part of a statewide dual-frame survey of adults in a Midwestern state regarding public awareness and attitudes toward STEM (science, technology, engineering, and mathematics). Interviews were administered by Computer Assisted Telephone Interviewing (CATI). A dual-frame random digit dial (DF-RDD) sample design, including landlines and cellphones, was used to collect the data. Samples were obtained from Marketing Systems Group (MSG). Respondents were eligible if they lived in the state and were 18 years of age or older at the time of the interview. For the landline samples, interviewers randomly selected adult members of households using a modified Kish procedure.

The interviews (N = 1,006, which included 91 landline and 915 cellular interviews) averaged 20 minutes in length (SD = 4.36). They were conducted in English (n = 988) and Spanish (n = 18) by interviewers at the Center for Social & Behavioral Research at the University of Northern Iowa. No incentives or compensation were offered for participation. Utilising the American Association for Public Opinion Research calculations, the overall response rate (RR3, AAPOR Standard Definitions 2016) was 27.0%. The response rates for the RDD landline and cellphone samples were 16.0% and 28.4%, respectively. The overall cooperation rate (AAPOR COOP3) was 69.7%. The cooperation rate for interviews completed via cellphone (77.1%) was higher than for landline interviews (35.4%).

Variables of Interest

Multitasking. Similar to previous studies (Heiden et al., 2017; Ansolabehere & Schaffner, 2015), a self-reported measure of multitasking was included at the end of the survey. Specifically, respondents were asked whether they had engaged in any other activities while completing the survey (“During the time we’ve been on the phone, in what other activities, if any, were you engaged such as watching TV or watching kids?”). The question was field coded and respondents could indicate as many activities as applied.

Distraction. At the end of each survey, interviewers were asked to indicate whether or not there was evidence of distraction during the interview. If the answer was positive, they were requested to specify the type of distraction. Responses to this question were combined into three categories: (1) noises (e.g., wind, static), (2) respondent behaviours (e.g., walking, moving objects), and (3) both noises and behaviours.

Respondent and interviewer characteristics. Respondent characteristics included age, gender, race, education, income, place of residence, type of telephone, time of the day in which the survey was completed, and whether or not they had children under 18 living in the household. Interviewer gender and experience were included in the final model.

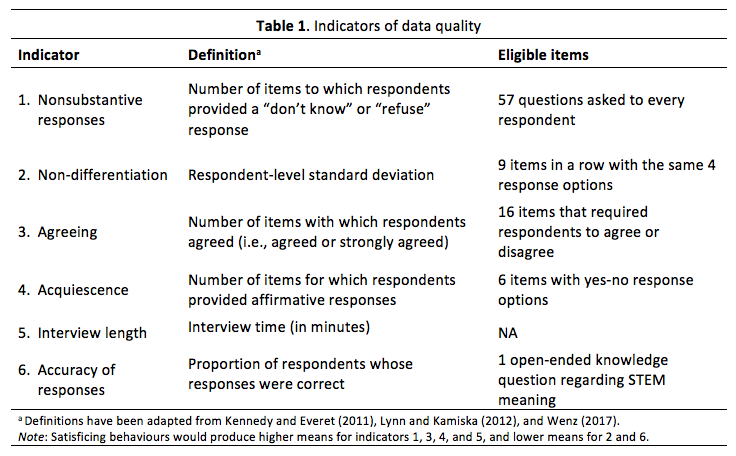

Data quality. Six indicators of data quality were used in this study, including: (1) nonsubstantive responses, (2) non-differentiation, (3) agreeing, (4) acquiescence, (5) interview length, and (6) accuracy of responses. Information about each of these indicators and how they were measured in the questionnaire is provided in Table 1. A greater tendency toward satisficing would produce higher means for indicators 1, 3, 4, and 5, and lower means for indicators 2 and 6.

Analytic Strategy

First, descriptive statistics of multitasking and distraction were calculated. Next, logistic regression models were used to predict the probability of multitasking as a function of respondent and interview characteristics. Potential differences in evaluations of distraction by multitasking status were analysed using chi-square tests. To examine the correlates of distraction, multilevel models were used, since they account for the clustering of respondents within interviewers. In particular, two-level logistic regression models with an interviewer random effect were estimated. Interviewers with fewer than nine interviews were excluded from the multilevel analysis, leaving an analytic sample size of 953 interviews conducted by 22 interviewers. More restrictive models in which the minimum number of interviews was 20 or more were estimated (available upon request) and the results were highly consistent, suggesting the robustness of the findings. The analysis was performed in steps, starting with a null model. Model 2 added respondent-level variables and model 3 was extended to include interviewer-level variables. The examination of the variance inflation factors suggested no multicollinearity problems in the models (VIF < 1.5). To explore any differences in data quality indicators based on self-reported multitasking and/or interviewers’ evaluations of distraction, t-tests, chi-square tests, and a logistic regression were conducted.
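As a reading aid, the two-level model for distraction described above can be written out as follows. The notation is ours and is an assumption, not taken from the original analysis: y_ij equals 1 if interviewer j described respondent i as distracted, x_ij collects the respondent-level variables (added in model 2), z_j collects the interviewer-level variables (added in model 3), and u_j is the interviewer random effect with variance τ. The null model corresponds to dropping both covariate terms.

```latex
\[
\operatorname{logit}\,\Pr(y_{ij} = 1)
  = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta}
  + \mathbf{z}_{j}^{\top}\boldsymbol{\gamma} + u_j,
\qquad u_j \sim N(0, \tau)
\]
```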

Results

The Prevalence of Multitasking and Distraction

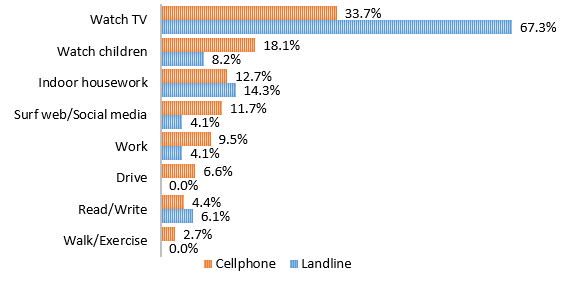

Nearly half of the respondents (45.6%) reported some form of multitasking during the interview. Although most of them (91.9%) reported one single activity, 8.1% of multitaskers indicated that they had engaged in two or more activities during the interview. Landline respondents more often reported multitasking when compared to cellphone respondents (53.8% versus 44.8%), although the difference did not reach significance (χ2 (1) = 2.73, p = 0.06, Cramer’s V = 0.05). Secondary activities slightly varied by telephone type as shown in Figure 1. The most common activities cited by cellphone multitaskers were watching television (33.7%), watching children (18.1%), and doing housework (12.7%). Landline multitaskers identified watching television, doing housework, and watching children as their most common activities (67.3%, 14.3%, and 8.2%, respectively). Some of the activities reported by respondents were unique to the telephone type such as driving and exercising, only mentioned by cellphone respondents.

Figure 1. Frequency of secondary activities by telephone type.

Note: Categories with fewer than 10 cases (combining cellphones and landlines) have been omitted from Figure 1.

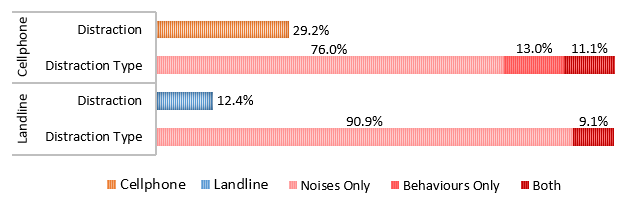

The interviewers described over one quarter of respondents (27.7%) as being distracted. This finding supports our first hypothesis (the prevalence of distraction will be lower than the prevalence of multitasking). As shown in Figure 2, cellphone respondents were more often described as distracted than landline respondents (29.2% versus 12.4%, χ2(1) = 11.52, p < .001, Cramer’s V = 0.11). When asked what evidence of distractions they had noticed, interviewers most often reported background noises (76% for cellphones, 90.9% for landlines) such as wind or road sounds, dogs barking, or phones ringing. Interviewers reported that 13.0% of cellphone respondents seemed distracted as evidenced by behaviours, including constantly asking to have questions repeated and providing particularly slow responses. The remaining 11.1% of cellphone respondents and 9.1% of landline respondents were classified as being affected by background noises and performing behaviours that suggested distraction.

Figure 2. Prevalence of distraction by telephone type.

Predictors of Multitasking

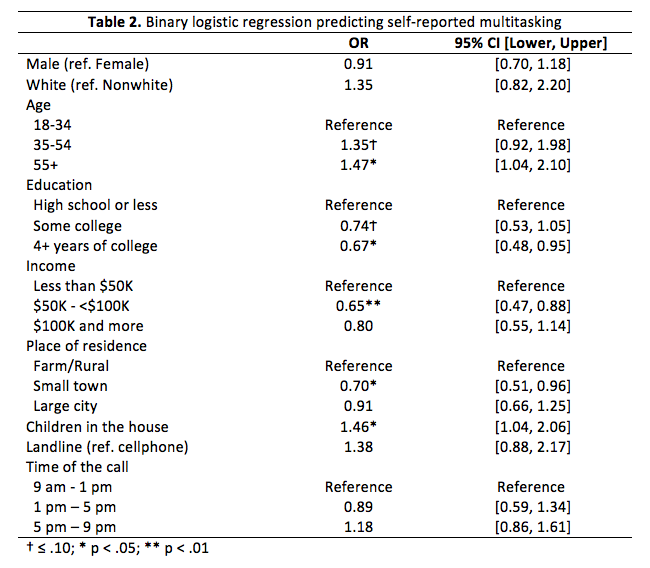

The first step of the multivariate analysis was to examine the predictors of multitasking. The odds ratios (OR) and corresponding 95% confidence intervals (CI) from the binary logistic regression are presented in Table 2. Respondents who were 55 years or older were significantly more likely to report multitasking (OR = 1.47, CI = [1.04, 2.10]) as compared with younger respondents (18-34 years old). In contrast, respondents with four or more years of college education were significantly less likely to report multitasking than those with high school educations or less (OR = 0.67, CI = [0.48, 0.95]). Similarly, respondents whose incomes fell between $50,000 and $100,000 were significantly less likely to report multitasking than respondents with smaller incomes (OR = 0.65, CI = [0.47, 0.88]). Those with children living in the house were significantly more likely to multitask than respondents with no children in the household (OR = 1.46, CI = [1.04, 2.06]). Finally, respondents who resided in small towns were less likely to report multitasking (OR = 0.70, CI = [0.51, 0.96]) than respondents who resided in rural areas.
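For readers less familiar with how odds ratios relate to logistic regression coefficients, the sketch below shows the conversion in both directions. It assumes the reported intervals are Wald-type intervals (exp(β ± 1.96 × SE)), which the text does not state; the function names are hypothetical and the only inputs taken from the paper are the reported OR and CI for respondents aged 55 or older.

```python
import math


def odds_ratio_with_ci(beta, se, z=1.96):
    """Convert a logit coefficient and its standard error
    into an odds ratio with a Wald-type confidence interval."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def logit_from_odds_ratio(odds_ratio, lower, upper, z=1.96):
    """Recover the approximate coefficient and standard error
    from a reported odds ratio and its confidence limits.
    Assumes the interval is symmetric on the log-odds scale."""
    beta = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return beta, se


# Reported values for respondents aged 55+ (OR = 1.47, CI = [1.04, 2.10]).
beta, se = logit_from_odds_ratio(1.47, 1.04, 2.10)
print(f"beta = {beta:.2f}, SE = {se:.2f}")
```

Because the published values are rounded, the recovered coefficient and standard error are approximate, and an interval rebuilt from them will not match the reported limits exactly.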

Predictors of Distraction

In this section, the relationship between multitasking and interviewers’ assessments of distraction is analysed. At the bivariate level, chi-square results indicated that these variables are not independent (χ2 (1) = 45.46, p < .001, Cramer’s V = 0.22). Multitaskers were described as being distracted twice as often as non-multitaskers (38.3% versus 19.0%). As a result, slightly over six in ten respondents (61.7%) identified as distracted were multitaskers.
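The bivariate test reported above can be illustrated with a short sketch that computes a Pearson chi-square statistic (without continuity correction), its p-value for one degree of freedom, and Cramér's V for a 2×2 table. The cell counts below are hypothetical and chosen only for illustration; they are not the study's data, and whether the original analysis applied a continuity correction is not stated.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square (no continuity correction), p-value (df = 1),
    and Cramér's V for a 2x2 table given as [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For df = 1 the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    # For a 2x2 table, min(rows, cols) - 1 = 1, so V = sqrt(chi2 / n).
    cramers_v = math.sqrt(chi2 / n)
    return chi2, p_value, cramers_v


# Hypothetical counts: rows = multitaskers / non-multitaskers,
# columns = described as distracted / not distracted.
example = [[40, 60], [20, 80]]
chi2, p_value, cramers_v = chi_square_2x2(example)
print(f"chi2 = {chi2:.2f}, p = {p_value:.4f}, V = {cramers_v:.2f}")
```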

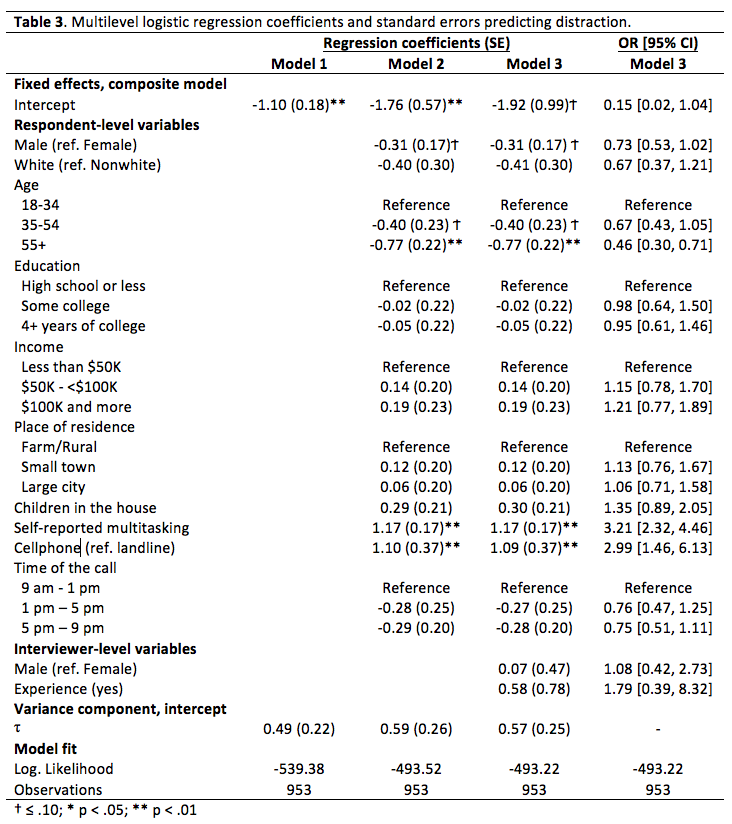

To further examine the relationship between multitasking and distraction, multilevel analyses that allowed the inclusion of covariates were carried out. Table 3 presents the coefficients for the three models. As indicated by the VPC (variance partition coefficient), 13% of the variance in interviewers’ assessments of distraction is attributable to interviewer-level factors, justifying the use of mixed-effects models. Model 1 shows that there is significant variability in assessments of distraction across interviewers (τ = 0.49, SE = 0.22). The inclusion of respondent-level variables in the next model increases level 2 variation (τ = 0.59, SE = 0.26), while incorporating interviewer-level variables slightly reduces level 2 variation in model 3 (τ = 0.57, SE = 0.25).
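The 13% figure can be reproduced from the null-model variance under one common convention. The calculation below assumes the latent-variable formulation of the logistic model, in which the respondent-level residual variance is fixed at π²/3 ≈ 3.29; the text does not state which VPC definition was used, so this is offered only as a plausible reconstruction.

```latex
\[
\mathrm{VPC} = \frac{\tau}{\tau + \pi^{2}/3}
             = \frac{0.49}{0.49 + 3.29} \approx 0.13
\]
```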

Regarding respondent characteristics, interviewers evaluated respondents aged 55 and older as significantly less distracted than those 18 to 34 years old (OR = 0.46, p < .001). Cellphone respondents were seen as significantly more distracted than landline respondents (OR = 2.99, p < .001). As hypothesised, multitasking was positively and significantly associated with interviewers’ assessments of distraction (OR = 3.21, p < .001). Interviewer characteristics were not significantly associated with interviewers’ assessments of distraction.

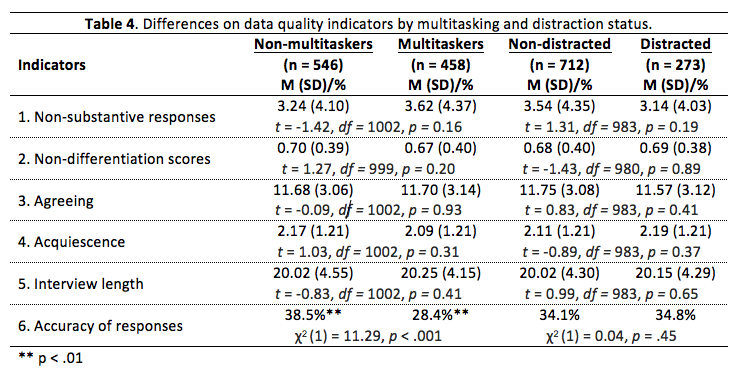

Effects of Multitasking and Distraction on Data Quality

Multitasking and distractions might damage data quality, as respondents’ attention is not devoted entirely to the survey. As can be seen in Table 4, there were no significant differences between multitaskers and non-multitaskers in any of the data quality indicators except for the accuracy of responses to the knowledge question. A greater proportion of non-multitaskers (38.5%) provided correct responses than did multitaskers (28.4%, χ2 (1) = 11.29, p < .001, Cramer’s V = 0.11). These findings provide partial support for our hypothesis that multitasking will have negative effects on data quality.

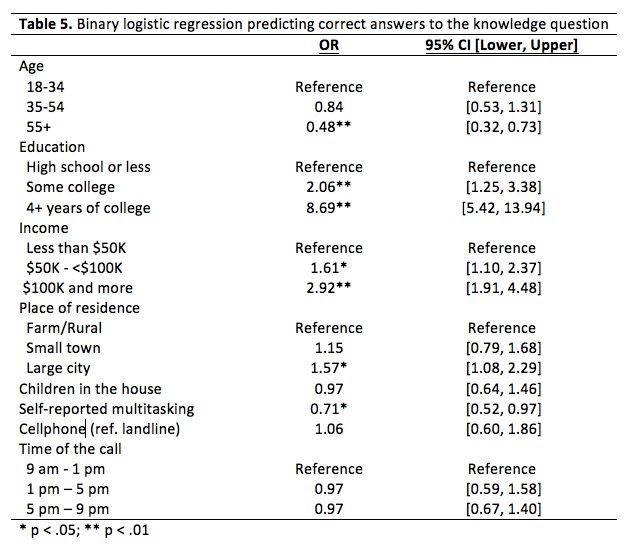

To further examine this relationship, a multivariate logistic regression was used to predict response accuracy based on multitasking status and respondents’ characteristics. Controlling for these covariates, self-reported multitasking predicted whether respondents provided correct answers to the knowledge question. Consistent with the bivariate findings, respondents who reported multitasking were less likely to provide correct answers (OR = 0.71, 95% CI = [0.52, 0.97]). Odds ratios and corresponding confidence intervals for all of the covariates can be found in Table 5.

Contrary to our hypothesis, when focusing on interviewers’ assessment of distraction rather than respondents’ reports of multitasking, there was no evidence of reduced data quality when distractions were reported during the interviews. There were no differences in the number of non-substantive or acquiescent responses between distracted and non-distracted respondents (t = 1.31, df = 983, p = 0.19 and t = -0.89, df = 983, p = 0.37, respectively). Completion times were similar in both groups (t = 0.99, df = 983, p = 0.65) as well as non-differentiation scores (t = -1.43, df = 980, p = 0.89). Distracted respondents did not agree significantly more (t = 0.83, df = 983, p = 0.41) and, although they provided a smaller proportion of correct responses than their non-distracted counterparts, the difference was not statistically significant (χ2 (1) = 0.04, p = .45).

Discussion and Conclusions

One of the aims of this study was to examine the prevalence of self-reported multitasking and its influence on data quality using data from a dual-frame random digit dial telephone survey of adults in a Midwestern state. According to our results, nearly one in two respondents (45.6%) reported engaging in one or more activities during the interviews. These activities ranged from those with more passive cognitive demands, like watching television, to others demanding higher levels of attention, including driving, reading, and writing. Consistent with previous studies (Aizpurua et al., 2017; Ansolabehere & Schaffner, 2015), watching television was the most common activity, especially for landline respondents where its prevalence was double that among cellphone respondents (67.3% versus 33.7%). Other indicators suggested that activities are a function of the type of telephone on which the survey was completed. For example, some activities were reported only by cellphone respondents and others, while not exclusive to them, were twice as common (e.g., watching children, surfing the Internet, or working). Older respondents, parents with children in the household, less educated individuals and those living in rural areas were more likely to report multitasking.

In addition to revealing the elevated presence of multitasking, our results draw a link between it and interviewers’ observations of distractions. Respondents who reported engaging in other activities during the interviews were described as distracted twice as often as those who did not report multitasking (38.3% versus 19.0%). Multilevel analyses suggested that the significant covariates of interviewers’ observations of distractions were age, type of telephone on which the interview was completed, and multitasking status. Being younger, completing the survey via cellphone, and reporting multitasking were predictive of being described as distracted by interviewers. This last finding builds on previous research documenting similar findings using self-reported measures of distraction (Zwarun & Hall, 2014). Interestingly, none of the interviewer characteristics included in the final model (sex and experience) explained the interviewer variation. Future studies could expand on these results by including additional interviewer characteristics that might explain these differences.

Respondents who were multitasking provided less accurate responses to the knowledge question. Only 28.4% of multitaskers provided correct definitions of STEM education, compared to 38.5% of non-multitaskers. This finding is consistent with a previous study in which multitaskers reported lower awareness of STEM education than non-multitaskers (Heiden et al., 2017). In contrast, no evidence was found that multitasking affected other data quality indicators, including nonsubstantive and acquiescent responses and non-differentiation scores. These results support previous research using telephone and online surveys (Aizpurua et al., 2017; Heiden et al., 2017; Lavrakas et al., 2010; Sendelbah, Vehovar, Slavec, & Petrovcic, 2016). Contrary to previous online surveys documenting increased completion times among multitaskers (Ansolabehere & Schaffner, 2015), ours, along with prior studies using telephone interviews (Aizpurua et al., 2017; Heiden et al., 2017), reported no differences in completion times based on multitasking status. This finding suggests that the effect of multitasking on interview time might be conditional on survey mode.

Contrary to our expectations, we found no evidence that distractions, assessed by interviewers, compromise data quality. None of the data quality indicators differed significantly between distracted and non-distracted respondents. In conclusion, while multitasking and distraction were relatively common among respondents, the good news is that they appear to have limited impact on data quality, especially for questions that are not particularly demanding. Future studies could investigate the effect that multitasking and distraction might have on different types of questions (e.g., attitudinal, behavioural, factual). It will also be valuable to examine these results in greater depth by analysing additional indicators such as the length of responses to open-ended questions.

References

- Aizpurua, E., Park, K.H., Heiden, E.O., Wittrock, J., & Losch, M.E. (2017). Multitasking and data quality: Lessons from a statewide dual-frame telephone survey. Paper presented at the Annual Conference of the Midwestern Association for Public Opinion Research. Chicago, IL. November 17-18.

- Ansolabehere, S. & Schaffner, B.F. (2015). Distractions: The incidence and consequences of interruptions for survey respondents. Journal of Survey Statistics and Methodology 3, 216–239. doi: 10.1093/jssam/smv003.

- Heiden, E.O., Wittrock, J., Aizpurua, E., Park, K.H., & Losch, M.E. (2017). The impact of multitasking on survey data quality: Observations from a statewide telephone survey. Paper presented at the Annual Conference of the American Association for Public Opinion Research. New Orleans, LA. May 18-21.

- Kennedy, C. (2010). Nonresponse and measurement error in mobile phone survey. Doctoral dissertation. University of Michigan, Ann Arbor.

- Kennedy, C., & Everett, S. (2011). Use of cognitive shortcuts in landline and cell phone surveys. Public Opinion Quarterly 75, 336-348. doi: 10.1093/poq/nfi007.

- Lavrakas, P.J., Tompson, T.N., & Benford, R. (2010). Investigating data quality in cell-phone surveying. Presentation at the Annual Conference of the American Association for Public Opinion Research, Chicago, IL. May 13-16.

- Lynn, P., & Kaminska, O. (2012). The impact of mobile phones on survey measurement error. Public Opinion Quarterly 77, 586-605. doi: 10.1093/poq/nfs046.

- Pew Research Center (2006). The cell phone challenge to survey research: National Polls Not Undermined by Growing Cell-Only Population. Retrieved from http://www.people-press.org/2006/05/15/the-cell-phone-challenge-to-survey-research/

- Sendelbah, A., Vehovar, V., Slavec, A., & Petrovcic, A. (2016). Investigating respondent multitasking in web surveys using paradata. Computers in Human Behavior 55, 777-787. http://dx.doi.org/10.1016/j.chb.2015.10.028.

- Voorveld, H.A.M., Segijn, C.M., Ketelaar, P.E., & Smith, E.G. (2014). Investigating the Prevalence and Predictors of Media Multitasking Across Countries. International Journal of Communication 8, 2755-2777.

- Wenz, A. (2017). Do distractions during web survey completion affect data quality? Presentation at the 7th Conference of the European Survey Research Association, Lisbon, Portugal. July 17-21.

- Zwarun, L. & Hall, A. (2014). What’s going on? Age, distraction, and multitasking during online survey taking. Computers in Human Behavior 41, 236-244. https://doi.org/10.1016/j.chb.2014.09.041.