Research Note: Reducing the Threat of Sensitive Questions in Online Surveys?

Couper, M. P. (2013). Research Note: Reducing the Threat of Sensitive Questions in Online Surveys? Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=1731

© the authors 2013. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

We explore the effect of offering an open-ended comment field in a Web survey to reduce the threat of sensitive questions. Two experiments were fielded in a probability-based Web panel in the Netherlands. For a set of 10 items on attitudes to immigrants, a random half of respondents were offered the opportunity to explain or clarify their responses, with the hypothesis being that doing so would reduce the need to choose socially desirable answers, resulting in higher levels of reported prejudice. Across two experiments, we find significant effects contrary to our hypothesis: the opportunity to comment decreased the level of prejudice reported, and longer comments were associated with more tolerant attitudes among those who were offered the comment field.

Keywords

attitudes toward immigrants, comment fields, social desirability, Web surveys

Acknowledgement

The LISS panel data were collected by CentERdata (Tilburg University, The Netherlands) through its MESS project funded by the Netherlands Organization for Scientific Research. We are grateful to the staff at CentERdata for the assistance with this project. The useful suggestions of the reviewers are also appreciated.

Introduction

This research note explores the effect of offering an open-ended comment field on the responses to a series of questions about attitudes toward immigrants in an online survey. The motivation for this research was a puzzling finding in an earlier study on race of interviewer effects. In that study (Krysan and Couper, 2003), it was found that – contrary to expectation – white respondents expressed more positive (less prejudicial) responses to minorities when interviewed using a virtual interviewer – a video of an interviewer in a computer-assisted self-interviewing (CASI) survey – than when interviewed by a live interviewer. This runs counter to the evidence that self-administered methods yield more candid responses to socially undesirable questions than interviewer-administered methods (see Tourangeau and Yan, 2007).

One post-hoc explanation for that surprising finding emerged from debriefings of respondents following the interview (Krysan and Couper, 2002). For example, one respondent offered the following comment: “Some questions were kind of broad and could use some clarification that may be possible with a live interview.” Another respondent expressed the following view: “I would have liked to add comments, while I did in the live interview, and that made it more comfortable.” In fact, 13% of white respondents spontaneously offered a comment of this type, while none of the African American respondents did so (Krysan and Couper, 2002).

This led Krysan and Couper to speculate that respondents may have been “reluctant in the virtual interviewer to give an answer that might appear racist because they could not explain themselves.” They went on to note that “even in mail surveys it is quite easy for respondents to make notations and marks on the margins of the questionnaire to explain or provide qualifications to their answer. When respondents are restricted in the virtual interviewer [CASI] to typing a single letter or number for their answer, they are not allowed such flexibility – though presumably the design of such an instrument could make this capability an option.” In a subsequent online study, Krysan and Couper (2006) did not explore this intriguing finding, and we know of no other studies that have investigated this possibility.

Given this, we designed a study to test this speculation, namely that offering respondents the opportunity to clarify or explain their responses may provide greater comfort in expressing potentially negative stereotypes that are typically subject to social desirability effects. We thus expected that those who were offered the comment field would have higher rates of expression of stereotypical or prejudicial attitudes. We also expected that use of the comment field (i.e., entering a comment) and the length of the comments entered would be associated with higher prejudice scores.

Design and Methods

We tested the effect of offering the opportunity to comment in two different surveys administered to members of CentERdata’s LISS panel, a probability-based online panel of adults aged 16 and older in the Netherlands (Scherpenzeel and Das, 2011; see www.lissdata.nl). Our experiments were restricted to panel members of Dutch ancestry (i.e., immigrants were excluded), and we used a standard battery of 10 items on attitudes towards immigrants. The items are reproduced in Table 1. These comprise a short form of the support for multiculturalism scale, developed by Breugelmans and van de Vijver (2004; see also Breugelmans, van de Vijver, and Schalk-Soekar, 2009; Schalk-Soekar, Breugelmans, and van de Vijver, 2008). We reversed the meaning of the scale so that higher scores indicate greater opposition to multiculturalism or negative attitudes toward immigrants. All items were measured on a 5-point fully-labeled scale (1=disagree entirely, 5=agree entirely). Items marked (R) in Table 1 are reverse-scored, so that a high score indicates more negative attitudes.
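The scoring rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes the scale score is the mean of the scored items (the note does not state how items were combined), and the item names, the set of reverse-scored items, and the example responses are all hypothetical.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 5-point scale: 1=disagree entirely, 5=agree entirely


def reverse_score(response: int) -> int:
    """Flip a response on the 1-5 scale: 1<->5, 2<->4, 3 stays 3."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"response {response} is outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - response


def prejudice_score(responses: dict[str, int], reversed_items: set[str]) -> float:
    """Mean of all items after flipping those flagged as reverse-scored.

    Assumption: the scale score is an item mean; the note does not say so.
    """
    scored = [
        reverse_score(value) if item in reversed_items else value
        for item, value in responses.items()
    ]
    return mean(scored)


# Hypothetical respondent and hypothetical (R) flags, for illustration only.
answers = {"item1": 2, "item2": 4, "item3": 5, "item4": 1}
flagged = {"item1", "item4"}
score = prejudice_score(answers, flagged)
print(score)  # 4.5
```

After flipping, every item points in the same direction, so a higher mean always corresponds to more negative attitudes.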

Table 1. Immigrant Attitude Items

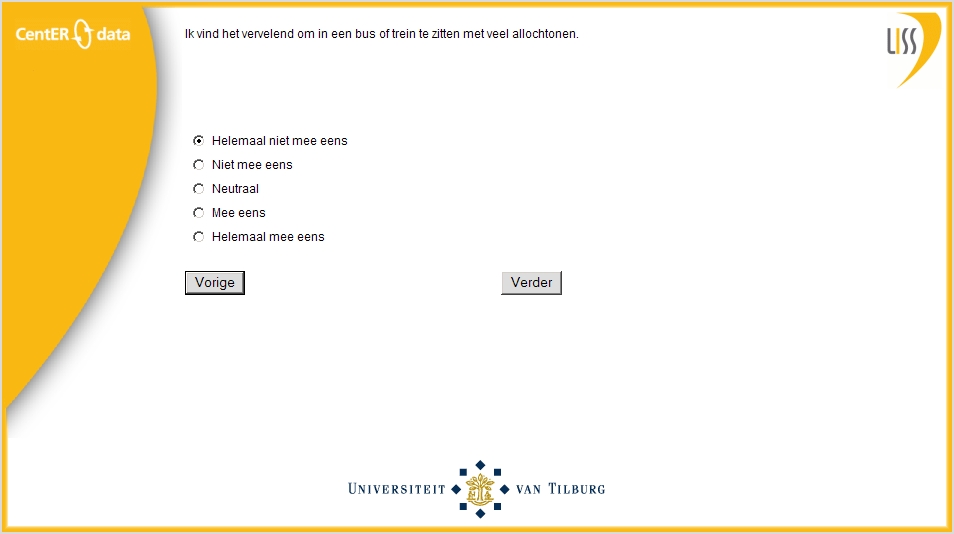

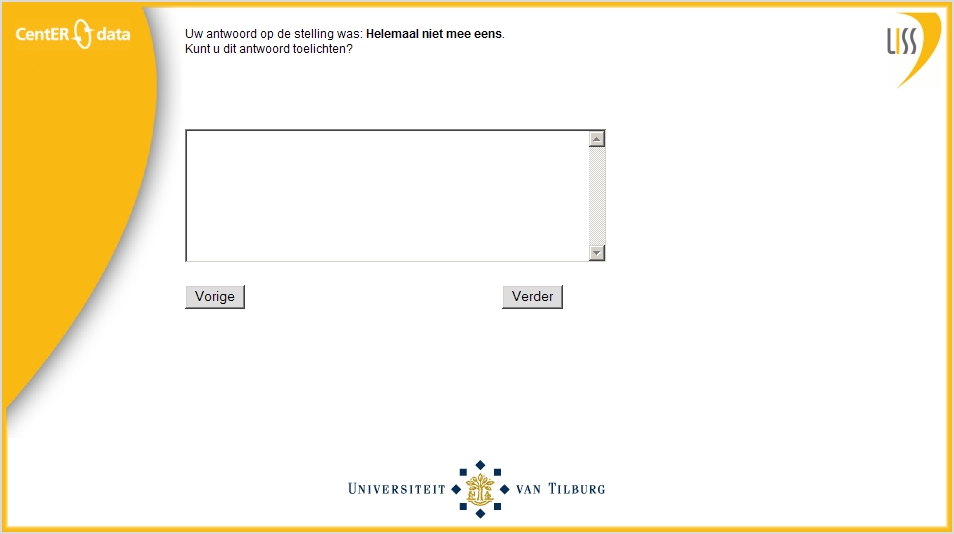

The first experiment was fielded in August 2009. A total of 8,026 panel members were selected for the survey, and 4,639 completed it, for a completion rate of 57.8%. Roughly two-thirds of eligible panel members were randomly assigned to the control condition, with the remaining one-third assigned to the experimental condition. After removing ineligibles and a small number of breakoffs (9), we are left with 4,363 observations for analysis. In the control condition, the items were presented one at a time on separate screens or Web pages (i.e., 10 pages), while in the experimental condition, each item was followed on the next page by an open text field offering the respondent the opportunity to elaborate on their answer (i.e., 20 pages). In this condition, the introduction to the series included the statement: “After each response, you can explain your response on the next screen.” The wording on the follow-up screen read: “Your response to this statement was [xxx]. Can you explain this answer?” Answers were required for both the closed-ended and the follow-up open-ended questions. See Figure 1 and Figure 2 for screenshots from Experiment 1.

Figure 1: Screenshot of Closed Question, Experiment 1

Figure 2: Screenshot of Open Question, Experiment 1

Experiment 1 was not implemented exactly as intended, because of software restrictions at the time. First, the open field was meant to appear below the relevant closed-ended question (not on a separate page). Second, the open field was intended to be optional, not required. For these reasons, we repeated the study following an update to the survey software.

The second experiment was fielded in December 2010. A total of 7,328 panel members were selected for the survey, with 5,328 completing it (a further 8 panelists broke off), for a completion rate of 72.7%. Half of the sample was randomly assigned to each of the experimental and control conditions. In the second experiment, the open comment field appeared below the closed-ended question on the same page, and answers to the open question were not required. Figure 3 shows one example item from Experiment 2.

Figure 3: Screenshot of Closed and Open Question, Experiment 2

Analysis and Results

We discuss the results of the two experiments in turn. For Experiment 1, Cronbach’s alpha for the 10-item scale is high (0.876), so we examine both the combined measure and the individual items. Contrary to expectation, we find significantly lower prejudice scores for those who got the open question (F[1, 4352]=25.6, p<.0001), although the effect size is small (Cohen’s d ≈ 0.16; Cohen, 1988). The results are shown in Table 2.

Table 2. Means of Prejudice Scores by Experimental Condition, Experiments 1 and 2

| Prejudice Scores | No comment field: Mean | No comment field: (s.e.) | Comment field: Mean | Comment field: (s.e.) |
| --- | --- | --- | --- | --- |
| Experiment 1 | 3.31 | (0.013) | 3.20 | (0.016) |
| Experiment 2 | 3.31 | (0.013) | 3.25 | (0.014) |
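For readers unfamiliar with the reliability coefficient reported for the 10-item scale, the following sketch shows how Cronbach's alpha is conventionally computed from a respondents-by-items matrix of already reverse-scored responses. The function name and the toy matrix are illustrative assumptions; the matrix is made up and is not LISS data.

```python
from statistics import variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of scored items.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("need at least 2 respondents and 2 items")
    k = len(rows[0])  # number of items
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy, made-up data: 4 respondents x 3 items (not from the study).
toy_matrix = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 1],
    [3, 3, 4],
]
alpha = cronbach_alpha(toy_matrix)
print(round(alpha, 3))
```

Values close to 1 indicate that the items move together, which is the usual justification for analysing a combined scale score alongside the individual items.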

Looking at the individual items (not shown in Table 2), all ten items show the same negative trend (lower prejudice scores in the group with the comment field than in the group without the comment field), with seven of the differences reaching statistical significance (p<.05). The first item, which respondents answered before their first exposure to the experimental manipulation, did not show a significant difference (p=.47), suggesting that the randomization to experimental conditions was effective. A multivariate analysis of variance (MANOVA) of the ten items yields a similar result (Wilks’ Lambda=0.973, F[10, 4343]=11.98, p<.0001). Also contrary to expectation, among those who got the comment field, we find a small but significant negative correlation (r=−0.08, p<.01) between the number of words entered and the prejudice score. That is, those with higher prejudice scores entered fewer words, on average.

Given the surprising finding, we wondered if the implementation of the experiment may have produced the unexpected result. So we replicated the experiment, as noted above. Cronbach’s alpha for the ten-item measure was similarly high (0.894) in the second implementation. The mean differences in prejudice scores again show significant effects (F[1, 5326]=7.1, p=0.0077), again with a small effect size (Cohen’s d ≈ 0.073). However, the direction of the effect is again opposite to what we hypothesized, with lower prejudice scores for those who got the optional comment field (see Table 2). All ten individual items show the same negative trend (with seven significant at p<.05), and a MANOVA yielded similar results (Wilks’ Lambda=0.973, F[10, 4343]=11.98, p<.0001).
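The effect sizes quoted for the two experiments can be approximately recovered from the reported F statistics, because with two groups F equals the squared t statistic and d = t·sqrt(1/n1 + 1/n2). The sketch below is a back-of-the-envelope check under stated assumptions, not the authors' computation: the group sizes are inferred from the error degrees of freedom and the described allocation, and the exact per-group counts are not given in the note.

```python
import math


def cohens_d_from_f(f_stat: float, n1: int, n2: int) -> float:
    """Absolute Cohen's d implied by a two-group, one-way ANOVA F statistic.

    With two groups F = t**2, and d = t * sqrt(1/n1 + 1/n2),
    so d = sqrt(F * (n1 + n2) / (n1 * n2)).
    """
    if f_stat < 0 or n1 < 1 or n2 < 1:
        raise ValueError("F must be non-negative and group sizes positive")
    return math.sqrt(f_stat * (n1 + n2) / (n1 * n2))


# Experiment 2: F[1, 5326] = 7.1 implies N = 5328; equal halves are assumed.
d_exp2 = cohens_d_from_f(7.1, 2664, 2664)
print(round(d_exp2, 3))  # 0.073

# Experiment 1: F[1, 4352] = 25.6 implies N = 4354; an approximate
# one-third / two-thirds split (1451 vs. 2903) is assumed here.
d_exp1 = cohens_d_from_f(25.6, 1451, 2903)
print(round(d_exp1, 2))  # 0.16
```

The formula is symmetric in the two group sizes, so the check does not depend on which condition received the larger share of the sample.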

Given that the comment field did not require an answer in Experiment 2, we can look at this in a little more detail. Among those in the experimental group, 37% entered at least one comment, while only 4.1% entered comments in all ten fields. Again, among those who got the comment field, we find a significant (p<.0001) negative correlation (r=−0.20) between the number of words entered in the comment fields and the prejudice score. In this group, the mean prejudice score was significantly (F[1, 2629]=8.85, p=0.0030) lower for those who entered any comments (mean=3.20, s.e.=0.023) than for those who entered no comments (mean=3.29, s.e.=0.017), again contrary to expectation.
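The correlation between comment length and prejudice described above could be computed along the following lines. This is a sketch under assumptions: counting whitespace-separated words is one plausible way to measure comment length (the note does not specify the tokenization), and the respondents, comments, and scores below are invented for illustration and are not LISS data.

```python
import math


def word_count(comments: list[str]) -> int:
    """Total whitespace-separated words across one respondent's comments."""
    return sum(len(comment.split()) for comment in comments)


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences.

    Raises ZeroDivisionError if either sequence has zero variance.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Toy, made-up respondents: (comments entered, prejudice scale score).
toy = [
    (["it depends on the situation", "not always"], 2.4),
    ([], 3.6),  # a respondent who left every comment field empty
    (["no"], 3.3),
    (["everyone deserves a fair chance here"], 2.9),
]
words = [float(word_count(comments)) for comments, _ in toy]
scores = [score for _, score in toy]
r = pearson_r(words, scores)
print(words, round(r, 2))
```

Respondents who skip every optional field contribute a word count of zero, so they remain in the correlation rather than being dropped.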

As a check on the coding of the dependent variable, we regressed the prejudice scores on a series of background variables. As expected, education has a significant (p<.0001) negative association with prejudice towards immigrants, as does urbanicity (p<.001). Gender is also significantly (p<.0001) associated with prejudice, with women having lower prejudice scores than men, while age has a curvilinear association (p=0.012), with those aged 45-54 reporting lower levels of prejudice than those in younger or older age groups. We also tested interactions of the experimental manipulation with each of these demographic variables, and find only one significant interaction, with the difference between the two experimental groups being larger for men than for women. In other words, the unexpected findings do not appear to be due to errors in coding of the responses to the scale items.

Finally, could the negative correlation between the number of words entered and prejudice be explained by education? Given that education is negatively correlated with prejudice, is it that better-educated respondents are making more use of the comment fields? We find no evidence of this. There is no significant association between education and whether any comments were entered, and there is a curvilinear relationship between education and the length of comments, with those in the middle education groups entering longer comments than those with lower or higher education. In a multivariate model, we find no interaction of education and number of words entered on prejudice scores, among those who got the comment field.

Discussion

Across two experiments conducted in the same population, we find no support for the hypothesis that offering the opportunity to clarify, justify, or explain a response would lead to higher reports of socially undesirable attitudes – in this case, negative attitudes towards immigrants. Contrary to expectation, we find significant effects in the opposite direction, that is, those offered the opportunity to clarify their closed-ended responses, and those who availed themselves of the opportunity, expressed significantly more positive attitudes towards immigrants in the Netherlands. We also find that those who entered longer comments had lower levels of prejudice. What may account for these unexpected results?

First, the panel nature of the sample may have affected the results. LISS panel members have been asked questions of this type at various points before, and may be comfortable revealing their views on immigrants. In other words, would the same results be found in a cross-sectional sample, where respondents may have less trust in the survey organization or less comfort answering questions such as these?

Second, the questions themselves might not be particularly threatening or susceptible to social desirability biases. In general, the more threatening a question is, the more susceptible it is likely to be to social desirability bias, and hence the more likely it might be affected by the experimental manipulation. The questions used by Krysan and Couper (2003) ask more directly about overt racism, and hence may be more subject to social desirability bias than the items used here.

Third, the mode of data collection may be a factor. The need to explain one’s answers may be smaller in an online self-administered environment than in one where an interviewer is present. There is evidence that people are more willing to disclose sensitive information in Web surveys than in other survey modes (e.g., Kreuter, Presser, and Tourangeau, 2008).

However, all three of these possible explanations would at most account for a lack of effect of the experimental manipulation. They do not explain why we find significant, albeit small, effects in both studies. Finally, the initial hypothesis may of course be wrong. The results, replicated in two similar but not identical experiments in the same population, suggest that the addition of the comment field has some effect on the level of prejudice reported, just not in the direction expected.

One alternative explanation may be that having people reflect on their answers (by asking them to explain their choices) may increase self-reflection and editing of responses, thereby decreasing the reporting of negative or stereotyped attitudes. Some support for this comes from the experimental literature on prejudice (e.g., Devine, 1989; Devine and Sharp, 2009; Dovidio, Kawakami, and Gaertner, 2002; Monteith and Mark, 2005; Powell and Fazio, 1984; Wittenbrink, Judd, and Park, 1997) which suggests that stereotypes may be activated automatically, and that the expression of less-prejudiced views takes conscious effort. In other words, faster responses may simply be more prejudiced responses. We find little evidence for this hypothesis using indirect indicators in our data. In an analysis of timing data from the second experiment, we find no correlation (r=-0.018, n.s.) between the time taken to answer these 10 questions and the level of prejudice, among those who did not get the comment fields.

Without knowing what the respondents’ “true” attitudes are, we can only surmise about the underlying mechanisms and the direction of the shift (whether the comment fields produce more socially desirable responding or more closely reflect underlying beliefs). Disentangling these effects requires further research.

References

1. Breugelmans, Seger M., and Fons J.R. van de Vijver (2004), “Antecedents and Components of Majority Attitudes toward Multiculturalism in the Netherlands.” Applied Psychology, 53 (3): 400-422.

2. Breugelmans, Seger M., Fons J.R. van de Vijver, and Saskia Schalk-Soekar (2009), “Stability of Majority Attitudes toward Multiculturalism in the Netherlands Between 1999 and 2007.” Applied Psychology, 58 (4): 653-671.

3. Cohen, Jacob (1988), Statistical Power Analysis for the Behavioral Sciences, 2nd ed., Hillsdale, NJ: Lawrence Erlbaum Associates.

4. Couper, Mick P. (2008), “Technology and the Survey Interview/Questionnaire.” In Michael F. Schober and Frederick G. Conrad (eds.), Envisioning the Survey Interview of the Future. New York: Wiley, pp. 58-76.

5. Devine, Patricia G. (1989), “Stereotypes and Prejudice: Their Automatic and Controlled Components.” Journal of Personality and Social Psychology, 56 (1): 5-18.

6. Devine, Patricia G., and Lindsay B. Sharp (2009), “Automaticity and Control in Stereotyping and Prejudice.” In Todd D. Nelson (ed.), Handbook of Prejudice, Stereotyping, and Discrimination. New York: Taylor and Francis, pp. 61-88.

7. Dovidio, John F., Kerry Kawakami, and Samuel L. Gaertner (2002), “Implicit and Explicit Prejudice and Interracial Interaction.” Journal of Personality and Social Psychology, 82 (1): 62-68.

8. Kreuter, Frauke, Stanley Presser, and Roger Tourangeau (2008), “Social Desirability Bias in CATI, IVR, and Web Surveys: The Effects of Mode and Question Sensitivity.” Public Opinion Quarterly, 72 (5): 847-865.

9. Krysan, Maria, and Mick P. Couper (2002), “Measuring Racial Attitudes Virtually: Respondent Reactions, Racial Differences, and Race of Interviewer Effects.” Paper presented at the annual conference of the American Association for Public Opinion Research, St. Petersburg Beach, FL, May.

10. Krysan, Maria, and Mick P. Couper (2003), “Race in the Live and Virtual Interview: Racial Deference, Social Desirability, and Activation Effects in Attitude Surveys.” Social Psychology Quarterly, 66 (4): 364-383.

11. Krysan, Maria, and Mick P. Couper (2006), “Race-of-Interviewer Effects: What Happens on the Web?” International Journal of Internet Science, 1 (1): 5-16.

12. Monteith, Margo J., and Aimee Y. Mark (2005), “Changing One’s Prejudiced Ways: Awareness, Affect, and Self-Regulation.” European Review of Social Psychology, 16: 113-154.

13. Powell, Martha C., and Russell H. Fazio (1984), “Attitude Accessibility as a Function of Repeated Attitudinal Expression.” Personality and Social Psychology Bulletin, 10 (1): 139-148.

14. Schalk-Soekar, Saskia, Seger M. Breugelmans, and Fons J.R. van de Vijver (2008), “Support for Multiculturalism in the Netherlands.” International Social Science Journal, 59 (192): 269-281.

15. Scherpenzeel, Annette, and Marcel Das (2011), “‘True’ Longitudinal and Probability-Based Internet Panels: Evidence from the Netherlands.” In Marcel Das, Peter Ester, and Lars Kaczmirek (eds.), Social Research and the Internet. New York: Taylor and Francis, pp. 77-104.

16. Tourangeau, Roger, and Ting Yan (2007), “Sensitive Questions in Surveys.” Psychological Bulletin, 133 (5): 859-883.

17. Wittenbrink, Bernd, Charles M. Judd, and Bernadette Park (1997), “Evidence for Racial Prejudice at the Implicit Level and Its Relationship With Questionnaire Measures.” Journal of Personality and Social Psychology, 72 (2): 262-274.