Merchant Steering of Consumer Payment Choice: Lessons Learned From Consumer Surveys

Shy, O., & Stavins, J. (2014). Merchant Steering of Consumer Payment Choice: Lessons Learned from Consumer Surveys, Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=3008

© the authors 2014. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Recent policy changes in the U.S. allow merchants to influence consumers’ choice of payment instruments by offering price discounts and other incentives. This report describes lessons learned from using consumer survey responses to assess whether merchants tried to influence buyers’ choice of payment method. To measure the effects of these recent policy changes, we included questions about merchant steering in pilot versions of a new diary survey of U.S. consumers. Our findings were inconclusive because some respondents interpreted the questions differently from the way we intended. We improved the questions in the subsequent, full-sample survey in 2012. This paper explains why the initial pilot diary survey failed to deliver the desired results and shows how the revised questions led to better survey responses. We find that the interpretation of a survey depends on the way the questions are asked. Suggestions for further improvements on using surveys to evaluate the effects of policy changes are also included.

Keywords

Consumer surveys, diary surveys, merchant steering, payment choice

Acknowledgement

We thank an editor, two anonymous referees, Kevin Foster, and Marcin Hitczenko for their comments and suggestions that greatly improved the paper. We also thank Vikram Jambulapati and William Murdock III for excellent research assistance, Scott Schuh for his guidance and comments on earlier drafts, and the diary team for their input. The views expressed in this paper are those of the authors and do not necessarily represent the views of the Federal Reserve Bank of Boston or the Federal Reserve System.

1. Introduction

Until recently, credit card networks prohibited merchants in the United States from giving price discounts on debit card purchases or surcharging consumers on any card transactions. Recent legislation and court settlements in the United States allow merchants to offer price discounts intended to steer customers toward means of payment that are preferred by the merchants—typically payment instruments that are less costly for them to accept. Steering may also involve disclosing merchant fees, for example by posting signs at the store or online.

Anticipating these legislative and regulatory changes, we included three questions in the 2010 and 2011 pilots of the Diary of Consumer Payment Choice (DCPC). The DCPC is a collaborative effort of the Federal Reserve Banks of Boston, Richmond, and San Francisco that complements and extends the Federal Reserve Bank of Boston’s Survey of Consumer Payment Choice. The questions were intended to learn whether buyers were being steered by merchants toward using payment methods that are less costly to merchants but may not be preferred by the buyers. The diary is representative of U.S. consumers, but the samples in the two pilot surveys were small.

Our initial attempts to use the diary survey to extract information from consumers about whether merchants were influencing their payment choices were not very successful. As we show below, some respondents were confused by some of the questions and provided inconsistent answers. In other cases, respondents interpreted the questions in ways that differed from what we had intended. In the hope that economists and survey methodologists will be able to learn from our experience, we analyze some preliminary findings on the frequency and direction of merchants’ attempts to influence consumer payment choices as reported by the respondents who participated in our pilot diary surveys.

Survey methodologists may find our experience useful for future surveys that involve an examination of a policy implementation. In particular, we find that asking a small number of short questions may not reveal accurately whether the policy has had any effect. Moreover, we find that this problem becomes more severe when multiple parties are involved, such as consumers and merchants, as is the case with payment methods. Each payment must be approved by both the consumer and the merchant, so responses from a survey of only one side (consumers) about the actions of the other side (merchants) are not adequate. It is difficult to learn anything about the motivation for a particular response, because the respondents (here consumers) may mix their own preferences with the preferences of the other party (here merchants). This problem occurs when the surveyor has access to only one side of a multi-party transaction.

2. Motivation for conducting the survey

Although the new pieces of legislation introduced substantial changes to the way merchants are allowed to differentiate prices, very little is known about how these new policies have been or will be implemented. In particular, we do not know whether or how they will affect actual merchant steering attempts and whether merchants will actually differentiate their prices according to different payment methods. For this reason, we decided to include three questions about merchant steering in the DCPC pilots.

Credit cards have traditionally been considered the payment instrument that is most costly to merchants, with the largest component of that cost attributable to the high interchange fees merchants pay to card issuers. Merchants may therefore have an incentive to steer their customers away from credit cards and toward less costly payment instruments, such as cash or debit.

The goal of this paper is to identify mistakes that can be made in consumer surveys and ways to correct them. We do so by examining the respondents’ answers to the 2010 and 2011 diary surveys. We found that interpretations of the survey questions varied across respondents. We then show how we fixed some of the ambiguities in a new 2012 diary survey. Survey methodologists designing consumer surveys might benefit from our analysis because problems that arise in surveys on consumer choice may appear in other types of surveys. We show how cognitive interviews can provide a helpful tool to identify respondents’ interpretation of survey questions and how ambiguous survey questions can be avoided.

3. Diary data

To examine whether merchants attempt to influence consumers’ choice of payment method by giving buyers price discounts or other incentives, we use data from the Diary of Consumer Payment Choice (DCPC). Pilot versions of the diary, administered in 2010 and again in 2011, were designed to obtain detailed information on all transactions made by consumers within a designated three-day period. Following the two pilot diary surveys, a revised diary survey was administered to a much larger sample of respondents in October 2012.

The DCPC collects data on the dollar value, payment instrument used, and type of expense (consumer expenditure category) for each purchase and bill payment, to complement the information collected in the Survey of Consumer Payment Choice (SCPC). For details on the SCPC surveys, see Foster et al. (2011). Because the diary respondents also participate in the SCPC, we have access to a wide variety of information about them. Respondents were spread over the entire month of October, with each group of respondents recording its transactions over three consecutive days. The data were weighted to represent all U.S. adults (ages 18+). The sampling weights were created by a raking procedure to match the sample in the diary to the U.S. population, based on gender, race, age, education, household income, and household size from the Current Population Survey (CPS). Weighted aggregate results were constructed by standardizing the weights by the number of days respondents were observed. Payment instruments recorded by the respondents include cash, checks, credit cards, PIN and signature debit cards, prepaid cards, bank account payments, money orders, and more.
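For readers unfamiliar with raking, the following is a minimal sketch of the general technique (iterative proportional fitting), not the procedure actually used to build the DCPC weights. The function name `rake`, the two demographic variables, the category labels, and the target shares are all hypothetical and chosen only for illustration.

```python
def rake(rows, targets, max_iter=100, tol=1e-8):
    """Iterative proportional fitting over categorical margins.

    rows    : list of dicts, one per respondent (hypothetical variables)
    targets : {variable: {category: population share}}; shares for each
              variable are assumed to sum to 1 and to cover every category
              that appears in rows
    Returns one weight per respondent; the weights sum to len(rows).
    """
    weights = [1.0] * len(rows)
    for _ in range(max_iter):
        max_change = 0.0
        for var, shares in targets.items():
            total = sum(weights)
            # Current weighted total in each category of this variable.
            current = {}
            for row, w in zip(rows, weights):
                current[row[var]] = current.get(row[var], 0.0) + w
            # Rescale so this variable's margin matches its target shares.
            for i, row in enumerate(rows):
                factor = shares[row[var]] * total / current[row[var]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        if max_change < tol:
            break
    return weights


def weighted_share(rows, weights, var, category):
    """Weighted share of respondents falling in one category."""
    hit = sum(w for row, w in zip(rows, weights) if row[var] == category)
    return hit / sum(weights)


# Hypothetical five-person sample and made-up population shares.
rows = [
    {"gender": "F", "age": "18-34"},
    {"gender": "F", "age": "35+"},
    {"gender": "M", "age": "18-34"},
    {"gender": "M", "age": "35+"},
    {"gender": "M", "age": "35+"},
]
targets = {
    "gender": {"F": 0.5, "M": 0.5},
    "age": {"18-34": 0.3, "35+": 0.7},
}
weights = rake(rows, targets)
```

After raking, the weighted sample margins for each variable match the target shares up to the convergence tolerance. A production procedure would typically also handle empty cells and trim extreme weights, which this sketch omits.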

The two pilot diaries used relatively small samples: 353 respondents participated in the 2010 DCPC and 389 respondents participated in the 2011 DCPC. In the 2012 diary (the first full implementation), there were 2,468 respondents. This diary recorded 14,772 transactions (purchases and bill payments). The steering analysis excludes bill payments, leaving a sample of 12,584 transactions.

3.1 Measuring the effects of policy change

In addition to asking respondents to provide detailed information about each transaction, we also included questions designed to measure whether merchants took advantage of recent policy changes by trying to influence their customers’ payment choice. It is important to emphasize that the DCPC is a consumer survey and not a survey of merchants. Therefore, the aim was to solicit information from consumers about actions taken by merchants, bearing in mind that respondents’ perspectives might differ from merchants’ perspectives. We did not expect consumers to understand or even to be aware of the recent policy changes; we simply asked them to record their experiences while conducting transactions with the merchants.

In the 2010 and 2011 pilot surveys, respondents were asked to answer the following questions about each transaction:

Question 1: Did the merchant accept the payment method you most preferred to use for this purchase? (If yes, please leave blank. Otherwise, please indicate the payment method you most preferred to use.)

Objective: This question was designed to assess potential steering of consumer payment choices by merchant acceptance decisions. If the merchant accepts the buyer’s most preferred payment instrument, but the buyer pays with a different instrument, we may be able to infer that the merchant was able to influence the buyer’s payment choice. As pointed out by a reviewer of this paper, some people tend to be satisfied with what is offered to them, partly because of cognitive dissonance. That presents a methodological problem as well as a theoretical problem related to “rational choice” bias.

Question 2: Did the merchant try to influence your choice of payment method by offering discounts or incentive programs, posting signs, or refusing to accept certain payment methods? (Please circle Y for yes or N for no.)

Objective: This question was intended to get consumers’ perspectives on whether merchants actively influenced buyers by steering them toward less costly payment methods. Note that a “Yes” answer does not imply that the merchant successfully steered the customer to another payment method, only that he tried to influence the customer’s choice of payment. This question was intended to measure directly which merchants try to steer their customers and how often.

Question 3: Did the merchant give you a discount on your purchase for the payment method you used? (Please circle Y for yes or N for no.)

Objective: This question asked about a very specific method of steering, namely about providing price discounts for using less-costly payment instruments. Discounting for payment choice was not widespread.

3.2 Cognitive interviews: How did the respondents interpret the questions?

In order to understand how the respondents had assessed the diary questions, the Federal Reserve Bank of San Francisco and the RAND Corporation commissioned cognitive interviews conducted with 20 of the diary respondents. Cognitive interviews are the dominant mode of testing questions and questionnaires. In fact, the list of guidelines for pretesting questionnaires in Dillman et al. (2009, p. 233) includes a recommendation to “conduct cognitive interviews of the complete questionnaire in order to identify wording, question order, visual design, and navigation problems.” Presser et al. (2004, Ch. 2–5) provide extensive discussions of cognitive interviews.

The main goal of the cognitive interviews was to identify potential confusion about the payment diary and misinterpretation of the questions, and to solicit respondents’ feedback about the clarity of instructions, questions, and categories of payment methods and merchants. Interviewees were also asked whether the diary booklet had provided a good memory aid to assist in accurately recording transactions. Because the 2010 and 2011 diaries were pilot surveys, we used the feedback we received from these interviews to improve the design of the 2012 diary.

The interviews revealed that respondents had interpreted the three questions in a variety of ways. For example, some respondents had answered “No” to Question 1 if a merchant did not accept Discover cards or “Yes” to Question 2 if a merchant offered a discount for using a store-branded credit card. These examples indicate that at least some respondents had interpreted the questions as referring to specific types of payment cards, rather than to the entire category of credit cards, as the question had intended.

4. Were the pilot diary questions successful in soliciting the desired information?

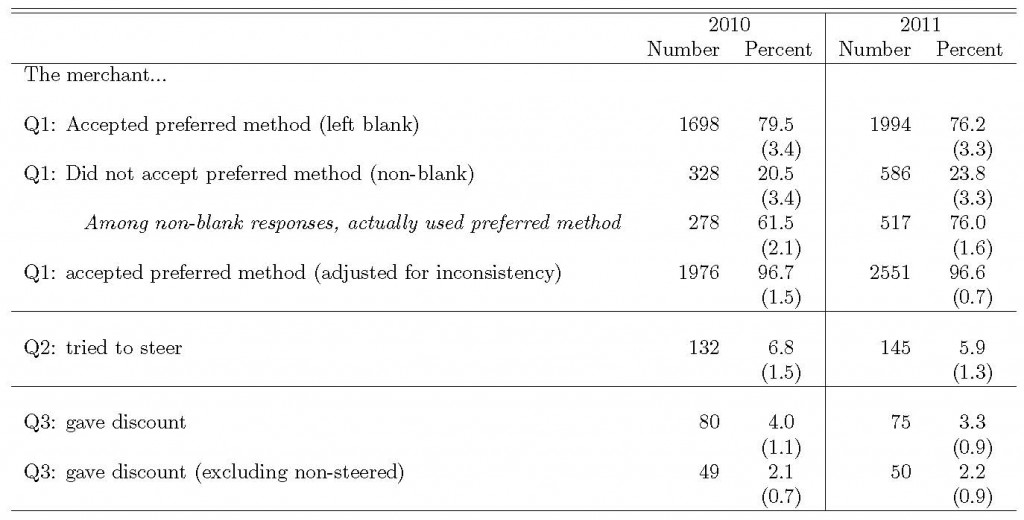

This section interprets the responses to the three questions described above. The diary questions served as a first attempt to analyze whether and, if so, how merchants steer buyers’ payment choice. Table 1 summarizes diary respondents’ answers to Questions 1–3, for both 2010 and 2011. Note that the data displayed in the tables are weighted to represent all U.S. adults (ages 18+), as described above.

For Question 1, the diary instructions asked respondents whose preferred payment method had been accepted to leave the question blank. In 2010, for about 80 percent of transactions the answer to Question 1 was left blank and for 20 percent of transactions the answer was not left blank. In 2011, the numbers were 76 percent and 24 percent, respectively. However, as Table 1 shows, the majority of transactions where the answer was not left blank were conducted using the respondent’s preferred payment method: 61.5 percent of transactions in 2010 and 76 percent in 2011. These respondents stated that the payment method they used was in fact their preferred method. This inconsistency (and more to follow) provides the main motivation for writing this paper because such inconsistencies should have been anticipated at the preparation stage of our diary survey.

Consequently, in cases where respondents stated that a merchant did not accept their preferred payment method but also stated that they used their preferred payment method, we corrected those responses and included them together with the responses of those who left the question blank. After correcting for the misinterpretations of question 1, we find that for over 96 percent of transactions the preferred payment method was accepted by the merchant.
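The correction rule for Question 1 described above can be sketched as follows. This is an illustrative reading of the rule, not the authors' actual processing code; the function name, the use of an empty string for a blank answer, and the example records are assumptions.

```python
BLANK = ""  # assumed encoding of a Question 1 answer that was left blank


def preferred_method_accepted(q1_answer, method_used):
    """Classify one transaction under the corrected Question 1 rule.

    q1_answer   : BLANK, or the payment method the respondent wrote in
                  as the one they would have preferred to use
    method_used : the payment method actually recorded for the transaction
    """
    if q1_answer == BLANK:
        # Per the diary instructions, blank means the preferred method
        # was accepted.
        return True
    if q1_answer == method_used:
        # Inconsistent response: the respondent named the method they
        # actually used, so it is grouped with the blank answers.
        return True
    # The respondent named a different method than the one used.
    return False


# Hypothetical records: (Question 1 answer, method actually used).
records = [
    ("", "cash"),            # left blank
    ("credit", "credit"),    # filled in, but with the method used
    ("credit", "cash"),      # preferred credit, paid with cash
]
accepted = [preferred_method_accepted(q1, used) for q1, used in records]
```

Only the third record is counted as a transaction where the preferred method was not accepted.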

Table 1: Reported merchant behavior regarding influencing consumer payment choices: 2010 and 2011 (transactions, by response to questions)

Notes: This table shows the number and weighted percent at the transaction level. Heteroscedasticity-consistent Huber-White robust standard errors reported in parentheses. In Question 1, respondents whose preferred payment method was accepted were instructed to leave the question blank. If respondents stated that a merchant did not accept their payment method, but also stated that they used their preferred payment method, we corrected those responses and included them with those from respondents who left the question blank. In Question 2, steering was defined as including discounts, while Question 3 asked specifically about discounts. Some respondents answered “No” to the steering question (Question 2), but “Yes” to the discount question (Question 3). If respondents said “No” to Question 2 (no steering), we assumed the answer should also be “No” to Question 3 (no discounts).

Question 2 asked about steering attempts (including discounts), whereas Question 3 asked specifically about discounts. Some respondents answered “No” to the steering question (Question 2), but “Yes” to the discount question (Question 3). We corrected those responses and assumed that if a merchant did not try to steer a consumer, no discount was offered. As Table 1 shows, by excluding the transactions for which no steering took place, the number of transactions for which a discount was given dropped from the reported 3–4 percent to about 2 percent. In other words, a “No” response to Question 2 means that there should also be a “No” response to Question 3. After these adjustments, the pilot DCPC indicates the following:

(a) In over 96 percent of transactions, merchants accepted the payment preferred by the buyer.

(b) Merchants attempted to steer buyers in about 6–7 percent of the recorded transactions.

(c) Merchants gave discounts on the payment instrument used in approximately 3–4 percent of the transactions, but we believe that only 2 percent of the transactions were offered discounts based on the choice of payment method.

Steering was not widely reported by the respondents, and price discounts were even less common. Both actions are costly to merchants (Briglevics and Shy, Forthcoming) and may be confusing to consumers, as discussed above.
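The consistency adjustment between Questions 2 and 3 described above can be illustrated with a short sketch. The field names, weights, and the four example transactions are hypothetical; the rule itself (a “No” to Question 2 forces a “No” to Question 3) is the one stated in the text.

```python
def apply_consistency_rule(transactions):
    """Add an adjusted discount flag: a discount counts only if the
    respondent also reported a steering attempt (Question 2 = Yes)."""
    adjusted = []
    for t in transactions:
        row = dict(t)
        row["q3_adjusted"] = t["q2_steered"] and t["q3_discount"]
        adjusted.append(row)
    return adjusted


def weighted_share(transactions, flag):
    """Weighted share of transactions for which `flag` is True."""
    total = sum(t["weight"] for t in transactions)
    flagged = sum(t["weight"] for t in transactions if t[flag])
    return flagged / total


# Hypothetical weighted transactions (not diary data).
transactions = [
    {"weight": 1.0, "q2_steered": False, "q3_discount": False},
    {"weight": 1.0, "q2_steered": True,  "q3_discount": True},
    {"weight": 1.0, "q2_steered": False, "q3_discount": True},   # inconsistent
    {"weight": 1.0, "q2_steered": True,  "q3_discount": False},
]
adjusted = apply_consistency_rule(transactions)
reported_share = weighted_share(adjusted, "q3_discount")
adjusted_share = weighted_share(adjusted, "q3_adjusted")
```

In this toy sample the adjusted discount share is lower than the reported share because the inconsistent transaction is no longer counted, which mirrors the direction of the change reported in Table 1.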

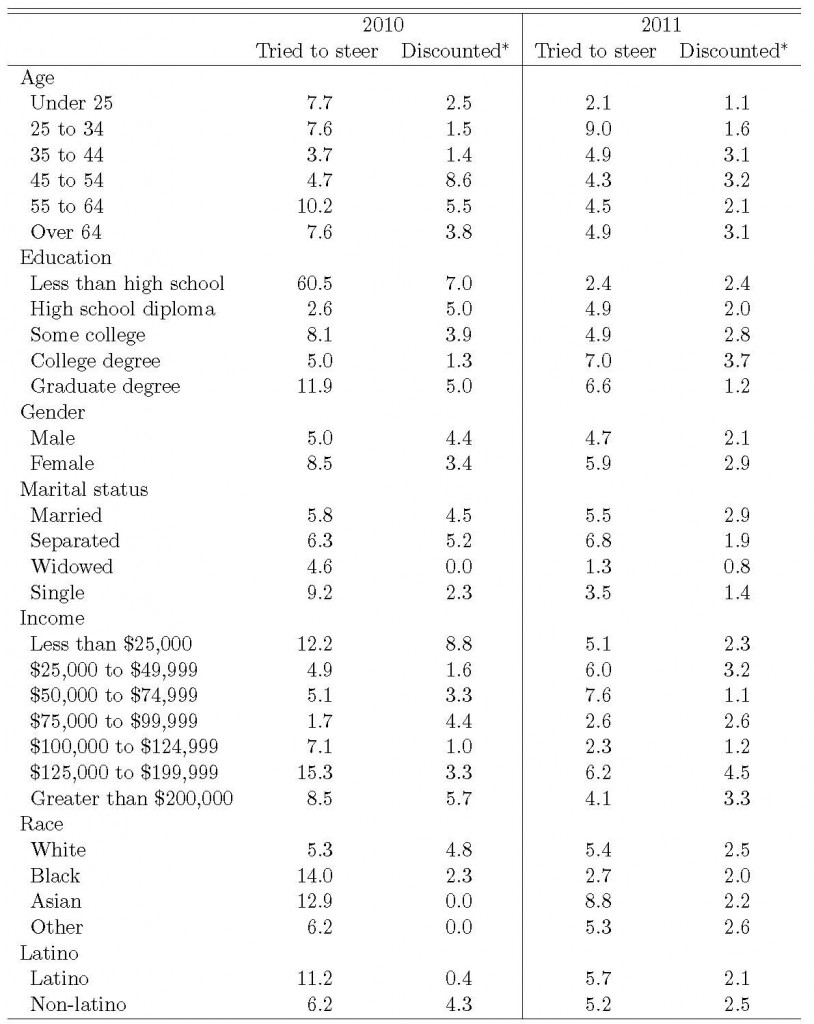

Table 2 displays responses to Question 2 (steering) and Question 3 (price discounting) by payment method.

Notes: * includes all transactions for which the respondents answered “Yes” to Question 3, which asked whether the merchant gave a discount for the payment method used.

Because credit cards are typically considered the most expensive payment method for merchants (Garcia-Swartz, Hahn, and Layne-Farrar 2006), we expected to find evidence of merchants’ attempting to steer consumers to pay using cash or debit instead of credit. If merchants successfully persuaded consumers not to use credit cards, the percentage of transactions where credit cards were used in the steered column (2) would be lower than the percentage of transactions in the non-steered column (1).

However, the pilot diary results were mixed. The 2010 results did not confirm our hypothesis: The percentage of steered transactions for which consumers used a credit card in 2010 (21.4 percent, column 2) is higher than the percentage of nonsteered transactions for which consumers used a credit card (16.9 percent, column 1). Note that the numbers indicate the payment instrument that was actually used for the transaction, regardless of whether the consumer initially wanted to use something else. There are several possible explanations for this finding: (a) merchants tried to steer consumers away from credit cards, but the steering attempts did not work; (b) merchants successfully steered consumers away from general-purpose credit cards toward private-label credit cards; (c) the transactions that were steered were conducted at different locations, with different merchants, and/or for different dollar values than the transactions that were not steered, and the share of transactions using credit cards would have been even higher had it not been for steering. The hypothesis suggested in (c) above was not confirmed by regression results which show that consumers were more likely to use a credit card than to use a debit card or cash in transactions that were steered in 2010, even when controlling for dollar amount.

Table 2 also shows that in 2011, the share of steered transactions that was conducted using credit cards is lower than the share of nonsteered transactions conducted using credit cards (11.4 percent vs. 15.5 percent), as we would expect. This could indicate that merchants were more successful in 2011 than in 2010 in persuading consumers not to use a credit card, but again the table does not control for the differences among merchants or transaction values. When we control for transaction values in regressions, there is no significant difference between the likelihood of using a credit card versus another payment method, for either steered or discounted transactions.
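The comparison of credit card shares between steered and nonsteered transactions discussed above amounts to computing a weighted share within each group. The sketch below shows that calculation on made-up data; the field names, weights, and resulting shares are hypothetical and are not taken from Table 2.

```python
def credit_share(transactions, steered):
    """Weighted share of transactions paid by credit card within the
    group of steered (True) or nonsteered (False) transactions."""
    group = [t for t in transactions if t["steered"] == steered]
    total = sum(t["weight"] for t in group)
    credit = sum(t["weight"] for t in group if t["method"] == "credit")
    return credit / total


# Hypothetical weighted transactions (not diary data).
transactions = [
    {"weight": 2.0, "steered": False, "method": "credit"},
    {"weight": 1.0, "steered": False, "method": "cash"},
    {"weight": 1.0, "steered": False, "method": "debit"},
    {"weight": 1.0, "steered": True,  "method": "credit"},
    {"weight": 3.0, "steered": True,  "method": "cash"},
]
nonsteered_share = credit_share(transactions, steered=False)
steered_share = credit_share(transactions, steered=True)
```

A lower share in the steered group than in the nonsteered group is the pattern the hypothesis predicts. As the text notes, such a raw comparison does not control for differences in merchants or transaction values.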

The results of the discount question displayed in Table 2 are counterintuitive in both years. If merchants offered discounts for not using credit cards—as we would expect—then the share of transactions that were discounted and in which credit cards were used would be lower than the share of transactions that were not discounted and in which credit cards were used. Instead, the opposite was reported: in 2010, 24.7 percent of transactions that were discounted were conducted using credit cards, compared with 16.9 percent of transactions that were neither steered nor discounted. In 2011, 17.4 percent of transactions that were discounted were conducted using credit cards, compared with 15.5 percent of transactions that were neither steered nor discounted. One possible explanation for this finding is that some merchants offer discounts for using their own private-label credit card, but not for general-purpose credit cards, while the diary does not track the exact type of credit card the consumer used. In fact, one of the cognitive interview participants specifically mentioned having been offered a discount for using a store credit card. A respondent who used such a credit card and received a discount might therefore state that he had received a discount for using a credit card, even though the discount would not have been offered for using a general-purpose credit card. This example shows that while the questions were intended to refer to the entire payment method category—all credit cards or all debit cards—respondents may have interpreted them differently. Because it is impossible to understand how each respondent interpreted the questions, we view these results as very preliminary and regard them as the first step in analyzing this important policy issue.

5. Revisions in the 2012 DCPC

After we discovered that the 2010 and 2011 diary questions about steering and discounting were ambiguous, we revised the questions that were included in the 2012 DCPC. We decided not to ask about merchant steering, as steering is not a well-defined concept. In addition, following the July 2012 proposed settlement in which Visa and MasterCard agreed to allow merchants to surcharge credit card transactions, we included a question about surcharging. Consequently, the new questions focus exclusively on discounts and surcharges. In addition, we added the word “specifically” to each question to guide respondents to report only the discounts or surcharges that were tied to the choice of payment method, rather than those offered or imposed for any other reason.

The new questions are as follows:

• If you used cash: Did you receive a discount from the merchant specifically for using cash? (Yes/No)

• If you used a debit card: Did you receive a discount from the merchant specifically for using this debit card? (Yes/No)

• If you used a credit card:

o Did you receive a discount from the merchant specifically for using this credit card? (Yes/No)

o Did you pay an extra charge, surcharge, or convenience fee to the merchant specifically for using this credit card? (Yes/No)

Even though the credit card question does not ask separately about store card versus general-purpose card, the diary does ask whether the respondent used Visa, MasterCard, American Express, or a Discover card, in the hope that the resulting responses will enable us to sort out the correct answers.

6. Preliminary analysis of the 2012 diary results

Because the steering questions were improved and asked about more specific merchant actions, the 2012 diary survey yielded much improved results. We analyze the preliminary 2012 results below.

6.1 Cash discounts

When asked whether they received a discount on a transaction specifically for using cash, only 1.7 percent of the respondents said yes. The fraction was higher for less educated and for lower-income consumers, although only the differences across income groups were statistically significant. A breakdown by transaction category shows that auto-related transactions had the highest fraction of cash discounts (6.6 percent) among the broad consumption categories. Looking at the finer merchant breakdown, we find that gasoline stations had the highest rate of transactions with cash discounts (8.7 percent), followed by clothing and accessories stores at 7.3 percent. The median value of a cash transaction where a consumer received a discount was $20, statistically significantly higher than the median value of a cash transaction with no discount ($7.49).

6.2 Debit card discounts

Debit card discounts were similarly rare: only 1.8 percent of debit transactions were discounted specifically for using a debit card. Less educated consumers were again more likely to receive a discount. Among the merchant categories, housing-related transactions were most likely to receive debit card discounts, with respondents stating that 11.3 percent of their housing-related transactions received a discount specifically for using a debit card. Those included a broad range of merchants: furniture and home goods stores, appliance and electronics stores, and hardware and garden stores. As was the case with cash discounts, the median value of a discounted debit transaction was significantly higher than the median value of a non-discounted debit transaction: $27.95 and $20.52, respectively.

6.3 Credit card discounts and surcharges

The 2012 survey responses to the discount questions are much better than the pilot responses. However, the answers to the credit card discount questions still indicate that the respondents interpreted the question differently from what we intended. We will continue to revise the credit card discount question in future versions of the survey. In addition to questions about discounts, we asked respondents whether they had to pay any surcharges or other fees for paying with their credit card. Because the credit card networks prohibited merchants from surcharging until recently, surcharging is still very rare and is even less common than cash discounts: only 1.2 percent of all credit card transactions were reported to have surcharges or other fees specifically for using that card. The youngest and least educated respondents reported the highest fraction of credit card transactions with charges or surcharges.

Looking at the finer merchant breakdown, we find that gas stations and tolls were the two payment categories with high rates of surcharging: 5.7 and 5.9 percent, respectively. Note that most gas stations post higher prices for credit cards than for cash, which can be interpreted as either a cash discount or a card surcharge. Therefore, it is possible that the same price difference that some respondents reported as a cash discount at gas stations was reported by others as a credit card surcharge. In addition, 13.7 percent of building service payments were reported to carry credit card surcharges, although the absolute number of transactions in that category is very small. As was the case with cash and debit discounts, the median value of a surcharged transaction was significantly higher than the median value of a non-surcharged transaction: $30 and $26, respectively.

7. Conclusions: How to ask survey questions

Policymakers need tools to be able to evaluate whether a given policy has had an effect in practice, and how that change has affected the targeted population. In the case discussed here, it is important to assess both: (1) whether merchants took advantage of the more flexible policies and provided price discounts for using or avoiding particular payment methods and, perhaps, some other steering incentives, and (2) whether and how consumers changed their actions in response to merchants’ new practices. In order to measure the effect using consumer survey responses, researchers must establish that consumers understand how the policy changes affect them.

We found problems with the way the initial pilot diary survey questions were formulated and evidence that the questions were interpreted differently by different respondents. While there are possible explanations for the responses we received, it was impossible to confirm or reject the validity of these responses based on the available data.

Question 1 in the pilot survey had another problem because asking the respondent to leave it blank was the equivalent of giving the answer “my preferred payment method was accepted.” We did this for space allocation reasons on the paper diary. The lesson that we learned is that “passive” responses like this should be avoided because there is no good way to distinguish between people who actually answered the question and those who just ignored it. If the dropdown list had included a category that allowed respondents to indicate that they used their preferred payment instrument, we might have avoided some of these issues. This is similar to why we try to avoid “check all that apply” questions and instead ask a series of yes/no questions. In check-all-that-apply, the negative response is a passive action. In contrast, yes/no questions have the advantage that the respondent must actively select “no” in order to give a negative response. In fact, the list of guidelines in Dillman et al. (2009, p. 150) recommends to “use forced-choice questions instead of check-all-that-apply questions.”

In order to assess the extent of merchant steering or price discounting based on payment method, and therefore to assess whether the policies that relaxed restrictions on merchants were effective in practice, we improved on the flawed 2010 and 2011 questions in the 2012 full-sample diary survey. We find that the interpretation of a survey depends on the way the questions are asked. Survey methodology literature provides some help in how to ask survey questions (for example, Dillman et al. 2009, Fowler 1995, Groves et al. 2009, Harrison and List 2004, Louviere et al. 2000, and Presser et al. 2004). In particular, Tourangeau et al. (2000, Sec. 2.4) discuss common semantic effects that can derail a survey question, such as presupposition, vagueness, unfamiliarity, and lack of exhaustiveness.

Our results show that—so far—few respondents were affected by merchant steering. Because such pricing behavior is rare, alternative methods should be considered to measure the extent to which merchants set prices that vary by payment instrument. Those methods might include discrete choice experiments to measure how consumers would react to discounts or surcharges. In addition, natural field experiments might be a useful method to test some of the merchant steering effects. Another option under consideration is conducting consumer focus groups or cognitive interviews, although the high cost of developing and administering such tools would likely result in small sample sizes. Because the DCPC was designed to be a representative survey of U.S. consumers but not necessarily a representative survey of U.S. merchants, developing surveys of merchants should also be considered. However, merchant surveys present challenges that must be carefully evaluated. For example, small merchants might be afraid to disclose their steering methods. In particular, small merchants who already impose surcharges on credit card transactions may be afraid to admit to that practice in a survey.

References

1. Briglevics, T., and O. Shy. Forthcoming. “Why Don’t Most Merchants Use Price Discounts to Steer Consumer Payment Choice?” Review of Industrial Organization.

2. Dillman, D., J. Smyth, and L. Christian. 2009. Internet, Mail, and Mixed-Mode Surveys: The Tailored Design Method. John Wiley & Sons, Inc., (3rd edition).

3. Foster, K., E. Meijer, S. Schuh, and M. Zabek. 2011. “The 2009 Survey of Consumer Payment Choice.” Federal Reserve Bank of Boston, Public Policy Discussion Paper No. 11-1.

4. Fowler, Floyd J. Jr. 1995. Improving Survey Questions: Design and Evaluation, Sage Publications.

5. Garcia-Swartz, D., R. Hahn, and A. Layne-Farrar. 2006. “The Move Toward a Cashless Society: Calculating the Costs and Benefits.” Review of Network Economics 5(2): 25–54.

6. Grant, R. 1985. “On Cash Discounts to Retail Customers: Further Evidence.” Journal of Marketing 49(1): 145–146.

7. Groves, Robert M., Floyd J. Fowler Jr., Mick P. Couper, James M. Lepkowski, Eleanor Singer, and Roger Tourangeau. 2009. Survey Methodology, Wiley.

8. Harrison, G., and J. List. 2004. “Field Experiments.” Journal of Economic Literature 42: 1009–1055.

9. Louviere, J., D. Hensher, and J. Swait. 2000. Stated Choice Methods. Analysis and Applications. Cambridge: Cambridge University Press.

10. Presser, S., J. Rothgeb, M. Couper, J. Lessler, E. Martin, J. Martin, and E. Singer. 2004. Methods for Testing and Evaluating Survey Questionnaires. John Wiley & Sons, Inc.

11. Tourangeau, R., L. Rips, and K. Rasinski. 2000. The Psychology of Survey Response. Cambridge University Press.