A Free Audio-CASI Module for LimeSurvey

Beier H. & Schulz S. (2014). A Free Audio-CASI Module for LimeSurvey, Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=5889

© the authors 2015. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Audio-enhanced computer-assisted self-interviewing (ACASI) has several advantages over computer-assisted self-interviewing without audio enhancement (CASI). Being able to listen to sound files of questions and answer options while proceeding through a questionnaire can increase interest in the survey, improve comprehension of survey questions and considerably lessen the burden of a self-administered interview, especially on target populations with reading difficulties. In addition, it has been argued that ACASI reduces social desirability bias. One problem in implementing ACASI is the lack of affordable and easily accessible software solutions supporting it. This paper introduces an ACASI module for the open-source software LimeSurvey. The module supports the commonest question types, can be integrated into existing installations of LimeSurvey with only minor effort and is available from the authors, free of charge.

Keywords

ACASI, audio-enhancement, CASI, internet survey, LimeSurvey, self-administered survey, software

Acknowledgement

The ACASI module was developed for use in the project ‘Friendship and Violence in Adolescence’, funded by the German Science Foundation, grant KR 4040/2. We thank Dr. Marcel Minke and Tony Partner at Limesurvey-Consulting.com for their great work on the technical implementation of the software module. Kristina John gave helpful comments on an earlier draft of the paper.


Introduction

Use of computer-assisted modes of data collection, such as web surveys and computer-assisted self-interviewing (CASI), has increased considerably during the past two decades and now accounts for a large proportion of survey research (Couper, 2011). One advantage of these modes of data collection is that they are flexible enough to include text, audio and video presentations of questions. In particular, audio-enhanced computer-assisted self-interviewing (ACASI) provides the respondent with the option of listening to pre-recorded audio files of questions and answer options, which can increase data quality. As a result, since the first applications in the mid-1990s (e.g. Johnston & Walton, 1995; O’Reilly et al., 1994), ACASI has been applied in a multitude of studies (Couper, Tourangeau, & Marvin, 2009) including several large-scale data collections, such as the ‘National Longitudinal Study of Adolescent Health’ (Harris et al., 2009), the ‘National Survey of Family Growth’ (Groves, Mosher, Lepkowski, & Kirgis, 2009), the ‘National Survey on Drug Use and Health’ (U. S. Department of Health and Human Services, 2014) and the British ‘Offending, Crime and Justice Survey’ (Hales, Nevill, Pudney, & Tipping, 2009).

Data quality can benefit from the use of ACASI (as compared with CASI, which does not have audio enhancement) for several reasons. First, it is argued that ACASI can improve comprehension of survey questions and considerably lessen the burden of proceeding through a self-administered questionnaire (Langhaug et al., 2011; Tourangeau & Smith, 1996; Turner et al., 1998). This is especially useful for respondents with reading difficulties, such as those who are very young, old or blind, or who have limited literacy. Literacy problems, for example, have been shown to be associated with several problems of data quality including more incorrect/inconsistent answers (Al-Tayyib et al., 2002; Iversen, Furstenberg, & Belzer, 1999), higher item non-response (Kupek, 1998; Langhaug, 2009) and higher survey non-response (Groves, 2006). While further research is needed to establish the degree to which ACASI can reduce these problems, a recent study with optional use of audio support reports that respondents with poor reading ability listened to the audio files more often and found them more helpful than respondents with better reading ability (U.S. Department of Health and Human Services, 2008; see also O’Reilly et al., 1994).

Secondly, being able to listen to the survey questions can increase the motivation of respondents. This reduces dropout during the survey and unwanted behaviour such as not responding to difficult questions. Several studies comparing ACASI with other modes of data collection report that respondents preferred ACASI rather than face-to-face interviews (e.g. Dolezal et al., 2012; Edwards et al., 2007) or self-administered paper questionnaires (e.g. Edwards et al., 2007; O’Reilly et al., 1994; U.S. Department of Health and Human Services, 2008). And when asked to evaluate the audio part of an ACASI questionnaire, respondents reported high degrees of acceptance of ACASI (U.S. Department of Health and Human Services, 2008). Since no study directly compares motivation of respondents using ACASI and CASI, more research is needed to assess the degree to which the reported higher motivation can be attributed to the use of computers on the one hand and to the audio part on the other.

Thirdly, it has been argued that ACASI decreases social desirability bias when sensitive questions are asked (Langhaug, 2009; Langhaug et al., 2011; Tourangeau & Smith, 1996; Turner et al., 1998). This generated huge interest in the method by researchers concerned with sensitive topics such as drug use (e.g. U. S. Department of Health and Human Services, 2014), crime (e.g. Hales et al., 2009) and sexual behaviour (e.g. Langhaug, Sherr, & Cowan, 2010). Several studies reported increased willingness to disclose sensitive behaviour in ACASI compared with using other types of survey, such as interviewer-administered techniques or self-administered paper questionnaires (e.g. Langhaug, 2009; O’Reilly et al., 1994; Perlis et al., 2004; Turner et al., 1998). Results of studies comparing answers to sensitive questions asked via ACASI or CASI are however mixed. While some studies (Langhaug, 2009; Tourangeau & Smith, 1996) find higher rates of disclosure for ACASI than for CASI, others find no significant differences (Couper, Singer, & Tourangeau, 2003; Couper et al., 2009). In a recent meta-analysis, Gnambs and Kaspar (in press) report that ACASI does not generally improve disclosure of sensitive behaviour over CASI but note that it might be more effective for specific subgroups with low literacy that were underrepresented in their meta-analysis (Gnambs & Kaspar, in press: 24).

Notwithstanding the need for further methodological research given these mixed results, ACASI is the method of choice for many data collection purposes. One practical problem in implementing ACASI is the question of what software to use. While common survey software allows for easy inclusion of audio files, a satisfying implementation of ACASI requires additional functionality, namely interactively reacting to respondents’ behaviour (e.g. stopping the audio file of a question once the question has been answered and automatically starting the file of the next question; avoidance of the simultaneous replay of the audio files belonging to different questions). When looking for a software solution for use in data collection for the project ‘Friendship and Violence in Adolescence’, no suitable software could be identified that

- allowed for a convenient and interactive implementation of ACASI;

- had low hardware requirements;

- was available at an affordable price, considering the project’s budget restrictions.[1]

Therefore, a software module for use with the open-source software LimeSurvey[2] was developed that facilitates the implementation of questionnaires using ACASI. The software module supports LimeSurvey’s most common question types and can be used with surveys needing complex filtering (i.e. providing certain questions which depend on answers given to previous questions). The ACASI module is available free of charge from the authors.[3] The ACASI module was developed for local use on laptop computers owned by the research project. Using it in internet surveys might require additional testing.[4] Tablet computers and smartphones are not supported. The next section describes the implementation of the ACASI module in detail, followed by the specific steps needed to implement a survey using the software module. The paper concludes with an appraisal of our experience with the software module during data collection, and a short discussion.

Software specification

Development of the ACASI module was guided by four principles. First, it should be unobtrusive. The main purpose of ACASI is to simplify answering the surveys. It should not stand out during the interview but naturally blend into the general process of answering the questions. In particular, at no point should the software module complicate answering the survey, e.g. by playing the audio file of a survey question while the respondent is currently thinking about the answer to some other question (e.g. a previous one). Closely related is the second principle that use of the ACASI should work automatically without requiring the respondent to be active in its operation but at the same time it must react to respondents’ needs. Audio files should start playing when a respondent starts to read a question, they should pause while the respondent thinks about the answer, and should automatically stop as soon as the respondent has answered that question. Thirdly, respondents should always be able to control the ACASI manually, overruling the automatic behaviour of the software. For example, respondents should be able to pause the replay of an audio file or be able to listen to a question more than once if, for example, they have problems understanding the question the first time. Fourthly and finally, the ACASI should be compatible with all major features of the survey software, especially supporting the most common question types as well as being capable of coping with filtering (sub-)questions.

Following these principles, a detailed specification of the ACASI module was formulated by the authors and technically implemented into the software LimeSurvey by the company Limesurvey-Consulting.com. At present, the ACASI module is fully integrated with a modified version of the LimeSurvey template ‘sherpa’. The figures in the following sections illustrate the ultimate design. Instructions on how to implement ACASI, either using the pre-defined template or customizing other templates to support it, are given below in the section on survey implementation.

General behaviour

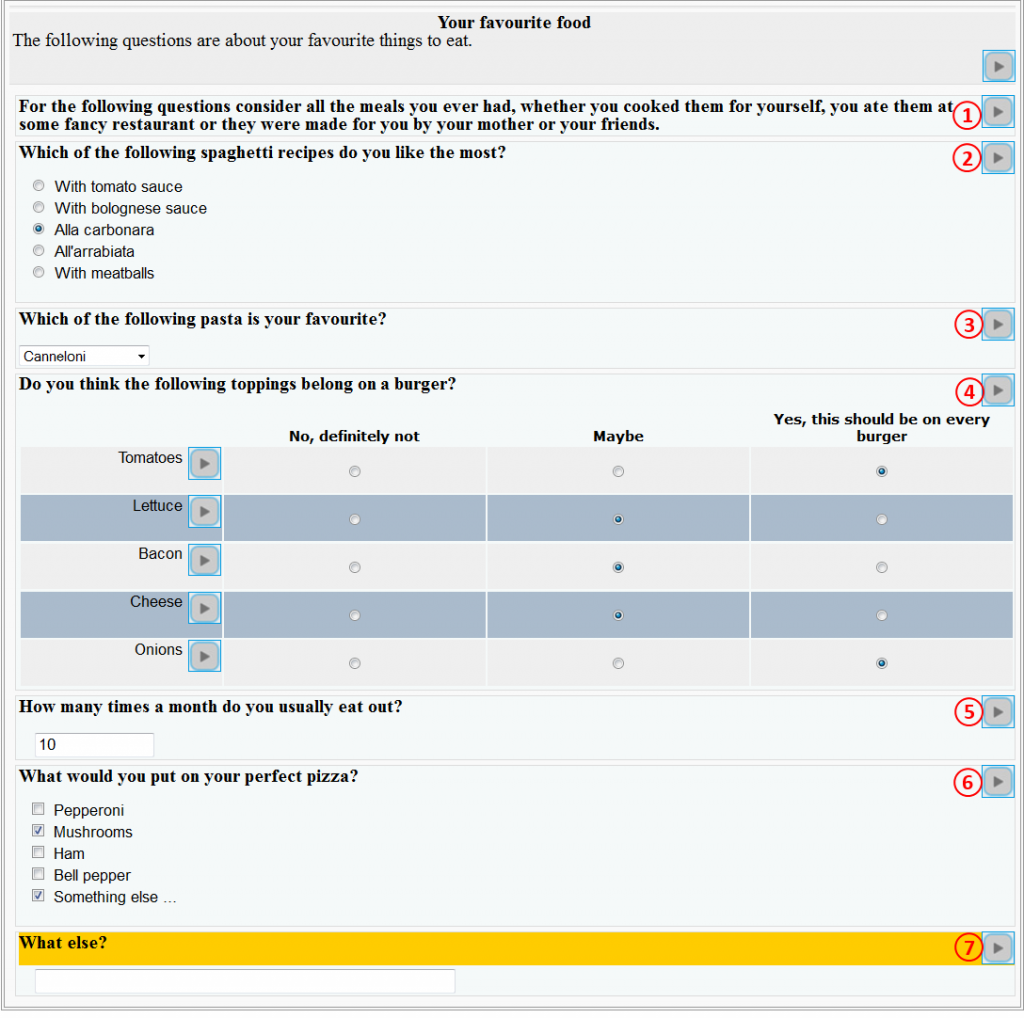

Figure 1 depicts the appearance of the ACASI module using the modified ‘sherpa’ template. Each player (1-6) is depicted as a small play button and linked to a pre-recorded audio file. Players can be assigned to screen headings (Player 1; group descriptions in LimeSurvey parlance), question texts (Players 2, 3) and sub-question texts (Players 4-6). Once a screen is loaded, the first player on the screen is automatically activated and starts playing the respective audio file. When activated, the text affiliated with the activated player is highlighted to assist respondents with reading along and the screen automatically scrolls to the player currently activated. If no action by the respondent is expected after an audio track ends (e.g. introductory or explanatory texts), the next player is automatically activated and the corresponding audio file starts playing. If an audio track ends and the respondent is expected to answer, then the player stops and the text remains highlighted. Respondents can think about their answer as long as needed without the next audio file starting to play. Once the question is answered, the next player is activated. If a question is answered even before the associated audio file is finished, the current player is paused immediately and the next player is activated. The subsequent section gives an overview of supported question types, and describes how the player judges when questions are considered to have been ‘answered’.

Figure 1: General behaviour of the ACASI module

Respondents can manually control the ACASI at any time by clicking on the respective player buttons. If the button of the active player is clicked, replay of the audio file is paused. If the button of any other player is clicked, this player is activated and replay of the corresponding audio file is started, while the previously active player (if any) is paused. At any time, only one audio file is played. The ACASI module is compatible with filtering. If questions are hidden by a filter, all corresponding players are hidden as well. Audio files corresponding to hidden questions are not replayed.
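The sequencing rules described above can be summarised as a small state machine. The following JavaScript sketch is purely illustrative and is not the code of the ACASI module: the names `createSequencer`, `expectsAnswer` and `hidden` are hypothetical, and a log of play and pause actions stands in for real audio playback. It also assumes that answering a question activates the next visible player after that question.

```javascript
// Hypothetical model of the player sequencing; not taken from the ACASI module.
function createSequencer(players) {
  let active = -1; // index of the active player, -1 if none
  const log = [];  // records play/pause actions in place of real audio calls

  function pauseActive() {
    if (active !== -1) {
      log.push("pause " + players[active].id);
      active = -1;
    }
  }

  // All activations pass through here, so at most one player is active.
  function activate(index) {
    pauseActive();
    if (index >= 0 && index < players.length && !players[index].hidden) {
      active = index;
      log.push("play " + players[index].id);
    }
  }

  // Index of the next player not hidden by a filter, or -1 if there is none.
  function nextVisible(index) {
    for (let i = index + 1; i < players.length; i++) {
      if (!players[i].hidden) return i;
    }
    return -1;
  }

  return {
    // Screen loaded: activate the first visible player.
    start() { activate(nextVisible(-1)); },
    // Audio track finished: advance only if no answer is expected.
    // Otherwise the player stays active and waits for the respondent.
    onEnded(index) {
      if (index === active && !players[index].expectsAnswer) {
        activate(nextVisible(index));
      }
    },
    // Question answered (assumption: always move to the player after it).
    onAnswered(index) { activate(nextVisible(index)); },
    // Button click: the active player is paused, any other one is started.
    onClick(index) {
      if (index === active) pauseActive();
      else activate(index);
    },
    activeId() { return active === -1 ? null : players[active].id; },
    log,
  };
}

// Usage: a heading, two visible questions and one filtered-out question.
const demo = createSequencer([
  { id: "heading", expectsAnswer: false },
  { id: "q1", expectsAnswer: true },
  { id: "q2", expectsAnswer: true, hidden: true },
  { id: "q3", expectsAnswer: true },
]);
demo.start();       // plays the heading
demo.onEnded(0);    // heading needs no answer, so q1 starts
demo.onAnswered(1); // q2 is hidden, so q3 starts
```

Routing every activation through a single function is one simple way to guarantee that only one audio file plays at any time, whether the change is triggered automatically or by a click.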

Behaviour for supported question types

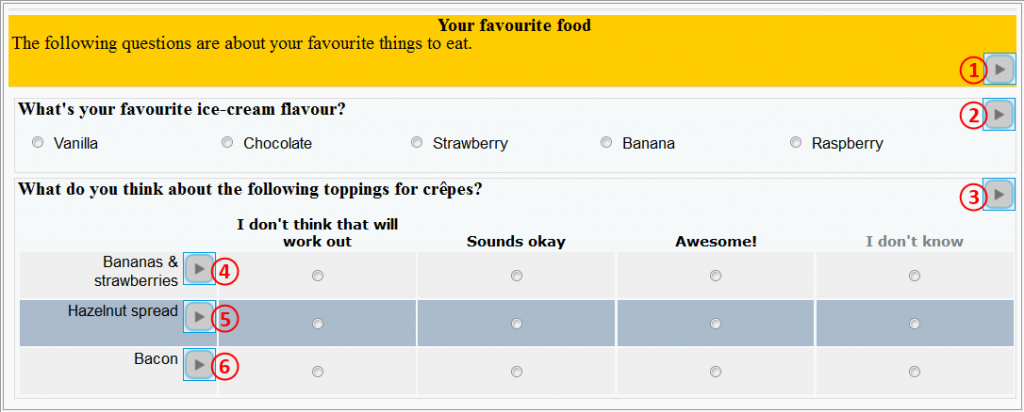

The most common question types are supported, including closed question formats as well as open ones. Question types differ in when they are considered ‘answered’, regulating activation of the next player. Figure 2 gives examples of the supported question types.

Text display (Figure 2, player 1): In LimeSurvey, displaying text additional to screen headings and question texts (e.g. additional information on how to answer the subsequent questions or providing other extra information) is treated as a question not requiring an answer. As with screen headings, these questions are considered as ‘answered’ (prompting the next player to start) after the replay of the audio file is completed.

Radio Button and dropdown menus (Figure 2, players 2 and 3): These question types are used when respondents can select only one answer. They are considered ‘answered’ once one of the possible answers is selected. If the audio file is completed before an answer is given, the player stops and the question remains highlighted until an answer is given. If the audio file is still playing when an answer is given, the audio player pauses immediately and the next player is activated.

Arrays (Figure 2, player 4): Array questions consist of one question and several sub-questions, each of which can be answered using radio buttons. An individual player is assigned to the question and to each sub-question. The question is considered ‘answered’ when replay of the audio file is completed, inducing activation of the first sub-question. If a sub-question is answered before replay of the question text is finished, the player corresponding to the question is paused and the player corresponding to the sub-question following the answer is activated. In all other circumstances players assigned to sub-questions behave like regular radio button questions.

Multiple choice (Figure 2, player 6): Because respondents are allowed to tick as many answers as they like, it cannot be determined unambiguously whether a respondent has finished answering a question. We therefore recommend positioning multiple choice questions at the bottom of a screen. However, asking additional questions on the same screen after a multiple choice question is sometimes important. For example, respondents might have to specify their answer given to a multiple choice question in a subsequent question, as depicted in questions 6 and 7 of figure 2. To address this need, each answer category of a multiple choice question can also be separately addressed, thereby indicating when the question has been ‘answered’. In figure 2, for example, question 6 is treated as ‘answered’ if the ‘something else’ category is ticked, resulting in replay of question 7.

Free text and numerical input (Figure 2, players 5 and 7): Questions requiring respondents to type their answer, either as text or numerically, are considered as ‘answered’ if respondents start typing and then stop for a previously defined time span (default: 2 seconds). This provision is adequate for questions requiring comparatively short answers that respondents will usually type in one go. Questions likely to result in deliberation after respondents have started typing (e.g. the need to describe something in detail), though, should be positioned at the bottom of a screen to avoid complications.
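The rules for when each question type counts as ‘answered’ can be expressed compactly in code. The JavaScript sketch below is an illustration under stated assumptions, not the module's actual implementation: the type names, the event shapes, `triggerCategory` and the function names are all hypothetical. The first function covers the event-based question types; the second models the idle period after typing (default: 2 seconds), with injectable timer functions so that the logic can be exercised outside a browser.

```javascript
// Hypothetical rule table; type names and event shapes are illustrative only.
function isAnswered(question, event) {
  switch (question.type) {
    case "text-display":
    case "array-question":
      // No answer expected: done when the audio file has finished.
      return event.kind === "audio-ended";
    case "radio":
    case "dropdown":
    case "array-subquestion":
      // Single choice: done as soon as one option is selected.
      return event.kind === "select";
    case "multiple-choice":
      // Done only when a designated category (if any) is ticked.
      return event.kind === "tick" &&
        question.triggerCategory !== undefined &&
        event.category === question.triggerCategory;
    default:
      return false;
  }
}

// Free text and numerical input: 'answered' once typing has paused for idleMs.
function createTypingDetector(onAnswered, idleMs = 2000, timers = {
  setTimeout: (fn, ms) => setTimeout(fn, ms),
  clearTimeout: (handle) => clearTimeout(handle),
}) {
  let handle = null;
  return {
    // Call on every keystroke: restarts the idle countdown.
    onKeystroke() {
      if (handle !== null) timers.clearTimeout(handle);
      handle = timers.setTimeout(() => {
        handle = null;
        onAnswered();
      }, idleMs);
    },
  };
}

// Usage: question 6 of figure 2 moves on only when 'something else' is ticked.
const question6 = { type: "multiple-choice", triggerCategory: "something else" };
const movesOn = isAnswered(question6, { kind: "tick", category: "something else" });
```

Restarting the countdown on each keystroke means that a respondent who types continuously is never interrupted, which is why this rule suits short answers but not questions that invite deliberation.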

Implementing a survey using the ACASI module

In LimeSurvey, the appearance and customized behaviour of a survey are handled using templates. The ACASI module is fully integrated in a modified version of the ‘sherpa’ template, as depicted in figures 1 and 2. To use this template, it needs to be imported into LimeSurvey either by copying the corresponding files to the correct folder or via the template import option of LimeSurvey. The template can then be accessed by all surveys on the system. Using ACASI with other custom templates requires copying several files, as well as the JavaScript code controlling the audio players, into those templates. While this is a little more complex than using the modified ‘sherpa’ template, no programming skills are needed. Changes to the default settings of the template (e.g. changing the background colour of the activated player) can be made using the template editor of LimeSurvey. More elaborate changes (e.g. adding additional question formats) are also possible but require programming skills in JavaScript.

After setting up the template, pre-recorded audio files can be added to any survey on the system. The audio files need to be placed in the subfolder ‘audio’ of the folder of the survey in question and can then be accessed by referencing them in the survey editor, which is also used to devise the survey in LimeSurvey. When respondents answer the survey, each reference set in the survey editor will result in the display of an audio player. In figure 1, for example, six audio files were referenced using the survey editor, resulting in six audio players being displayed. Each player included this way will automatically behave as described in the section on software specification.

Experience using the ACASI module

The ACASI module was developed for use in the project ‘Friendship and Violence in Adolescence’, an ongoing prospective longitudinal study funded by the German Science Foundation. It was first tested in the field during a pilot study in a medium-sized city in Southern Germany (N=167) and subsequently employed during the first (N=2635) and second rounds (N=2811) of data collection in five cities in the Ruhr, Germany. Respondents were students enrolled in the seventh grade of the lower and medium tracks of the general education system (German Hauptschule, Realschule, and Gesamtschule, median age = 13 years). The same students were interviewed again for round two when they were in grade eight. The pilot study took place in summer 2013, and then the first round of data collection occurred in fall 2013 and the second in fall 2014. Students were interviewed in class during two school lessons and all simultaneously completed the questionnaire on computers brought to the classroom by the researchers.[5] For further information on the study design and fieldwork see Beier, Schulz, and Kroneberg (2014).

Overall, experiences with the use of ACASI were positive. In addition to the advantages of ACASI described in the literature, interviewers reported that the use of headphones also contributed to better discipline in the classroom by heightening involvement with the survey and eradicating talking to other students. Of course, this advantage will be mainly restricted to the school context. The main purpose of the pilot study was to test the survey and procedures, not to compare ACASI with regular CASI. All interviews were therefore conducted using ACASI. However, several questions to evaluate the ACASI were included in the questionnaire of the pilot study to assess how it was used, whether it seemed helpful and whether or not respondents liked it (Table 1). Use of the ACASI was high, with 82% of respondents declaring that they listened closely to all or at least most questions and a fairly similar proportion, 79%, considered being able to listen to the questions as at least ‘somewhat helpful’. Finally, 73% indicated that it was fun to be able to listen to the questions via headphones. No questions evaluating the ACASI were included in the main study.

Table 1: Evaluation of the ACASI module (N=167)

| How many of the questions did you thoroughly listen to? | | How helpful was it to be able to also listen to the questions via headphones? | | Was it fun being able to listen to the questions via headphones? | |
|---|---|---|---|---|---|
| … all of the questions | 42% | … very helpful | 49% | Yes, it was a lot of fun | 44% |
| … most questions | 39% | … somewhat helpful | 30% | Yes, it was fun | 29% |
| … only a few questions | 16% | … a little helpful | 15% | So-so | 19% |
| … no questions | 2% | … not helpful | 5% | No, it was no fun | 4% |
| | | | | No, it was no fun at all | 4% |

No bugs in the ACASI module were encountered during data collection. However, problems emerged concerning the size of the audio files used during the pilot study. Smooth performance of the ACASI module depends on completely preloading all the necessary audio files on a screen upon page load. The hardware used was at times too weak, resulting in disruption to the audio enhancement. Downsizing the audio files mainly solved this problem, but the audio enhancement of 10 interviews (0.4%) in round one was nevertheless interrupted. In these cases, students had to finish the survey without audio enhancement. The capacity of the hardware (see description in endnote 5) should therefore be accepted as a minimum requirement for using the ACASI module, with better hardware recommended if financially feasible.
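The dependence on complete preloading can be illustrated with a simple gate that releases playback only after every audio file on a screen has been reported as loaded. This JavaScript sketch is an assumption about how such a check could be organised and does not reproduce the module's code; `createPreloadGate`, `markLoaded` and the file names are hypothetical.

```javascript
// Hypothetical preload gate; names are illustrative, not from the ACASI module.
function createPreloadGate(urls, onReady) {
  const pending = new Set(urls); // files still loading
  let fired = false;             // ensures onReady runs only once

  function check() {
    if (!fired && pending.size === 0) {
      fired = true;
      onReady();
    }
  }

  check(); // a screen without audio files is ready immediately

  return {
    // In a browser this could be called from each audio element's
    // 'canplaythrough' event handler (an assumption about the wiring).
    markLoaded(url) {
      pending.delete(url);
      check();
    },
    remaining() { return pending.size; },
  };
}

// Usage: playback starts only after both files have been reported as loaded.
let playbackStarted = false;
const gate = createPreloadGate(["q1.mp3", "q2.mp3"], () => { playbackStarted = true; });
gate.markLoaded("q1.mp3");
gate.markLoaded("q2.mp3");
```

With a gate of this kind, larger files on weak hardware delay the start of playback instead of interrupting it, which is consistent with the observation that downsizing the audio files largely solved the problem.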

Summary and Limitations

Audio-enhancing computer-assisted self-interviewing can benefit data collection efforts for several reasons, such as raising motivation to participate, increasing comprehension of the questions, and possibly also weakening social desirability bias. Not surprisingly, then, several large projects have used ACASI to improve the quality of the data collected. Since the software used is proprietary, research projects with tighter financial limits may need either to adapt existing software to support audio enhancement, although this involves additional effort and costs, or forgo this promising feature.

The ACASI module presented in the current paper offers a solution to this problem, as it is integrated in the open source software LimeSurvey and available free of charge from the authors. The software is easy to use in a survey and unobtrusively helps respondents in answering questionnaires. Audio replay automatically reacts to respondents’ behaviour, while at the same time they can manually overrule the automatic behaviour, e.g. in order to listen to specific questions more than once. Data collection of two rounds of the project ‘Friendship and Violence in Adolescence’ was successfully supported by the ACASI module, indicating its usefulness for similar purposes.

Given that the software was developed to assist the data collection for a specific research project, one limitation is its applicability because projects differ in their requirements. In particular, the use of the ACASI module to collect data over the internet needs further testing. As all surveys in our study were conducted using Firefox (Version 22), most testing and debugging were done with regard to this specific browser. In addition, the software was tested using the most common browsers: Firefox (Version 31); Internet Explorer (Version 11); Chrome (Version 36); and Safari (Version 7). No experience exists with regard to different browser versions or more unusual browsers: further testing might therefore be necessary before using the ACASI module for web-based surveys. In addition, tests of the software using tablets and smartphones were not successful. While adapting the software to these devices might be possible, at the moment the application is restricted to computers. Notwithstanding these limitations, we believe that the developed ACASI module can be an asset to a wide range of research projects and hope that it will help scholars to improve the quality of their data.

[1] We identified several free survey software solutions that supported inclusion of audio files into surveys, but none of them provided the interactive functionality needed. Then again, one commercial software provider offered to incorporate ACASI into their software following our specifications but their software had hardware requirements not met by our computers. Several commercial software solutions existed that would support interactive ACASI but they all had a pricing scheme that billed per computer used (rather than per interview) when the software was hosted locally. This would have resulted in costs of > 5€ per interview due to our use of 70 computers.

[2] LimeSurvey is an application used to develop and publish computerized surveys. It is normally installed to a web server but can also be installed on a local computer. Surveys are generated and modified using a web interface and activated surveys are accessed using a web browser. Answers of respondents are recorded in a database from which they can be downloaded for analysis. For further information on LimeSurvey and to download the LimeSurvey software see http://www.limesurvey.org.

[3] The ACASI module is published as open source, licensed under GNU/GPL. At present, it is only available via email directly from the authors. We plan to provide a link in the future which will be published in the comments on this paper.

[4] The software has been tested using LimeSurvey 2.00+ and LimeSurvey 2.05. It runs stably using Firefox (Version 22 and 31), Internet Explorer (Version 11), Chrome (Version 36) and Safari (Version 7). Using it in internet surveys might require additional tests on other browsers. Surveys were successfully hosted using Linux Mint Maya 13 and Windows 7, and successfully accessed using Linux Mint Maya 13, Windows 7 and Windows 8. The software uses the JavaScript library jQuery (version 1.11.1; jQuery UI version 1.10.3) provided by the default installation of LimeSurvey.

Compatibility issues could arise with future releases if, for example, jQuery were no longer included. This might require changes to the program code of the ACASI module. The open source license permits such changes, so researchers with sufficient knowledge of JavaScript could implement them themselves. Alternatively, professional software developers such as the company LimeSurvey-Consulting.com could be commissioned to change the ACASI module, both to address compatibility issues and to meet additional demands currently not catered for in the module (such as supporting additional question types).

[5] The hardware consisted of 11.6-inch netbooks (Acer Aspire One 725; AMD Dual Core Processor C70, 1.33 GHz; 2 GB RAM) running Linux Mint Maya 13. The survey was programmed using LimeSurvey 2.00+ (build 130226). It was hosted locally using XAMPP for Linux and accessed by the students using Mozilla Firefox. Mozilla Firefox only accessed data stored locally on the computers and no internet connection was established.

References

- Al-Tayyib, A. A., Rogers, S. M., Gribble, J. N., Villarroel, M., & Turner, C. F. (2002). Effect of Low Medical Literacy on Health Survey Measurements. American Journal of Public Health, 92(9), 1478-1480.

- Beier, H., Schulz, S., & Kroneberg, C. (2014). Freundschaft und Gewalt im Jugendalter. Feldbericht der ersten Erhebungswelle MZES Working Paper (Vol. 158). Mannheim: Mannheimer Zentrum für Europäische Sozialforschung.

- Couper, M. P. (2011). The Future of Modes of Data Collection. Public Opinion Quarterly, 75(5), 889-908.

- Couper, M. P., Singer, E., & Tourangeau, R. (2003). Understanding the Effects of Audio-CASI on Self-Reports of Sensitive Behavior. Public Opinion Quarterly, 67(3), 385-395.

- Couper, M. P., Tourangeau, R., & Marvin, T. (2009). Taking the Audio Out of Audio-CASI. Public Opinion Quarterly, 73(2), 281-303.

- Dolezal, C., Marhefka, S. L., Santamaria, E. K., Leu, C.-S., Brackis-Cott, E., & Mellins, C. A. (2012). A Comparison of Audio Computer-Assisted Self-Interviews to Face-to-Face Interviews of Sexual Behavior among Perinatally HIV-Exposed Youth. Archives of Sexual Behavior, 41(2), 401-410.

- Edwards, S. L., Slattery, M. L., Murtaugh, M. A., Edwards, R. L., Bryner, J., Pearson, M., . . . Tom-Orme, L. (2007). Development and Use of Touch-Screen Audio Computer-Assisted Self-Interviewing in a Study of American Indians. American Journal of Epidemiology, 165(11), 1336-1342.

- Gnambs, T., & Kaspar, K. (in press). Disclosure of Sensitive Behaviors across Self-Administered Survey Modes: A Meta-Analysis. Behavior Research Methods.

- Groves, R. M. (2006). Nonresponse Rates and Nonresponse Bias in Household Surveys. Public Opinion Quarterly, 70(5), 646-675.

- Groves, R. M., Mosher, W. D., Lepkowski, J. M., & Kirgis, N. G. (2009). Planning and Development of the Continuous National Survey of Family Growth. Vital and Health Statistics. Ser. 1, Programs and Collection Procedures (48), 1-64.

- Hales, J., Nevill, C., Pudney, S., & Tipping, S. (2009). Longitudinal Analysis of the Offending, Crime and Justice Survey 2003-2006.

- Harris, K., Halpern, C., Whitsel, E., Hussey, J., Tabor, J., Entzel, P., & Udry, J. (2009). The National Longitudinal Study of Adolescent Health: Research Design

- Iversen, R., Furstenberg, F., & Belzer, A. (1999). How Much Do We Count? Interpretation and Error-Making in the Decennial Census. Demography, 36(1), 121-134.

- Johnston, J., & Walton, C. (1995). Reducing Response Effects for Sensitive Questions: A Computer-Assisted Self Interview with Audio. Social Science Computer Review, 13(3), 304-319.

- Kupek, E. (1998). Determinants of Item Nonresponse in a Large National Sex Survey. Archives of Sexual Behavior, 27(6), 581-594.

- Langhaug, L. F. (2009). How You Ask the Question Really Matters: A Randomized Comparison of Four Questionnaire Delivery Modes to Assess Validity and Reliability of Self-reported Socially Censured Data in Rural Zimbabwean Youth. Doctor of Philosophy.

- Langhaug, L. F., Cheung, Y. B., Pascoe, S. J. S., Chirawu, P., Woelk, G., Hayes, R. J., & Cowan, F. M. (2011). How You Ask Really Matters: Randomised Comparison of Four Sexual Behaviour Questionnaire Delivery Modes in Zimbabwean Youth. Sexually Transmitted Infections, 87(2), 165-173.

- Langhaug, L. F., Sherr, L., & Cowan, F. M. (2010). How to Improve the Validity of Sexual Behaviour Reporting: Systematic Review of Questionnaire Delivery Modes in Developing Countries. Tropical Medicine & International Health, 15(3), 362-381.

- O’Reilly, J. M., Hubbard, M. L., Lessler, J. T., Biemer, P. P., & Turner, C. F. (1994). Audio and Video Computer-Assisted Self Interviewing: Preliminary Tests of New Technologies for Data Collection. Journal of Official Statistics, 10(2), 197.

- Perlis, T. E., Des Jarlais, D. C., Friedman, S. R., Arasteh, K., & Turner, C. F. (2004). Audio-Computerized Self-Interviewing Versus Face-to-Face Interviewing for Research Data Collection at Drug Abuse Treatment Programs. Addiction, 99(7), 885-896.

- Tourangeau, R., & Smith, T. W. (1996). Asking Sensitive Questions. Public Opinion Quarterly, 60(2), 275-304.

- Turner, C. F., Ku, L., Rogers, S. M., Lindberg, L. D., Pleck, J. H., & Sonenstein, F. L. (1998). Adolescent Sexual Behavior, Drug Use, and Violence: Increased Reporting with Computer Survey Technology. Science, 280(5365), 867-873.

- U. S. Department of Health and Human Services. (2014). National Survey on Drug Use and Health. Ann Arbor, MI: Inter-university Consortium for Political and Social Research (ICPSR) [distributor].

- U.S. Department of Health and Human Services. (2008). Development of Computer-Assisted Interviewing Procedures for the National Household Survey on Drug Abuse, from http://www.oas.samhsa.gov/nhsda/CompAssistInterview/toc.htm