Question Order Experiments in the German-European Context

Silber H., Höhne J. K., & Schlosser S. (2016). Question Order Experiments in the German-European Context, Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=7645

© the authors 2016. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

In this paper, we investigate the context stability of questions on political issues in cross-national surveys. For this purpose, we conducted three replication studies (N₁ = 213; N₂ = 677; N₃ = 1,489) comprising eight split-ballot design experiments with undergraduate and graduate students to test for question order effects. The questions, which were taken from the Eurobarometer (2013), covered perceived performance and identification. Respondents were randomly assigned to one of two experimental groups, which received the questions either in the original or in the reversed order. In all three studies, respondents answered the questions about Germany and the European Union/Europe differently depending on whether the question was asked first or second in the sequence. Specifically, the preceding question seems to have served as a standard of comparison when respondents answered the subsequent question. Our empirical findings also suggest that the likelihood of such context effects can be reduced by informed questionnaire design strategies.

Keywords

context effects, cross-national surveys, questionnaire design experiments

Acknowledgement

The authors would like to thank Steffen M. Kühnel (University of Göttingen, Germany) and the anonymous reviewers for their valuable comments on an earlier draft of the paper.

Introduction

Large-scale cross-national surveys such as the Eurobarometer (a survey on public opinion in 34 European countries), EUCROSS (a survey on crossing borders in six European countries), and EUMARR (a survey on binational marriages in four European countries) regularly collect data on citizens of European countries. These surveys measure a variety of attitudes, opinions, facts, and behaviors regarding the European Union/Europe as well as the respondent’s home country. Interestingly, respondents in these surveys frequently evaluate their home country more positively than the European Union/Europe. Likewise, they rate their own country’s performance higher and that of the European Union/Europe lower. A closer look at the survey instruments shows that the most common way of measuring political issues in cross-national contexts is to ask first about the respondents’ home country (in this case Germany) and then to ask the identical question about the European Union/Europe. For instance, the Eurobarometer (2013) asks respondents the following two questions on how they perceive the performance of democracy, first in their home country and then in the European Union:

On the whole, are you very satisfied, fairly satisfied, not very satisfied or not at all satisfied with the way democracy works in Germany?

On the whole, are you very satisfied, fairly satisfied, not very satisfied or not at all satisfied with the way democracy works in the European Union?

An analysis of the Eurobarometer (2013) data for this pair of questions shows that respondents are much more satisfied with how democracy works in Germany than in the European Union. A total of 66.1% of the respondents stated that they are “very satisfied” or “fairly satisfied” with how democracy works in Germany, whereas only 45.9% stated the same for the European Union, a difference of Δ = 20.2 percentage points. A further example, also taken from the Eurobarometer (2013), asks respondents to assess the current situation of the German economy:

How would you judge the current situation of the German economy?

This is followed by the identical question about the European economy:

How would you judge the current situation of the European economy?

The answer distributions for this pair of questions show a similar pattern. While 80.9% of the respondents assessed the German economy as “very good” or “rather good”, only 38.8% assessed the European economy as “very good” or “rather good”, a difference of Δ = 42.1 percentage points. Interestingly, a similar response pattern was observed for all eighteen West European countries in the Eurobarometer (2013), with the exception of Greece for the first question on how democracy works.

Assuming strong national identification within Europe, this is not an unexpected result (Checkel, & Katzenstein, 2009; Nissen, 2004) and might explain the large differences between the assessment of the European Union/Europe and that of the home country. However, it is also quite conceivable that the order of the survey questions led to response effects (Groves, 2004). In that case, the findings would reflect not only respondents’ attitudes and opinions toward the European Union/Europe but also the order in which these questions were asked. The question order, asking first about the home country (Germany) and then about the European Union/Europe, might have produced a response effect known as a context effect (Schwarz, 1991): previous questions (primes) in a questionnaire influence the responses to subsequent questions (targets) (Tourangeau, Rips, & Rasinski, 2000). In particular, it can be assumed that respondents compared their home country (Germany) with the European Union/Europe when evaluating the latter. Respondents may thus have had a specific concept or comparison standard in mind based on the preceding question about Germany, so that their evaluation of the European Union/Europe was influenced by the preceding question about the home country.

Empirical evidence on questionnaire design (Saris, & Gallhofer, 2014), cognitive information processing (Strack, & Martin, 1987; Sudman, Bradburn, & Schwarz, 1996), and response effects (Schuman, & Presser, 1996; Strack, 1992) suggests that question order effects are more likely if the questions are related in content. This is especially the case when a content-related prime question is asked, which can shift the distribution and the overall mean of the target question. Tourangeau, Rips, & Rasinski (2000), for instance, refer to such a change in the overall direction of the answers to the target question as a directional context effect.

The survey literature additionally distinguishes between part/whole and part/part context effects (Schuman, & Presser, 1996). In part/whole comparisons, the inclusion/exclusion model applies: the information from a previous question is either included in (assimilation effect) or excluded from (contrast effect) the judgment process (Schwarz, & Bless, 1992; Schwarz, Strack, & Mai, 1991). In part/part comparisons, by contrast, the previous question sets a standard of comparison for the following question (Tourangeau, Rips, & Rasinski, 2000). For instance, if we are asked to evaluate an average day of our life and we compare it to a very good day, the average day might be evaluated more negatively because of the high standard of comparison. Likewise, if we compare the same average day to a very bad one, it may be evaluated much more positively. The effect that a comparison standard has on an attitude judgment is referred to as judgmental contrast (Tourangeau, Rips, & Rasinski, 2000). With respect to Germany and the European Union/Europe, we assume that respondents perceive the two as separate entities, which may result in a part/part comparison. Even though Germany is geographically and politically part of the European Union/Europe, respondents are likely to process the two as separate entities that compete with each other regarding economy, democracy, and identity.

Hypotheses

According to the concept of judgmental contrast proposed by Tourangeau, Rips, & Rasinski (2000), we expect that the information in the question on the home country (Germany) will be used to answer the question on the European Union/Europe. In particular, when asked about Germany, German respondents may have certain evaluation criteria in mind, which they then apply as a standard for evaluating the European Union/Europe. Since this standard is likely to be relatively high, the European Union/Europe is likely to be evaluated more negatively. Given this line of argumentation, we expect that:

The European Union/Europe will be evaluated more negatively in the Germany/Europe question sequence than in the Europe/Germany question sequence (Hypothesis 1).

Asking first about the European Union/Europe and then about the home country (Germany) may lead to a judgmental contrast as well. Respondents who have just reported their opinion of the European Union/Europe may also have a certain evaluation standard in mind, which they apply when answering the question about their home country (Germany). Consequently, the home country (Germany) is likely to be evaluated more positively, because this standard may be lower than the evaluation the home country would receive without this contextual information. Accordingly, we suggest the following hypothesis:

Germany (home country) will be evaluated more positively in the Europe/Germany question sequence than in the Germany/Europe question sequence (Hypothesis 2).

Data and Methods

Krosnick (2011) recommends the use of experimental designs to evaluate the accuracy of survey questions. Following this suggestion, we employed an experimental design to investigate question order effects of part/part question sequences in a cross-national survey context. We conducted eight split-ballot design experiments on three different topics: two topics concerned perceived performance (economy and democracy) and one concerned identification (solidarity). For each experiment, respondents were randomly allocated to one of two experimental groups. The first group received the question about their home country (Germany) first and then the question about the European Union/Europe (original order); the second group received the question about the European Union/Europe first, followed by the question about their home country (reversed order). This design enabled us to compare the answers to the questions about the European Union/Europe and about the home country (Germany) when each was asked first, independently of the other, and when it was asked as a follow-up question.
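The split-ballot allocation described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ procedure: the text does not describe how randomization was implemented in the survey software, so the sketch assumes simple, independent randomization of each respondent for each topic. The names `assign_orders`, `ORIGINAL`, `REVERSED`, and `TOPICS` are hypothetical.

```python
import random

# Question orders compared in each split-ballot experiment (hypothetical names).
ORIGINAL = ("Germany", "European Union")   # home country asked first
REVERSED = ("European Union", "Germany")   # European Union asked first

TOPICS = ("democracy", "economy", "solidarity")


def assign_orders(respondent_ids, topics=TOPICS, seed=None):
    """Return {respondent_id: {topic: question order}}.

    Assumption: each respondent is independently and randomly allocated to
    one of the two orders for every topic (simple randomization), so the
    group sizes are only approximately equal.
    """
    rng = random.Random(seed)
    return {
        rid: {topic: rng.choice((ORIGINAL, REVERSED)) for topic in topics}
        for rid in respondent_ids
    }


if __name__ == "__main__":
    plan = assign_orders(range(1, 6), seed=42)
    for rid, orders in plan.items():
        # Show which entity each respondent is asked about first, per topic.
        print(rid, {topic: order[0] for topic, order in orders.items()})
```

Simple randomization keeps the sketch short; a design that requires exactly equal group sizes would instead shuffle the respondent list and split it in half.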

In addition to the questions on democracy and economy illustrated above, the experimental study included a third pair of questions on solidarity (identification):

Please tell me how attached you feel to Germany.

Please tell me how attached you feel to the European Union.

The study is based on three online surveys conducted at three universities in Germany with undergraduate and graduate students from various disciplines (see Table 1). All three data sets were collected in 2015. Participation was voluntary, and respondents did not receive any incentives for taking part in the survey. Respondents were invited via email to participate in the web survey, which was hosted by Unipark (Questback). Each question was presented on a separate survey page with vertically arranged response categories.

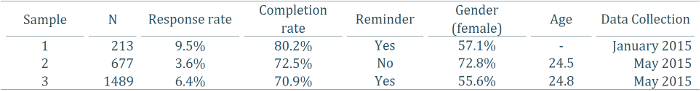

Table 1. Sample Descriptions

Sample 1 consists of two simultaneous data collections in different exercise groups of a seminar on research methods (n = 61 and n = 152). Across both data collections, 9.5% of the invited students responded by accessing the survey link within a period of 21 days. Within a field period of 18 days, 3.6% (sample 2) and 6.4% (sample 3) of the invited students participated in the survey. The lower response rate in sample 2 might be explained by the fact that, unlike in the other two samples, no reminders were sent to its respondents. In addition, χ²-tests revealed no statistically significant sociodemographic differences between the two experimental groups with respect to gender (Sample 1: χ²(1) = .01, p = .93; Sample 2: χ²(1) = .95, p = .33; Sample 3: χ²(1) = .12, p = .73) and age (Sample 2: χ²(1) = .21, p = .65; Sample 3: χ²(1) = 1.09, p = .30).
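The balance checks reported above are χ² tests of independence between experimental group and a sociodemographic variable. The following sketch computes a Pearson χ² statistic and its p-value for an r × c table of counts using only the Python standard library. It assumes no continuity correction (the text does not say whether one was used), the function names are hypothetical, and the counts in the usage example are invented, not the study’s data. The same function also applies to group × response-category tables such as those underlying the appendix p-values.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df.

    Uses the closed form of the regularized upper incomplete gamma function
    Q(df/2, x/2), which exists because df/2 is an integer or a half-integer.
    """
    y = x / 2
    if df % 2 == 0:
        # Q(k, y) = exp(-y) * sum_{i=0}^{k-1} y**i / i!
        return math.exp(-y) * sum(y ** i / math.factorial(i) for i in range(df // 2))
    # Q(k + 1/2, y) = erfc(sqrt(y)) + exp(-y) * sum_{i=1}^{k} y**(i - 1/2) / Gamma(i + 1/2)
    q = math.erfc(math.sqrt(y))
    for i in range(1, (df - 1) // 2 + 1):
        q += math.exp(-y) * y ** (i - 0.5) / math.gamma(i + 0.5)
    return q


def chi_square_independence(table):
    """Pearson chi-square test of independence without continuity correction.

    table: list of rows of observed counts (e.g. rows = experimental groups,
    columns = categories). Returns (chi2, df, p).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df, chi2_sf(chi2, df)


if __name__ == "__main__":
    # Hypothetical 2 x 2 table: rows = question order groups, columns = gender.
    chi2, df, p = chi_square_independence([[52, 48], [47, 53]])
    print(f"chi2({df}) = {chi2:.2f}, p = {p:.2f}")
```

Implementing the tail probability directly avoids a dependency on a statistics library; for a 2 × 2 table it reduces to erfc(√(χ²/2)).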

Table 2. Question Order Experiments in the Three Samples

Sample 1 included two split-ballot design experiments, and samples 2 and 3 each included three. Whereas the questions on democracy and solidarity were asked in all three samples, only the respondents in samples 2 and 3 were asked to assess the current situation of the German and European economy (see Table 2).

Results

In this section, the results of the question order experiments on part/part question sequences are presented in turn. The first research question was whether the European Union/Europe is evaluated more negatively when respondents are asked about Germany (their home country) first. The second research question was whether Germany is evaluated more positively when respondents are asked about the European Union/Europe first.

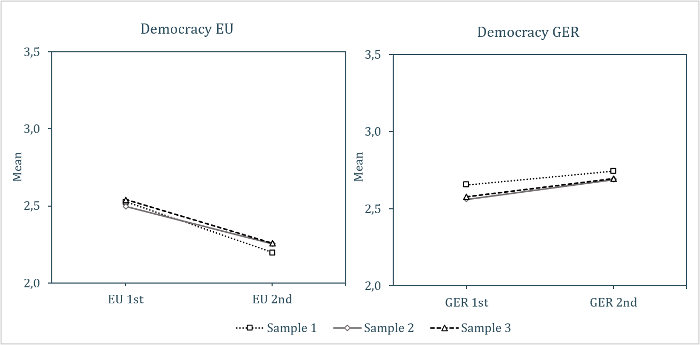

Figure 1. Results of the Experiments on Democracy

Note. Coding of the response categories: 1 = “Not at all satisfied”, 2 = “Not very satisfied”, 3 = “Fairly satisfied”, 4 = “Very satisfied”.

Figure 1 shows the results of the question order experiments on democracy as a comparison of means. With respect to hypothesis 1, the respondents in samples 2 and 3 were significantly more satisfied with democracy in the European Union when they were asked about it first (Δ sample 2 = .24, p < .01; Δ sample 3 = .28, p < .01). In sample 1, the difference pointed in the same direction but was not significant (Δ sample 1 = .33, p = .08). Hypothesis 2 was also partially confirmed, as Germany was evaluated significantly more positively when asked second in two out of three experiments (Δ sample 1 = .09, p = .65; Δ sample 2 = .13, p = .02; Δ sample 3 = .12, p < .01).
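The mean comparisons reported for Figures 1 to 3 can be illustrated with a short sketch. The text does not name the significance test behind the reported p-values, so this sketch assumes a large-sample z-test with an unpooled (Welch-type) standard error; the function name `order_effect` and the ratings in the usage example are hypothetical. Here Δ is the mean when the question was asked first minus the mean when it was asked second, so it is positive for an effect in the direction of hypothesis 1 and negative for one in the direction of hypothesis 2, whereas the text reports absolute values.

```python
import math
from statistics import mean, variance


def order_effect(asked_first, asked_second):
    """Compare mean ratings of the same question asked first vs. second.

    Ratings use the 1-4 coding from the figure notes. Returns (delta, z, p):
    delta = mean(asked_first) - mean(asked_second); z uses an unpooled
    standard error; p is a two-sided normal-approximation p-value
    (an assumption, not necessarily the test used in the study).
    """
    delta = mean(asked_first) - mean(asked_second)
    se = math.sqrt(variance(asked_first) / len(asked_first)
                   + variance(asked_second) / len(asked_second))
    z = delta / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return delta, z, p


if __name__ == "__main__":
    # Hypothetical ratings of EU democracy (1 = not at all ... 4 = very satisfied).
    eu_first = [3, 3, 2, 3, 4, 2, 3, 3, 2, 3]
    eu_second = [2, 3, 2, 2, 3, 2, 2, 3, 1, 2]
    delta, z, p = order_effect(eu_first, eu_second)
    print(f"delta = {delta:.2f}, z = {z:.2f}, p = {p:.3f}")
```

With the sample sizes in this study the normal approximation is close to a t-test, but for small groups a t-distribution would be more appropriate.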

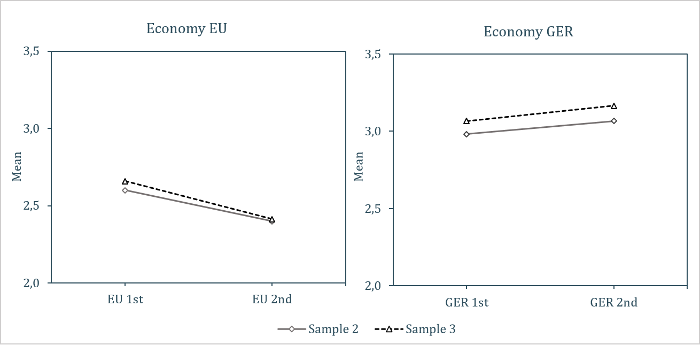

Figure 2. Results of the Experiments on the Economy

Note. Coding of the response categories: 1 = “Very bad”, 2 = “Rather bad”, 3 = “Rather good”, 4 = “Very good”.

With regard to the question order experiments on the economy (see Figure 2), and in line with hypothesis 1, respondents in both samples rated the European economy significantly better when this question was asked before the question on the German economy (Δ sample 2 = .20, p < .01; Δ sample 3 = .24, p < .01). In line with hypothesis 2, respondents likewise rated the German economy better when this question was asked second (Δ sample 2 = .08, p = .06; Δ sample 3 = .10, p < .01), although this difference was not significant in sample 2.

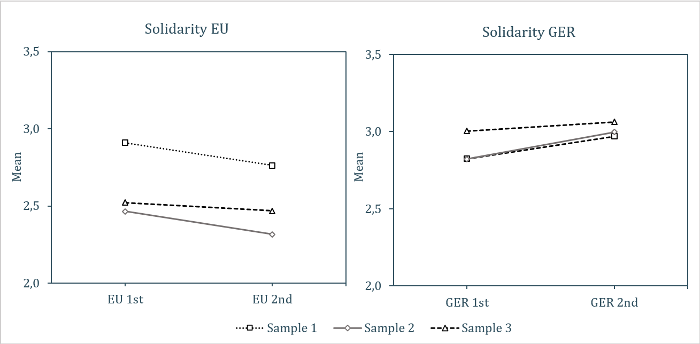

Figure 3. Results of the Experiments on Solidarity

Note. Coding of the response categories: 1 = “Not at all attached”, 2 = “Not very attached”, 3 = “Fairly attached”, 4 = “Very attached”.

Regarding the results of the question order experiments on solidarity (see Figure 3), respondents in all three samples felt more attached to the European Union when this question was asked before the question on solidarity with Germany (hypothesis 1). This effect was significant in sample 2 (Δ sample 2 = .14, p = .03), whereas samples 1 and 3 showed only a tendency in the expected direction (Δ sample 1 = .15, p = .30; Δ sample 3 = .05, p = .22). Hypothesis 2 was only partially confirmed: respondents in all three samples reported more solidarity with Germany when the question was asked second, but only the effect in sample 2 was significant (Δ sample 1 = .14, p = .24; Δ sample 2 = .17, p < .01; Δ sample 3 = .06, p = .18).

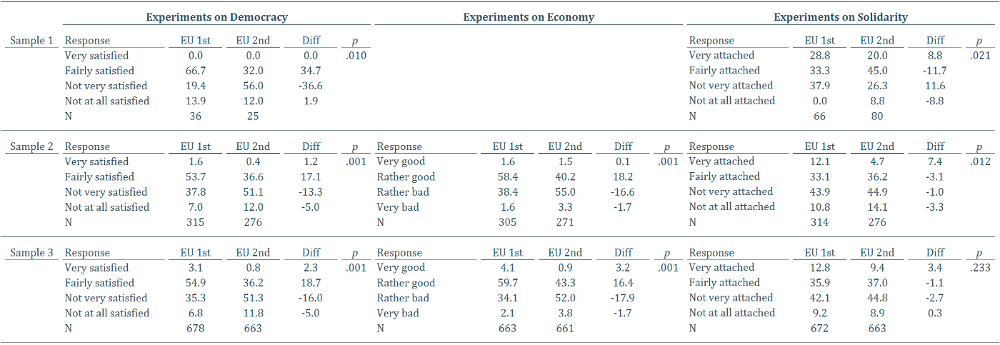

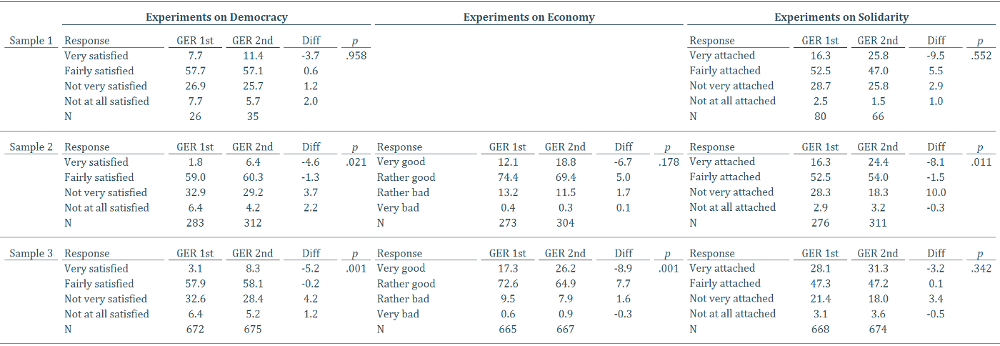

With respect to the answer distributions in the two experimental groups, the experiments on democracy and economy showed larger differences for the questions about the European Union/Europe than for the questions about Germany. Specifically, the difference between the two experimental groups in the share of respondents who answered “very satisfied” or “fairly satisfied” to the democracy question ranged from Δ = 18.3 to Δ = 34.7 percentage points for the European Union (see Table A1) and from Δ = 3.1 to Δ = 5.9 percentage points for Germany (see Table A2). Likewise, the difference in the share of respondents who assessed the economy as “very good” or “rather good” ranged from Δ = 18.3 to Δ = 19.6 percentage points for Europe (see Table A1) and from Δ = 1.2 to Δ = 1.7 percentage points for Germany (see Table A2). In contrast, the experiment on solidarity revealed differences of similar magnitude for the questions about the European Union/Europe and Germany. The difference in the share of respondents who felt “very attached” or “fairly attached” ranged from Δ = 2.3 to Δ = 4.3 percentage points for the European Union (see Table A1) and from Δ = 3.1 to Δ = 9.6 percentage points for Germany (see Table A2).
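The percentage-point differences above compare, between the two experimental groups, the share of respondents in the two highest response categories (codes 3 and 4 in the figure notes). A minimal sketch of that calculation follows; the function names and the counts in the example are hypothetical and do not reproduce Tables A1 or A2.

```python
def top_two_share(counts):
    """Percentage of respondents choosing one of the two highest categories.

    counts maps response codes (1-4, as in the figure notes) to numbers of
    respondents; codes 3 and 4 are, e.g., "fairly" and "very satisfied".
    """
    total = sum(counts.values())
    return 100 * (counts.get(3, 0) + counts.get(4, 0)) / total


def top_two_difference(asked_first, asked_second):
    """Percentage-point difference in top-two shares between the two orders."""
    return top_two_share(asked_first) - top_two_share(asked_second)


if __name__ == "__main__":
    # Hypothetical counts for the EU democracy question in each question order.
    eu_first = {1: 10, 2: 20, 3: 50, 4: 20}
    eu_second = {1: 20, 2: 30, 3: 40, 4: 10}
    # 70% vs. 50% in the top two categories -> 20.0 percentage points
    print(f"Delta = {top_two_difference(eu_first, eu_second):.1f} percentage points")
```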

Discussion

The aim of the present experimental study was to examine question order effects in a cross-national setting by varying the sequence of questions on several important topics in the German-European context. The question topics included perceived performance (democracy and economy) as well as identification (solidarity). In particular, we investigated whether asking respondents about their home country (Germany) first and then about the European Union/Europe (or vice versa) gives rise to question order effects. In line with our two hypotheses, the study showed that question order effects occur in both directions.

With respect to our first hypothesis, we expected that the European Union/Europe would be evaluated more negatively in the Germany/Europe than in the Europe/Germany question sequence. Overall, the results revealed significant question order effects (p < .05) in five out of eight experiments. Regarding our second hypothesis, we expected that Germany would be evaluated more positively when it was asked about in the second question. Here, four out of eight experiments showed significant effects. The differences between the two question sequences reached up to a remarkable Δ = 36.6 percentage points for a question about the European Union (see Table A1) and up to Δ = 10.0 percentage points for a question about Germany (see Table A2).

Our findings are consistent with the theoretical framework of directional context effects and the underlying cognitive mechanism of judgmental contrast (Tourangeau, Rips, & Rasinski, 2000), which suggests that question order effects are more likely to occur if questions are related. In particular, if the questions form a part/part comparison, respondents use the information from the first question as a standard of comparison and evaluate the second question against this standard. This implies that respondents seem to have certain evaluation criteria and standards in mind when answering the second question.

The present study has certain limitations. First, it was conducted among students, who represent a homogeneous subgroup of the general population. However, because our study was based on survey experiments and designed to demonstrate “what can happen”, we are fairly confident that our findings are also relevant to studies with non-student respondents (Druckman, & Kam, 2011). The robustness of our findings is further supported by the fact that the question order effects replicated across large student samples from three different universities. This is particularly notable because two of the universities focus on the humanities and one on technology. Nevertheless, inferences from student samples cannot simply be generalized to the general population; rather, they can serve as a starting point on which future investigations can build. Second, the experiments were conducted in the online survey mode, whereas many cross-national surveys are based on face-to-face interviews. However, online samples are increasingly being used in empirical social research and, more importantly, recent research suggests that the data quality of web surveys is comparable to that of traditional high-quality survey modes, such as face-to-face or telephone surveys (Couper, 2000; de Leeuw, 2005; Dillman et al., 2009; Kaplowitz, Hadlock, & Levine, 2004; Kaplowitz et al., 2012; Malhotra, & Krosnick, 2007; Shin, Johnson, & Rao, 2012). Third, the political climate at the time of the survey may have had a considerable impact on the size of the question order effects. Future research could address this limitation by replicating these experiments in a longitudinal design.

Our findings have theoretical as well as practical implications. Theoretically, our results show that the question order led respondents to use the information from the previous question and, as a result, to evaluate the subsequent question quite differently. Specifically, our findings suggest that first answering a question about one entity in a part/part comparison leads respondents to compare the two entities when answering the question about the second entity, instead of evaluating it on its own, which produces a directional context effect. From a practical perspective, this seems to be highly relevant for questions on performance and less relevant for questions on identification. However, it remains an open question whether other question types, such as factual or behavioral questions, follow the same information processing mechanisms and whether they are affected by question order to the same extent. In addition, the study raises new concerns about how, and in what order, many cross-national surveys pose their questions. Asking questions on the same topic or political issue about two related entities requires informed questionnaire design strategies.

The relevance of our findings may not be limited to cross-national surveys, because national as well as community surveys are similarly interested in part/part and part/whole comparisons (Carlson et al., 1995; Mason, Carlson, & Tourangeau, 1994). To decrease the likelihood of question order effects, it seems advisable to implement one or more of the following strategies: first, placing a series of buffer questions between the target questions; second, using the order that is less affected by the question sequence (in our case, the Europe/Germany order); and third, explicitly excluding the subject of the previous question in the follow-up question. Irrespective of the specific strategy, a final recommendation we derive from our study is that survey designers should carefully test their questionnaires for context effects caused by the order of questions.

Appendix

Table A1. Question Order Effects on the Question About the European Union/Europe

Note. All values are reported in percent. P-values are based on chi-square tests.

Table A2. Question Order Effects on the Question About Germany

Note. All values are reported in percent. P-values are based on chi-square tests.

References

- Carlson, J. E., Mason, R., Saltiel, J., & Sangster, R. (1995). Assimilation and Contrast Effects in General/Specific Questions. Rural Sociology, 60(4), 666–673.

- Checkel, J. T. & Katzenstein, P. J. (2009). The politicization of European identities. In Checkel, J. T., & Katzenstein, P. J. (Eds.), European Identity. Cambridge, UK: Cambridge University Press.

- Couper, M. P. (2000). Web surveys: A review of issues and approaches. Public Opinion Quarterly, 64(4), 464–494.

- de Leeuw, E. D. (2005). To mix or not to mix data collection modes in surveys. Journal of Official Statistics, 21(2), 233–255.

- Dillman, D. A., Phelps, G., Tortora, R., Swift, K., Kohrell, J., Berck, J., & Messer, B. L. (2009). Response rate and measurement differences in mixed-mode surveys using mail, telephone, interactive voice response (IVR) and the Internet. Social Science Research, 38(1), 1–18.

- Druckman, J. N. & Kam, C. D. (2011). Students as Experimental Participants. In Druckman, J. N., Green, D. P., Kuklinski, J. H., & Lupia, A. (Eds.), Cambridge Handbook of Experimental Political Science. New York: Cambridge University Press.

- Groves, R. M. (2004). Survey errors and survey costs. New York: John Wiley & Sons.

- Kaplowitz, M. D., Hadlock, T. D., & Levine, R. (2004). A comparison of web and mail survey response rates. Public Opinion Quarterly, 68(1), 94–101.

- Kaplowitz, M. D., Lupi, F., Couper, M. P., & Thorp, L. (2012). The effect of invitation design on web survey response rates. Social Science Computer Review, 30(3), 339–349.

- Krosnick, J. A. (2011). Experiments for evaluating survey questions. In Madans, J., Miller, K., Maitland, A., & Willis, G. (Eds.), Question Evaluation Methods: Contributing to the Science of Data Quality. Wiley Online Library: New York.

- Malhotra, N. & Krosnick, J. A. (2007). The effect of survey mode and sampling on inferences about political attitudes and behavior: Comparing the 2000 and 2004 ANES to Internet surveys with nonprobability samples. Political Analysis, 15(3), 286–323.

- Mason, R., Carlson, J. E., & Tourangeau, R. (1994). Contrast effects and subtraction in part-whole questions. Public Opinion Quarterly, 58(4), 569–578.

- Nissen, S. (2004). European Identity and the Future of Europe. Aus Politik und Zeitgeschichte, 38, 21–29.

- Saris, W. & Gallhofer, I. N. (2014). Design, Evaluation, and Analysis of Questionnaires for Survey Research. Hoboken, NJ: John Wiley & Sons.

- Schuman, H. & Presser, S. (1996). Questions and answers in attitude surveys: Experiments on question form, wording, and context. Thousand Oaks, CA: Sage Publications.

- Schwarz, N. (1991). In what Sequences Should Questions Be Asked? Context Effects in Standardized Surveys. Social Science Open Access Repository, 16, 1–14.

- Schwarz, N. & Bless, H. (1992). Assimilation and Contrast Effects in Attitude Measurement: An Inclusion/Exclusion Model. Advances in Consumer Research, 19, 72–77.

- Schwarz, N., Strack, F., & Mai, H.-P. (1991). Assimilation and Contrast Effects in Part-Whole Question Sequences: A Conversational Logic Analysis. Public Opinion Quarterly, 55, 3–23.

- Shin, E., Johnson, T. P., & Rao, K. (2012). Survey mode effects on data quality: Comparison of web and mail modes in a US national panel survey. Social Science Computer Review, 30(2), 212–228.

- Strack, F. (1992). “Order Effects” in Survey Research: Activation and Information Functions of Preceding Questions. In Schwarz, N. & Sudman, S. (Eds.), Context Effects in Social and Psychological Research. New York: Springer.

- Strack, F. & Martin, L. L. (1987). Thinking, Judging, and Communicating: A Process Account of Context Effects in Attitude Surveys. In Hippler, H.-J., Schwarz, N., & Sudman, S. (Eds.), Social Information Processing and Survey Methodology. New York: Springer.

- Sudman, S., Bradburn, N. M., & Schwarz, N. (1996). Thinking about answers: The application of cognitive processes to survey methodology. San Francisco: Jossey-Bass Publishers.

- Tourangeau, R., Rips, L. J., & Rasinski, K. (2000). The Psychology of Survey Response. Cambridge: Cambridge University Press.