Developments in fieldwork procedures and monitoring in longitudinal surveys: case prioritisation and electronic contact sheets on the UK Millennium Cohort Study

Calderwood L., Haselden L., Agalioti-Sgompou V., Cleary A., Rose N., Bhaumik C. & Thom J. (2020). Developments in fieldwork procedures and monitoring in longitudinal surveys: case prioritisation and electronic contact sheets on the UK Millennium Cohort Study in Survey Methods: Insights from the Field, Special issue: ‘Fieldwork Monitoring Strategies for Interviewer-Administered Surveys’. Retrieved from https://surveyinsights.org/?p=11714.

© the authors 2020. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Maximising response is important in any survey, and especially so in a longitudinal survey, where non-response at a particular wave contributes to attrition. A key element of response maximisation in face-to-face surveys is the adoption and implementation of thorough fieldwork procedures. The introduction of electronic sample management systems has provided more timely and accurate para-data with which to monitor interviewers’ compliance with fieldwork procedures. One of the major advantages of longitudinal surveys is that they can use prior wave data to identify the cases at highest risk of non-response and thereby target appropriate fieldwork interventions designed to minimise non-response. This paper examines two developments in the fieldwork procedures used on the UK Millennium Cohort Study (MCS) designed to maximise response: case prioritisation for low contact propensity cases and electronic contact sheets to help ensure adherence to contact protocols. We compare the fieldwork procedures used at the fifth wave in 2012 (at age 11) with those used at the sixth wave in 2015 (at age 14), using wave-on-wave changes in procedures to compare the effectiveness of different approaches to response maximisation. In the first part of the paper, we compare two approaches to case prioritisation: response propensity models, used at wave 5, and a simpler approach based on prior wave outcomes only, used at wave 6. We conclude that the simpler approach to identifying cases likely to have low contact propensity, based on prior wave outcomes only, is more effective than the more complex approach based on response propensity models. In the second part of the paper, we evaluate the effectiveness of electronic contact sheets (ECS), introduced at wave 6, in improving compliance with fieldwork procedures and cost-effectiveness and in reducing non-response.
We show that at wave 6 interviewer compliance rates were higher and non-contact rates were lower than at wave 5, and argue that the introduction of the ECS has led to this improvement in fieldwork quality and reduction in non-response.

Keywords

call protocols, case prioritisation, fieldwork procedures, response propensity model, sample management

Acknowledgement

The UK Millennium Cohort Study (MCS) is run by the Centre for Longitudinal Studies at UCL Institute of Education and funded by the ESRC and a consortium of UK government departments. Ipsos MORI carried out the data collection for the fifth and sixth waves of MCS. We would like to thank the research and operational staff at CLS and Ipsos MORI who were responsible for the implementation of the data collection for this investigation.


1. Introduction

Maximising response is important in any survey and especially so in a longitudinal survey where non-response at a particular wave contributes to attrition. A key element of response maximisation in face-to-face surveys is the adoption and implementation of thorough fieldwork procedures. Most high-quality surveys use well-established fieldwork procedures and considerable resources are typically devoted to monitoring their implementation, with the aim of ensuring that field interviewers are correctly following them. The widespread move to electronic sample management systems over the last 5-10 years has provided survey organisations with more timely and accurate para-data with which to monitor interviewers, including their compliance with fieldwork protocols.

To improve fieldwork practice, it is important to consider the different sources of non-response separately, so that response maximisation strategies can be designed to address their different causes (Groves and Couper, 1998). For longitudinal surveys, non-location is an important source of non-response, in addition to non-contact and non-cooperation (Lepkowski and Couper, 2002). Fieldwork procedures designed to minimise non-location, i.e. mover tracking, are employed alongside procedures for minimising non-contacts and refusals (Couper and Ofstedal, 2009). One of the major advantages of longitudinal surveys is that they can use prior wave data to identify the cases at highest risk of non-response and thereby target appropriate fieldwork interventions designed to minimise non-response.

This paper examines two developments in the fieldwork procedures used on the UK Millennium Cohort Study (MCS) designed to maximise response: case prioritisation for low-contact propensity cases and electronic contact sheets to help ensure adherence to contact protocols. We compare fieldwork procedures used in the fifth wave in 2012 (at age 11) with those used at the sixth wave in 2015 (at age 14), utilising wave-on-wave changes in procedures to compare the effectiveness of different approaches to response maximisation.

2. Background

Recent trends in non-response research have moved away from a uniform approach to response maximisation towards a more sophisticated approach, based on a growing understanding that the same fieldwork intervention may have different impacts on different sample sub-groups and that maximising response rates does not necessarily minimise non-response bias (Groves, 2006; Groves and Peytchev, 2008). In particular, there has been increasing interest in responsive or adaptive designs, which introduce greater flexibility into survey design protocols in order to improve cost efficiency and the accuracy of survey estimates (e.g. Groves and Heeringa, 2006; Couper and Wagner, 2011; Wagner et al., 2012; Schouten et al., 2013; Tourangeau et al., 2017; Schouten, Peytchev and Wagner, 2017). These designs can involve either using different protocols for different groups of sample members from the outset or adapting fieldwork protocols in real time during data collection, based on the evaluation of information collected as part of the survey process.

As longitudinal surveys are able to use information from previous waves, they are better able than cross-sectional surveys to estimate, in advance of data collection, which cases will require more field effort and which cases are most important to retain in the sample in order to minimise non-response bias. This information can therefore be used to target fieldwork interventions to maximise response more effectively from the outset. Lynn (2015, 2017) argues that longitudinal surveys should make greater use of prior wave data to target fieldwork interventions aimed at reducing non-response, and advocates the use of targeted response inducement strategies on longitudinal surveys. There are now a number of examples of longitudinal surveys using this approach for a range of different fieldwork interventions (e.g. Peytchev et al., 2010; Luiten and Schouten, 2013; Lynn, 2016; Kaminska and Lynn, 2017).

Case prioritisation is a particular type of targeted or adaptive design, which usually involves identifying low response propensity cases before the start of fieldwork so that they can receive a specific fieldwork intervention. There is a small but growing literature in this area, on both cross-sectional and longitudinal surveys. Peytchev et al. (2010) found that interviewer incentives for additional effort on difficult cases were not effective at increasing response rates or reducing non-response bias on a longitudinal survey. Rosen et al. (2014) found that a face-to-face non-response follow-up for difficult cases on a longitudinal survey was effective at boosting the response rate and led to a slight reduction in non-response bias. Wagner et al. (2012) conducted a number of different experimental interventions for priority cases on a cross-sectional survey, primarily designed to increase interviewer call attempts. Overall, although they had some success at changing interviewer behaviour, the impact on response rates was modest. Gummer and Blumenstiel (2018) allocated better interviewers to low propensity cases in a longitudinal survey and found that this intervention slightly improved co-operation rates and led to a small reduction in the risk of non-response bias. All of these studies used model-based approaches to identify low response propensity cases.

In the context of increasing concern about the cost-effectiveness of fieldwork procedures, particularly for face-to-face surveys, and increasing demand for real-time para-data to monitor fieldwork effort (e.g. Kreuter, 2013), many survey organisations have invested in developing electronic sample management systems. As well as introducing cost efficiencies, these improved monitoring systems aim to improve compliance with fieldwork procedures and to reduce non-response rates. However, to our knowledge, there is relatively little evidence in the research literature about the effectiveness of electronic sample management systems in improving compliance and cost-effectiveness and in reducing non-response.

This paper provides new evidence about the effectiveness of fieldwork procedures and monitoring for improving efficiency and minimising non-response in the Millennium Cohort Study (MCS), a large-scale longitudinal survey in the UK. Specifically, we look at two developments in fieldwork procedures and monitoring designed to maximise response: case prioritisation for low contact propensity cases and electronic contact sheets to help ensure adherence to contact procedures.

The MCS is a longitudinal birth cohort study following over 19,000 children born in the UK in 2000/1. The data collection takes place in the home and involves face-to-face interviews with up to two co-resident parents and the cohort member. There have been seven waves of the study so far: at 9 months (2001-2), age 3 (2003-4), age 5 (2006), age 7 (2008), age 11 (2012), age 14 (2015) and age 17 (2018). The nature and extent of data collection from the cohort members themselves has changed over time, and the length of the household visit has increased as the cohort members have got older. Further information on the study design and sample can be found in Plewis (2007). The study is funded by the Economic and Social Research Council (ESRC) and a consortium of UK government departments. It is run by the Centre for Longitudinal Studies at the UCL Institute of Education. The fieldwork for the fifth, sixth and seventh waves was carried out by Ipsos MORI.

The MCS employs a wide range of best-practice approaches to maximise retention (Ipsos MORI, 2013, 2017), and achieves high response and retention rates (Fitzsimons, 2017). Field interviewers are required to make extensive efforts to contact cohort families, by phone as well as face-to-face, and to locate those who have moved, by approaching neighbours, stable contacts and schools. Interviewers make contact via the cohort member’s parents, and contact details are provided for up to two resident parents. The fieldwork takes place over an extended period, and a high proportion of non-contacts and refusals at first issue are re-issued, usually to more experienced interviewers. Interviewers receive extensive project-specific training, and work is closely monitored in the field to ensure adherence to protocols. Keep-in-touch mailings are sent between waves, and office tracking, including the use of administrative data, takes place for known movers. Non-responders are issued to the field at subsequent waves, unless they are long-term or permanent refusals or untraced.

Despite this, and in common with all major longitudinal studies, the achieved sample size has reduced over time; in particular, there was a sizeable drop in the sample size between wave 5 and wave 6 (Fitzsimons, 2017). At wave 5, 13,287 families were interviewed, which is 69% of the total sample and 81% of cases issued to the field. At wave 6, 11,726 families were interviewed, which is 61% of the total sample and 76% of cases issued to the field. The lower response rate at wave 6 was primarily due to an increase in refusals, which may be related to the longer interview at wave 6 and the age of the cohort member.

For this paper, we examine two distinct developments in fieldwork procedures – electronic contact sheets and case prioritisation – where a change has occurred between wave 5 and wave 6, and utilise this wave-on-wave change to evaluate the effectiveness of these fieldwork interventions. We recognise that as the interventions were implemented at different time points, rather than experimentally at the same wave, this evaluative approach has limitations. Additionally, the two interventions are not independent of each other. Nevertheless, we argue that our approach to evaluating these interventions is reasonable and appropriate. Although there were differences in the study design between waves, the fieldwork design and procedures were highly stable. The timing and duration of fieldwork, as well as the fieldwork procedures, were the same at each wave. The fieldwork was conducted by the same survey organisation, with a relatively high degree of interviewer continuity. The composition of cases issued to the field was similar. The main differences between waves were that the interview was significantly longer at wave 6 than wave 5 and the approach to engaging study members changed, reflecting the age of the cohort member. However, as noted above, we would expect that these changes would influence co-operation rates, rather than contact rates. We therefore argue that, despite the limitations of our evaluative approach, our findings are informative and potentially of interest and utility for other studies.

The MCS uses a case prioritisation approach in which cases with low contact propensity are given a longer fieldwork period in order to maximise the time available for locating and contacting them. This approach was adopted for the first time at wave 5, and has been used at all subsequent waves. We compare the effectiveness of two approaches to identifying low contact propensity cases: response propensity models, used at wave 5 in 2012, and prior wave outcomes only, used at wave 6 in 2015. The use of response propensity models was a major innovation reflecting developments in the research literature and, to our knowledge, was the first time that a modelling approach had been used to inform fieldwork practice on a major longitudinal survey in the UK. Our main focus in this part of the paper is to compare the effectiveness of different strategies for identifying low contact propensity cases, rather than to evaluate the effectiveness of case prioritisation for minimising non-response, as the prioritisation was the same at each wave.

The MCS uses an electronic contact sheet (ECS) to monitor fieldwork and collect real-time para-data on interviewer call patterns and tracking activities. The ECS was introduced at wave 6 of the study in 2015 and was subsequently used at wave 7 in 2018. It was a significant innovation, and a major development in the technical infrastructure for sample management and fieldwork monitoring within the fieldwork agency, which has since been rolled out to other face-to-face projects. The aim of the ECS was to provide real-time para-data and to improve cost-effectiveness and interviewer compliance with fieldwork procedures. In common with many high-quality longitudinal surveys, interviewers on MCS are required to follow extensive and complex contact and tracking procedures to minimise non-contact and non-location, and there is potential for mistakes or failure to follow procedures correctly. We compare overall non-contact rates and interviewer compliance with contact protocols at wave 6 with those at wave 5 to evaluate the impact of the ECS.

3. Case prioritisation for low contact propensity cases

3.1 Modelling contact propensities at wave 5

One of the challenges of targeting fieldwork interventions to minimise non-response in longitudinal surveys is accurately identifying which cases to target. There is considerable evidence in the literature that demographic characteristics of sample members are strong predictors of non-response, as are survey-related factors such as prior wave participation (e.g. Groves and Couper, 1998; Watson and Wooden, 2009). As the wave 5 contact outcome had not yet been observed when the model was built, we used the wave 4 contact outcome as the dependent variable in our contact propensity model. The definition of non-contact used in this model, and in this section of the paper, includes cases who were not located as well as those who were located but not contacted. Our preferred model used household information from previous surveys and the history of past survey contacts as predictor variables; this combination of the two types of information gave better predictive power than other combinations. As advocated by Plewis, Ketende and Calderwood (2012), we used receiver operating characteristic (ROC) curves to assess the accuracy of different response propensity models. It was clear from the contact propensity model (see Appendix) that the impact of past contacts on the likelihood of contact is strongly related to the recency of the contact event, with contact at the most recent wave being the strongest indicator of contact at the next wave. On this basis, we might expect the wave 4 contact outcome to be the most important predictor of contact at wave 5, but it could not be included in these predictions because it was the dependent variable. This is clearly a limitation of the modelling approach.
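ROC curves summarise how well a set of predicted propensities separates cases that were and were not contacted. The Python sketch below computes the area under the ROC curve (AUC) for two candidate models, using the standard equivalence between the AUC and the Mann-Whitney statistic. The function name, the outcomes and the predicted risks are hypothetical illustrations, not the MCS model or its results; scores are oriented so that a higher value means a higher predicted risk of non-contact (i.e. one minus the contact propensity).

```python
from typing import Sequence


def roc_auc(risk: Sequence[float], non_contact: Sequence[int]) -> float:
    """Area under the ROC curve, computed as the Mann-Whitney probability.

    risk: predicted risk of non-contact for each case (e.g. 1 - contact propensity).
    non_contact: observed outcome, 1 = non-contact (incl. not located), 0 = contacted.
    The AUC is the probability that a randomly chosen non-contact case has a higher
    predicted risk than a randomly chosen contacted case; ties count as one half.
    """
    if len(risk) != len(non_contact):
        raise ValueError("risk and non_contact must have the same length")
    pos = [r for r, y in zip(risk, non_contact) if y == 1]
    neg = [r for r, y in zip(risk, non_contact) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one non-contact and one contacted case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical wave 4 outcomes and predicted risks from two candidate models.
outcome = [1, 0, 0, 1, 0, 0]
model_a = [0.9, 0.2, 0.3, 0.4, 0.1, 0.5]
model_b = [0.6, 0.6, 0.2, 0.3, 0.1, 0.4]
print(roc_auc(model_a, outcome))  # 0.875
print(roc_auc(model_b, outcome))  # 0.6875
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation, so on these toy data the first model discriminates better. The pairwise loop is quadratic in sample size; for a sample the size of MCS a rank-based or library implementation would be preferable.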

3.2. Prioritisation of cases with low contact propensity at wave 5

Fieldwork for the fifth wave of the MCS ran from January to July 2012 in England and Wales[1]. For low contact propensity cases, it was felt that a longer fieldwork period would be beneficial, to maximise the time available for interviewers to make phone calls and face-to-face visits to contact and locate families, and for tracing activities to be carried out. We therefore decided to allocate all of the England and Wales low contact propensity cases to the first fieldwork batch, beginning in January 2012.

No additional procedures (e.g. higher minimum call requirements) were put in place for these cases; the intention of the intervention was simply to maximise time in the field. Based on the distribution of the contact probabilities estimated by the model, our intention was that the bottom 10% of cases should be prioritised (hereafter referred to as ‘priority cases’). Above this point the predicted contact propensities flattened out (see Chart 1 below) and were broadly similar across the rest of the range, so this seemed an appropriate cut-off point.
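The cut-off rule amounts to ranking cases by predicted contact propensity and flagging the lowest share. A minimal Python sketch follows, assuming propensities are held in a dictionary keyed by a hypothetical case identifier and that the number of flagged cases is the share of the sample rounded to the nearest whole number; the source does not say how rounding or ties were handled.

```python
from typing import Dict, List


def select_priority_cases(contact_propensity: Dict[str, float], share: float = 0.10) -> List[str]:
    """Return the IDs of the `share` of cases with the lowest predicted contact propensity.

    Ties keep dictionary insertion order (sorted() is stable). At least one case is
    returned whenever the input is non-empty.
    """
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    if not contact_propensity:
        return []
    ranked = sorted(contact_propensity, key=contact_propensity.get)  # lowest propensity first
    k = max(1, round(share * len(ranked)))
    return ranked[:k]


# Hypothetical propensities for 20 cases: c00 is the hardest to contact.
propensities = {f"c{i:02d}": 0.50 + 0.02 * i for i in range(20)}
print(select_priority_cases(propensities, 0.10))  # ['c00', 'c01']
print(select_priority_cases(propensities, 0.05))  # ['c00']
```

Passing `share=0.05` mirrors the tighter cut-off that was applied in practice at wave 5 once known movers had also been prioritised.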

Chart 1: Distribution of non-contact cases

However, as we also wanted to prioritise families known to have moved and those for whom a new address had recently been found, we were only able to prioritise the bottom 5% of cases in the response propensity distribution. We exclude these additional mover cases from our analysis. In order to preserve fieldwork efficiency, non-priority cases were assigned to clusters, which were randomly assigned to the first or second batch of fieldwork. Priority cases were then added to their nearest batch 1 assignment.
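The batch allocation can be sketched as two steps: a random split of non-priority clusters between the two batches, followed by attaching each priority case to the nearest batch 1 assignment (modelled here as a cluster). This Python sketch assumes each cluster and case has a hypothetical (easting, northing) grid reference, that "nearest" means straight-line distance, and that the random split is an even one; none of these details are specified in the source.

```python
import math
import random
from typing import Dict, Tuple

Point = Tuple[float, float]  # hypothetical (easting, northing) grid reference


def allocate_batches(clusters: Dict[str, Point], seed: int = 2012) -> Dict[str, int]:
    """Randomly split clusters of non-priority cases evenly between batch 1 and batch 2."""
    ids = sorted(clusters)
    random.Random(seed).shuffle(ids)
    n_batch1 = (len(ids) + 1) // 2  # batch 1 takes the extra cluster if the count is odd
    return {cid: (1 if i < n_batch1 else 2) for i, cid in enumerate(ids)}


def attach_priority_cases(priority: Dict[str, Point], clusters: Dict[str, Point],
                          batch: Dict[str, int]) -> Dict[str, str]:
    """Attach each priority case to the nearest cluster that was assigned to batch 1."""
    batch1 = [cid for cid, b in batch.items() if b == 1]
    if not batch1:
        raise ValueError("no batch 1 clusters available")
    return {case: min(batch1, key=lambda cid: math.dist(loc, clusters[cid]))
            for case, loc in priority.items()}


clusters = {"K1": (0.0, 0.0), "K2": (10.0, 0.0), "K3": (0.0, 10.0)}
print(sorted(allocate_batches(clusters).values()))  # [1, 1, 2]

# With a fixed allocation, each priority case goes to the closest batch 1 cluster.
fixed = {"K1": 1, "K2": 2, "K3": 1}
print(attach_priority_cases({"p1": (9.0, 1.0), "p2": (1.0, 8.0)}, clusters, fixed))
# {'p1': 'K1', 'p2': 'K3'}
```

Randomising clusters rather than individual cases keeps each interviewer's workload geographically compact, which is the efficiency concern the text describes.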

3.3 Prioritisation of cases with low contact propensity at wave 6

At wave 6 of the study we changed our approach to identifying cases with low contact propensity. Rather than using statistical modelling, we used prior wave outcomes: cases that were not contacted at one or both of the previous two waves were assigned as priority cases. This definition included non-location outcomes as well as cases that were located but not contacted. The fieldwork design was otherwise the same as at wave 5, and the same fieldwork intervention (i.e. prioritisation of difficult-to-contact cases) was used.
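The wave 6 rule needs no model at all. A minimal Python sketch follows, assuming each case's history is stored as a mapping from wave number to a hypothetical outcome code, with both codes in `NON_CONTACT_OUTCOMES` counting as non-contact (matching the definition above, which includes non-location).

```python
from typing import Mapping

# Hypothetical outcome codes; non-contact includes cases that were not located.
NON_CONTACT_OUTCOMES = {"not_located", "located_not_contacted"}


def is_priority(history: Mapping[int, str], current_wave: int) -> bool:
    """Flag a case if it was a non-contact at one or both of the two previous waves.

    Waves missing from `history` (e.g. the case was not issued) count as not non-contact.
    """
    previous = (current_wave - 1, current_wave - 2)
    return any(history.get(w) in NON_CONTACT_OUTCOMES for w in previous)


print(is_priority({4: "interview", 5: "not_located"}, current_wave=6))            # True
print(is_priority({4: "located_not_contacted", 5: "refusal"}, current_wave=6))    # True
print(is_priority({4: "interview", 5: "refusal"}, current_wave=6))                # False
```

Refusals are not flagged by this rule, consistent with an intervention aimed at contact rather than co-operation.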

3.4 Comparison of different approaches to identifying low contact propensity cases

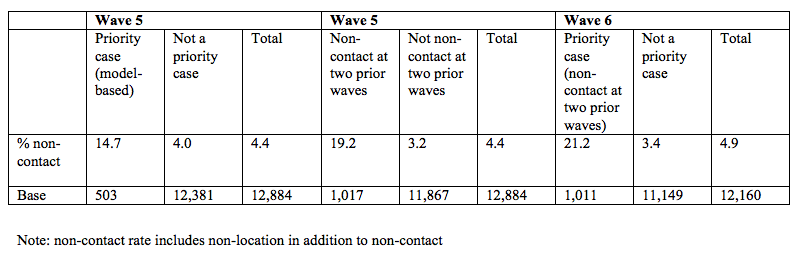

Table 1 shows the overall proportion of cases with non-contact outcomes (including non-location) at wave 5 and wave 6 respectively, by whether or not they were priority cases. We evaluate the effectiveness of the different approaches to targeting low contact propensity cases by comparing the proportion of priority cases with non-contact outcomes. We argue that a higher proportion of non-contact outcomes among priority cases indicates a more effective targeting approach.

Table 1: Non-contact rate by whether or not priority case

Table 1 shows that, as expected, at both waves priority cases were much more likely to have a non-contact outcome than non-priority cases (p<0.01). At wave 5 we also observed that cases with a non-contact outcome at wave 4 and/or wave 3 had a higher non-contact rate (19.2%) than priority cases selected via the modelling approach (14.7%). This indicates that the simpler approach using prior wave outcomes only identifies cases likely to be non-contacts at wave 5 more accurately than the approach using response propensity modelling. We also calculated the sensitivity and specificity of each approach. The approach using prior wave outcomes had a much higher sensitivity (34.3% vs. 13.0%), meaning that it correctly identified a higher proportion of actual non-contacts (true positives) and so produced fewer false negatives, at the cost of only a slightly lower specificity (93.3% vs. 96.5%), i.e. a slightly lower proportion of contacted cases that were correctly not targeted (true negatives). Based on this evidence, at wave 6 we adopted the simpler approach of identifying priority cases based on prior wave outcomes only.
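Sensitivity and specificity follow directly from cross-tabulating whether a case was targeted against whether it turned out to be a non-contact. The Python sketch below uses a hypothetical ten-case example, not MCS data; sensitivity is the share of actual non-contacts that were targeted, and specificity is the share of contacted cases that were not targeted.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(targeted: Sequence[bool], non_contact: Sequence[bool]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) of a targeting rule.

    sensitivity = TP / (TP + FN): actual non-contacts that were targeted.
    specificity = TN / (TN + FP): contacted cases that were not targeted.
    """
    if len(targeted) != len(non_contact):
        raise ValueError("inputs must have the same length")
    pairs = list(zip(targeted, non_contact))
    tp = sum(1 for t, n in pairs if t and n)
    fn = sum(1 for t, n in pairs if not t and n)
    tn = sum(1 for t, n in pairs if not t and not n)
    fp = sum(1 for t, n in pairs if t and not n)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical example: 3 of 10 cases are non-contacts; the rule targets 3 cases.
targeted = [True, True, False, False, False, False, False, False, False, True]
non_contact = [True, False, True, False, False, False, False, False, False, True]
sens, spec = sensitivity_specificity(targeted, non_contact)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")  # sensitivity=0.667, specificity=0.857
```

A rule that targets more cases will tend to raise sensitivity and lower specificity, which is the trade-off visible in the wave 5 comparison.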

However, we are aware that our conclusion that this simpler approach is more effective is subject to a number of caveats. It is likely that at wave 5 the fieldwork intervention (i.e. the prioritisation) led to a lower non-contact rate among the priority cases than among prior wave non-contacts who were not prioritised. We should also note that some of the wave 4 and/or wave 3 non-contact cases (n=244) were also classified as priority cases, so these groups are not independent. Nevertheless, among priority cases that were not prior wave non-contacts, the non-contact rate was lower (8.9%), which provides further indicative evidence that the prior wave outcome is more informative for prediction than the modelling approach. We are also able to compare priority cases identified by different methods, and subject to the same intervention, at the two waves of the study. Table 1 also shows that a higher proportion of priority cases were non-contacts at wave 6 (21.2%) than at wave 5 (14.7%), although the overall non-contact rate was also higher at wave 6 than at wave 5. This provides further evidence that the simpler method of identifying cases with low contact propensity used at wave 6 was more effective than the modelling approach used at wave 5. However, this comparison also has limitations, as the priority cases at each wave are not independent of each other, i.e. some cases were priority cases at both waves.

3.5 Summary and conclusions on case prioritisation

We conclude that the simpler approach to identifying cases which are likely to have low contact propensity, based on prior wave outcomes only, is more effective than the more complex approach based on response propensity models. The major disadvantage of the modelling approach appears to be that, because we used the wave 4 outcome as the dependent variable, we could not also use it as a predictor, so the model misses the considerable predictive power of the prior wave outcome. We demonstrate the value of using prior wave data in longitudinal studies to target fieldwork interventions. However, we also show that relatively simple ways of identifying cases (i.e. using the prior wave outcome) can be effective, and that complex response propensity models may not always be necessary or beneficial.

4. Electronic contact sheets to help ensure adherence to contact procedures

4.1 Contact procedures

In order to minimise non-response due to non-contact, interviewers are required to make extensive efforts to contact and locate sample members before recording an unproductive field outcome. These requirements cover the number and timing of call attempts, the mode of the calls (face-to-face or by telephone) and the period over which calls should be made. In relation to contact procedures, at both wave 5 and wave 6, interviewers were expected to meet the following call requirements before they could return a case as a non-contact:

- Make a minimum of 8 face-to-face calls

- Make at least 4 of those face-to-face calls at the weekend or in the evening

- Make at least 5 phone calls

- Allow at least 2 weeks between the first and last call
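The four requirements above can be checked mechanically against a case's call log, which is the kind of check an electronic system makes possible. The Python sketch below is illustrative only: the `Call` record, mode codes and requirement keys are hypothetical, "evening" is assumed to mean 18:00 or later (the source does not define it), the weekend is taken as Saturday and Sunday, and the two-week span is assumed to be measured between the first and last call of any mode.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List

EVENING_START_HOUR = 18  # assumption: the source does not define "evening"


@dataclass
class Call:
    when: datetime
    mode: str  # "face_to_face" or "phone" (hypothetical codes)


def is_evening_or_weekend(ts: datetime) -> bool:
    """Saturday/Sunday (weekday 5 or 6) at any time, or any day from EVENING_START_HOUR."""
    return ts.weekday() >= 5 or ts.hour >= EVENING_START_HOUR


def check_call_requirements(calls: List[Call]) -> Dict[str, bool]:
    """Check a case's call log against the four minimum call requirements."""
    f2f = [c for c in calls if c.mode == "face_to_face"]
    phone = [c for c in calls if c.mode == "phone"]
    times = sorted(c.when for c in calls)
    span = times[-1] - times[0] if times else timedelta(0)
    return {
        "min_8_face_to_face": len(f2f) >= 8,
        "min_4_evening_weekend": sum(is_evening_or_weekend(c.when) for c in f2f) >= 4,
        "min_5_phone": len(phone) >= 5,
        "min_2_week_span": span >= timedelta(weeks=2),
    }


# Eight daytime visits on consecutive days from Monday 5 January 2015, then five evening phone calls.
calls = [Call(datetime(2015, 1, 5, 10) + timedelta(days=i), "face_to_face") for i in range(8)]
calls += [Call(datetime(2015, 1, 13 + i, 19), "phone") for i in range(5)]
print(check_call_requirements(calls))
# {'min_8_face_to_face': True, 'min_4_evening_weekend': False, 'min_5_phone': True, 'min_2_week_span': False}
```

This hypothetical case fails two requirements: only two of the face-to-face visits fall at a weekend, and the calls span less than two weeks.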

For this paper we focus on compliance with contact procedures; however, there are also tracking protocols that interviewers must follow to try to find sample members who are not located at the issued address, in order to minimise non-response due to non-location.

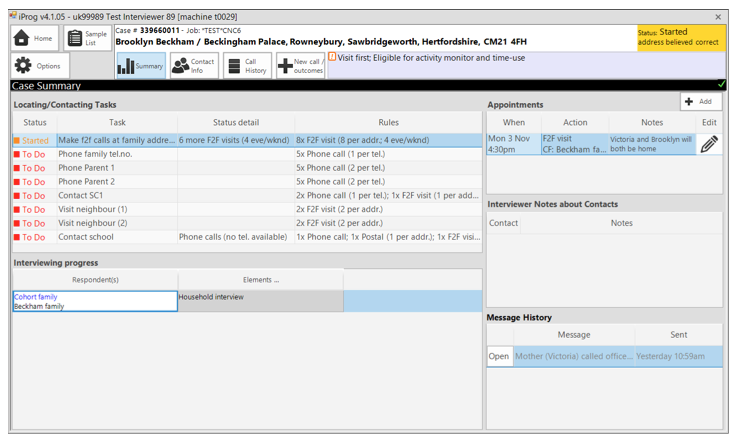

4.2 The Electronic Contact Sheet (ECS)

It is clearly important that interviewers follow fieldwork procedures, and extensive resources are generally used in face-to-face surveys to monitor interviewer compliance with them. We examine the introduction of an electronic contact sheet (ECS) at wave 6 to replace paper call records, and evaluate its impact on interviewer compliance and on non-contact rates. At wave 5, interviewers logged all of their calls on a paper contact sheet in the field and then entered them electronically into a more basic system, called iprogress, when they were at home. At wave 6, interviewers were required to log calls electronically in real time on the ECS while they were in the field, and were issued with new lightweight laptops that could be used in tablet format to facilitate this. Whenever interviewers logged a call, they were prompted to enter the time, date and mode of the call, whom they spoke to and any notes about the call. The ECS incorporates consistency checks within the call logs, reminds interviewers of the actions they still have to carry out to meet the contact and tracking requirements, and requires interviewers to give an explanation if they return a case with an unproductive outcome without having fulfilled all of the requirements. These explanations are reviewed by the field team and, if they are not satisfactory, the case may be returned to the interviewer for further work. The iprogress system used at wave 5 had none of these features, so interviewers were less aware when they had not completed all the required tasks, and it was harder for the field office to monitor compliance.
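The return rule described above can be sketched as a simple gate. This Python sketch is an illustration under assumptions, not the ECS implementation: the function name, rule names and return values are hypothetical, and the field team's judgement on whether an explanation is satisfactory is represented only as a referral.

```python
from typing import Dict, Optional


def review_unproductive_return(requirements_met: Dict[str, bool],
                               explanation: Optional[str] = None) -> str:
    """Decide what happens when an interviewer returns a case as unproductive.

    requirements_met maps each contact/tracking rule to whether it has been fulfilled.
    """
    outstanding = [rule for rule, met in requirements_met.items() if not met]
    if not outstanding:
        return "accepted"
    if explanation is None or not explanation.strip():
        # The return is blocked until the interviewer explains the outstanding rules.
        return "explanation required: " + ", ".join(outstanding)
    # An explanation was given: the field team reviews it and may send the case back.
    return "referred to field team"


print(review_unproductive_return({"min_8_face_to_face": True, "min_5_phone": True}))
# accepted
print(review_unproductive_return({"min_8_face_to_face": True, "min_5_phone": False}))
# explanation required: min_5_phone
print(review_unproductive_return({"min_5_phone": False}, "Family reported to have emigrated"))
# referred to field team
```

Listing the outstanding rules in the message mirrors the reminder function of the ECS: the interviewer sees exactly which requirements remain before the return is accepted.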

A screen shot of the ECS is shown below:

This shows the ‘case summary screen’. The top-left section of the screen (‘locating and contacting tasks’) lists all the call requirements for making contact and tracking (‘rules’), indicates the status of each (whether it is outstanding, started or completed) and shows what remains to be done (‘status detail’). This gives interviewers and field staff a clear record of what has been done and what else is required. Calls are entered on a different screen, which includes consistency checks on the information entered by interviewers, and an outcome must be recorded for each call. The case summary screen is then updated and the number of outstanding calls is reduced accordingly.
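The status column on the case summary screen can be thought of as derived from how much activity has been logged against each rule. In this Python sketch the rule names, the expression of the two-week rule as 14 elapsed days, and the function names are assumptions for illustration; the source does not describe how the ECS computes its statuses.

```python
from typing import Dict, Tuple

# Hypothetical rule names; targets follow the minimum call requirements in section 4.1.
REQUIRED = {
    "face-to-face calls": 8,
    "evening/weekend face-to-face calls": 4,
    "phone calls": 5,
    "days between first and last call": 14,
}


def rule_status(done: int, required: int) -> Tuple[str, str]:
    """Return (status, status detail) for one rule."""
    remaining = max(required - done, 0)
    if remaining == 0:
        return "completed", "none remaining"
    if done == 0:
        return "outstanding", f"{remaining} remaining"
    return "started", f"{remaining} remaining"


def case_summary(done: Dict[str, int],
                 required: Dict[str, int] = REQUIRED) -> Dict[str, Tuple[str, str]]:
    """Status of every rule for one case; rules with no logged activity count as 0 done."""
    return {rule: rule_status(done.get(rule, 0), target) for rule, target in required.items()}


for rule, (status, detail) in case_summary({"face-to-face calls": 3, "phone calls": 5}).items():
    print(f"{rule}: {status} ({detail})")
# face-to-face calls: started (5 remaining)
# evening/weekend face-to-face calls: outstanding (4 remaining)
# phone calls: completed (none remaining)
# days between first and last call: outstanding (14 remaining)
```

Recomputing the summary after every logged call is what makes the "number of outstanding calls" fall automatically, as described for the ECS.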

The ECS had other advantages for field management. It allowed interviewers to access easily and securely a range of information about the household (e.g. contact information, information about household members, previous participation, special needs requirements of cohort members and sensitive notes made by previous interviewers at interview), to manage appointments electronically and to receive up-to-date information from head office.

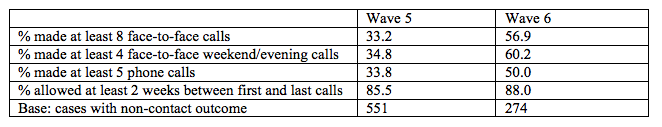

4.3 Comparing compliance with contact procedures at wave 5 and wave 6

As a result of the introduction of the ECS, we anticipated that interviewer compliance with contact procedures would be higher at wave 6 than at wave 5. We restrict our analysis to compliance at first issue, as this covers the vast majority of the work carried out by interviewers and the fieldwork protocols at first issue were the same at each wave. As only a selected subset of cases is re-issued, restricting the analysis to first issue calls also avoids this potential confounder and maximises the sample size available for analysis. Moreover, fieldwork protocols at re-issue differ from those at first issue, and re-issue interviewers are generally more experienced and higher performing.

We examine compliance with contact procedures among cases returned by the interviewer with a non-contact outcome. We restrict our analysis to only those non-contact outcomes where interviewers would have been expected to meet all of the call requirements. We would expect to observe high compliance rates for these cases as the interviewers were required to complete the call requirements before returning the case with a non-contact outcome.

Table 2: Interviewer compliance with call procedures

Table 2 shows, for wave 5 and wave 6, the proportion of cases with a non-contact outcome for which each of the call requirements was met, which we use as our compliance measures. On all of our measures, the proportion of non-contact cases in which interviewers complied with the contact procedures and met the minimum call requirements was higher at wave 6 than at wave 5. This increase was considerable and statistically significant (p<=0.01) for all of the measures except the final one (at least 2 weeks between first and last calls). The lack of increase on this measure was not unexpected, as compliance on it was very high at both waves, probably because MCS has a long fieldwork period. The largest increase in compliance was in relation to face-to-face calls. For example, around three-fifths (60.2%) of cases met the requirement for at least four face-to-face weekend/evening calls at wave 6, compared with around one-third (34.8%) at wave 5.

However, it is notable that compliance is still much lower than 100% at wave 6, and was very low at wave 5. In part this is due to the fact that we only look at compliance at first issue. Most of these non-contact cases would have been reissued to another interviewer for further call attempts, which would have increased the proportion of cases for which the call requirements were met. It is also likely that there is some under-reporting of calls by interviewers. Additionally, there are a number of other reasons why the call requirements may not be fully met including lack of time in the field, interviewer or respondent sickness or unavailability, case reallocation and hostility towards the interviewer.

4.4 Comparing non-contact rates at wave 5 and wave 6

We would expect higher compliance with contact procedures at wave 6 to lead to a lower overall non-contact rate[2]. This was observed, with the non-contact rate at first issue falling from 3.4% at wave 5 to 1.8% at wave 6, a statistically significant change (p<=0.01). We are not able to conclude definitively that the increase in compliance with contact procedures is the reason for the reduction in the non-contact rate. However, this evidence is strongly indicative of a relationship between improving compliance with fieldwork procedures and reductions in non-contact.
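The text reports the fall in the first-issue non-contact rate as significant at p<=0.01 without naming the test used. One standard choice for comparing two proportions is the pooled two-proportion z-test, sketched here in Python. The case counts are hypothetical, chosen only to reproduce the published rates, since the wave 5 and wave 6 denominators are not given in this section; and because many of the same families appear at both waves, the two samples are not strictly independent, so the result should be read as an approximation.

```python
import math
from typing import Tuple


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("pooled proportion is 0 or 1; the test is undefined")
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical counts: 15,000 first-issue cases per wave, giving 3.4% vs 1.8% non-contact.
z, p = two_proportion_z_test(510, 15_000, 270, 15_000)
print(f"z = {z:.2f}, p = {p:.1e}")
```

With samples of this size, even a difference of 1.6 percentage points is far beyond the 1% significance threshold (|z| > 2.576).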

4.5 Summary and conclusions on electronic sample management systems

We have shown that at wave 6 interviewer compliance rates were higher and non-contact rates were lower than at wave 5, and have argued that the introduction of the ECS is likely to have led to this improvement in fieldwork quality and reduction in non-response. It is possible that the ECS simply led to better recording of call attempts by interviewers. However, we believe that the improved interviewer monitoring and reminders within the ECS meant that the call requirements were followed more thoroughly and enforced more robustly, and that the between-wave increase reflects a real change in actual compliance rather than only in recorded compliance. The lower non-contact rate at wave 6 also provides indicative evidence of a real change in actual compliance. From a fieldwork management perspective, even if the change were only in recorded compliance, this would still be a major benefit of the ECS.

As well as improving compliance, the ECS provides several efficiency and cost benefits at both the survey project and organisational level. It has led to greater cost-effectiveness, in particular because it facilitates greater centralisation and automation of fieldwork monitoring. The ECS also produced a number of efficiencies in fieldwork management and monitoring: paper contact sheets no longer needed to be booked in and processed, there were considerable savings on printing and postage, and information security improved. Less staff time was needed to contact interviewers individually about their fieldwork progress and to check their compliance with fieldwork procedures. Because field staff can monitor interviewer performance more effectively, problems can be identified more quickly and forecasts of response and coverage rates are more accurate. Interviewers were involved in the design of the ECS, and all interviewers were given additional training and support on how to use it. It has proved very popular with interviewers, who value having a single, centralised and flexible system to help them with case management. The ECS has now been rolled out to other complex face-to-face surveys within the survey organisation, providing additional organisational-level benefits, including standardised para-data and reporting across projects, and improved forecasting and resource allocation.

5. Summary and conclusions

This paper provides new evidence about the effectiveness of fieldwork procedures and monitoring for improving efficiency and minimising non-response in the Millennium Cohort Study (MCS), a large-scale longitudinal survey in the UK. Specifically, we evaluate two developments in fieldwork procedures and monitoring designed to maximise response: case prioritisation for low-contact-propensity cases and electronic contact sheets to help ensure adherence to contact and tracking procedures. We use a wave-on-wave change, between wave 5 and wave 6, to evaluate the effectiveness of these fieldwork interventions. As these interventions were implemented at different time points, rather than experimentally within the same wave, our findings should be interpreted in the light of this limitation, which we acknowledge and discuss throughout. Nevertheless, with this limitation in mind, we argue that our approach to evaluating these interventions is an appropriate and reasonable one, and that our findings are informative, make a valuable contribution to the research literature and are of interest and use to other studies.

We use the longitudinal nature of the MCS to draw on prior wave data in order to identify cases at highest risk of non-response and thereby target appropriate fieldwork interventions designed to minimise non-response. In the first part of this paper, we demonstrate the value of using prior wave data in longitudinal studies to target fieldwork interventions, and compare two approaches used on MCS for identifying low-contact-propensity cases: response propensity models, used at wave 5 in 2012, and prior wave outcomes only, used at wave 6 in 2015. We conclude that the simpler approach, based on prior wave outcomes only, is more effective at identifying cases likely to have low contact propensity than the more complex approach based on response propensity models. Our focus is on evaluating different approaches to case prioritisation, rather than on evaluating the effectiveness of the fieldwork intervention itself. Our findings make a valuable contribution to the important and growing literature on targeted and adaptive designs and the use of case prioritisation (e.g. Schouten, Peytchev and Wagner, 2017), which has a strong focus on model-based approaches. We demonstrate that simpler approaches to targeting low-response-propensity cases can be effective, and argue that complex approaches to allocating different fieldwork interventions are not always needed when implementing targeted or adaptive designs in surveys.
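To illustrate why an outcome-based rule is simpler to operate than a propensity model, the sketch below flags cases for prioritisation purely from their recorded outcomes at recent prior waves, with no model fitting or covariates. It is a hypothetical sketch, not the MCS rule: the outcome codes in `LOW_CONTACT_OUTCOMES`, the function name `prioritise` and the `lookback` parameter are assumptions introduced for illustration, since the exact outcomes used at wave 6 are not specified in this section.

```python
from typing import Dict, List

# Hypothetical outcome codes; not the MCS coding frame.
LOW_CONTACT_OUTCOMES = frozenset({"non_contact", "non_location"})


def prioritise(history: Dict[str, List[str]], lookback: int = 1) -> List[str]:
    """Return sorted IDs of cases whose last `lookback` prior-wave outcomes
    include a low-contact outcome.

    history maps a case ID to its prior-wave outcomes in chronological order.
    """
    flagged = []
    for case_id, outcomes in history.items():
        # Only the most recent `lookback` waves are considered.
        recent = outcomes[-lookback:] if lookback > 0 else []
        if any(outcome in LOW_CONTACT_OUTCOMES for outcome in recent):
            flagged.append(case_id)
    return sorted(flagged)
```

For example, with `history = {"c1": ["productive", "non_contact"], "c2": ["non_contact", "productive"]}`, the default one-wave lookback flags only `c1`, while `lookback=2` flags both. A propensity-model approach would instead fit, for instance, a logistic regression on many prior-wave variables and flag cases below a predicted-probability threshold, which requires model estimation and validation at every wave.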

In the second part of this paper, we examine how effective electronic sample management systems are at improving compliance with fieldwork procedures and cost-effectiveness, and at reducing non-response. Despite the widespread move to electronic sample management systems over the last 5-10 years, and considerable financial investment by survey organisations in these systems, there is relatively little published evidence about how effective these systems are at delivering quality improvements to face-to-face fieldwork. We use the introduction of electronic contact sheets (ECS) on MCS at wave 6 to compare overall non-contact rates and interviewer compliance with contact protocols against wave 5, and thereby to evaluate the impact of the ECS. We show that at wave 6 interviewer compliance rates were higher and non-contact rates were lower than at wave 5, and argue that the introduction of the ECS is likely to have led to this improvement in fieldwork quality and reduction in non-response. Our findings make a valuable contribution to the important and growing literature on the use of para-data for fieldwork monitoring (e.g. Kreuter, 2013) and provide a case-study example of the design and implementation of an electronic sample management system in a major fieldwork organisation.

Overall, our paper provides new evidence in relation to response maximisation in surveys, evaluating innovative developments on a large-scale, complex face-to-face longitudinal survey in the UK to minimise non-response due to non-contact. Our findings will be of interest and utility to survey practitioners and organisations considering similar interventions and innovations, and make a valuable contribution to the research literature.

[1] Due to other constraints in fieldwork allocations, it was not possible to implement this prioritisation in Scotland and Northern Ireland.

[2] Note: non-contact rate excludes non-location

Appendix: Response propensity modelling

References

- Couper, M.P. and Wagner, J. (2011). Using Paradata and Responsive Design to Manage Survey Nonresponse. Proceedings of the 58th World Statistical Congress, Dublin, 542-548. http://2011.isiproceedings.org/papers/450080.pdf

- Couper, M.P. and Ofstedal, M.B. (2009). Keeping in Contact with Mobile Sample Members. In Lynn, P. (ed.), Methodology of Longitudinal Surveys, Chichester: John Wiley & Sons, Inc, pp. 183-203.

- Fitzsimons, E. (2017). Millennium Cohort Study. Sixth Survey 2015-2016. User Guide. Centre for Longitudinal Studies.

- Groves, R.M. and Heeringa, S.G. (2006). Responsive Design for Household Surveys: Tools for Actively Controlling Survey Errors and Costs. Journal of the Royal Statistical Society, Series A, 169, 3, pp.439-459. DOI: 10.1111/j.1467-985X.2006.00423.x

- Groves, R.M. (2006). Nonresponse Rates and Nonresponse Bias in Household Surveys. Public Opinion Quarterly, 70, pp. 646-675. DOI: 10.1093/poq/nfl033

- Groves, R.M. and Couper, M.P. (1998). Nonresponse in Household Interview Surveys. Wiley: New York. DOI: 10.1002/9781118490082.index

- Gummer, T. and Blumenstiel, J.E. (2018). Experimental Evidence on Reducing Nonresponse Bias through Case Prioritization: The Allocation of Interviewers. Field Methods, 30(2), pp.124-139.

- Ipsos MORI (2017). Millennium Cohort Study Sixth Sweep: Technical Report. Centre for Longitudinal Studies.

- Ipsos MORI (2013). Millennium Cohort Study Fifth Sweep: Technical Report. Centre for Longitudinal Studies.

- Kaminska, O. and Lynn, P. (2017). The implications of alternative allocation criteria in adaptive design of panel surveys. Journal of Official Statistics, 33, pp.781-800.

- Kreuter, F. (ed.) (2013). Improving Surveys with Paradata: Analytic Uses of Process Information. Wiley: New Jersey. DOI: 10.1002/9781118596869

- Lepkowski, J.M. and Couper, M.P. (2002). Nonresponse in the second wave of longitudinal household surveys. In Groves, R.M., Dillman, D.A., Eltinge, J.L., and Little, R.J.A. (eds.). Survey Nonresponse, New York: John Wiley and Sons. pp. 259-272.

- Luiten, A. and Schouten, B. (2013). Tailored fieldwork design to increase representative household survey response: an experiment in the Survey of Consumer Satisfaction. Journal of the Royal Statistical Society, Series A, 176(1), pp. 169-189.

- Lynn, P. (2017). From standardised to targeted survey procedures for tackling non-response and attrition. Survey Research Methods, 11, pp.93-103.

- Lynn, P. (2016). Targeted appeals for participation in letters to panel survey members. Public Opinion Quarterly, 80, 771-782.

- Lynn, P. (2015). Targeted response inducement strategies on longitudinal surveys. In Engel, U., Jann, B., Lynn, P., Scherpenzeel, A. and Sturgis, P. (eds.). Improving Survey Methods: Lessons from Recent Research. Routledge.

- Peytchev, A., Riley, S., Rosen, J., Murphy, J. and Lindblad, M. (2010). Reduction of Nonresponse bias through Case Prioritisation. Survey Research Methods, 4, 1, pp.21-29.

- Plewis, I., Ketende, S. and Calderwood, L. (2012). Assessing the accuracy of response propensity models in longitudinal studies. Survey Methodology, 38(2).

- Rosen, J.A., Murphy, J., Peytchev, A., Holder, T., Dever, J.A., Herget, D.R. and Pratt, D.J. (2014). Prioritizing Low-Propensity Sample Members in a Survey: Implications for Nonresponse Bias. Survey Practice, 7(1).

- Schouten, B., Peytchev, A. and Wagner, J. (2017). Adaptive Survey Design. Chapman and Hall.

- Schouten, B., Calinescu, M. and Luiten, A. (2013). Optimizing quality of response through adaptive survey designs. Survey Methodology, 39 (1).

- Tourangeau, R., Brick, J.M., Lohr, S. and Li, J. (2017). Adaptive and responsive survey designs: a review and assessment. Journal of the Royal Statistical Society, Series A, 180(1), pp.203-223.

- Wagner, J., West, B.T., Kirgis, N., Lepkowski, J.M., Axinn, W.G. and Kruger Ndiaye, S. (2012). Use of paradata in a responsive design framework to manage a field data collection. Journal of Official Statistics, 28, pp. 477-499.

- Watson, N. and Wooden, M. (2009). Identifying factors affecting longitudinal survey response. In Lynn, P. (ed.), Methodology of Longitudinal Surveys, Chichester: Wiley.