Using field monitoring strategies to improve panel sample representativeness: Application during data collection in the Survey of Health, Ageing and Retirement in Europe (SHARE)

Bergmann, M. & Scherpenzeel, A. (2020). Using field monitoring strategies to improve panel sample representativeness: Application during data collection in the Survey of Health, Ageing and Retirement in Europe (SHARE) in Survey Methods: Insights from the Field, Special issue: ‘Fieldwork Monitoring Strategies for Interviewer-Administered Surveys’. Retrieved from https://surveyinsights.org/?p=12720

© the authors 2020. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

The Survey of Health, Ageing and Retirement in Europe (SHARE) is a multidisciplinary and cross-national face-to-face panel study of the process of population ageing. For the sixth wave of data collection, we applied an adaptive/responsive fieldwork design in the German sub-study of SHARE to test the feasibility and effects of implementing targeted monitoring strategies during fieldwork. The central aim of this design was to improve panel sample representativeness by attempting to achieve more equal response probabilities across subgroups. However, our findings show that we only partly met this goal. Although our adaptive design (interviewer bonus incentives for interviews with respondents aged 80 and older) showed some positive effects, very old panelists still participated less than average by the end of fieldwork. Furthermore, our responsive design measure (contact schedule optimization for young, still working respondents) proved difficult to implement under regular fieldwork conditions and was therefore ineffective. Overall, our results are in line with Tourangeau (2015), who argued that respondent characteristics that are suitable for responsive fieldwork measures might in fact be of limited use for true bias reduction.

Keywords

adaptive and responsive survey design, panel sample representativeness, response probability

Acknowledgement

This paper uses data from SHARE Wave 5 and Wave 6 (w5_internal_release_monitoring, w6_internal_release_monitoring; see Börsch-Supan et al., 2013 for methodological details). The SHARE data collection has been primarily funded by the European Commission through FP5 (QLK6-CT-2001-00360), FP6 (SHARE-I3: RII-CT-2006-062193, COMPARE: CIT5-CT-2005-028857, SHARELIFE: CIT4-CT-2006-028812) and FP7 (SHARE-PREP: N°211909, SHARE-LEAP: N°227822, SHARE M4: N°261982). Additional funding from the German Ministry of Education and Research, the Max Planck Society for the Advancement of Science, the U.S. National Institute on Aging (U01_AG09740-13S2, P01_AG005842, P01_AG08291, P30_AG12815, R21_AG025169, Y1-AG-4553-01, IAG_BSR06-11, OGHA_04-064, HHSN271201300071C) and from various national funding sources is gratefully acknowledged (see www.shareproject.org). We would like to thank Frederic Malter and Gregor Sand of the SHARE operations department as well as Johanna Bristle and Felizia Hanemann for their efforts in developing the monitoring system and helping us to use it as the basis for the present study.

Introduction

The Survey of Health, Ageing and Retirement in Europe (SHARE) is a multidisciplinary and cross-national panel study that explores the ageing process of individuals aged 50 and older (see Börsch-Supan, Brandt, et al., 2013). As for all panel surveys, retaining respondents over waves is crucial for SHARE. Panel attrition not only decreases the number of longitudinal observations but can also lead to biased estimates, since some groups of respondents are more likely to drop out than others. SHARE therefore devotes much attention to motivating longitudinal respondents in order to safeguard sample representativeness, for example by implementing incentive schemes for respondents and interviewers, extensive interviewer training and monitoring, as well as tracing and tracking of respondents between waves (Blom & Schröder, 2011; Börsch-Supan, Krieger, & Schröder, 2013; Kneip, Malter, & Sand, 2015; Malter, 2013). The present study continues these efforts and extends them to recent developments in survey methodology that focus on adapting fieldwork procedures to respondent characteristics.

Field monitoring designs including adaptive as well as responsive elements have received considerable attention in the recent survey methodology literature (for overviews, see Lynn, 2017; Schouten, Peytchev, & Wagner, 2017; Tourangeau, Brick, Lohr, & Li, 2017). The principle of such designs is that, instead of applying the same design and treatment to all sample units, the fieldwork strategy is tailored to different persons or households and adapted in response to fieldwork results. The tailored strategies may either be defined before the survey starts and remain fixed throughout fieldwork (adaptive designs, or static adaptive designs; Schouten, Calinescu, & Luiten, 2013), or depend on data observed during data collection and can thus be seen as a response to current developments in fieldwork (responsive designs, or dynamic adaptive designs; Schouten, et al., 2013). The choice of differential strategies is based on background variables that are known before data collection, for example sampling frame information or data from previous waves. In addition, CAPI data and paradata collected during fieldwork can be used.

Concerning adaptive designs, a few studies have used differential interviewer or respondent incentives to improve cooperation. Peytchev, Riley, Rosen, Murphy, and Lindblad (2010) gave interviewers higher payments for completed interviews in the low propensity groups, but this manipulation did not affect the response rate variation in comparison to the control condition. Fomby, Sastry, and McGonagle (2017) used time-limited monetary incentives to boost participation of hard-to-reach respondents, which resulted in a temporary increase in fieldwork productivity but not in a significant improvement of the final cooperation rates. Concerning responsive designs, several studies have implemented interventions that prioritize hard-to-reach cases during fieldwork or adapt call schedules to subgroup response probabilities. However, the results of these contact-stage interventions are also mixed: whereas Kreuter and Müller (2015) and Wagner (2013) did not find significant effects on subgroup contact probabilities, substantial improvements were reported by Luiten and Schouten (2013), Lipps (2012), Kirgis and Lepkowski (2013) and Wagner et al. (2012). The main reason for the lack of effects in the former studies appears to have been interviewers’ non-compliance with the given instructions, especially in face-to-face interviewing (Wagner, 2013).

To better understand the possible effects of adaptive and responsive interventions and the reasons behind them, we applied several fieldwork adaptations in the German sub-study of SHARE Wave 6. Besides general incentives for respondents and interviewers, which were not targeted at specific groups, we also implemented targeted adaptive and responsive designs that used background information on respondents to increase the efficiency of the measures. In the following, we focus on these targeted interventions, namely an additional interviewer bonus incentive and a contact schedule optimization.

- Interviewer bonus incentives: Since previous research (Bristle, Celidoni, Dal Bianco, & Weber, 2019) had shown that people aged 80 years and older had especially low conditional response probabilities, we put a specific strategy for this group in place in advance. Before fieldwork started, interviewers were promised an extra bonus of 5 €, on top of their normal[1] per-interview payment, for each interview with an 80+ person. This can be regarded as a (static) adaptive design measure (Schouten, et al., 2013).

- Contact schedule optimization: This measure was developed during fieldwork monitoring, when the results showed that the youngest age group (<65 years), as well as respondents who were still working, had a lower than average response probability. Based on the incoming contact data, we found that the early evening was the best time to contact these groups, communicated this finding to the interviewers, and urged them to contact these respondents at that time. This intervention can be regarded as a responsive design measure (Schouten, et al., 2013).

Since previous research had shown that the response probability of panel members in SHARE depends on respondent characteristics such as age, self-reported health or working status (Bristle, Celidoni, Dal Bianco, & Weber, 2019), our main question in this article is whether adaptive and responsive interventions can help to make response probabilities more homogeneous across respondent subgroups and thus improve overall panel sample representativeness. The answer to this question will help us to evaluate the feasibility and utility of implementing an adaptive/responsive fieldwork design in other SHARE countries as well.

In the following, we first introduce the data used and describe the adaptive/responsive interventions applied in Wave 6, along with our analysis strategy. We then analyse the effectiveness of these designs for different groups of panel members within the framework of a general field monitoring strategy. We conclude with a summary of our findings and give practical advice to researchers on how to direct their efforts when using this kind of additional information.

Data and methods

SHARE is especially suitable for an adaptive/responsive fieldwork design, because it already conducts a high level of fieldwork monitoring, has an advanced cross-national system in place to register fieldwork results, and possesses extensive background information about the panel members and their response behaviour in previous waves. The present study uses data from Wave 5 (February to September 2013) and Wave 6 (February to October 2015) of the German sub-study of SHARE. In Wave 5, a large refreshment sample was drawn in addition to the original panel sample, which had been recruited in Wave 1 and Wave 2 (Kneip, et al., 2015). This allows us to compare the effect of our adaptive/responsive design on panel sample representativeness in two different respondent groups: one group for whom the sixth SHARE wave constitutes the first re-interview, and one group that has been part of the panel since Wave 1 or Wave 2. In addition, we can use the Wave 5 fieldwork, in which no targeted designs were applied, to compare response patterns during the field periods. We are well aware that this is not the same as a randomized experimental setting. Differences between waves may, for example, be related to many other factors that are unobserved or that we cannot take into account. However, the purpose of this additional benchmark is not to compare waves in terms of absolute response rates. Instead, we want to explore whether changes during fieldwork reflect a normal pattern for a specific subgroup or might be attributed to the responsive/adaptive fieldwork interventions.

Since several types of response probabilities (or rates) can be distinguished for longitudinal surveys, we first define the response measure used in this study to evaluate the adaptive/responsive design. Our definition is based on the “conditional cross-sectional” response rate for longitudinal studies, defined by Cheshire, Ofstedal, Scholes, and Schröder (2011) as the proportion of sample members who respond in a given wave (including partial interviews) among those who responded in the immediately prior wave. This rate can also be called the “re-interview” response rate (Cheshire, et al., 2011), as it reflects the success of retaining respondents from one wave to the next. To make field monitoring more useful during the early stages of fieldwork, we adapted this definition and included in the denominator only eligible sample members for whom at least one contact attempt had been made in the present wave. Based on this overall re-interview rate, we calculated the deviation of specific subgroups, defined by respondent characteristics from the previous wave, to analyse the effect of the adaptive/responsive design on the representativeness of the Wave 6 panel sample (2905 respondents). The graphical illustration of these deviations allows an easy identification of discrepancies in respondent characteristics and shows how well a certain subgroup is represented at a given point in time during fieldwork. A negative (positive) value means that this subgroup is lagging behind (running ahead) in terms of the re-interview rate at this point in time, and responsive interventions might be warranted to reduce the heterogeneity in response probabilities between respondent groups and, in turn, increase the homogeneity of the panel sample.
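
As a minimal sketch of the adapted re-interview rate and the subgroup deviations described above, the Python code below assumes a hypothetical record layout (`PanelMember` with `eligible`, `contact_attempted` and `interviewed` flags) for members who responded in the previous wave. It illustrates the definition only and is not the SHARE monitoring system's actual implementation. Deviations are returned in percentage points, the scale used in the figures.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class PanelMember:
    """Hypothetical record for one member who responded in the previous wave."""
    age_group: str           # subgroup label taken from the previous wave
    eligible: bool           # still eligible in the current wave
    contact_attempted: bool  # at least one contact attempt made so far in this wave
    interviewed: bool        # full or partial interview in the current wave


def reinterview_rate(members: Iterable[PanelMember]) -> float:
    """Interviews divided by eligible members with at least one contact attempt."""
    base = [m for m in members if m.eligible and m.contact_attempted]
    if not base:
        return float("nan")  # no attempted cases yet: rate undefined
    return sum(m.interviewed for m in base) / len(base)


def subgroup_deviation(members: list[PanelMember], group: str) -> float:
    """Subgroup rate minus overall rate, in percentage points."""
    overall = reinterview_rate(members)
    subgroup = reinterview_rate(m for m in members if m.age_group == group)
    return 100 * (subgroup - overall)


sample = [
    PanelMember("80+", True, True, True),
    PanelMember("80+", True, True, False),
    PanelMember("<65", True, True, True),
    PanelMember("<65", True, False, False),  # not yet attempted: excluded from the denominator
    PanelMember("65-79", True, True, True),
]
print(reinterview_rate(sample))           # 0.75
print(subgroup_deviation(sample, "80+"))  # -25.0
print(subgroup_deviation(sample, "<65"))  # 25.0
```

Because members without any contact attempt are left out of the denominator, the rate is interpretable from the first days of fieldwork; an unadapted conditional rate would start near zero and rise mechanically as cases are worked.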

In the following analyses, we focus on the oldest respondents, aged 80 years and older, as well as on the youngest age group in SHARE, aged below 65 years and most likely still working, to analyse the adaptive and responsive design interventions in Wave 6. Regarding the interviewer bonus incentive for interviews with 80+ respondents, we expected a higher response probability for this subgroup, especially at the beginning of fieldwork, despite the greater effort needed to realize an interview with these respondents, who are frequently also in poor health. In addition, a contact schedule optimization was implemented during fieldwork, as it turned out after several weeks in the field that persons under 65 years, who are likely to be still working, showed a much lower response probability than the overall sample. We therefore requested the fieldwork agency, by means of several memos, to allocate households with young (<65 years) respondents to the optimal contact time slot between 5pm and 7pm. During this intervention, we monitored the number of contact attempts in this time slot to evaluate interviewers’ compliance, as well as the resulting response probability of the younger respondent group.

Results

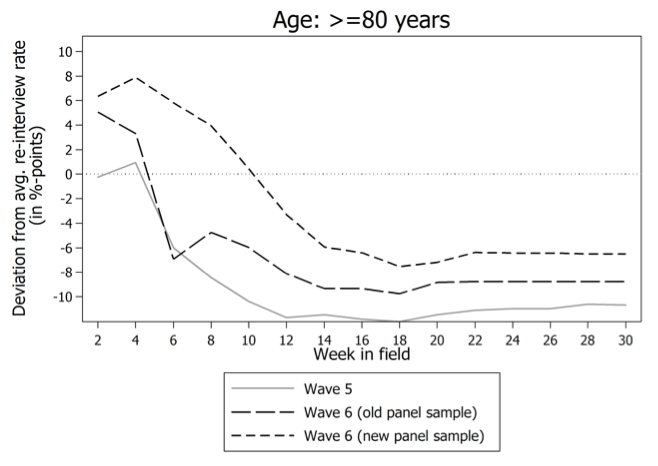

We start by presenting the results of field monitoring in Wave 6, based on bi-weekly synchronized data during the first 30 weeks in the field. After this point in time, response probabilities remained stable until the official end of fieldwork in all countries. Figure 1 shows the development of the response probability for respondents aged 80 years and older, depicted as deviations from the average re-interview rate, which is marked as a dotted zero line. This is shown for Wave 5 and Wave 6, with Wave 6 further differentiated into respondents who were recruited in Wave 1 and Wave 2 (“old” panel) and respondents who were recruited only in Wave 5 (“new” panel). As can be seen in Figure 1, 80+ respondents responded better than average at the beginning of Wave 6, both in the old (n=198) and in the new panel sample (n=196). After two weeks in the field, the positive deviation was about 5 to 6 percentage points. In contrast, at the start of fieldwork in Wave 5 there was nearly no deviation between the re-interview rate in the whole sample and that of the subgroup of 80+ respondents (n=195).

Figure 1: Deviation from average re-interview response rate for the oldest age group by week in field

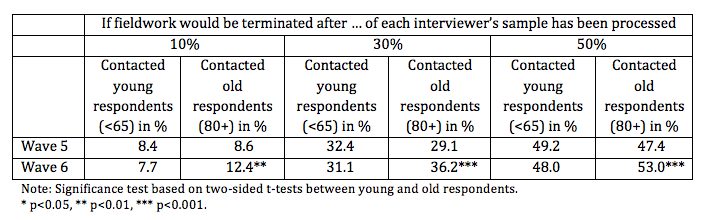

While the higher than average re-interview rate of the 80+ respondents at the beginning of Wave 6 might be a consequence of the applied adaptive design, i.e. a bonus payment for every interview conducted with them, the sequence in which interviewers processed their assigned samples can further corroborate this finding. Table 1 shows the interviewers’ sample processing over the fieldwork period: at the point in Wave 6 at which 10 percent of each interviewer’s sample had been processed, 12.4 percent of the 80+ respondents had already been contacted, versus only 7.7 percent of the respondents younger than 65 years (p<0.01). Similarly, at 30 and 50 percent processing of each sample, interviewers in Wave 6 preferred to attempt households with 80+ respondents first (p<0.001 in both cases). In contrast, no significant differences between the contact rates of these age groups were observed in Wave 5. Unlike actually reaching or interviewing a respondent, the mere attempt to contact a household is largely independent of respondent characteristics such as age, health, time constraints or individual motivation to participate in the survey. This finding is therefore a strong indication that the implemented adaptive design indeed affected interviewer behaviour. In our case, the promised interviewer bonus thus appears to have increased the response probability of 80+ respondents relative to the average re-interview rate. Interestingly, this effect seems to persist over the fieldwork period, resulting in a smaller deviation at the end of Wave 6, in which the adaptive design was implemented (-8 percentage points for the old panel sample), than at the end of Wave 5 (-11 percentage points).
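
One way to compute processing-sequence figures like those in Table 1 can be sketched as follows. This is an assumption about the procedure, not a description of the authors' code: for each interviewer, attempted cases are ordered by the time of their first contact attempt, the first X percent of that interviewer's assigned sample are flagged as processed, and each age group's flagged share is reported. The tuple layout and the `first_attempt` time scale (for example, hours since fieldwork start) are hypothetical. If interviewers worked their samples in random order, every age group would come out close to X percent; a group above it was attempted early.

```python
import math
from collections import defaultdict


def share_processed_by_group(cases, percent):
    """Percentage of each age group's cases among the first `percent` percent of
    every interviewer's assigned sample, ordered by first contact attempt.

    `cases`: list of (interviewer_id, age_group, first_attempt) tuples, where
    first_attempt is a sortable time stamp or None if never attempted
    (hypothetical layout).
    """
    by_interviewer = defaultdict(list)
    for case in cases:
        by_interviewer[case[0]].append(case)

    processed = defaultdict(int)
    total = defaultdict(int)
    for assigned in by_interviewer.values():
        # Number of cases that make up `percent` percent of this interviewer's sample.
        cutoff = math.ceil(percent * len(assigned) / 100)
        attempted = sorted(
            (c for c in assigned if c[2] is not None), key=lambda c: c[2]
        )
        for _, group, _ in attempted[:cutoff]:
            processed[group] += 1
        for _, group, _ in assigned:
            total[group] += 1
    return {group: 100 * processed[group] / n for group, n in total.items()}


cases = [
    ("A", "80+", 1), ("A", "<65", 3), ("A", "80+", 2), ("A", "<65", 5),
    ("B", "<65", 1), ("B", "80+", 4),
]
result = share_processed_by_group(cases, 50)
print({g: round(v, 1) for g, v in result.items()})  # {'80+': 66.7, '<65': 33.3}
```

The cutoff is counted against each interviewer's whole assigned sample, so an interviewer who has attempted fewer cases than the cutoff simply contributes fewer flagged cases. The significance tests reported in the text are not part of this sketch.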

Table 1: Sequence of interviewers’ sample processing: Number of respondents contacted per age group as percentage of the interviewer’s total processed sample

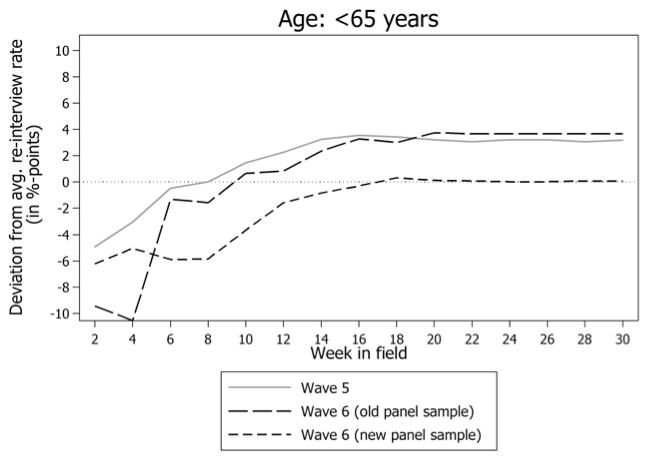

In contrast to the adaptive design, which focuses on the very old respondents in SHARE, the responsive intervention we implemented during fieldwork of Wave 6 focuses on the youngest, most likely still working respondents. Figure 2 shows the deviations in re-interview response rates for respondents younger than 65 years. The graph shows a relatively low re-interview rate for this subgroup at the beginning of fieldwork in Wave 6 (old panel respondents: n=305, new panel respondents: n=926). The response deviation of this group is more pronounced than it was in Wave 5 (n=454), in particular for the old panel sample recruited in Waves 1 and 2. Since we also observed a lower response probability for respondents who were still working in the previous wave, we hypothesized that the response deviation is related to a lower contact probability of this group. The responsive intervention chosen to increase this probability was therefore contact-timing optimization. Largely in line with previous research (e.g. Durrant, D’Arrigo, & Steele, 2011; Weeks, Jones, Folsom Jr., & Benrud, 1980), tests on the incoming fieldwork data showed that contact attempts during the early evening (5-7pm) had a significantly higher probability of yielding an interview appointment with a respondent younger than 65 years (not shown here). In co-operation with the staff of the fieldwork agency, we informed several interviewers who made very few evening contact attempts about this finding in fieldwork weeks 5, 8 and 12, and recommended that they contact the youngest age group more often between 5pm and 7pm. In fieldwork week 10, we wrote a midterm motivational letter to all interviewers that also stressed this recommendation.
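
The text does not state which test was used to compare contact time slots. As an illustration only, a standard pooled two-proportion z-test could compare the share of attempts yielding an appointment in the 5-7pm slot with the share in all other slots. The function name and the counts in the example call are invented for this sketch and are not SHARE data.

```python
import math


def two_proportion_ztest(success_a, n_a, success_b, n_b):
    """Two-sided two-proportion z-test with pooled variance.

    Returns (z, p_value). Here "success" is a contact attempt that led to an
    interview appointment; group a = 5-7pm slot, group b = all other slots.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts, for illustration only.
z, p = two_proportion_ztest(30, 100, 20, 100)
print(round(z, 2), round(p, 3))
```

A test of this kind ignores the clustering of contact attempts within interviewers and households; a model-based approach such as the one in Durrant, D’Arrigo, and Steele (2011) would account for it.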

Figure 2: Deviation from average re-interview response rate for the youngest age group by week in field

Although Figure 2 seems to confirm that a change took place after four and eight weeks in the field, we cannot directly test whether it was exclusively our recommendation that caused this increase in response probability for the youngest age group. The Wave 5 response probability for young respondents also seems to increase around the same time of fieldwork, although this effect is less pronounced. We therefore further analysed the development of the number of contact attempts between 5pm and 7pm for 29 interviewers who had been recommended by the fieldwork agency to shift their contact schedule (“treated”) and 118 interviewers who did not receive this recommendation (“control”). The results of this analysis are presented in Figure 3, along with the timing of each memo that conveyed this recommendation. Overall, we find no clear pattern. On the one hand, a strong increase in contact attempts is observed after six weeks in the field, intensifying after fieldwork week 8. This increase parallels the timing of the first two memos sent to the fieldwork agency. On the other hand, a similarly strong decrease in contact attempts is observed after week 10, which does not match our expectations. Moreover, the relative number of 5pm to 7pm contact attempts decreases strongly following the midterm feedback letter, which once more stressed the importance of contacting young households in the early evening. Finally, the relative number of contact attempts in the 5pm to 7pm time slot increases again in parallel to the third memo, after 12 weeks in the field. This inconsistent pattern could indicate that general memos addressing all interviewers do not work as well as more specific interventions that use background information (here, for example, the timing of previous contact attempts) and are executed by the survey agency’s regional field coordinators.
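
The treated/control comparison in Figure 3 rests on the relative number of attempts in the 5pm to 7pm slot per fieldwork week. A minimal sketch of that tabulation follows, assuming a hypothetical list of (interviewer_id, week, hour) tuples for attempts in households with at least one respondent younger than 65, and a set of treated interviewer IDs; neither the layout nor the function name comes from the SHARE monitoring system.

```python
from collections import Counter


def evening_share_by_week(attempts, treated_ids, start_hour=17, end_hour=19):
    """Percentage of contact attempts starting in [start_hour, end_hour),
    keyed by (group, week) with group "treated" or "control".

    `attempts`: iterable of (interviewer_id, week, hour) tuples, where hour is
    the clock hour (0-23) of the attempt (hypothetical layout).
    """
    totals = Counter()
    evening = Counter()
    for interviewer_id, week, hour in attempts:
        group = "treated" if interviewer_id in treated_ids else "control"
        totals[group, week] += 1
        if start_hour <= hour < end_hour:
            evening[group, week] += 1
    return {key: 100 * evening[key] / n for key, n in sorted(totals.items())}


attempts = [
    ("A", 6, 17), ("A", 6, 12),                # treated interviewer
    ("B", 6, 18), ("B", 6, 19), ("C", 6, 20),  # control interviewers
]
shares = evening_share_by_week(attempts, treated_ids={"A"})
print(shares)
```

Because the number of new attempts falls steeply in later fieldwork weeks, shares computed from only a handful of attempts can swing widely from one week to the next.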

Figure 3: Contact attempts in young households between 5pm and 7pm by week in field

The short-term up and down patterns could, however, also be related to the small number of new contact attempts made in households without a final code, i.e. households without a completed interview or a final refusal. About two thirds of all valid contact attempts (n=4069) had already been made after six weeks of fieldwork, i.e. shortly after the first memo. By this time, nearly 500 contact attempts (out of approximately 700 overall) had been made by the treated interviewers. The analysis after the midterm feedback letter in fieldwork week 10 is based on fewer than 100 contact attempts by treated interviewers in households with at least one respondent younger than 65 years. This small number leaves little room for effective responsive interventions. Moreover, it is unclear whether our analyses suffer from selection bias. Perhaps those interviewers who made very few evening contact attempts with young respondents at the beginning of fieldwork were simply unable to conduct interviews at this time of day for other reasons, such as personal working time constraints, which cannot easily be changed. These are serious problems in the practical implementation of a responsive design during fieldwork, at least for large-scale projects such as SHARE, in which data deliveries can only be realized with a certain delay. In addition, it is very difficult to determine when exactly an intervention should affect interviewers’ contact schedules, as we do not know exactly when the interviewers read the memos or start to implement the recommendations. In SHARE, as is common in European countries, interviewers are employees or freelancers of the contracted survey agency, implying that only that agency can legally contact them. Therefore, there is no direct link between interviewers and the fieldwork management in a survey like SHARE that would allow timely interventions during fieldwork. In addition, the freelance employment of interviewers limits the possibility to direct their working hours or to learn the reasons for their contact schedules.

Conclusion

The primary goal of the adaptive/responsive fieldwork design in this study was to increase the homogeneity of response probabilities across respondent subgroups in SHARE. This aim was only partly met. Although the adaptive design, consisting of an interviewer bonus incentive for interviews with the oldest-old respondents, seems to have had a positive effect, 80+ panel members still participated much less than average in Wave 6. Furthermore, the responsive intervention, consisting of a request to shift the contact schedule for respondents younger than 65 years based on their lower than average response probability, was not very successful. Similar to the findings of Wagner (2013) and Kreuter and Müller (2015), our interviewers did not seem (able) to comply very well with the recommendations given to them during fieldwork, which makes it difficult to find a clear effect on the re-interview response rate. The lower than average response probability of the younger respondents in the panel sample recruited in Wave 1 and Wave 2 at the start of Wave 6 fieldwork developed into a higher than average response probability after several weeks in the field. As a very similar development can also be observed in Wave 5, the responsive intervention seems to have been ineffective, perhaps merely strengthening the usual fieldwork pattern. It seems likely that the younger panel members in SHARE are often still working and therefore need relatively many contact attempts, spread over the first fieldwork weeks, to be reached. Once they are reached, however, they are more likely to participate than the older age groups.

In this study, we mainly focused on deviations from the average re-interview rate for the youngest and the oldest age group in SHARE, because these groups can be targeted with specific interventions, such as incentives or re-scheduled contact attempts. Lower response probabilities were also observed for other respondent characteristics, but most of these cannot easily be counteracted by fieldwork interventions. For example, this holds for the (cognitive or physical) inability to participate caused by old age and/or poor health. This is largely in line with Tourangeau (2015), who formulated the paradox that respondent characteristics that are suitable for responsive fieldwork measures might in fact be of limited use for true bias reduction.

Based on these findings, we have to acknowledge that the applied design is not yet efficient enough to outweigh the time and effort needed for continuously monitoring, analysing and implementing targeted measures at the same time. We are therefore considering more in-depth studies of specific groups with low response probabilities before extending the design adaptations to other countries. Knowing more about the reasons for low response probabilities in certain groups could result in better adapted strategies. For example, we observed in our monitoring dashboard a strong relationship between item nonresponse to financial questions in one wave and unit nonresponse in the next wave. By studying the underlying cause of this relationship, we could develop interventions, such as different advance letters, specific incentives or specially trained interviewers, that are effectively targeted at respondents who show item nonresponse patterns, to prevent them from dropping out in the next wave. Furthermore, it seems worthwhile to focus more on the group of 80+ respondents and panelists in poor health. When contacting these older or ill panel members, SHARE interviewers often encounter so-called “gatekeepers”, i.e. family members or caregivers who are hesitant to let the interviewer in or to allow the interview. Such contact attempts could be supported by studies that identify what information would convince gatekeepers, or by adapting the instruments to decrease the burden of the interview. In conclusion, we will continue to differentiate field monitoring based on respondent characteristics, but will focus more on adaptive strategies for specific groups than on responsive measures during fieldwork. In this way, we can make better use of the large amount of respondent information available in a longitudinal study like SHARE and reduce the costs of extensive monitoring.

[1] In Wave 6, interviewers were paid 50 (40) € for the first (partner) interview.

References

- Blom, A. G., & Schröder, M. (2011). Sample composition 4 years on: Retention in SHARE Wave 3. In M. Schröder (Ed.), Retrospective data collection in the Survey of Health, Ageing and Retirement in Europe. SHARELIFE Methodology (pp. 55-61). Mannheim: MEA.

- Börsch-Supan, A., Brandt, M., Hunkler, C., Kneip, T., Korbmacher, J., Malter, F., et al. (2013). Data resource profile: The Survey of Health, Ageing and Retirement in Europe (SHARE). International Journal of Epidemiology, 42(4), 992-1001. doi: 10.1093/ije/dyt088.

- Börsch-Supan, A., Krieger, U., & Schröder, M. (2013). Respondent incentives, interviewer training and survey participation. SHARE Working Paper (12-2013). MEA, Max Planck Institute for Social Law and Social Policy. Munich.

- Bristle, J., Celidoni, M., Dal Bianco, C., & Weber, G. (2019). The contributions of paradata and features of respondents, interviewers and survey agencies to panel co-operation in the Survey of Health, Ageing and Retirement in Europe. Journal of the Royal Statistical Society: Series A (Statistics in Society), 182(1), 3-35. doi: 10.1111/rssa.12391.

- Cheshire, H., Ofstedal, M. B., Scholes, S., & Schröder, M. (2011). A comparison of response rates in the English Longitudinal Study of Ageing and the Health and Retirement Study. Longitudinal and Life Course Studies, 2(2), 127-144. doi: 10.14301/llcs.v2i2.118.

- Cleary, A., & Balmer, N. (2015). The impact of between-wave engagement strategies on response to a longitudinal survey. International Journal of Market Research, 57(4), 533-554. doi: 10.2501/IJMR-2015-046.

- Durrant, G. B., D’Arrigo, J., & Steele, F. (2011). Using paradata to predict best times of contact, conditioning on household and interviewer influences. Journal of the Royal Statistical Society: Series A (Statistics in Society), 174(4), 1029-1049. doi: 10.1111/j.1467-985X.2011.00715.x.

- Fomby, P., Sastry, N., & McGonagle, K. A. (2017). Effectiveness of a time-limited incentive on participation by hard-to-reach respondents in a panel study. Field Methods, 29(3), 238-251. doi: 10.1177/1525822X16670625.

- Freedman, V. A., McGonagle, K. A., & Couper, M. P. (2017). Use of a targeted sequential mixed mode protocol in a nationally representative panel study. Journal of Survey Statistics and Methodology, 6(1), 98-121. doi: 10.1093/jssam/smx012.

- Fumagalli, L., Laurie, H., & Lynn, P. (2013). Experiments with methods to reduce attrition in longitudinal surveys. Journal of the Royal Statistical Society: Series A (Statistics in Society), 176(2), 499-519. doi: 10.1111/j.1467-985X.2012.01051.x.

- Kirgis, N. G., & Lepkowski, J. M. (2013). Design and management strategies for paradata-driven responsive design: Illustrations from the 2006-2010 National Survey of Family Growth. In F. Kreuter (Ed.), Improving Surveys with Paradata. Analytic Uses of Process Information (pp. 121-144). Hoboken, New Jersey: John Wiley & Sons.

- Kneip, T., Malter, F., & Sand, G. (2015). Fieldwork monitoring and survey participation in fifth wave of SHARE. In F. Malter & A. Börsch-Supan (Eds.), SHARE Wave 5: Innovations & Methodology (pp. 102-159). Munich: MEA, Max Planck Institute for Social Law and Social Policy.

- Kreuter, F., & Müller, G. (2015). A note on improving process efficiency in panel surveys with paradata. Field Methods, 27(1), 55-65. doi: 10.1177/1525822X14538205.

- Lipps, O. (2012). A note on improving contact times in panel surveys. Field Methods, 24(1), 95-111. doi: 10.1177/1525822X11417966.

- Luiten, A., & Schouten, B. (2013). Tailored fieldwork design to increase representative household survey response: An experiment in the Survey of Consumer Satisfaction. Journal of the Royal Statistical Society: Series A (Statistics in Society), 176(1), 169-189. doi: 10.1111/j.1467-985X.2012.01080.x.

- Lynn, P. (2017). From standardised to targeted survey procedures for tackling non-response and attrition. Survey Research Methods, 11(1), 93-103. doi: 10.18148/srm/2017.v11i1.6734.

- Malter, F. (2013). Fieldwork management and monitoring in SHARE Wave four. In F. Malter & A. Börsch-Supan (Eds.), SHARE Wave 4: Innovations & Methodology (pp. 124-139). Munich: MEA, Max Planck Institute for Social Law and Social Policy.

- McGonagle, K., Couper, M., & Schoeni, R. F. (2011). Keeping track of panel members: An experimental test of a between-wave contact strategy. Journal of Official Statistics, 27(2), 319-338.

- Peytchev, A., Riley, S., Rosen, J., Murphy, J., & Lindblad, M. (2010). Reduction of nonresponse bias through case prioritization. Survey Research Methods, 4(1), 21-29. doi: 10.18148/srm/2010.v4i1.3037.

- Rosen, J. A., Murphy, J., Peytchev, A., Holder, T., Dever, J., Herget, D., et al. (2014). Prioritizing low propensity sample members in a survey: Implications for nonresponse bias. Survey Practice, 7(1), 1-8. doi: 10.29115/SP-2014-0001.

- Schouten, B., Calinescu, M., & Luiten, A. (2013). Optimizing quality of response through adaptive survey designs. Survey Methodology, 39(1), 29-58.

- Schouten, B., Peytchev, A., & Wagner, J. (2017). Adaptive survey design. Boca Raton: CRC Press.

- Tourangeau, R. (2015). Nonresponse Bias: Three Paradoxes. Paper presented at the 26th International Workshop on Household Survey Nonresponse, Leuven.

- Tourangeau, R., Brick, J. M., Lohr, S., & Li, J. (2017). Adaptive and responsive survey designs: A review and assessment. Journal of the Royal Statistical Society: Series A (Statistics in Society), 180(1), 203-223. doi: 10.1111/rssa.12186.

- Wagner, J. (2013). Adaptive contact strategies in telephone and face-to-face surveys. Survey Research Methods, 7(1), 45-55. doi: 10.18148/srm/2013.v7i1.5037.

- Wagner, J., West, B. T., Kirgis, N., Lepkowski, J. M., Axinn, W. G., & Ndiaye, S. K. (2012). Use of paradata in a responsive design framework to manage a field data collection. Journal of Official Statistics, 28(4), 477-499.

- Weeks, M. F., Jones, B. L., Folsom Jr., R. E., & Benrud, C. H. (1980). Optimal times to contact sample households. Public Opinion Quarterly, 44(1), 101-114. doi: 10.1086/268569.