Fieldwork Monitoring Strategies for Interviewer-Administered Surveys

Meitinger, K., Ackermann-Piek, D., Blohm, M., Edwards, B., Gummer, T., & Silber, H. (2020). Fieldwork Monitoring Strategies for Interviewer-Administered Surveys. Survey Methods: Insights from the Field. Special issue: ‘Fieldwork Monitoring Strategies for Interviewer-Administered Surveys’. Retrieved from https://surveyinsights.org/?p=13732

© the authors 2020. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)


Fieldwork monitoring is essential during survey data collection to ensure high-quality data (Koch et al., 2009; Lyberg & Biemer, 2008; Lynn, 2003; Malter, 2014). During the data collection period, continuously evaluating performance indicators (Edwards, Maitland, & Connor, 2017; Schouten, Shlomo, & Skinner, 2011; Schouten et al., 2012), such as response rates, risk of nonresponse bias, contact attempts, or fieldwork intensity per sampling point and interviewer, makes it possible to detect data collection issues at an early stage and to respond promptly with targeted interventions. In this regard, adaptive and responsive survey designs (Groves & Heeringa, 2006; Wagner, 2008; Schouten, Peytchev, & Wagner, 2018) have received increasing attention from survey researchers.
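As a minimal sketch of what the continuous evaluation of such indicators might look like, the Python snippet below computes response rates per interviewer from case dispositions, flags interviewers below a chosen threshold, and computes the R-indicator associated with Schouten and colleagues, R = 1 - 2S(ρ), where S(ρ) is the standard deviation of estimated response propensities. The function names, disposition codes, threshold, and toy data are hypothetical. The propensities are assumed to be given (in practice they would be estimated, for example by a logistic regression on auxiliary data), and the standard deviation is unweighted, which assumes equal design weights.

```python
from collections import defaultdict
from statistics import stdev


# Hypothetical disposition codes: "interview" counts as a response;
# any other code (e.g., "refusal", "noncontact") counts as a nonresponse.
def response_rates_by_interviewer(cases):
    """Return {interviewer_id: share of assigned cases that were interviewed}."""
    assigned = defaultdict(int)
    completed = defaultdict(int)
    for interviewer_id, disposition in cases:
        assigned[interviewer_id] += 1
        if disposition == "interview":
            completed[interviewer_id] += 1
    return {i: completed[i] / assigned[i] for i in assigned}


def flag_low_performers(rates, threshold):
    """Return the interviewers whose response rate is below the threshold."""
    return sorted(i for i, rate in rates.items() if rate < threshold)


def r_indicator(propensities):
    """R = 1 - 2 * S(rho), with S the unweighted sample standard deviation of
    estimated response propensities (assumes equal design weights).
    R = 1 means every unit is equally likely to respond."""
    return 1 - 2 * stdev(propensities)


# Toy data for two hypothetical interviewers.
cases = [
    ("A", "interview"), ("A", "refusal"), ("A", "interview"), ("A", "interview"),
    ("B", "noncontact"), ("B", "refusal"), ("B", "interview"), ("B", "noncontact"),
]
rates = response_rates_by_interviewer(cases)
print(rates)                                 # {'A': 0.75, 'B': 0.25}
print(flag_low_performers(rates, 0.5))       # ['B']
print(round(r_indicator([0.4, 0.6]), 3))     # 0.717
```

In a real monitoring setup, a report like this would be rerun on each new delivery of fieldwork data, so that interventions such as re-training an interviewer or re-contacting soft refusals can be targeted while the field period is still open.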

A large variety of performance indicators is available, and there are many opportunities to intervene if issues are detected during the data collection phase (Kreuter, 2013). Depending on the specific survey context, some indicators might be more useful to monitor than others. Likewise, the optimal monitoring frequency for an indicator may depend on the specific setting of a survey. An important distinction regarding optimal fieldwork monitoring strategies is whether the survey is conducted by the research organization itself or whether a commercial survey agency is contracted to field it. In the latter case, some indicators might be less informative because they are usually not delivered to the research organization on a daily basis, and field interventions take more time to be implemented successfully.

This special issue spotlights the lessons learned when working with different fieldwork monitoring strategies in various settings. In particular, the topics of interest include which performance indicators have been implemented successfully (e.g., to reduce errors described in the Total Survey Error framework, see Groves & Lyberg, 2010) and which have been deemed less useful. The large variety of indicators is paralleled by a multitude of possible fieldwork measures or interventions that address specific aspects of the data collection process (e.g., changing or re-training interviewers, re-contacting soft refusals, tailoring reminder letters, or adjusting incentives). Many large-scale survey programs have accumulated extensive experience regarding the efficiency and effectiveness of different fieldwork monitoring strategies. However, because many field activities are nonexperimental, articles sharing this expertise are rare. This special issue provides a platform to share this valuable best-practice knowledge and offers insights into which fieldwork strategies and tools are employed in the field.

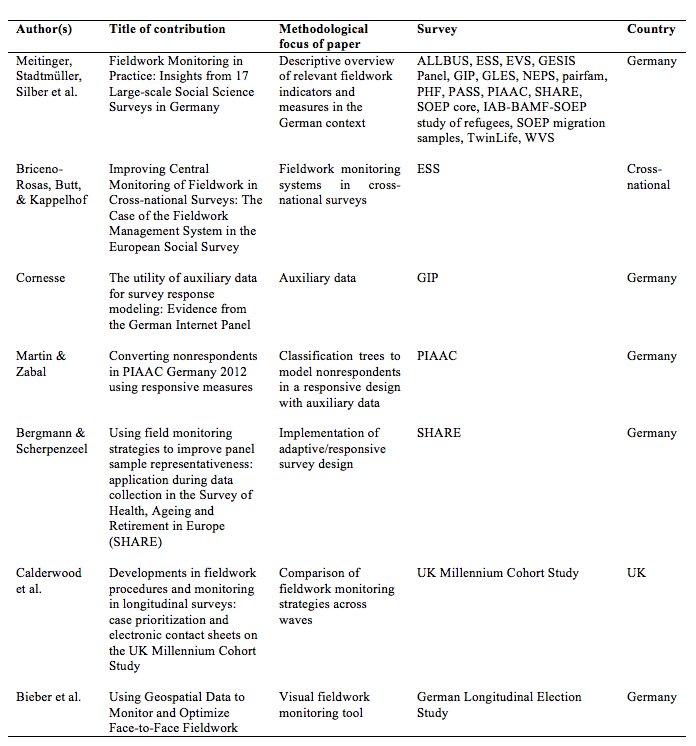

Altogether, seven contributions are included in this special issue. The study by Meitinger et al. provides a descriptive overview and relevance rating of fieldwork indicators and measures that are currently used by seventeen large-scale surveys in Germany. Five articles provide case studies from specific national and international survey programs. Briceno-Rosas, Butt, and Kappelhof describe the fieldwork management system of the European Social Survey. Cornesse discusses the utility of auxiliary data, using the German Internet Panel as an example. Martin and Zabal illustrate a responsive design approach for the fieldwork procedures of PIAAC Germany 2012, developing classification trees on auxiliary data to model and predict classes of nonrespondents. Bergmann and Scherpenzeel provide insights into the field monitoring strategies of the Survey of Health, Ageing and Retirement in Europe, focusing on the implementation of adaptive/responsive survey design and its effects on sample representativeness. Calderwood et al. share their experiences in the UK Millennium Cohort Study by comparing fieldwork monitoring procedures across waves. In the final contribution, Bieber et al. illustrate the potential of visualizing geospatial data for fieldwork monitoring in the context of the German Longitudinal Election Study.

An overview of the contributions by authors’ names, title, methodological focus, survey, and country

References

- Edwards, B., Maitland, A., & Connor, S. (2017). Measurement Error in Survey Operations Management: Detection, Quantification, Visualization, and Reduction. In P. Biemer, E. D. de Leeuw, S. Eckman, B. Edwards, F. Kreuter, L. Lyberg, N. C. Tucker, & B. T. West (Eds.), Total Survey Error in Practice (pp. 255-278). Hoboken, NJ: John Wiley & Sons.

- Groves, R. M., & Heeringa, S. G. (2006). Responsive design for household surveys: tools for actively controlling survey errors and costs. Journal of the Royal Statistical Society: Series A (Statistics in Society), 169(3), 439-457.

- Groves, R. M., & Lyberg, L. (2010). Total survey error: Past, present, and future. Public Opinion Quarterly, 74(5), 849-879.

- Koch, A., Blom, A., Stoop, I., & Kappelhof, J. W. (2009). Data collection quality assurance in cross-national surveys: The example of the ESS. Methoden, Daten, Analysen (MDA), 3(2), 219-247.

- Kreuter, F. (2013). Improving surveys with paradata: Analytic uses of process information. New York: Wiley.

- Lyberg, L., & Biemer, P. P. (2008). Quality assurance and quality control in surveys. In E. D. de Leeuw, J. J. Hox, & D. A. Dillman (Eds.), International Handbook of Survey Methodology (pp. 421-441). New York: Psychology Press.

- Lynn, P. (2003). Developing quality standards for cross-national survey research: Five approaches. International Journal of Social Research Methodology, 6(4), 323-336.

- Malter, F. (2014). Fieldwork monitoring in the survey of health, ageing and retirement in Europe (SHARE). Survey Methods: Insights from the Field, 8.

- Schouten, B., Bethlehem, J., Beullens, K., Kleven, Ø., Loosveldt, G., Luiten, A., & Skinner, C. (2012). Evaluating, comparing, monitoring, and improving representativeness of survey response through R‐indicators and partial R‐indicators. International Statistical Review, 80(3), 382-399.

- Schouten, B., Peytchev, A., & Wagner, J. (2018). Adaptive Survey Design. Boca Raton, FL: CRC Press.

- Schouten, B., Shlomo, N., & Skinner, C. (2011). Indicators for monitoring and improving representativeness of response. Journal of Official Statistics, 27(2), 231-253.

- Wagner, J. (2008). Adaptive survey design to reduce nonresponse bias (Doctoral dissertation). University of Michigan, Ann Arbor, MI.