Integrating online data collection in a household panel study: effects on second-wave participation

Voorpostel, M., Roberts, C. & Ghoorbin, M. (2021). Integrating online data collection in a household panel study: effects on second-wave participation. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=15709. The data used in this article is available for reuse from http://data.aussda.at/dataverse/smif at AUSSDA – The Austrian Social Science Data Archive. The data is published under the Creative Commons Attribution-ShareAlike 4.0 International License and can be cited as: “Replication data for: Voorpostel, M., Roberts, C. & Ghoorbin, M. (2021) Integrating online data collection in a household panel study: effects on second-wave participation.” https://doi.org/10.11587/9HRKFJ, AUSSDA.

© the authors 2021. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Received wisdom in survey practice suggests that using web mode in the first wave of a panel study is less effective than using interviewers. Based on data from a two-wave mode experiment for the Swiss Household Panel (SHP), this study examines whether the use of online data collection in the first wave affects participation in the second wave and, if so, who is affected. The experiment compared the traditional SHP design of telephone interviewing with a mixed-mode design combining a household questionnaire by telephone with individual questionnaires by web, and with a web-only design for both the household and individual questionnaires. We looked at the participation both of the household reference person (HRP) and of all household members in multi-person households. We find no support for higher dropout at wave 2 among HRPs who followed the mixed-mode protocol or who participated online. Nor do we find much evidence that the association between mode and dropout varies by socio-demographic characteristics. The only exception was higher dropout among HRPs of larger households in the telephone group compared with the web-only group. Moreover, the mixed-mode and web-only designs were more successful than the telephone design in enrolling and keeping all eligible household members of multi-person households in the study. In conclusion, the results suggest that using web mode (whether alone or combined with telephone) when starting a new panel has no clear disadvantage for second-wave participation compared with telephone interviewing.

Keywords

Attrition, longitudinal survey, nonresponse, web questionnaires

Acknowledgement

This study has been realized using the data collected by the Swiss Household Panel (SHP), which is based at FORS, the Swiss Centre of Expertise in the Social Sciences. The project is supported by the Swiss National Science Foundation.

Introduction

The potential for cost savings, growing population coverage and the possibility of eliminating interviewer effects have bolstered interest in online data collection methods in social surveys. The rise of online surveys, however, has been accompanied by a need to mix modes of data collection, to reduce the combined selection effects of remaining shortfalls in internet penetration and the typically low response rates of web surveys. These developments, along with the rapid uptake of mobile devices, have brought a sea change in the methods used to carry out many long-standing, large-scale academic and government-commissioned studies in Europe and North America, and have stimulated demand for methodological research into their impact on survey quality and how they compare with traditional approaches (Olsen et al., 2019).

In a cross-sectional survey, the decision to mix multiple data collection modes in a ‘web first’ sequential design can offer several advantages, including cost savings and the potential to increase response rates over a web-only design (Tourangeau, 2017). However, it carries some notable drawbacks because measurements may not be equivalent across modes, which can confound comparisons between subgroups that are over- or under-represented among those responding in different modes (Hox et al., 2017). Nevertheless, effective questionnaire design procedures aimed at harmonising the stimulus across modes (Dillman et al., 2014), combined with post-survey adjustments, can help to make measurements comparable, and this disadvantage is generally considered to be offset by the reduction in selection error obtained by switching modes to reduce nonresponse.

In a longitudinal survey setting, however, the decision to incorporate web-based data collection in a mixed-mode design can entail additional complications (Jäckle, Gaia and Benzeval, 2017), depending on factors such as when the mixed-mode design is introduced to the study (midstream versus at the outset), which modes are combined and how (interviewer-administered versus self-administered; sequentially versus concurrently), and the target population and/or sampling unit (individuals only versus all household members). Not much is known about optimal ways of combining web with other modes in longitudinal studies, particularly household panel surveys, because relatively few major panels have implemented and documented the effects of such designs (see Voorpostel, Lipps and Roberts, in press; Jäckle, Gaia and Benzeval, 2017, for recent reviews).

Much of the existing research into web-based or mixed-mode data collection involving web in the context of longitudinal surveys has considered the impact of switching modes within existing panel studies. Evidence from the UKHLS-IP showed that switching from face-to-face to web initially had a detrimental effect on participation, but that response rates recovered after a number of waves (Bianchi, Biffignandi and Lynn, 2017). These findings suggest that, at least in the short term, switching to web-based data collection within an existing panel study may be risky. In the longer term, however, cost-related benefits may justify the transition and outweigh any negative impact.

Although evidence on the effects of mode switches in existing longitudinal surveys is accumulating – e.g. on subsequent attrition rates and sample selection (Bianchi, Biffignandi and Lynn, 2017; Lüdtke and Schupp, 2016), and on cross-mode measurement equivalence (Cernat and Sakshaug, in press) – not much is known about whether web offers an effective solution at the first wave of a new multi-wave survey. Received wisdom suggests that because web typically obtains lower response rates than other modes and excludes the offline population, and because personal contact with an interviewer may help to motivate participation, online data collection may not be a suitable choice for the first wave of a panel (Tourangeau, 2018). A number of large-scale probability-based online panels recruit sample members face-to-face or by telephone because offline recruitment has been shown to produce fewer coverage problems (depending on the strategy used for sample units without internet access) and higher response rates (Blom et al., 2016). The absence of contact with an interviewer at the start of a household panel may also be detrimental to later-wave participation, given the complexity of a household survey. Certain tasks, such as completing the household grid, can be more burdensome for respondents without an interviewer's guidance. This is likely to affect respondents' experience of the first-wave interview, which in turn is an important determinant of later-wave participation (Lipps & Voorpostel, 2020). An interviewer also offers the opportunity for personal contact with the respondent, which may increase loyalty to the panel study in the longer term.

However, because such studies subsequently need to switch to online administration, dropout after the first wave is often greater than in interviewer-administered panels (De Leeuw and Lugtig, 2015). As switching modes has generally been found to provoke dropout (Sakshaug, Yan and Tourangeau, 2010; Tourangeau, Conrad and Couper, 2013; Sakshaug and Kreuter, 2011), it is not clear to what extent the response rate advantages of using interviewers at the first wave may be offset by post-recruitment dropout caused by the mode switch.

The alternative is to recruit panel members directly to a web-based survey, though its feasibility depends on the available sampling frame. For general population studies (in the absence of email addresses), where address-based or individual-based sampling is possible, this can be achieved by sending log-in details in the pre-notification or invitation letter. Encouraging results obtained with postal recruitment suggest that the presence of an interviewer may not be essential for participation in panel surveys. Rao, Kaminska and McCutcheon (2010) showed for the Gallup Panel multi-mode experiment that recruitment by post significantly increased the likelihood of joining the panel compared with recruitment by random-digit-dialling telephone. Similarly, the Norwegian Citizen Panel, a web-based panel, obtained a recruitment rate of 20% in the first wave using postal mail instead of an interviewer (Hogestol and Skjervheim, 2013). Combined with incentives and reminders (Martinsson and Riedel, 2015), using the initial invitation to push respondents directly to the web at the recruitment wave therefore appears to be an effective alternative to recruiting offline through interviewers and later switching to web.

Despite these promising initial results for postal recruitment in longitudinal studies of individuals, its suitability for panel surveys of households and its longer-term impact on panel loyalty are less certain. Furthermore, it is still an open question whether web has any advantage over interviewer modes with respect to the participation of all household members. On the one hand, telephone interviewing may require contact to be established with each household member, often in separate call attempts, which may make it less efficient for enrolling all household members than a web-based design with postal contacts. On the other hand, interviewer contact with one household member at recruitment may help to convince other household members to participate, more so than a web-based self-completion recruitment interview. Although no evidence yet exists on the comparison between web and telephone, the UKHLS showed that after three waves, a mixed-mode design combining web with a face-to-face follow-up was more successful in obtaining complete household participation than a face-to-face-only design (Bianchi, Biffignandi, & Lynn, 2017). However, this was in the context of an existing panel, not one incorporating web from the recruitment wave.

In the present study, we shed light on the implications of the use of web in the first wave of a panel study for second wave participation. We use data from a mode experiment testing alternative recruitment strategies for a refreshment sample for the Swiss Household Panel (SHP) study. The experiment investigated the impact of incorporating online data collection in different ways on first-wave nonresponse and second-wave drop-out, in comparison to the standard SHP recruitment strategy involving telephone interviewing. Elsewhere, Voorpostel et al. (2020) showed that response rates in the first wave of the experiment were lower in the groups invited to take part online. In this paper, we consider possible longer-term effects of participating online on later drop-out. We address the following research questions:

RQ1: Does using web mode in the first wave of a panel study affect the probability of participating in the second wave compared with using telephone interviewing?

RQ2: Does this effect vary by sociodemographic characteristics?

RQ3: Does using web mode affect the likelihood of all eligible household members completing the individual questionnaire?

Data and methods

Design of the Swiss Household Panel mode experiment and response rates

The SHP has been interviewing households (resident in Switzerland) annually since 1999, predominantly by telephone (Tillmann et al., 2016). Refreshment samples were added in 2004 and 2013, and the SHP launched a third refreshment sample in 2020. In preparation, a mode experiment was conducted in 2017-2018 to compare the standard SHP telephone-based recruitment and fieldwork strategy with two online alternatives, which we report on here.

The standard SHP protocol involves a first telephone interview with a household reference person (HRP) to collect information on the household and its members. The HRP and all household members aged 14 or older are then asked to complete an individual questionnaire (also by telephone). This protocol has the advantage of allowing the HRP to complete both the household questionnaire and their own individual questionnaire in one interview, and may therefore lead to fuller HRP cooperation. However, as noted above, it often requires multiple follow-ups to secure the cooperation of other household members. Furthermore, the longer initial interview places a considerably greater burden on the HRP, which may decrease their likelihood of participating in the first place, as well as in subsequent waves.

The first online alternative tested was a mixed-mode protocol combining a telephone interview with the HRP to complete the household grid and household questionnaire, with web for the HRP and other household members to complete the individual questionnaires. This design resembles the usual telephone-based design at the household level, but it shortens the telephone interview and gives the HRP and household members more flexibility to complete the individual questionnaire online at their convenience. The second online alternative tested was a web-only protocol using web for the grid and for both the household and individual questionnaires (see Voorpostel et al., 2020). In practice, the SHP uses a mix of modes to follow up nonrespondents and maximise participation, and the same strategy was employed in the experiment. In this article, however, we focus on participation rates in the intended treatment groups.

The initial sample for the study was a simple random sample of individuals, stratified by region, drawn from a sampling frame based on population registers maintained by the Swiss Federal Statistical Office. The households of these individuals were then randomly assigned to one of the three protocols. For the majority of households, the sampled individual was approached first as the HRP; if the sampled person was an adult child living with their parents (knowable from auxiliary frame data), a parent was instead selected at random to act as the HRP. At both waves, household members were free to select a different HRP from the one initially approached.

At wave 1, HRPs in all three treatment groups received an invitation letter by post. In the telephone group, data was collected predominantly using telephone. In the mixed-mode group, initial contact and the household interview with the HRP were by telephone. Eligible individual household members, including the HRP, subsequently received login codes by mail and could then complete the individual questionnaire online. Telephone numbers were available from the sampling frame for 60 percent of the addresses sampled. In both the control and the mixed mode groups, face-to-face and web were offered as alternatives if no telephone number was available and to initial refusals (as was standard practice for the most recent SHP refreshment sample). In the mixed mode group, nonresponding individual household members received two reminders before they were contacted by phone if a telephone number was available. HRPs in the web-only group received a login code with their invitation letters and completed all questionnaires by web. Household members received login codes for their individual questionnaires after the HRP had provided information on the household composition. Upon request, respondents could be interviewed by telephone. Households and household members that did not respond after two reminder letters, and for whom a telephone number was available, were contacted by phone in a final effort to recruit them into the panel.

Wave 2 followed the same protocols, but with 30 percent of the mixed-mode group switched to the protocol of the web-only group to assess whether, once recruited, a web-only strategy would be more advantageous than continuing to use telephone for interviewing the HRP. For this reason, the mixed-mode group started out with a larger sample size (2192 households) at wave 1 than the telephone group (790 households). As response rates tend to be lower in web surveys, the web group was also larger than the telephone group (1213 households).
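The allocation described above (790, 2192 and 1213 households, with 30 percent of the mixed-mode group later switched to web-only) can be illustrated with a minimal Python sketch. It assumes complete randomisation of households with fixed group sizes and a simple random draw of the switch subset from the whole mixed-mode allocation; the text does not say exactly how either step was carried out, and the function and group names are hypothetical.

```python
import random

# Wave 1 group sizes reported in the text (4195 households in total).
GROUP_SIZES = {"telephone": 790, "mixed_mode": 2192, "web_only": 1213}


def assign_protocols(household_ids, group_sizes, seed=None):
    """Randomly allocate households to protocols with fixed group sizes.

    Hypothetical helper: complete randomisation is an assumption,
    not the documented SHP procedure.
    """
    if len(household_ids) != sum(group_sizes.values()):
        raise ValueError("group sizes must sum to the number of households")
    rng = random.Random(seed)
    shuffled = list(household_ids)
    rng.shuffle(shuffled)
    assignment, start = {}, 0
    for group, size in group_sizes.items():
        for hh in shuffled[start:start + size]:
            assignment[hh] = group
        start += size
    return assignment


def draw_switch_subset(assignment, group="mixed_mode", share=0.30, seed=None):
    """Draw a random share of one group to be switched to web-only at wave 2."""
    rng = random.Random(seed)
    members = sorted(hh for hh, g in assignment.items() if g == group)
    return set(rng.sample(members, round(share * len(members))))


households = list(range(sum(GROUP_SIZES.values())))  # hypothetical IDs 0..4194
assignment = assign_protocols(households, GROUP_SIZES, seed=2017)
switched = draw_switch_subset(assignment, seed=2018)
```

Fixing the seeds makes the allocation reproducible, which is useful when the assignment has to be documented alongside the data.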

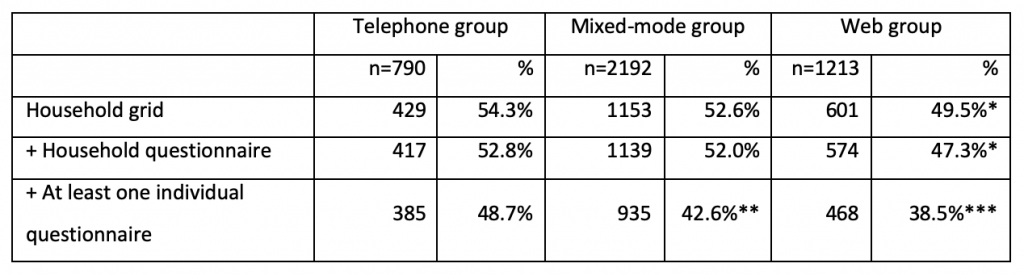

Table 1 shows the overall wave 1 response rates for all participating households (number of participating households divided by all eligible households approached; AAPOR response rate 1). In the first wave, the telephone group obtained the highest response rate at the household level, regardless of how participation was defined (grid only; grid and household questionnaire; or grid, household questionnaire and at least one individual questionnaire). Response rates were lowest in the web group, with the mixed-mode group in an intermediate position. At the household level, response in the web group was significantly lower in the first wave than in the telephone group. When response to both the household questionnaire and at least one individual questionnaire was considered, both the mixed-mode and the web groups performed significantly worse than the telephone group (see Voorpostel et al., 2021 for details).

Table 1. Household response rates by treatment group in Wave 1 (full sample, N=4195)

Notes: Significant differences with telephone group tested with two-sided z-tests, *p<.05, **p<.01, ***p<.001
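Table 1 reports AAPOR response rate 1 (RR1), which counts only complete responses in the numerator and treats all cases of unknown eligibility as eligible in the denominator. A minimal Python sketch of the standard RR1 formula is given below. The disposition counts in the example are invented, and how the SHP classified individual cases is not described here; the numerator changes with the participation definition used in Table 1 (grid only, grid plus household questionnaire, or both plus at least one individual questionnaire).

```python
def aapor_rr1(complete, partial, refusal, non_contact, other,
              unknown_household=0, unknown_other=0):
    """AAPOR Response Rate 1.

    complete     = complete responses (the participation definition sets what counts)
    partial      = partial responses
    refusal      = refusals and break-offs
    non_contact  = eligible non-contacts
    other        = other eligible non-respondents
    unknown_*    = cases of unknown eligibility (household / other)
    """
    denominator = ((complete + partial) + (refusal + non_contact + other)
                   + (unknown_household + unknown_other))
    if denominator == 0:
        raise ValueError("denominator is zero")
    return complete / denominator


# Hypothetical dispositions for one treatment group (not the study's data).
rate = aapor_rr1(complete=40, partial=10, refusal=30, non_contact=15, other=5)
print(f"RR1 = {rate:.1%}")  # RR1 = 40.0%
```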

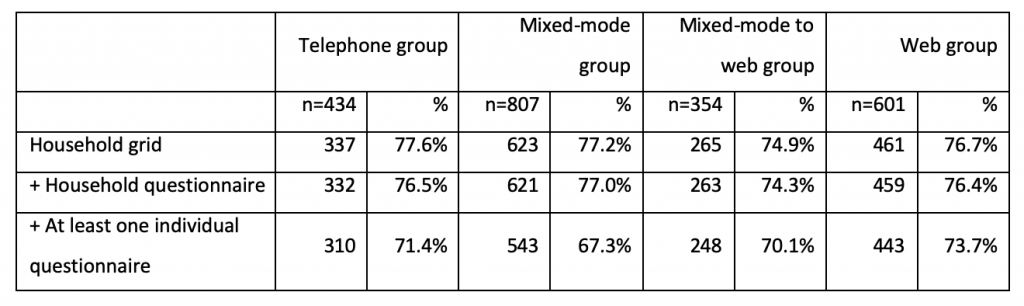

All households that completed at least the grid in the first wave were re-approached at wave 2 (N=2131), unless they had requested not to be re-contacted. Table 2 shows response rates for wave 2 and indicates that, at the household level, they were quite comparable across treatment groups. Z-tests indicated that none of the alternative designs differed significantly from the original telephone design in their second-wave response rate.

Table 2. Household response rates by treatment group in Wave 2 (full sample, N=2196)

Notes: Significant differences with telephone group tested with two-sided z-tests, *p<.05, **p<.01, ***p<.001
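Tables 1 and 2 compare each design with the telephone group using two-sided z-tests. Assuming these are standard pooled two-proportion z-tests (the text does not specify pooled versus unpooled standard errors), a minimal standard-library Python sketch is shown below; the counts in the example are hypothetical.

```python
import math


def two_proportion_ztest(x1, n1, x2, n2):
    """Pooled two-sample z-test for a difference in proportions.

    x1, x2 = responding households; n1, n2 = households approached.
    Returns (z, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("standard error is zero (all or no cases responded)")
    z = (p1 - p2) / se
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)) for the standard normal.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical example: 60/100 responded in one group, 40/100 in the other.
z, p = two_proportion_ztest(60, 100, 40, 100)
print(f"z = {z:.2f}, p = {p:.4f}")  # z = 2.83, p = 0.0047
```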

In sum, whereas the protocols differed with respect to successfully recruiting households into the panel in the first wave, no differences in response rates were observed in the second wave. The initial recruitment differences at wave 1 produced lower cumulative response rates in the mixed-mode and especially the web group relative to the original sample. After two waves, the telephone group contained 42.7% of the original sample of households approached in Wave 1. This was 40.5% for the mixed-mode group and 38.0% for the web group. Only the difference between the telephone group and the web group was significant.
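The cumulative figures above (42.7%, 40.5% and 38.0%) express second-wave participation relative to the households originally approached at wave 1. Under the simplifying assumption that every wave-1 respondent counts in the wave-2 base (households that asked not to be re-contacted then count as wave-2 nonrespondents) and that each wave's rate is conditional on responding to the previous wave, the cumulative rate is the product of the wave-specific rates, as in this small Python sketch. The input rates are illustrative only, not the values in Tables 1 and 2.

```python
def cumulative_response_rate(conditional_rates):
    """Share of the originally approached sample still responding after the
    last wave, given each wave's response rate among the previous wave's
    respondents (all of whom are assumed to be in the base)."""
    cumulative = 1.0
    for rate in conditional_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates must be proportions between 0 and 1")
        cumulative *= rate
    return cumulative


# Illustrative: 50% respond at wave 1, 85% of those respond again at wave 2.
print(f"{cumulative_response_rate([0.50, 0.85]):.1%}")  # 42.5%
```

This is why a design with a lower first-wave rate can end up with a lower cumulative rate even when its second-wave retention matches that of the telephone group.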

Analytical sample

Our study focuses on HRPs who completed the grid, the household questionnaire and the individual questionnaire in wave 1 (N=1668), and on their household members. We exclude 96 households that switched HRP between waves. We also restrict the sample to HRPs who completed the household grid in the intended mode of their treatment group, which was the case for about 85% of the responding HRPs in the first wave in all three groups. Thus, our analytical sample consists of 1227 HRPs (281 in the telephone group, 610 in the mixed-mode group and 336 in the web group). Of these 1227 HRPs, 1022 completed the household questionnaire in the second wave (83.3%) and 951 completed both the household and the individual questionnaire (77.5%).
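The sample restrictions above can be expressed as a filter over HRP records. The minimal Python sketch below assumes hypothetical field names and an intended grid mode per protocol (telephone for the telephone and mixed-mode groups, web for the web-only group, following the protocol descriptions). It also recomputes the reported second-wave completion shares from the published counts.

```python
from dataclasses import dataclass

# Intended mode for the wave 1 household grid in each protocol (from the design).
INTENDED_GRID_MODE = {"telephone": "telephone", "mixed_mode": "telephone", "web_only": "web"}


@dataclass
class HRPRecord:  # hypothetical record layout
    protocol: str
    w1_grid: bool
    w1_household_q: bool
    w1_individual_q: bool
    switched_hrp: bool   # a different HRP responded at wave 2
    w1_grid_mode: str    # mode actually used for the wave 1 grid


def in_analytic_sample(r: HRPRecord) -> bool:
    completed_w1 = r.w1_grid and r.w1_household_q and r.w1_individual_q
    return (completed_w1
            and not r.switched_hrp
            and r.w1_grid_mode == INTENDED_GRID_MODE[r.protocol])


def percent(part, whole):
    return round(100 * part / whole, 1)


# Published counts: 1022 and 951 of the 1227 HRPs in the analytic sample.
print(percent(1022, 1227), percent(951, 1227))  # 83.3 77.5
```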

For the analysis of complete household participation, we included all households in which the HRP completed at least the grid questionnaire in the wave concerned, in order to have information on which household members were eligible. We further restricted the sample to households of more than one person and treated household members interviewed by proxy (those aged under 14 or those unable to participate according to the HRP) as ineligible. In wave 1, the analytical sample consisted of 1511 households containing 3968 eligible household members; in wave 2, it consisted of 928 households containing 2349 eligible household members.
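The outcome for RQ3 can be derived household by household. A minimal Python sketch follows, assuming a hypothetical member record with an age, a proxy flag and a completion flag. As described above, members under 14 or interviewed by proxy are treated as ineligible and single-person households are excluded; returning None for a household with no eligible member is our own assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Member:  # hypothetical member record
    age: int
    proxy: bool                    # HRP reports the member unable to participate
    completed_individual_q: bool


def is_eligible(m: Member) -> bool:
    # Members under 14 and those interviewed by proxy are ineligible.
    return m.age >= 14 and not m.proxy


def complete_participation(members: List[Member]) -> Optional[bool]:
    """True if every eligible member completed the individual questionnaire,
    False if at least one did not, None if the household is not analysed."""
    if len(members) < 2:
        return None  # single-person households are excluded
    eligible = [m for m in members if is_eligible(m)]
    if not eligible:
        return None
    return all(m.completed_individual_q for m in eligible)
```

The count of eligible members computed along the way is also one of the control variables in the RQ3 models.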

Analytical strategy

To assess whether using web mode in the first wave of a panel study affects the probability of participating in the second wave compared with using telephone interviewing (RQ1), we first compare overall wave 2 participation across the three groups. We define participation in wave 2 in two ways: completion of at least the household questionnaire, and completion of both the household and the individual questionnaire by the HRP. Next, we estimate logistic regression models predicting participation in wave 2 (for both dependent variables), including as covariates dummy variables representing the recruitment protocol, a dummy variable indicating whether the household was switched to web-only in the second wave (relevant to the mixed-mode group only), and a number of socio-demographic variables measured in the first wave. The latter are included to control for any differences in the composition of the sample recruited at wave 1, and to assess whether any effect of using online data collection at wave 1 on second-wave dropout persists when controlling for known correlates of attrition in household panels (e.g. Bianchi and Biffignandi, 2019), including the SHP (Lipps, 2007; Voorpostel, 2010). Specifically, we include household characteristics: household size (1 (ref.), 2, or 3+ household members) and an indicator of the presence of children under 18 in the household; and characteristics of the HRP: gender, age (18-39, 40-54 (ref.), 55-64, and 65 and older), civil status (never married, married (ref.), divorced/separated/widowed), level of education (primary (ref.), secondary, tertiary) and nationality (Swiss (ref.), non-Swiss).
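The covariate coding just described is standard treatment (dummy) coding: each categorical variable enters as one 0/1 indicator per non-reference category. The following Python sketch builds such a row for one HRP using the reference categories listed above. The field names are hypothetical, gender, civil status and nationality would be coded in the same way, and the resulting rows could then be passed to any logistic regression routine.

```python
AGE_BANDS = [(18, 39, "18-39"), (40, 54, "40-54"), (55, 64, "55-64"), (65, 150, "65+")]


def age_band(age):
    for low, high, label in AGE_BANDS:
        if low <= age <= high:
            return label
    raise ValueError(f"age {age} is outside the coded range")


def household_size_category(n):
    if n < 1:
        raise ValueError("household size must be at least 1")
    return "1" if n == 1 else "2" if n == 2 else "3+"


def dummies(name, value, categories, reference):
    """One 0/1 indicator per non-reference category of a variable."""
    if value not in categories:
        raise ValueError(f"unknown category {value!r} for {name}")
    return {f"{name}[{c}]": int(value == c) for c in categories if c != reference}


def encode_hrp(row):
    """Encode one HRP (a dict with hypothetical keys) as model covariates."""
    x = {}
    x.update(dummies("protocol", row["protocol"],
                     ["telephone", "mixed_mode", "web_only"], "telephone"))
    x["switched_to_web_w2"] = int(row["switched_to_web_w2"])
    x.update(dummies("hh_size", household_size_category(row["hh_size"]),
                     ["1", "2", "3+"], "1"))
    x["children_u18"] = int(row["children_u18"])
    x.update(dummies("age", age_band(row["age"]),
                     [label for _, _, label in AGE_BANDS], "40-54"))
    x.update(dummies("education", row["education"],
                     ["primary", "secondary", "tertiary"], "primary"))
    return x


example = encode_hrp({"protocol": "web_only", "switched_to_web_w2": False,
                      "hh_size": 4, "children_u18": True, "age": 60,
                      "education": "tertiary"})
```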

Table A.1 in the appendix shows the sample composition (with respect to the socio-demographic variables) after the first wave for the three protocols. The frequency distributions across subgroups at the end of wave 1 varied to some extent by assigned mode, although only two variables differed significantly between the mixed-mode or web groups and the telephone group: the age and the level of education of the HRP. The mixed-mode and web groups recruited more young and highly educated HRPs into the study than the telephone group. This selective participation by mode in the first wave could result in differential dropout between protocols. For example, as higher education is associated with a higher propensity to respond to surveys, the higher share of highly educated HRPs in the mixed-mode and web groups may raise re-interview rates in these groups compared with the telephone group.

To assess whether the effect of using online data collection on second-wave dropout is the same for all sample subgroups (RQ2), we add interaction terms between the treatment group and each socio-demographic variable to the model, one variable per model, and retain in the final results only those interaction terms that are statistically significant.
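Adding a protocol-by-covariate interaction amounts to multiplying the protocol dummies by the dummies of one covariate at a time. A minimal Python sketch follows, assuming treatment-coded rows with hypothetical `name[category]` keys; the significance-based selection of terms is a separate modelling step that is not shown.

```python
def add_interactions(x, first="protocol", second="hh_size"):
    """Return a copy of an encoded row with all first x second product terms.

    Keys are assumed to look like "protocol[web_only]" or "hh_size[3+]".
    """
    out = dict(x)
    first_terms = [k for k in x if k.startswith(first + "[")]
    second_terms = [k for k in x if k.startswith(second + "[")]
    for a in first_terms:
        for b in second_terms:
            out[f"{a}:{b}"] = x[a] * x[b]
    return out


# One model per socio-demographic variable, each with its own interaction set.
CANDIDATES = ["hh_size", "age", "education"]

row = {"protocol[mixed_mode]": 0, "protocol[web_only]": 1,
       "hh_size[2]": 0, "hh_size[3+]": 1,
       "age[18-39]": 1, "age[55-64]": 0, "age[65+]": 0,
       "education[secondary]": 0, "education[tertiary]": 1}
model_rows = {var: add_interactions(row, "protocol", var) for var in CANDIDATES}
```

Keeping one interaction set per model, as in the text, avoids estimating many sparsely populated protocol-by-subgroup cells at once.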

To assess whether using web mode affects the complete participation of the household (RQ3), we estimated logistic regression models predicting the likelihood that all eligible household members (in households with more than one person) completed the individual questionnaire. We ran this analysis separately for waves 1 and 2, with the design groups as the main independent variables, controlling for assignment to the switch-to-web group in wave 2, the number of eligible household members and the presence of children in the household.

Results

Overall response rates in the three protocols

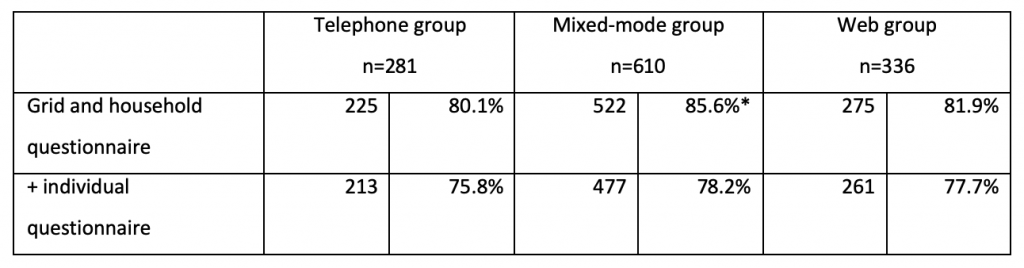

Table 3. Participation and response rates in the three protocols in Wave 2 (analytic sample, N=1227)

Notes: Significance tested with chi-square tests, * p<.05, ** p<.01, *** p<.001
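Table 3 reports chi-square tests. If each comparison is a 2 × 2 table of protocol (one design versus telephone) by wave 2 participation (an assumption; the tested tables are not shown), the Pearson statistic without continuity correction and its one-degree-of-freedom p-value can be computed with the standard library alone, as in this Python sketch with hypothetical counts.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for the table

        [[a, b],    e.g. design group:    participated, did not
         [c, d]]         telephone group: participated, did not

    Returns (statistic, p-value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("a row or column total is zero")
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


stat, p = chi_square_2x2(60, 40, 40, 60)  # hypothetical counts
print(f"chi2 = {stat:.2f}, p = {p:.4f}")  # chi2 = 8.00, p = 0.0047
```

For a 2 × 2 table, this statistic equals the square of the pooled two-proportion z statistic, so both tests lead to the same conclusion.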

When only considering completion of the household questionnaire, response rates in wave 2 are significantly higher in the mixed-mode group than in the telephone group. There were no significant differences between the web-only group and the telephone group. If completion of the individual questionnaire by the HRP is also taken into account, the differences between the groups follow the same pattern, but are smaller and not statistically significant.

Results of the logistic regression analysis predicting participation at wave 2 confirmed the bivariate results above; they are shown in Appendix A.2 (Models 1A and 1B). Model 1A (household questionnaire completion) shows that HRPs in the mixed-mode group are significantly more likely to complete the wave 2 household questionnaire than HRPs in the telephone group, even when controlling for other variables. The likelihood of the HRP completing the wave 2 household questionnaire in the web group did not differ significantly from that in the telephone group. When analysing completion of both the household and the individual questionnaire (Model 1B), however, we find no significant differences between the protocol groups. Hence, HRPs who used web during wave 1 (whether for the household or the individual questionnaire) were no less likely to complete wave 2 than respondents in the telephone group, even when controlling for differences in sample composition and for the modes offered at wave 2.

Interaction of protocol with socio-demographic characteristics

When comparing response rates by protocol and by household and individual socio-demographic characteristics (see Appendix A.3), bivariate analyses reveal some variation between groups. For example, in the telephone group, HRPs of larger households were less likely to participate in the second wave than those of smaller households, whereas in the web group, response rates were higher for larger households than for smaller ones. At the individual level, although female HRPs outnumbered male HRPs in all three groups in wave 1, male HRPs were more likely to repeat the survey in wave 2 in the mixed-mode and web groups. By contrast, in the telephone group, a larger share of female HRPs repeated the survey in wave 2 (for both household and individual questionnaire completion).

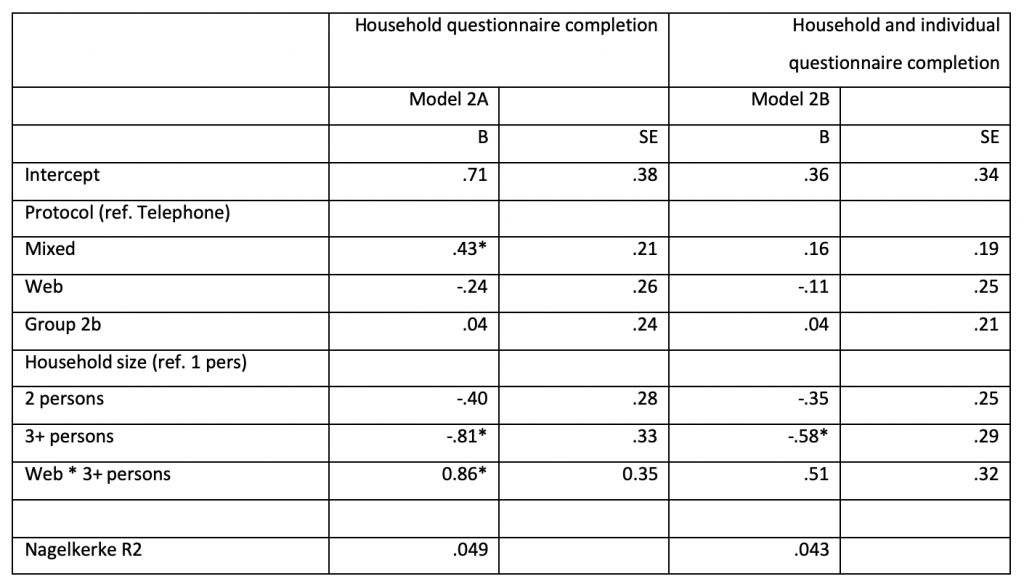

The results of the logistic regression analysis predicting participation at wave 2 with the addition of interactions between the protocol and socio-demographic characteristics of the HRP (RQ2) are shown in Table 4, and allow us to assess whether the bivariate associations are statistically significant when controlling for the other observed sociodemographic variables. Model 2A shows the only interaction effect that was significant: the interaction between web and three or more person households was positive and about the same size as the significant negative main effect for three or more person households. This shows that the HRPs of households of three persons or more were less likely to complete the wave 2 household questionnaire in the telephone and mixed-mode protocols, but this negative effect disappeared for the web-only group.

Table 4. Coefficients of logistic regression models predicting participation in Wave 2 (N=1221)

Note: Only coefficients relevant to the interpretation of the interaction effects are shown. Models controlled for children living in the household, sex, age, civil status, education and nationality of the HRP. Both models had 5 missing values for education and 1 for nationality. * p<.05, ** p<.01, *** p<.001
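The interpretation of Model 2A can be made explicit in log-odds terms. In our own notation (not the authors'), M and W indicate the mixed-mode and web-only groups, H2 and H3 indicate two-person and three-or-more-person households (single-person households are the reference), and z collects the remaining controls. The sketch assumes the model includes all protocol-by-household-size product terms, and implies no coefficient values beyond the signs and relative sizes reported in the text.

```latex
\operatorname{logit}\Pr(Y_i = 1) = \beta_0 + \beta_M M_i + \beta_W W_i
  + \beta_2 H_{2i} + \beta_3 H_{3i}
  + \gamma_{M2} M_i H_{2i} + \gamma_{M3} M_i H_{3i}
  + \gamma_{W2} W_i H_{2i} + \gamma_{W3} W_i H_{3i}
  + \mathbf{z}_i^{\top}\boldsymbol{\delta}

% Log-odds difference between 3+-person and 1-person households, by protocol:
\text{telephone: } \beta_3, \qquad
\text{mixed mode: } \beta_3 + \gamma_{M3}, \qquad
\text{web only: } \beta_3 + \gamma_{W3}

% Reported pattern: \beta_3 < 0 and \gamma_{W3} \approx -\beta_3, hence
\beta_3 + \gamma_{W3} \approx 0
\quad\Longrightarrow\quad
\exp(\beta_3 + \gamma_{W3}) \approx 1
```

An odds ratio close to 1 means that, in the web-only group, HRPs of households of three or more persons were about as likely as HRPs of single-person households to complete the wave 2 household questionnaire, while the negative effect of household size remained in the telephone and mixed-mode groups.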

In other words, compared to the web-only protocol, interviewing HRPs by telephone was less effective for recruiting and keeping HRPs of larger households in wave 2 of the panel. However, the moderating effect of using web only did not extend to wave 2 completion of both the household and the individual questionnaire – the same interaction term added to Model 2B was not statistically significant. Overall, our findings suggest that the likelihood of participating in the second wave did not vary much by design, and the effect of the design did not vary much as a function of household or individual socio-demographic characteristics.

Complete household participation

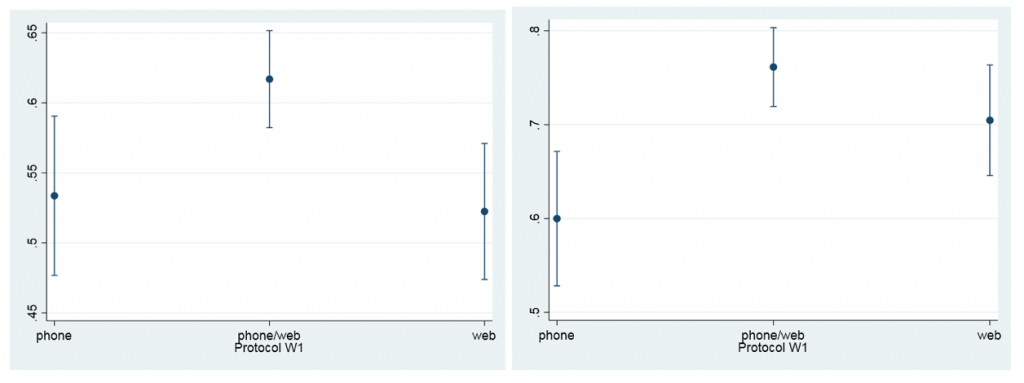

Finally, we address whether the designs differed with respect to the participation of other eligible household members. Figures 1a and 1b show the predicted probabilities of all eligible members of multi-person households completing the individual questionnaire in wave 1 and wave 2. At wave 1, the mixed-mode design was significantly more successful than the telephone design, whereas the web-only and telephone groups did not differ significantly. At wave 2, both the mixed-mode and the web groups were more successful than the telephone group at securing the participation of all eligible household members.

Figures 1a (left) and 1b (right). Predicted probability of complete household participation in Wave 1 (1a) and in Wave 2 (1b) by protocol.
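Predicted probabilities such as those in Figures 1a and 1b can be obtained from a fitted logistic model by setting every household to one protocol and averaging the predicted probabilities over the sample (marginal standardisation). Whether the figures use this approach or fix covariates at their means is not stated, so the Python sketch below is only one possible reading; the coefficient values and key names are hypothetical.

```python
import math

PROTOCOL_KEYS = ("protocol[mixed_mode]", "protocol[web_only]")  # telephone = reference


def inv_logit(eta):
    """Numerically stable logistic function."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)
    return e / (1.0 + e)


def average_predicted_probability(coefs, rows, protocol_key=None):
    """Mean predicted probability when every row is set to one protocol.

    protocol_key=None sets all protocol dummies to 0 (the telephone group).
    Every covariate in a row must have a coefficient in `coefs`.
    """
    total = 0.0
    for row in rows:
        x = dict(row)
        for key in PROTOCOL_KEYS:
            x[key] = int(key == protocol_key)
        eta = coefs["intercept"] + sum(coefs[k] * v for k, v in x.items())
        total += inv_logit(eta)
    return total / len(rows)


# Hypothetical coefficients and covariate rows, for illustration only.
coefs = {"intercept": -0.5, "protocol[mixed_mode]": 0.6,
         "protocol[web_only]": 0.4, "n_eligible": -0.2, "children_u18": 0.1}
rows = [{"n_eligible": 2, "children_u18": 0}, {"n_eligible": 3, "children_u18": 1}]
for label, key in [("telephone", None), ("mixed mode", "protocol[mixed_mode]"),
                   ("web only", "protocol[web_only]")]:
    print(label, round(average_predicted_probability(coefs, rows, key), 3))
```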

Conclusion/Discussion

The main finding of our study is that the use of web in the first wave of a panel study may initially produce lower response rates, but it does not result in significantly higher dropout at the second wave than telephone interviewing. So far, household panels have introduced web only by switching already loyal panel members from face-to-face interviews to web questionnaires (Bianchi, Biffignandi, & Lynn, 2017). Our study shows that using web questionnaires from the start of the study (with a postal contact strategy), despite producing slightly lower response rates in the first wave, does not lead to higher dropout rates in the second wave. This is a promising finding for the use of web when setting up a new panel or refreshment sample: panel retention after the first wave does not seem to be more at risk when sample members are approached by post and ‘pushed to web’ rather than by a telephone interviewer.

In particular, incorporating web in a mixed-mode recruitment strategy (in this case, for household members to complete individual questionnaires following a household-level telephone interview with the HRP) appears to benefit the subsequent participation of the HRP at wave 2, at least as far as completing the household questionnaire is concerned. Presumably, this is because the telephone interview is relatively short compared with the standard SHP telephone (control group) protocol, in which HRPs are encouraged to complete both the household and the individual questionnaire in the same interview.

We also assessed the extent to which the effects of using web at wave 1 on dropout varied by demographic characteristics. Although bivariate analyses showed that sample composition differed by treatment group at wave 1, we found no evidence that demographic groups differed significantly in their propensity to respond at wave 2 as a function of the recruitment strategy they experienced at wave 1. The one exception was larger households, for which the web-only protocol appears to protect slightly against post-recruitment dropout. This, again, is likely because HRPs can avoid a lengthy telephone interview in which they would need to provide details for every additional household member. Nonetheless, the differences in sample composition observed in the three treatment groups (at both waves) suggest that over time, the composition of a longitudinal panel would vary depending on the mode in which survey waves are conducted.

So-called adaptive designs, which approach each sample member in the mode with the highest likelihood of response, are possible at panel recruitment if auxiliary data on the characteristics of sample members are available, together with prior empirical data on the response propensities of different sample subgroups in different modes (Carpenter and Burton, 2018; Carpenter et al., 2019; Jäckle et al., 2017; Schouten, Peytchev, and Wagner, 2017). Our study’s findings do not suggest any clear recommendations for implementing an adaptive design, except that larger households may benefit from online recruitment.
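The assignment rule behind such an adaptive design can be sketched as follows: for each subgroup identified from auxiliary data, choose the mode with the highest predicted response propensity. This is a minimal illustration under stated assumptions. The subgroup labels, the function name `assign_mode`, and all propensity values are hypothetical placeholders, not estimates from the SHP experiment or from the cited studies.

```python
from typing import Dict

# HYPOTHETICAL predicted response propensities by subgroup and mode.
# These numbers are invented for illustration only; in practice they would
# come from prior empirical data on each subgroup's response in each mode.
PROPENSITIES: Dict[str, Dict[str, float]] = {
    "single_person": {"telephone": 0.55, "mixed_mode": 0.52, "web_only": 0.50},
    "large_household": {"telephone": 0.45, "mixed_mode": 0.48, "web_only": 0.53},
}


def assign_mode(subgroup: str,
                propensities: Dict[str, Dict[str, float]] = PROPENSITIES) -> str:
    """Return the mode with the highest predicted response propensity
    for the given (hypothetical) subgroup."""
    modes = propensities[subgroup]
    return max(modes, key=modes.get)


if __name__ == "__main__":
    # Hypothetical sample members with subgroups derived from auxiliary data.
    sample = [("A", "single_person"), ("B", "large_household")]
    for person_id, subgroup in sample:
        print(person_id, assign_mode(subgroup))
```

With these placeholder values, member A would be approached by telephone and member B by web only. A real design would also weigh costs and expected selection errors, not response propensity alone.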

A specific challenge for household panels is to gain the cooperation of all eligible household members. Our study is the first to compare the use of telephone and web for complete household participation, and it finds a positive effect of the web mode. In the first wave, the mixed-mode strategy, which combined a household-level telephone interview with the HRP and individual web questionnaires for household members, led to a larger share of fully cooperating households. In the second wave, whether the HRP completed the household questionnaire by telephone or by web was no longer a determining factor: web questionnaires at the individual level led to more complete household cooperation in the study.

A number of decisions we made in this study have likely influenced our results. Limiting the study to HRPs who completed the questionnaire in the assigned mode means that our results show only part of the overall picture. As all protocols effectively offered respondents a choice of mode (by offering alternatives at non-response follow-ups or if the respondent expressed a preference), the final sample composition obtained in each group of the mode experiment varied less than the analysis presented here suggests (Voorpostel et al., 2020). Similarly, the wave 1 selection errors are larger in the telephone and mixed-mode groups because telephone numbers were unavailable for 40% of the sample. Further analysis should consider the impact of combining modes sequentially on household-level response and on the characteristics of the achieved sample. Our approach nevertheless allows us to isolate the ‘pure’ effect of the intended treatments, even though, in practice, mode mixing was permitted (and necessary) in all three groups to improve response rates and representation. Finally, our results may be affected by the relatively short wave 1 fieldwork period (about two months), which affected the web group the most. This likely led to a selection of respondents in the web group who were particularly motivated to participate and hence less likely to drop out after recruitment.

The findings presented here testify to the growing potential and advantages of web-based data collection in general population surveys, including those with a longitudinal perspective. A caveat is that internet coverage in Switzerland is very high, and the SHP can use a sampling frame of individuals based on population registers, meaning that a target HRP can (in most cases) be identified and contacted directly. It is not clear to what extent our conclusions would generalise to countries where such frames are unavailable or where internet penetration is lower. Equally, as more and more people depend on their smartphones for internet access, survey designs will need to be adapted to accommodate mobile respondents, and decisions about mode may need to take account of questionnaire design features and their suitability for web-based self-completion. Nevertheless, our findings support the overriding conclusion that using some combination of web and interviewer modes (Jäckle, Gaia and Benzeval, 2017) in longitudinal studies is mostly beneficial, both for reducing the selection errors associated with the use of a single mode and for reducing data collection costs (Bianchi, Biffignandi and Lynn, 2017; Carpenter and Burton, 2018; Sakshaug, Cernat and Raghunathan, 2019).

Appendix

References

- Bianchi, A., & Biffignandi, S. (2019). Social indicators to explain response in longitudinal studies. Social Indicators Research, 141(3), 931-957.

- Bianchi, A., Biffignandi, S., & Lynn, P. (2017). Web-face-to-face mixed-mode design in a longitudinal survey: Effects on participation rates, sample composition, and costs. Journal of Official Statistics, 33, 385-408.

- Blom, A. G., Bosnjak, M., Cornilleau, A., Cousteaux, A. S., Das, M., Douhou, S., & Krieger, U. (2016). A comparison of four probability-based online and mixed-mode panels in Europe. Social Science Computer Review, 34(1), 8-25. https://doi.org/10.1177/0894439315574825

- Carpenter, H., & Burton, J. (2018). Adaptive push-to-web: Experiments in a household panel study. Understanding Society Working Paper Series 2018-05.

- Carpenter, H., Parutis, V., & Burton, J. (2019). The implementation of fieldwork design initiatives to improve survey quality. Understanding Society Working Paper Series 2019-11.

- Cernat, A., & Sakshaug, J. (in press). Estimating the measurement effects of mixed modes in longitudinal studies: Current practice and issues. In P. Lynn (Ed.), Advances in longitudinal survey methodology. Hoboken: Wiley.

- De Leeuw, E. D., & Lugtig, P. (2015). Dropouts in longitudinal surveys. Wiley StatsRef: Statistics Reference Online. John Wiley & Sons, Ltd. https://doi.org/10.1002/9781118445112.stat06661.pub2

- Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method. Hoboken, NJ: John Wiley & Sons.

- Hogestol, A., & Skjervheim, O. (2013). Norwegian citizen panel. 2013, First wave.

- Hox, J., De Leeuw, E., & Klausch, T. (2017). Mixed-mode research: Issues in design and analysis. In P. Biemer, E. De Leeuw, S. Eckman, et al. (Eds.), Total survey error in practice (pp. 511-530). New York: Wiley.

- Jäckle, A., Gaia, A., & Benzeval, M. (2017). Mixing modes and measurement methods in longitudinal studies. CLOSER Resource Report. London: UCL, Institute of Education.

- Jäckle, A., Lynn, P., & Burton, J. (2015). Going online with a face-to-face household panel: Effects of a mixed mode design on item and unit non-response. Survey Research Methods, 9(1), 57-70.

- Lipps, O. (2007). Attrition in the Swiss household panel. Methoden, Daten, Analysen (Mda), 1(1), 45-68.

- Lipps, O., & Voorpostel, M. (2020). Can interviewer evaluations predict short-term and long-term participation in telephone panels? Journal of Official Statistics, 36(1), 117-136. https://doi.org/10.2478/jos-2020-0006

- Lüdtke, D., & Schupp, J. (2016). Wechsel von persönlichen Interviews zu webbasierten Interviews in einem laufenden Haushaltspanel: Befunde vom SOEP [Switching from personal interviews to web-based interviews in an ongoing household panel: Findings from the SOEP]. In S. Eifler & F. Faulbaum (Eds.), Methodische Probleme von Mixed-Mode-Ansätzen in der Umfrageforschung (pp. 141-160). Wiesbaden: Springer.

- Martinsson, J., & Riedel, K. (2015). Postal recruitment to a probability based web panel. Long term consequences for response rates, representativeness and costs. Retrieved from https://gup.ub.gu.se/publication/222612.

- Olson, K., et al. (2019). Transitions from telephone surveys to self-administered and mixed-mode surveys. AAPOR Report. Retrieved from https://www.aapor.org/Education-Resources/Reports/Transitions-from-Telephone-Surveys-to-Self-Adminis.aspx

- Olson, K., Smyth, J. D., & Wood, H. M. (2012). Does giving people their preferred survey mode actually increase survey participation rates? An experimental examination. Public Opinion Quarterly, 76(4), 611-635.

- Rao, K., Kaminska, O., & McCutcheon, A. L. (2010). Recruiting Probability Samples for a Multi-Mode Research Panel with Internet and Mail Components. Public Opinion Quarterly, 74(1), 68–84.

- Sakshaug, J. W., Cernat, A., & Raghunathan, T. E. (2019). Do sequential mixed-mode surveys decrease nonresponse bias, measurement error bias, and total bias? An experimental study. Journal of Survey Statistics and Methodology, 7(4), 545-571. https://doi.org/10.1093/jssam/smy024

- Sakshaug, J. W., & Kreuter, F. (2011). Using paradata and other auxiliary data to examine mode switch nonresponse in a “recruit-and-switch” telephone survey. Journal of Official Statistics, 27(2), 339-357.

- Sakshaug, J. W., Yan, T., & Tourangeau, R. (2010). Nonresponse error, measurement error, and mode of data collection: Tradeoffs in a multi-mode survey of sensitive and non-sensitive items. Public Opinion Quarterly, 74(5), 907-933. https://doi.org/10.1093/poq/nfq057

- Schouten, B., Peytchev, A., & Wagner, J. (2017). Adaptive survey design. New York: Chapman and Hall/CRC.

- Tillmann, R., Voorpostel, M., Kuhn, U., Lebert, F., Ryser, V. A., Lipps, O., Wernli, B., & Antal, E. (2016). The Swiss household panel study: Observing social change since 1999. Longitudinal and Life Course Studies, 7(1), 64-78.

- Tourangeau, R. (2017). Mixing modes: Tradeoffs among coverage, nonresponse, and measurement error. In P. P. Biemer, E. D. De Leeuw, S. Eckman, B. Edwards, F. Kreuter, L. E. Lyberg, C. Tucker & B. T. West (Eds.), Total survey error in practice: Improving quality in the era of big data (pp. 115-132). Hoboken, NJ: Wiley.

- Tourangeau, R. (2018). Choosing a mode of survey data collection. In D. L. Vannette & J. A. Krosnick (Eds.), The Palgrave Handbook of Survey Research (pp. 43-50). Cham: Springer International Publishing.

- Tourangeau, R., Conrad, F. G., & Couper, M. P. (2013). The science of web surveys. New York: Oxford University Press.

- Voorpostel, M. (2010). Attrition patterns in the Swiss household panel by demographic characteristics and social involvement. Swiss Journal of Sociology, 36(2), 359-377.

- Voorpostel, M., Lipps, O., & Roberts, C. (2021). Mixing modes in household panel surveys: recent developments and new findings. In P. Lynn (Ed.), Advances in Longitudinal Survey Methodology. Oxford: Wiley.

- Voorpostel, M., Kuhn, U., Tillmann, R., Monsch, G. A., Antal, E., Ryser, V. A., … & Dasoki, N. (2020). Introducing web in a refreshment sample of the Swiss Household Panel: Main findings from a pilot study. FORS Working Paper Series, paper 2020-2. Lausanne: FORS.