Using surveys to assess students’ political knowledge: Evidence of a gender gap or disparate response styles?

Research note

Winkler, C. E. (2024). Using surveys to assess students’ political knowledge: Evidence of a gender gap or disparate response styles? Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=16162

© the authors 2024. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

The aims of public education often include preparing students to be actively engaged in the civic and political realms of society. However, research findings have consistently provided evidence of a gender gap in political knowledge, with men demonstrating significantly higher levels of political knowledge than women. Recently, scholars have begun questioning the validity of such findings due to the nature of the measures used to assess political knowledge. This study applies item response theory, specifically IRTree modeling, to evaluate the extent to which a reported gender gap in high school students’ political knowledge can be attributed to survey response styles. Implications for similar instruments designed to assess political knowledge are discussed.

Keywords

data quality, gender comparisons, item response theory, measurement, validity

Background

Numerous educators and scholars have articulated the role of public education in promoting political and civic engagement (e.g., Dewey, 1916; Covaleski, 2007; Flanagan et al., 2010; McLeod et al., 2010). As Flanagan et al. (2010) note, “more than any other institution, the public school introduces younger generations to and develops their capacities for participating in a democratic society” (p. 323). Recent research has found that school experiences exert a particularly strong influence on the development of knowledge and attitudes that precede civic engagement in adulthood (Dassonneville et al., 2012; Kisby & Sloam, 2014; Pontes et al., 2019). Experiences inside and outside the classroom, such as civics courses and discussing public affairs, have been found to promote civic engagement across the life course (McLeod et al., 2010). Thus, civic and political engagement, like other developmental outcomes, is rooted in the early educational experiences of youth (Astuto & Ruck, 2017).

Previous studies measuring political knowledge, a foundational component of civic engagement, have often pointed to the particularly limited knowledge base of adolescents (Dudley & Gitelson, 2002). Other studies have noted a gender gap in adolescents’ political knowledge. For example, Wolak and McDevitt (2011) found that, even when accounting for factors such as political interest and efficacy, young women scored significantly lower on political knowledge items than young men. This disparity persists across the life cycle, as numerous authors have found that men consistently exhibit higher levels of political knowledge than women in adulthood (Dolan, 2011). In fact, Dow (2009) described this gender gap in political knowledge as “[o]ne of the most robust findings” in the realm of political research (p. 117).

Recently, scholars have raised concerns regarding the survey practices common in research on political knowledge (Mondak & Davis, 2001). Mondak and Davis (2001) suggested that the presence and/or magnitude of a gender gap may be confounded by the way political knowledge is quantitatively measured. For example, including a “don’t know” response option is the norm in many political knowledge surveys; “don’t know” and incorrect responses are then collapsed together, on the untested assumption that both represent an absence of political knowledge (Mondak & Davis, 2001).

With these common practices in mind, Mondak and Anderson (2004) examined whether the political knowledge gap between men and women is merely an artifact of how such knowledge is measured. They hypothesized that if men were more likely to guess than women (or vice versa), then the observed differences in political knowledge by gender would be artificially inflated (Mondak & Anderson, 2004). They ultimately concluded that “a substantial portion of the knowledge gender gap is the consequence of a response set effect” (Mondak & Anderson, 2004, p. 507). Similarly, Lizotte and Sidman (2009) conducted multiple analyses, including a three-level hierarchical model and three-parameter (3PL) item response models, which together suggested that the difference in guessing behavior of men and women accounted for nearly 36% of the gender gap in political knowledge. These studies, however, focused on political knowledge among adult populations.

Although much of the aforementioned research was conducted in the United States (U.S.) political context, scholars have noted the applicability of such studies in both the U.S. and abroad (Jerit & Barabas, 2017). For example, questions related to persistent gender gaps in political knowledge have been explored within the specific contexts of Spain (Ferrín & Fraile, 2014), Britain (Frazer & Macdonald, 2003), and Canada (Stolle & Gidengil, 2010). Similar cross-national studies have also been conducted across Europe (e.g., 27 European Union countries examined by Fraile (2014)) and across democracies globally (e.g., 47 countries spanning Europe, Asia, and Latin America examined by Fortin-Rittberger (2016)). In fact, Fortin-Rittberger (2016) found that significant gaps in political knowledge existed in nearly all of the 47 democratic countries examined.

Purpose

This study sought to evaluate the presence and magnitude of a gender gap in political knowledge among high school students, after accounting for students’ response styles. More specifically, the purpose was to first ascertain whether political knowledge surveys of adolescents, specifically those which include “don’t know” response options, are truly testing one latent construct (political knowledge ability) or whether such surveys are testing two separate latent constructs (political knowledge ability and propensity to respond). Based on the results, this research also aimed to evaluate the extent to which the gender gap in political knowledge surveys for adolescents reflects true differences in knowledge between young men and women in high school, and the extent to which it reflects a substantively irrelevant response effect.

Ultimately, the underlying goal of this study was to inform survey methods that aim to produce an accurate measure of adolescents’ true levels of political knowledge; such information will inform schools’ efforts to more effectively prepare all students to be knowledgeable and engaged citizens. Although the current study pertains primarily to the U.S. context, the question examined and methodological approach employed can inform measurement of political knowledge across contexts. Given the prevalence of questions related to gender gaps in political knowledge noted above, a similar approach might offer insights for other democratic nations across the globe.

Methods

De Boeck and Partchev (2012) introduced a category of item response models, which they refer to as IRTree models. These models, as their name suggests, represent the response categories of items with a tree structure. In a linear response tree, each node can be modeled with a logit link, resulting in the estimation of probabilities for each response choice. De Boeck and Partchev (2012) suggested applying response trees to model missing data, as ability and omission tendency can potentially be measured as separate latent traits. In such cases, the missing response (i.e., omission tendency, or likelihood of selecting a “don’t know” response option) is modeled as a third response category. Subsequently, Debeer et al. (2017) illustrated, via a simulation study, that ignoring missing responses can result in biased estimates, and that bias in such instances can be reduced with the application of IRTree models, thereby resulting in more accurate estimates of the latent trait of interest.
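As a sketch of how node-level logits translate into category probabilities, consider a linear tree with two nodes and three leaves (omitted or “don’t know”, incorrect, correct). The symbols $\pi_1$, $\pi_2$, $\eta_1$, and $\eta_2$ are introduced here for illustration only and are not notation from De Boeck and Partchev (2012); $\eta_k$ stands for whatever linear predictor (person and item effects) is specified at node $k$.

$$
\begin{aligned}
\pi_k &= \frac{\exp(\eta_k)}{1 + \exp(\eta_k)}, \qquad k = 1, 2 \\
P(\text{omitted}) &= 1 - \pi_1 \\
P(\text{incorrect}) &= \pi_1 \, (1 - \pi_2) \\
P(\text{correct}) &= \pi_1 \, \pi_2
\end{aligned}
$$

The three probabilities sum to one, and the second node contributes only when the first branch leads to an attempted response.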

Data

The data used in this study were collected by McDevitt (2016) and are publicly available via the Inter-university Consortium for Political and Social Research (ICPSR). Sampling efforts were intended to produce a dataset that reflected the relational, cultural, and demographic diversity in the U.S. context. To this end, data were collected from ten states that varied in regional influence, sociopolitical culture, and state size. Those states included five “blue” (i.e., Democratic-leaning in terms of political party affiliation) states and five “red” (i.e., Republican-leaning in terms of political party affiliation) states; in other words, states affiliated with each of the dominant parties in the U.S. political system were equally represented in the sample frame.

Using a database of approximately 8,000,000 students in those 10 states, which was provided by American Student Lists, McDevitt (2016) selected a random sample of high school seniors. Survey data were obtained via computer-assisted telephone interviews and resulted in 950 completed interviews, with 95 completed interviews obtained from each of the 10 states in the sample frame. A slight majority of respondents identified as young women (n = 532; 56.0%), while the remaining respondents identified as young men (n = 418; 44.0%). Most respondents were white (n = 700; 73.7%), though Hispanic (n = 86; 9.1%), African American (n = 70; 7.4%), Asian (n = 35; 3.7%), and other (n = 41; 4.3%) racial and ethnic identifications were reported as well. When compared to National Center for Education Statistics (NCES) data, respondents were generally representative of the U.S. high school population at the time data were collected (McDevitt, 2009).

Using this same dataset, Wolak and McDevitt (2011) previously explored the roots of the gender gap in adolescents’ political knowledge. Guided by the work of Mondak and Anderson (2004), Wolak and McDevitt (2011) conducted their analyses on predictors of political knowledge using a multinomial logit model. The current study expanded on their work by examining more deeply the role that multiple response options play in the measurement of respondents’ political knowledge via application of IRTree models. Although initial data collection efforts by McDevitt (2016) included measurement of students’ dispositions, cognition/media use, and activist identity, the current study utilized only a subset of items—those intended to measure political knowledge, specifically.

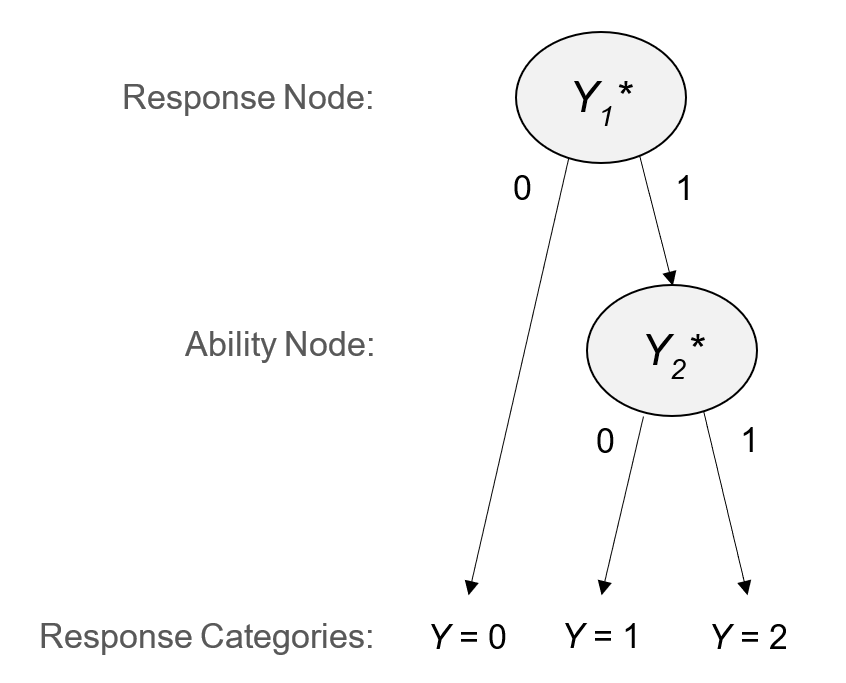

Analyses

IRTree models are members of the generalized linear mixed model (GLMM) family, and thus can be estimated using the lme4 package in R (Bates et al., 2011; De Boeck et al., 2011). In order to conduct the intended analyses in lme4, data were restructured as described by De Boeck and Partchev (2012). The restructured data reflected the IRTree structure (Figure 1), with two nodes representing two potentially separate latent traits. The first node (Y1: the “response” node) measured individuals’ propensity to respond to the knowledge items; the first branch indicated a respondent answered “don’t know” (0), while the second branch indicated that the respondent attempted a response, regardless of whether that attempt was correct or incorrect (1). Consequently, the second node (Y2: the “ability” node) measured level of political knowledge for those who attempted a response to each item; the first branch indicated a respondent provided an incorrect response (0), while the second branch indicated a respondent provided a correct response (1). Together, the two nodes contained individuals’ three possible response options: “Don’t know” (Y = 0), an incorrect response (Y = 1), or a correct response (Y = 2).
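The restructuring described above can be sketched as follows. This is a minimal illustration in Python rather than the R/lme4 workflow used in the study; the function name `expand_to_nodes`, the field names, and the toy records are hypothetical. Each observed category (0, 1, 2) is expanded into one row per node that was actually reached, so a “don’t know” response contributes no row for the ability node.

```python
# Node coding for the two-node tree, following the description in the text:
#   observed category -> (Y1 response node, Y2 ability node)
NODE_CODES = {
    0: (0, None),  # "don't know": no attempt, so the ability node is unobserved
    1: (1, 0),     # attempted, incorrect
    2: (1, 1),     # attempted, correct
}


def expand_to_nodes(wide_rows):
    """Turn (person, item, category) records into one row per observed node."""
    long_rows = []
    for person, item, category in wide_rows:
        node_values = NODE_CODES[category]
        for node, value in zip(("response", "ability"), node_values):
            if value is None:
                continue  # unreached node: treated as missing, not as incorrect
            long_rows.append(
                {"person": person, "item": item, "node": node, "y": value}
            )
    return long_rows


# Hypothetical toy data: two respondents, two items.
wide = [("p1", "q1", 0), ("p1", "q2", 1), ("p2", "q1", 2)]
stacked = expand_to_nodes(wide)
for row in stacked:
    print(row)
```

The stacked rows form a binary outcome with a node indicator, which is the layout a mixed logistic model needs in order to estimate separate person effects per node.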

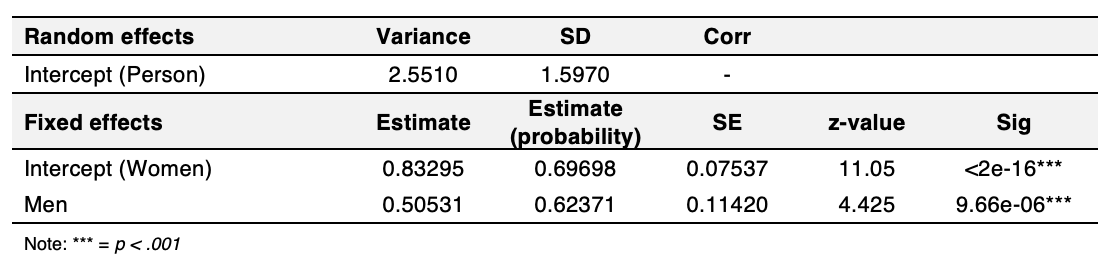

Three models were tested to represent multiple conditions related to respondents’ political knowledge and survey response styles. First, for comparison to subsequent models, Model 1 estimated the differences in political knowledge between young men and women using only fixed effects. It did not include effects by node, and thus did not simultaneously measure the propensity of men and women to provide a response to the political knowledge items. Model 1 essentially recreated the conditions under which claims of a political knowledge gender gap are often made (i.e., treating omission of a response as an incorrect response).

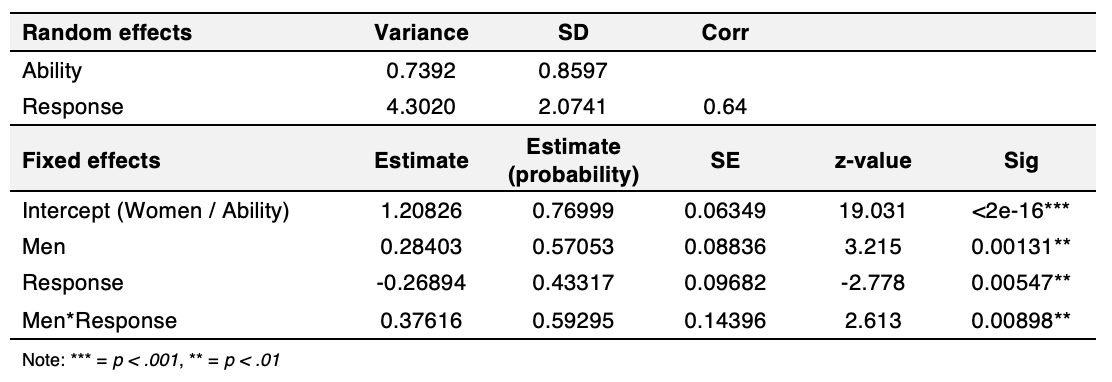

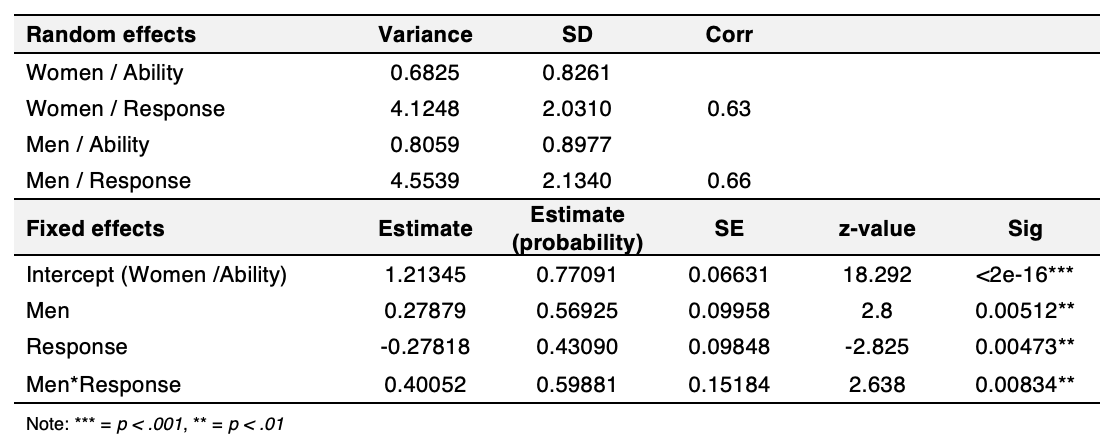

Conversely, Models 2 and 3 applied an IRTree structure to test whether propensity to respond and political knowledge were separate latent traits in the sample. This was tested via estimation of fixed effects for both gender (men/women) and node (response/ability). While Model 2 estimated random effects by node only, Model 3 estimated random effects for both node and gender (i.e., allowed for heteroscedasticity by gender); this means that Model 2 estimated unique variances for the response node and ability node, and Model 3 estimated unique variances for the response node and ability node by gender. Model fit for the three alternatives was compared using AIC, BIC, deviance, and a likelihood ratio test.

Results

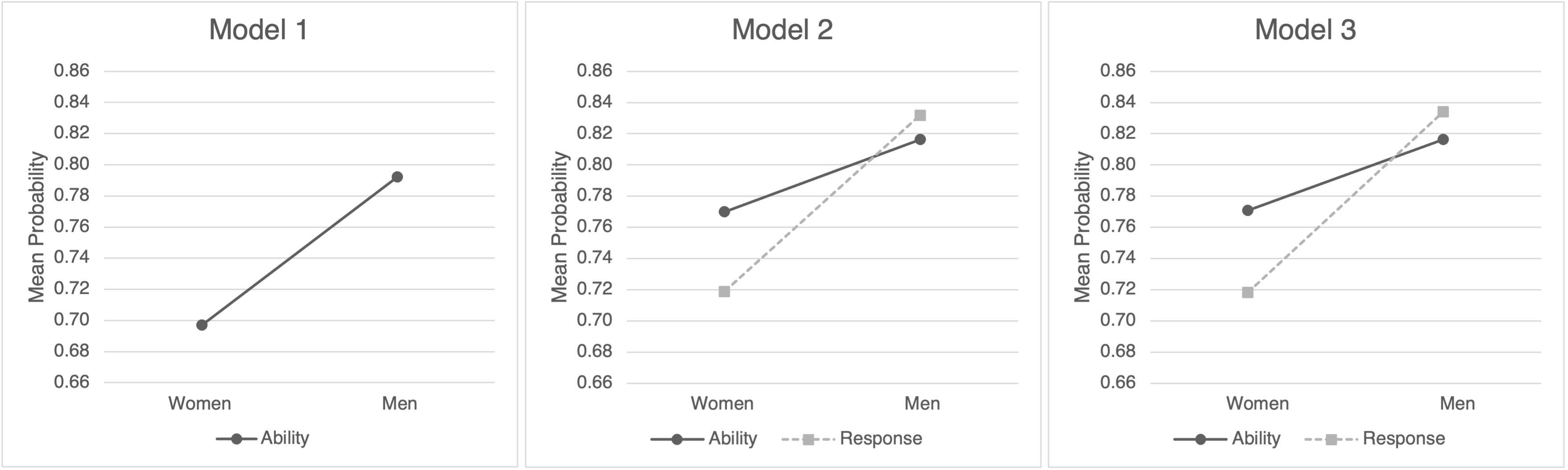

As expected based on the substantive literature, the fixed effects estimated from Model 1 suggested that young men had significantly higher levels of political knowledge than young women (p < .001) (Table 1). The mean ability estimates indicated that, on average, young men in high school had a 79.22% probability of responding correctly to the political knowledge items, whereas on average young women in high school had a 69.70% probability of responding correctly to the same political knowledge items. This gender gap was consistent with the findings of Wolak and McDevitt (2011), as well as other studies. This was to be expected, as Model 1 collapsed “don’t know” and incorrect responses, and thus estimated individual ability based solely on correct responses.
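The two mean probabilities can be converted back to the logit scale to see the size of the Model 1 gap. The short check below is an illustration added here, not part of the original analysis; it assumes only the two percentages reported above and the standard logit transformation.

```python
import math


def logit(p):
    """Log-odds of a probability p (0 < p < 1)."""
    return math.log(p / (1 - p))


# Mean probabilities of a correct response reported for Model 1.
p_men = 0.7922
p_women = 0.6970

gap_logits = logit(p_men) - logit(p_women)
print(round(gap_logits, 3))  # about 0.505
```

The result agrees, up to rounding of the reported percentages, with the Model 1 gap of .50531 logits cited in the discussion of Model 2.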

Model 2 also found that young men had significantly higher levels of political knowledge than young women (p = .00131) (Table 2). This difference, however, was coupled with a significant difference in the propensity for individuals to respond to the political knowledge items on the survey (p = .00547), as well as a significant interaction between gender and response (p = .00898). When accounting for differences in the response node, the gender gap with regard to the ability node decreased (from .50531 logits to .28403 logits). Variance estimates for the two nodes indicated that there was greater variance in the propensity to respond (4.3020) than in political knowledge (.7392). Additionally, a moderate correlation between the ability and response nodes (.64) revealed that respondents with higher levels of political knowledge were generally more likely to provide a response to the survey questions than those with lower levels of political knowledge.
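To give a rough sense of scale, the two reported gaps can be compared directly. The arithmetic below is an illustration added here, and it assumes the two coefficients can be read on a common logit scale; coefficients from models with different random-effects structures are not strictly comparable, so the figures are descriptive only.

```python
import math

# Gender gaps on the ability dimension, in logits, as reported in the text.
gap_model1 = 0.50531
gap_model2 = 0.28403

# Share of the Model 1 gap that is no longer present in Model 2.
relative_reduction = (gap_model1 - gap_model2) / gap_model1

# The same gaps expressed as odds ratios (men vs. women) of a correct response.
odds_ratio_model1 = math.exp(gap_model1)
odds_ratio_model2 = math.exp(gap_model2)

print(f"relative reduction: {relative_reduction:.1%}")
print(f"odds ratio, Model 1: {odds_ratio_model1:.2f}")
print(f"odds ratio, Model 2: {odds_ratio_model2:.2f}")
```

Under that assumption, the gap shrinks by a little over two fifths once the response node is modeled separately.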

Model 3 produced results nearly identical to those of Model 2 in terms of estimates of fixed effects (i.e., mean estimates of the ability and response latent traits by gender). The primary difference in Model 3 was its additional estimation of variance for each node by gender (Table 3). Interestingly, the variance estimates by gender were quite similar for both the response node (4.5539 for young men and 4.1248 for young women) and the ability node (.8059 for young men and .6825 for young women); the correlations between the ability and response nodes were also similar (.66 for young men and .63 for young women).

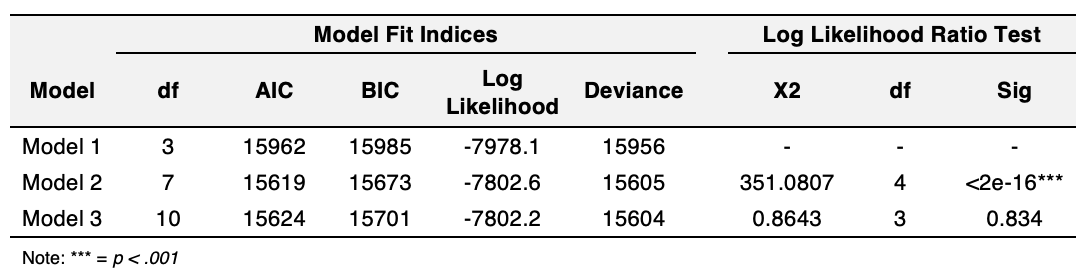

As indicated in Table 4, Model 2 fit the data significantly better than Model 1 (Δχ²(4) = 351.0807, p < .001), indicating that accounting for survey response styles improved estimates of students’ underlying political knowledge. Model 3, however, did not fit the data significantly better than Model 2 (Δχ²(3) = 0.8643, p = .834). The nearly equivalent random effects by gender in Model 3, coupled with less favorable model fit indices, indicated that, although it was crucial to account for response styles, allowing for heteroscedasticity by gender did not significantly improve the model.
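The p-values of the two likelihood ratio tests can be reproduced from the reported chi-square differences and degrees of freedom. The sketch below is an illustration added here; the helper `chi2_sf` is a hypothetical name and uses the closed-form upper-tail expressions for integer degrees of freedom so that only the Python standard library is needed.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variate with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{j=0}^{df/2 - 1} (x/2)^j / j!
        series = sum(half ** j / math.factorial(j) for j in range(df // 2))
        return math.exp(-half) * series
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{j=1}^{(df-1)/2} (x/2)^(j-1/2) / Gamma(j+1/2)
    series = sum(
        half ** (j - 0.5) / math.gamma(j + 0.5)
        for j in range(1, (df - 1) // 2 + 1)
    )
    return math.erfc(math.sqrt(half)) + math.exp(-half) * series


# Differences in deviance and degrees of freedom as reported in the text.
p_model2_vs_1 = chi2_sf(351.0807, 4)
p_model3_vs_2 = chi2_sf(0.8643, 3)

print(f"Model 2 vs. Model 1: p = {p_model2_vs_1:.3g}")
print(f"Model 3 vs. Model 2: p = {p_model3_vs_2:.3f}")
```

The first p-value is far below .001 and the second is approximately .834, in line with the reported values.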

In other words, accounting for differences in respondents’ propensity to select the “don’t know” response option resulted in different conclusions regarding political knowledge among young men and women in high school. As depicted in Figure 2, differences by gender are much less prominent in Models 2 and 3, which accounted for the increased likelihood among young women to respond using the “don’t know” option.

Significance

The results of this study, including the significantly better fit of both Models 2 and 3 when compared to Model 1, suggest that when using surveys to measure political knowledge, respondents’ ability should be viewed as a latent trait separate from their propensity to respond (versus select “don’t know”) to survey items. Current practices in political knowledge surveys, which treat “don’t know” responses as equivalent to incorrect responses, conflate individuals’ response tendencies with their true levels of political knowledge. While there may remain a difference in political knowledge between young men and women, the magnitude of that difference decreases when analyses such as IRTrees are utilized to more appropriately parse out the effects of respondent ability (the latent trait of interest) and survey response style.

These findings provide guidance for scholars and educators interested in cultivating students’ civic literacy, skills, and engagement. In particular, attention should be paid to evaluations of student learning in such realms, as efforts must be taken to eliminate potential sources of measurement error in the assessment of civic and political knowledge across student populations. After all, as Sherrod et al. (2010) contend, “research on the development of citizenship is essential to local, national, and worldwide efforts to promote an active engaged citizenship” (p. 15). Although the current study was conducted within the context of the U.S. political and school system, and thus may be limited in its generalizability, the methods illustrated in the current study may serve to inform measurement of other knowledge-based surveys across a variety of topics and contexts.

References

- Astuto, J., & Ruck, M. (2017). Growing up in poverty and civic engagement: The role of kindergarten executive function and play predicting participation in 8th grade extracurricular activities. Applied Developmental Science, 4, 301-318.

- Bates, D., Maechler, M., & Bolker, B. (2011). lme4: Linear mixed-effects models using S4 classes. R package version 0.999375-38, URL http://CRAN.R-project.org/package=lme4.

- Covaleski, J. (2007). What public? Whose schools? Educational Studies, 42, 28-42.

- Dassonneville, R., Quintelier, E., Hooghe, M., & Claes, E. (2012). The relation between civic education and political attitudes and behavior: A two-year panel study among Belgian late adolescents. Applied Developmental Science, 16, 140-150.

- De Boeck, P., Bakker, M., Zwitser, R., Nivard, M., Hofman, A., Tuerlinckx, F., & Partchev, I. (2011). The estimation of item response models with the lmer function from the lme4 package in R. Journal of Statistical Software, 39(12), 1-28.

- De Boeck, P., & Partchev, I. (2012). IRTrees: Tree-based item response models of the GLMM family. Journal of Statistical Software, 48, 1-28.

- Debeer, D., Janssen, R., & De Boeck, P. (2017). Modeling skipped and not-reached items using IRTrees. Journal of Educational Measurement, 54(3), 333-363.

- Dewey, J. (1916). Democracy and education. New York, NY: The Free Press.

- Dolan, K. (2011). Do women and men know different things? Measuring gender differences in political knowledge. The Journal of Politics, 73(1), 97-107.

- Dow, J. K. (2009). Gender differences in political knowledge: Distinguishing characteristics-based and returns-based differences. Political Behavior, 31, 117-136.

- Dudley, R. L., & Gitelson, A. R. (2002). Political literacy, civic education, and civic engagement: A return to political socialization? Applied Developmental Science, 6(4), 175-182.

- Ferrín, M., & Fraile, M. (2014). Measuring political knowledge in Spain: Problems and consequences of the gender gap in knowledge. Revista Española de Investigaciones Sociológicas, 147, 53-72.

- Flanagan, C., Stoppa, T., Syvertsen, A. K., & Stout, M. (2010). Schools and social trust. In L. R. Sherrod, J. Torney-Purta & C. A. Flanagan (Eds.), Handbook of research on civic engagement in youth. Hoboken, NJ: John Wiley & Sons Inc.

- Fortin-Rittberger, J. (2016). Cross-national gender gaps in political knowledge: How much is due to context? Political Research Quarterly, 69(3), 391-402.

- Fortunato, D., Stevenson, R. T., & Vonnahme, G. (2016). Context and political knowledge. The Journal of Politics, 78(4), 1211-1228.

- Fraile, M. (2014). Do women know less about politics than men? The gender gap in political knowledge in Europe. Social Politics, 21(2), 261-289.

- Frazer, E., & Macdonald, K. (2003). Sex differences in political knowledge in Britain. Political Studies, 51, 67-83.

- Jerit, J., & Barabas, J. (2017). Revisiting the gender gap in political knowledge. Political Behavior, 39, 817-838.

- Kisby, B., & Sloam, J. (2014). Promoting youth participation in democracy: The role of higher education. In A. Mycock & J. Tonge (Eds.), Beyond the youth citizenship commission: Young people and politics. London: Political Studies Association.

- Lizotte, M., & Sidman, A. H. (2009). Explaining the gender gap in political knowledge. Politics & Gender, 5, 127-151.

- McDevitt, M. (2009). Spiral of rebellion: Conflict seeking of democratic adolescents in republican counties (Working Paper #68). The Center for Information & Research on Civic Learning & Engagement (CIRCLE).

- McDevitt, M. (2016). Colors of Socialization: Political and Deliberative Development among Older Adolescents in 10 States, 2006-2007. Ann Arbor, MI: Inter-university Consortium for Political and Social Research [distributor].

- McLeod, J., Shah, D., Hess, D., & Lee, N. (2010). Communication and education: Creating competence for socialization into public life. In L. R. Sherrod, J. Torney-Purta & C. A. Flanagan (Eds.), Handbook of research on civic engagement in youth. Hoboken, NJ: John Wiley & Sons Inc.

- Mondak, J. J., & Anderson, M. R. (2004). The knowledge gap: A reexamination of gender-based differences in political knowledge. The Journal of Politics, 66(2), 492-512.

- Mondak, J. J., & Davis, B. C. (2001). Asked and answered: Knowledge levels when we will not take “don’t know” for an answer. Political Behavior, 23(3), 199-224.

- Pontes, A. I., Henn, M., & Griffiths, M. D. (2019). Youth political (dis)engagement and the need for citizenship education: Encouraging young people’s civic and political participation through the curriculum. Education, Citizenship, and Social Justice, 14(1), 3-21.

- Sherrod, L. R., Torney-Purta, J., & Flanagan, C. A. (Eds.). (2010). Handbook of research on civic engagement in youth. Hoboken, NJ: John Wiley & Sons Inc.

- Stolle, D., & Gidengil, E. (2010). What do women really know? A gendered analysis of varieties of political knowledge. Perspectives on Politics, 8(1), 93-109.

- Wolak, J., & McDevitt, M. (2011). The roots of the gender gap in political knowledge in adolescence. Political Behavior, 33, 505-533.