Optimizing Advance Letters: Findings From a Cognitive Pretest

Martin, S., Zabal, A., Kapidzic, S. & Lenzner, T. (2022). Optimizing Advance Letters: Findings From a Cognitive Pretest. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=16480

© the authors 2022. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

In many surveys, advance letters are the first point of contact with target persons. The letters have multiple objectives, such as informing about the survey request, gaining trust, and evoking participation. Numerous previous quantitative and experimental studies have varied the content and layout of advance letters to examine effects on outcome rates and nonresponse bias. In contrast, studies on advance letters using qualitative approaches are rather scarce, although they can provide more information on how respondents process advance letters and perceive certain aspects of advance letters. The present study implemented a cognitive pretest in which 20 participants evaluated three advance letters for the field study of a large-scale assessment in Germany. Key findings showed: Text length and structure are central elements that affect whether the advance letter is read; sponsor information, reference to the source of target persons’ addresses, and information about interviewers seem to convey legitimacy and trust. The results of this qualitative study point to recommendations in the design of respondent-oriented advance letters that may be of interest to other survey programs, especially in the face-to-face setting.

Keywords

advance letter, cognitive pretest, cooperation, face-to-face survey, PIAAC

Acknowledgement

Special thanks go to several colleagues: Jens Bender and Natascha Massing from the German PIAAC team for feedback on content and layout of the advance letters; Patricia Hadler and Cornelia Neuert from the pretest laboratory for the implementation of the cognitive pretest; Friederike Quint, Niklas Reisepatt, Patricia Steins, and Gina-Maria Unger for conducting the cognitive interviews. The PIAAC survey in Germany is funded by the Federal Ministry of Education and Research with the participation of the Federal Ministry of Labor and Social Affairs.

Introduction

Interviewer-administered population surveys worldwide suffer from declining response rates (e.g., Bethlehem et al., 2011; Beullens et al., 2018; Kreuter, 2013). Despite this development, large-scale cross-country surveys, such as the European Social Survey or the Programme for the International Assessment of Adult Competencies (PIAAC), aim for high response rates to maximize the quality of the data (OECD, 2014; Stoop et al., 2018). Survey practitioners need to employ sophisticated fieldwork strategies and invest a great deal of effort in gaining cooperation and counteracting increasing survey fatigue and lack of interest. Theories of survey participation posit that persons are differentially motivated to respond to a survey request (cf. social exchange theory, leverage-saliency theory: Dillman et al., 2009; Groves et al., 2000). The tailored design method builds on the theory of social exchange. It combines several measures to establish trust and encourage the cooperation of different target persons (Dillman et al., 2009). Advance letters are one element in this strategy.[1]

Crafting advance letters is a challenging task. There is no one size that fits all: What convinces one person may alienate another. As the first point of contact with the target person, a central objective of the advance letter is to make an excellent first impression. At the very least, the advance letter should not deter persons from participating in the survey. However, the value of advance letters cannot be weighed solely by their role in increasing response rates. In terms of survey quality, the crux is whether and how the advance letter affects differential nonresponse and whether the advance letter increases participation among those least likely to cooperate without the advance letter. Thus, the advance letter should be well constructed, and both the content and tone carefully chosen. According to Dillman et al. (2009), advance letters should provide certain critical pieces of information concisely and engagingly, inform target persons about the survey request, convey trust, and emphasize the credibility of the survey.

Many previous studies on cover or advance letters took place in the context of mail surveys. In a mail survey, the cover letter is the most important document besides the questionnaire. It is the only instrument of persuasion to gain respondent cooperation; at the same time, it provides instructions on the survey tasks. Research in the context of interviewer-administered surveys has primarily focused on telephone rather than face-to-face surveys (see, e.g., the meta-analysis by de Leeuw et al., 2007). In face-to-face surveys, advance letters are a supportive tool to aid interviewers in their recruitment efforts, i.e., they pave the way for a positive doorstep interaction between interviewer and target person.

Previous research on cover and advance letters generally focused on identifying essential features of advance letters, preferably those that appeal to all sample persons (Lynn, 2016). Most studies were quantitative and investigated specific details of advance letters based on survey practitioners’ assumptions about the relevant dimensions, without exploring whether these are indeed considered relevant by respondents. While quantitative approaches can provide valuable results on whether specific interventions (e.g., including a sponsor logo) affect participation, they do not offer further insight into the differential effects of the interventions and how these are perceived by participants: Why do they work or not work, or why do they only sometimes work? Qualitative approaches are well suited to obtain as much information as possible about how people evaluate different aspects of the advance letter, what they find important, or what they misunderstand. Vogl et al. (2019) recently pointed out the need for more qualitative research on this topic, as only a few studies focus on the respondents’ perspectives of advance letters (e.g., Landreth, 2001; Landreth, 2004; White et al., 1998).

The present study focuses on the respondent’s perspective and aims to gain insights into how they perceive and react to the content and design of advance letters in the context of a face-to-face survey. A cognitive pretest was conducted with 20 test persons, who provided feedback on three different advance letters, which varied in design and content. The focus was to investigate how design features and components of advance letters may motivate participation or trigger concerns and hamper cooperation. The study contributes to the body of knowledge on respondent-oriented advance letters and may be of interest to practitioners of other face-to-face surveys.

Function and Design of Advance Letters

Advance letters are usually the first contact with target persons, irrespective of whether they are cover/invitation letters enclosed with a paper questionnaire in mail surveys or pre-notification letters in telephone/face-to-face surveys. The letters have multiple objectives (de Leeuw et al., 2007; Dillman et al., 2009; Lynn, 2016): They should briefly inform target persons about the survey request, motivate participation, and – if applicable – announce the interviewer’s visit/call. The structure, wording, and style should enable a heterogeneous group of recipients to easily extract essential information, such as why they are being contacted or what the survey is about. Beyond the informational function, the letter should also convey the legitimacy of the survey. Personalized advance letters aim to establish trust and create a positive impression conducive to participation.

Survey practitioners should craft informative, respondent-oriented advance letters. However, measuring the direct (positive) effect of advance letters on participation is difficult because they are only one of several measures in the toolbox. Other measures at the respondent level include, for example, incentives and additional contact material such as brochures and flyers. Measures at the interviewer level (e.g., careful selection and training of interviewers, attractive remuneration) and fieldwork activities (e.g., elaborated contact and follow-up strategies) also impact participation.

Comparing the manifold research findings on advance letters is not straightforward because studies differ concerning modes, sampling, content, layout, and survey contexts (e.g., experimental designs, selective samples). Many findings come from mail (e.g., Dillman et al., 2001; Finch & Thorelli, 1989; Gendall, 1994; Redline et al., 2004) or telephone surveys (e.g., de Leeuw et al., 2007; Goldstein & Jennings, 2002; Link & Mokdad, 2005; Vogl, 2019). With an increase in online surveys, some research has shifted its focus to this mode (e.g., Fazekas et al., 2014; Heerwegh & Loosveldt, 2006; Kaplowitz et al., 2012). Studies of advance letters in face-to-face surveys, however, are scarce (e.g., Lynn et al., 1998; Sztabiński, 2011).

Most studies on advance letters aimed to test the effects of advance letters on outcome rates and nonresponse bias and investigated the cost-effectiveness of mailings (Vogl et al., 2019). Different aspects of letters were varied, contrasting specific dimensions (e.g., Dillman et al., 2001; Fazekas et al., 2014; Finch & Thorelli, 1989; Gendall, 1994; Luiten, 2011; Redline et al., 2004), for example: appeal (altruistic, egoistic, help-the-sponsor), language (complex, simple), tone (formal, informal), visual design (graphics, logos), personalization. Overall, the findings on outcome rates and recommendations on the design and content of advance letters are inconclusive. Due to the heterogeneity of their design features, results often differ from study to study (e.g., Billiet et al., 2007; de Leeuw et al., 2007; Gendall, 1994; Lynn et al., 1998; von der Lippe et al., 2011).

Some studies used an experimental design to either compare letters with different design elements or examine whether sending a letter had an effect compared to not sending a letter at all (e.g., Finch & Thorelli, 1989; Heerwegh & Loosveldt, 2006; Luiten, 2011; Lynn et al., 1998; Redline et al., 2004). Other studies occurred in the context of consumer or marketing surveys (e.g., Albaum & Strandskov, 1989; Childers et al., 1980; Greer & Lohtia, 1994) or relied on small or selective samples, e.g., health practitioners or students (e.g., Kaplowitz et al., 2012; Leece et al., 2006), rather than the general population.

Although the number of studies on advance letters emphasizes that advance letters are a central component of the survey design, it appears that, overall, their positive effect on response rates is often only modest. Furthermore, the studies focus on key aspects of content and design of advance letters that seem to primarily reflect researchers’ assumptions of relevance, but not necessarily the respondent’s perspective. Findings from qualitative studies on respondents’ perspectives are scarce. Landreth (2004) and White et al. (1998) evaluated advance letters with cognitive interviews to identify details that are important to respondents. According to the authors, advance letters should mention the survey’s purpose and the survey organization, provide details about the interviewer’s visit, explain that the selected person represents thousands of persons, fulfill the recipients’ information needs, and convey content in an accessible form. Content should be limited because recipients do not capture every detail but often skim or skip text passages. Qualitative approaches make it possible to identify shortcomings in advance letters and explore whether the presented information is correctly captured or misunderstood by recipients. They can be instrumental in providing recommendations for designing targeted, respondent-oriented advance letters.

Present Study

The present study evaluated the content and design features of three advance letters in the context of the field test of PIAAC Cycle 2 in Germany. For this purpose, a cognitive pretest examined participants’ evaluation of these features. Three drafts were crafted based on in-house experience and a review of advance letters used in large-scale surveys in Germany. The study aimed at identifying central components of advance letters that make them informative, appealing, and motivating for a heterogeneous group of persons. Another objective was to determine whether information was missing, not noticed, or misunderstood. Besides the overall impression, components that alleviate or trigger concerns, foster participation, and convey trust and legitimacy were examined.

PIAAC is a multi-cycle cross-sectional survey initiated by the Organisation for Economic Co-operation and Development, assessing cognitive skills of adult populations (ages 16-65) in over 30 countries. The cognitive pretest was part of the preparatory work for the data collection of the PIAAC Cycle 2 field test in Germany. A set of comprehensive measures was developed, including an advance mailing consisting of an advance letter, a flyer, and a data privacy sheet, to gain the cooperation of randomly selected target persons for face-to-face interviews. The flyer and data privacy sheet contain additional information but were not tested in the pretest to keep pretesting time in check and focus on the advance letter per se.

Variations of the Advance Letters

As the first point of contact with the target person, advance letters must convey specific survey-related content, and the inclusion of some elements is essential and non-negotiable (Stadtmüller et al., 2019). Although content-wise the scope for variation is limited, there are degrees of freedom with respect to layout/design, granularity, complexity, and positioning of information. In our case, the following information had to be included: survey name and purpose, responsible organizations, sample selection, data protection, voluntary participation, contact information, information about interviewers, incentives, and survey-specific content.

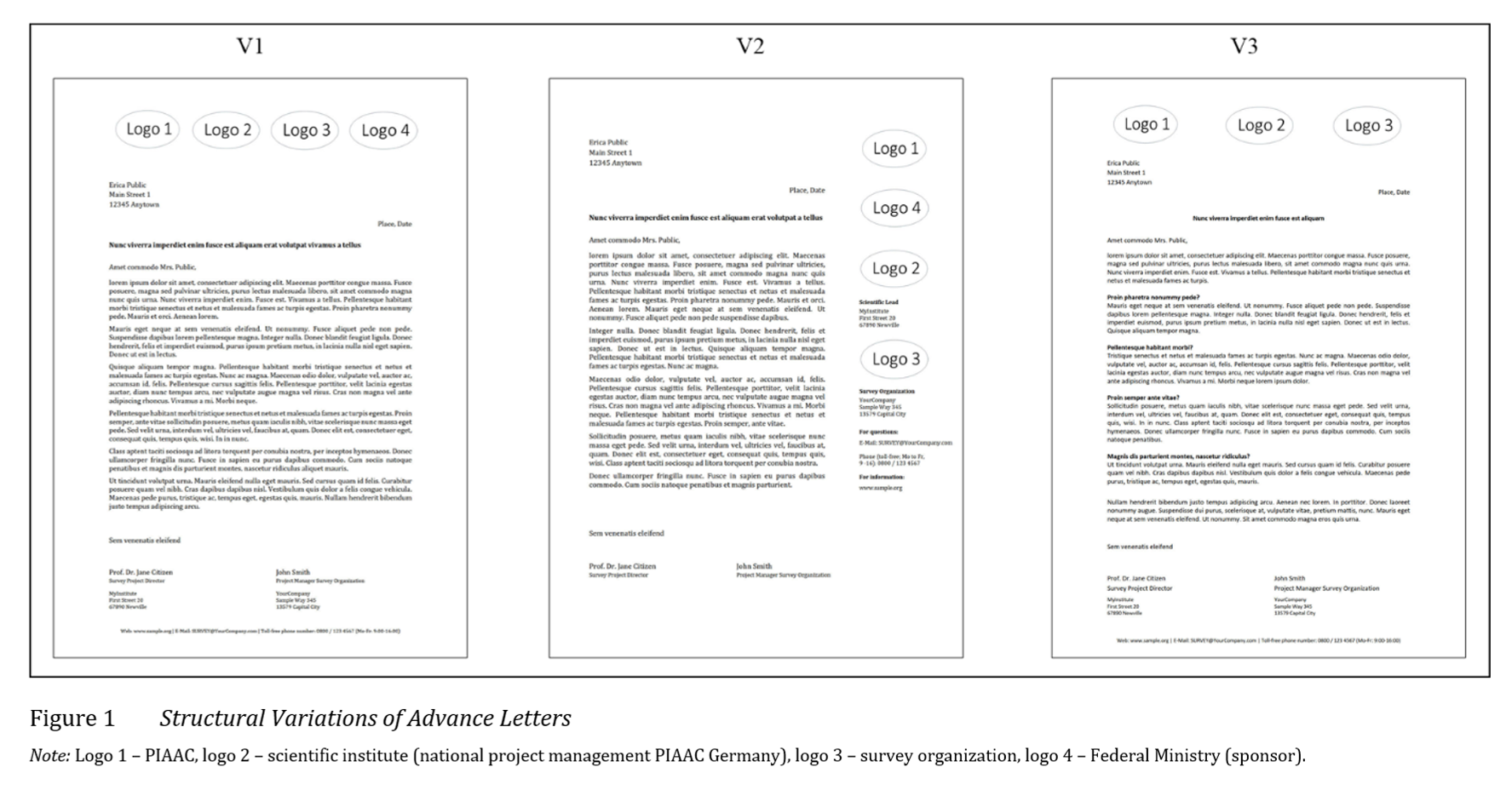

Three advance letters (V1, V2, V3) were developed using variations of several formal and content-related components. Formal aspects of the advance letter are scope, language/tone, and layout. Each letter was limited to a single page (scope). The tone was professional, and the language was easy to understand and grammatically correct. In terms of layout, the structure, positioning of logos, font types, font sizes, and contact information were varied. Figure 1 shows the general arrangement of texts and logos in each letter.

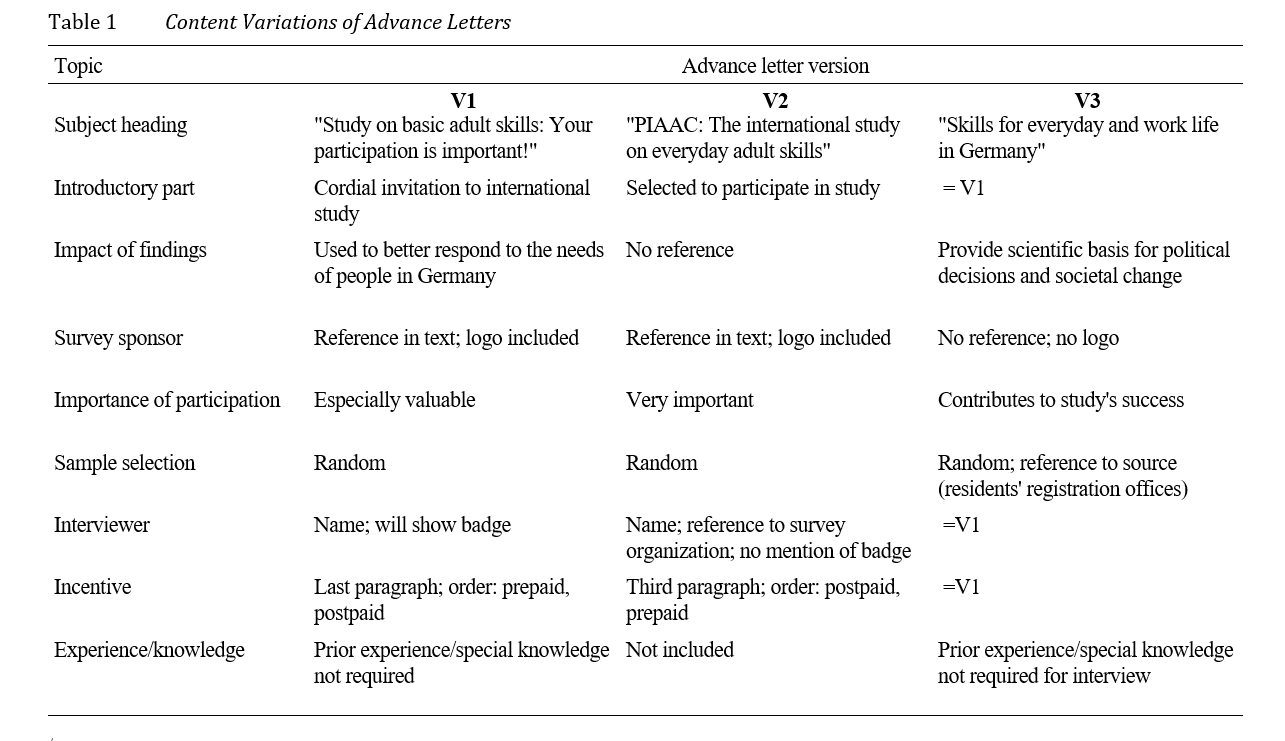

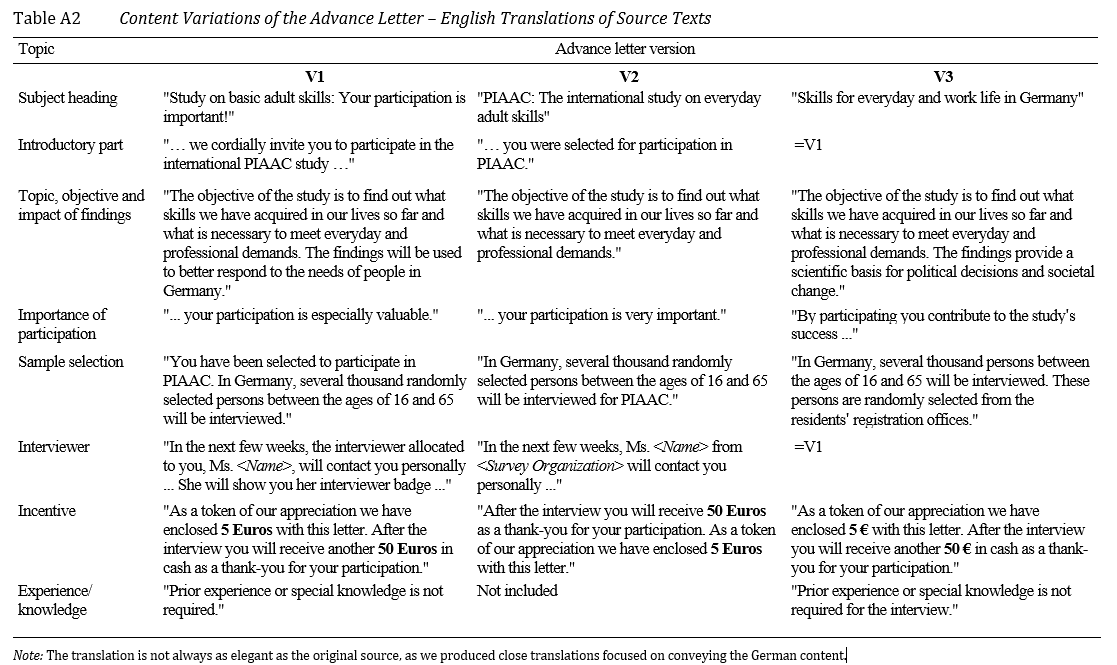

Table 1 summarizes content-related variations in the advance letters.[2] To assess whether or under which conditions persons notice the subject heading, different alignments and texts were used, only one of which includes the survey acronym PIAAC (V2). The introductory part was varied in the way the target person was approached and informed of their selection. V2 was more formal in tone and merely informed target persons that they had been selected for participation. In contrast, V1 and V3 (both identical) used a warmer tone, cordially inviting recipients to participate in the study. While the wording of the study’s topic and objective was identical, its positioning in the advance letters was varied, and different texts on the use of the findings were implemented.

One central study goal was to determine whether an explicit reference to the survey sponsor (Federal Ministry) is advisable since mentioning the survey sponsor may have different effects. The reference may be perceived as positive because the survey gains legitimacy. However, some persons may also reject the survey request because they do not trust the government or fear surveillance. While V1/V2 contained the sponsor’s logo and alluded to it in the text, V3 did not mention it at all.

Textual variations on the importance of participation reflect different appeals: participation is “especially valuable,” “very important,” or “contributes to the study’s success.” Information on data protection and voluntary participation was identical in each letter. Because the PIAAC survey in Germany uses a registry-based sample, V3 clarified that addresses originated from residents’ registration offices. The other two letters provided no information on the source of the persons’ addresses.

Mentioning the survey organization and announcing the interviewer’s visit is standard protocol. Each letter included the interviewer’s name; V2 also repeated the survey organization’s name after the interviewer’s name. Letters V1 and V3 specified that interviewers would show their badges, which was added to investigate whether this information helps establish trust.

Beyond standard contents, the advance letters addressed two survey-specific aspects. First, the German PIAAC field test planned to offer prepaid and postpaid incentives to target persons. Incentives were mentioned at different places and in different orders to learn more about how best to impart this information. Second, two letters included a sentence indicating no prior experience or special knowledge is required for participation. Since PIAAC asks respondents to complete a cognitive assessment, this information may reduce possible concerns or fears.

Cognitive Pretest

In cognitive pretests, participants provide feedback on survey questions and survey-related material (questionnaires, advance letters). They answer questions on how they understood specific terms and how easy or difficult it was to process the material. Cognitive pretests are suitable for testing comprehensibility, identifying problems, and revealing their causes (Beatty & Willis, 2007; Lenzner et al., 2016).

In fall 2019, four specifically trained interviewers of the pretest laboratory staff at the authors’ institute conducted cognitive interviews with 20 test persons (Lenzner et al., 2019). Participants came to the laboratory for individual interview sessions and received a compensation of 30€. The interviews took approximately 60 minutes and were video recorded.

The participants were recruited by quota sampling from the institute’s respondent pool or by word of mouth. Special emphasis was put on selecting a heterogeneous group of persons belonging to the PIAAC target population to obtain a broad spectrum of feedback. Quotas were determined by sex (female/male), age groups (16-29/30-49/50-65 years), education (no university entrance degree/university entrance degree), and native language (German/non-German). Of the participants, 50% were female; 25% were 16-29, 35% were 30-49, and 40% were 50-65 years old. Fifty percent of the participants had a university entrance qualification. Three participants had a non-German native language but were fluent in German. None of the participants had difficulties reading the advance letters and answering probes.

Recruited participants understood that they had not been selected for the PIAAC study but should envision themselves as a potential target person that received an advance letter by mail. With this mindset, they knew, for example, that the advance letter did not include a prepaid incentive. The standardized interview followed a structured interview protocol. It included probes to obtain an idea about the participants’ general impression of the letters, their comprehension of key information, and their feedback on specific issues.

First, participants opened an envelope with the three advance letters. The order of the letters was systematically varied with three different sort sequences to avoid exposure effects on the preference for one of the letters. Participants briefly looked at each of the three letters. They were asked to indicate which one of the letters they would most likely read or discard if the letter was received in a real-life situation and were then invited to substantiate their decision. Second, the participants read the letter that appealed most to them and answered a series of pre-scripted probing questions (e.g., “Assuming you received this letter by mail, would you participate in the survey?”; “Suppose you have questions about the study. Would you know whom to contact for more information?”).

Third, the participants were instructed to read the other two letters and answer comparative probes about all three advance letters. The probing focused on which version they liked most and which one would have encouraged them to participate in the survey (e.g., “Which of the three advance letters do you find most comprehensible?”; “The advance letters differ in how they convey the importance of participation. Which wording would most likely motivate you to participate?”).
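
The systematic variation of letter order can be illustrated with a short sketch. The three sort sequences used in the pretest are not reported here, so the example assumes a simple cyclic rotation in which each letter appears once in each position. The function names `rotated_orders` and `assign_orders` are hypothetical and serve only as an illustration, not as a description of the procedure actually used.

```python
from collections import Counter

LETTERS = ["V1", "V2", "V3"]


def rotated_orders(items):
    """Return one cyclic rotation of `items` per starting position.

    Assumption: a rotation scheme (Latin square); the study's actual
    three sort sequences are not specified in the text.
    """
    return [items[i:] + items[:i] for i in range(len(items))]


def assign_orders(n_participants, orders):
    """Cycle through the sequences so each is used about equally often."""
    return [orders[i % len(orders)] for i in range(n_participants)]


orders = rotated_orders(LETTERS)
assignment = assign_orders(20, orders)

# Count how many participants receive each sequence.
usage = Counter(tuple(order) for order in assignment)
for order, count in usage.items():
    print(" > ".join(order), count)
```

With 20 participants and three sequences, an even split is not possible; under this assumed scheme, two sequences are used seven times and one six times.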

Results

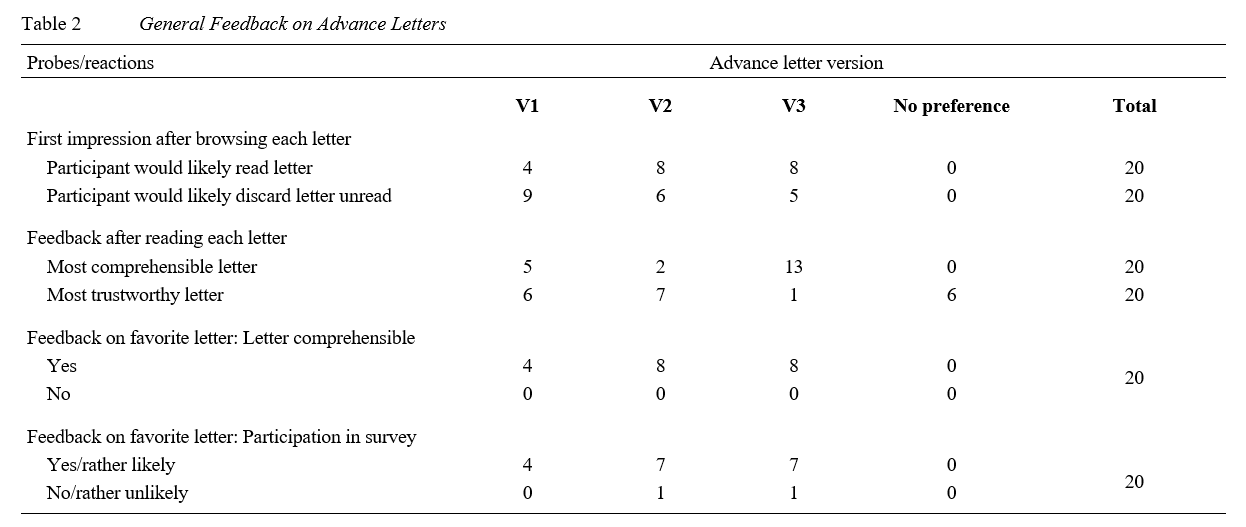

The presentation of the study’s main findings follows the structure and order of topics given in the section on variations of the advance letters (cf. Table 1). Table 2 shows participants’ initial general feedback on the advance letters regarding structure, comprehensibility, and layout.

Structure, Comprehensibility, and Layout. Participants had to choose the most appealing letter without reading the letters in detail. Overall, they preferred the format of V2 (standard letter, narrow text block) and V3 (letter with subheadings) over V1 (standard letter). The apparent length and lack of structure of V1 (especially in comparison to V3) were the reasons participants gave for being unlikely to read the letter. However, six participants regarded V1 as the most trustworthy due to its standard format. The unusual format of letter V2, including logos on the right-hand side of the page, and the seemingly short text were evaluated positively. Participants found the subheadings used in V3 appealing as they provided the text with structure and made it easy to skim. Probing revealed that most participants found V3 the easiest to comprehend, most likely due to the structure and subheadings in question form. However, V3 was also rated the least trustworthy, primarily due to the format (subheadings) and lack of the study sponsor logo.

After reading all the advance letters, participants had to choose their favorite letter. Eight participants favored V2, eight V3, and four V1. Participants always indicated that the letter of their choice was easy to understand. They were asked how likely their participation in the survey would be had they received this letter by mail. Irrespective of the favored letter, more than 87% of the participants indicated they were sure or most likely to participate in the survey.

Participants seemed to prefer a serif font (V1, V2) in a larger size (V2). Regarding the placement of logos, most participants expressed their preference for the vertical logo design (V2) because it looked more modern and allowed for the contact information to be presented directly below the logos. Almost all participants noticed the contact information; 75% mentioned that it was adequate. However, some would have liked to see the interviewer’s contact information to allow them to approach the interviewer for more details about the interview process.

Subject Heading. Almost all participants expressed a preference for a left-aligned subject heading (V1, V2), saying it looked neater and resembled a typical letter. Different subject headings were tested. Eleven participants preferred the V1 wording (“Study on basic adult skills: Your participation is important!”). One reason for this is that it highlights the importance of participation. However, a few participants did not like this reference, for example, because they felt it sounded like an advertisement or even a bit desperate. Eight participants preferred the subject heading in V2 (“PIAAC: The international study on everyday adult skills”), mainly due to the reference to the international nature of the study, which was described as important. Thirteen participants found the heading in V3 the least appealing, describing it as too vague (“Skills for everyday and work life in Germany”).

Introductory Part. The introductory part of the letters presented the survey and its objective. Two alternative introductory sentences were tested. Most participants preferred the cordial invitation in V1/V3 (“we cordially invite you to participate in the international PIAAC study”), stating it was more polite, friendly, and less demanding. The wording in V2 was associated with unwanted advertising (“you were selected for participation in PIAAC”).

Topic, Objective, and Findings. The description of the study objective was identical in all three letters. An additional sentence about the use of the findings was added in V1 (“findings will be used to better respond to the needs of people in Germany”) and V3 (“findings provide a scientific basis for political decisions and societal change”). Three-quarters of participants preferred the V3 wording because it highlighted the relevance of participation and the possibility to have an impact through their participation. Fourteen participants correctly described the study’s objective; those who could not found the text too vague.

Survey Sponsor. V1/V2 mentioned the survey sponsor and included its logo; V3 did not include any reference to the sponsor. All participants except one reacted positively to survey sponsor information and logo, stating it contributed to the study’s trustworthiness.

Importance of Participation. Only twelve participants noticed the information on the importance of participation. The position of this information in the letter seems irrelevant. The text was varied to express different appeals. Twelve participants preferred the V1 wording (“especially valuable”), eight the V3 wording (“contribute to the study’s success”), and none the V2 wording (“very important”). Participants reported that the V1 wording conveyed appreciation, whereas the V3 wording highlighted the reason why participation was important.

Sample Selection. The sample selection description was identical in V1/V2 and explained that survey participants are randomly selected; V3 also mentioned residents’ registration offices as the source of persons’ addresses. Most participants expressed a preference for V3. Mentioning the registration office seemed to increase the perceived trustworthiness of the survey.

Interviewer Announcement. The interviewer’s visit was announced in different ways. Fifteen participants preferred V2 (“Ms. <Name> from <Survey Organization> will contact you personally”) due to the direct link between the interviewer and the survey organization. Most participants found the reference to the interviewer badge in V1/V3 positive (“She will show you her interviewer badge”), as it evoked trust and emphasized the trustworthiness of the survey. Although almost all participants noticed the information about an interviewer visit, follow-up probes revealed that none of the participants understood the interviewer would visit them at their homes to establish the first contact. All participants believed they would be contacted by telephone or e-mail, with some pointing to the lack of information about contact procedures. A few participants thought an in-person visit would be intrusive.

Incentive. The advance letters provided information on pre- and postpaid incentives in different ways, varying content, wording, and text placement. Sixteen participants noticed and correctly captured the incentive information, and all participants liked the postpaid incentive amount (50€). However, 75% of the participants were bewildered by the prepaid cash incentive (5€). Fourteen participants thought it was better to position incentive information at the end of the advance letter, as in V1/V3, preferably in a separate paragraph, to make the information more prominent. Thirteen participants found it important to mention that the incentive would be paid in cash because this alleviates concerns about having to provide bank account information. No clear preferences could be observed about the order in which prepaid and postpaid incentives are referred to.

Experience/Knowledge. The cognitive interviews explored whether it is helpful to specify that no prior experience or special knowledge is required for the interview. Many participants found the reference important, stating that it alleviated fears of not knowing how to respond or embarrassing oneself during the interview. Of these participants, eleven preferred the more specific V3 formulation with an explicit reference to the interview.

Finally, participants were asked to report whether they thought relevant information was missing from the advance letters. Participants stated that they would have preferred to see more information on the interview length, the nature of the first contact, the interview location, and whom to contact for further information.

Discussion and Conclusion

Sending advance letters is established best practice in high-quality face-to-face surveys (Lynn et al., 1998; Stadtmüller et al., 2019). Because of substantial variations and differences between surveys, experiences and findings from previous surveys and research need to be thoughtfully adapted to each specific new survey context. However, what happens after sending the letters remains a black box, as there is no direct feedback on the reception of the letter. Even if the target persons do read the advance letter, which may not always be the case, it is unclear how much they read, what they understand, and which effect the letter has on their potential cooperation. The cognitive interviews presented here shed some light on this lesser-known terrain.

The present study compared three advance letters. These letters were worded and constructed with care. All three letters had the potential to be sent out as they were. However, the question is always whether letters drafted by academics – albeit experienced survey practitioners – are appropriate for average target persons, people from all walks of life.

The pretesting results show several clear takeaways. The first impression of the letter, and the subsequent inclination to read the letter, are impacted most by the length and structure of the text and by the logos. In a nutshell: The shorter the text, the better. Although a standard letter format is preferred, the text needs to be well-structured, and subheadings seem to be useful for processing information. They make it easier to find specific information and improve comprehension. Including the logo of the sponsor, the Federal Ministry, was perceived positively by almost all participants, as it lends legitimacy to the letter and the survey request and emphasizes the importance of the survey. Interestingly, none of the participants expressed any concern about “the government”. Of course, this particular pool of participants has a generally positive attitude to survey participation and may have been somewhat biased.

In terms of content and arguments, the cognitive pretest confirmed that participants appreciated being told that their addresses were obtained from the residents’ registration offices. Mentioning that interviewers would show their badge was also found to be important. These two elements, together with the sponsor information and logo, seem to be key in conveying the legitimacy and trustworthiness of the survey. Participants found it important for the letter to mention that “no prior experience or special knowledge is required for the interview.” While this is especially important for large-scale assessments, it might also be relevant for other surveys since it seems to allay possible vague fears and insecurities about the interview. A convincing argument supporting participation is that the scientific results of the survey will be used to inform political decisions. Another crucial motivation is the considerable conditional incentive. Specifying that it would be paid in cash was found to be reassuring and evoked positive reactions. The at times somewhat unfavorable reactions to the prepaid incentives were rather surprising, since various surveys have found they have positive effects on response rates (e.g., Blohm & Koch, 2021; Singer et al., 1999). It is possible there may be a dissociation between conscious spontaneous reactions to the prepaid incentive versus its overall effect on participation.

The findings from the cognitive interviews were used to re-design and finalize the advance letter for the German PIAAC field study. Due to certain constraints and some contradictions inherent in the recommendations, not all proposed changes derived from these results could be taken on board simultaneously in the final version of the advance letter. For example, it was not possible to combine a structured letter format, logos on the right-hand side, and a larger font while still fitting everything on one page. Thus, the final reconciled advance letter version combined what were thought to be the essential aspects. The final letter profited from the valuable pointers on content elements to include or avoid and from the wording recommendations.

Some participants would have liked additional information that was not included in the letter, such as information on interview length. This information was laid out in the flyer enclosed with the advance letter and the privacy statement. A conscious decision was made not to include this additional material in the cognitive interview, in order to avoid overloading the pretest and to focus only on the advance letter as the central element of the advance mail. It would be interesting to expand on research by Chan and Pan (2011) and further explore how advance mail in its entirety is perceived and processed by target persons.

In our view, some recommendations derived from this cognitive pretest, for example with respect to brevity and layout, may also be helpful for other surveys. For large-scale assessments, paying special attention to the reading load, the complexity of wording, and the density of the advance letter is particularly important: It is essential for the advance letter to be suitable for target persons with limited literacy skills. At the same time, over-simplifying the letter would mean leaving out important information. Furthermore, language and style need to remain professional to convey the status and legitimacy of the survey.

Due to the small sample size, interpretation and generalization of our findings are limited. For example, it was not possible to investigate differences between persons with different characteristics. Future studies with sample sizes and compositions that make it possible to analyse how elements of advance letters affect different persons differentially would be greatly beneficial. Interviewer focus groups could also complement cognitive pretests (White et al., 1998). Experimental designs are attractive from a survey methodological point of view. However, it is to be expected that the magnitude of the effect of advance letters will be small, thus requiring large sample sizes (Lynn et al., 1998).
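
To illustrate why small effects call for large samples, the following minimal sketch applies the standard normal-approximation formula for comparing two proportions. It is not part of the study: the function name `n_per_group`, the 5% significance level, the 80% power, and the response rates in the usage lines are all hypothetical values chosen purely for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80):
    """Approximate sample size per experimental group needed to detect a
    difference between two response rates p1 and p2 (two-sided test,
    normal approximation, equal group sizes).

    All default values are illustrative assumptions, not study parameters.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2                          # pooled proportion under H0
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p1 - p2) ** 2)


# Hypothetical response rates: a 2-point versus a 10-point difference.
small_effect = n_per_group(0.50, 0.52)
large_effect = n_per_group(0.50, 0.60)
print(small_effect, large_effect)
```

Under these assumptions, detecting a two-percentage-point difference requires a far larger sample per group than detecting a ten-point difference, which is consistent with the expectation that experimental studies of advance letters need large samples.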

To sum up, a qualitative approach was chosen to explore the strengths and weaknesses of the different design variants, contents, and wordings. Cognitive pretesting proved to be a useful tool to support survey practitioners in optimizing their advance letter design. Some of the specific recommendations derived from the cognitive interviews may be of interest to other face-to-face surveys and may be partly applicable to letters in the context of other survey modes.

Appendix

[1] Advance letter is the general term used in this study and subsumes cover/pre-notification/survey letter.

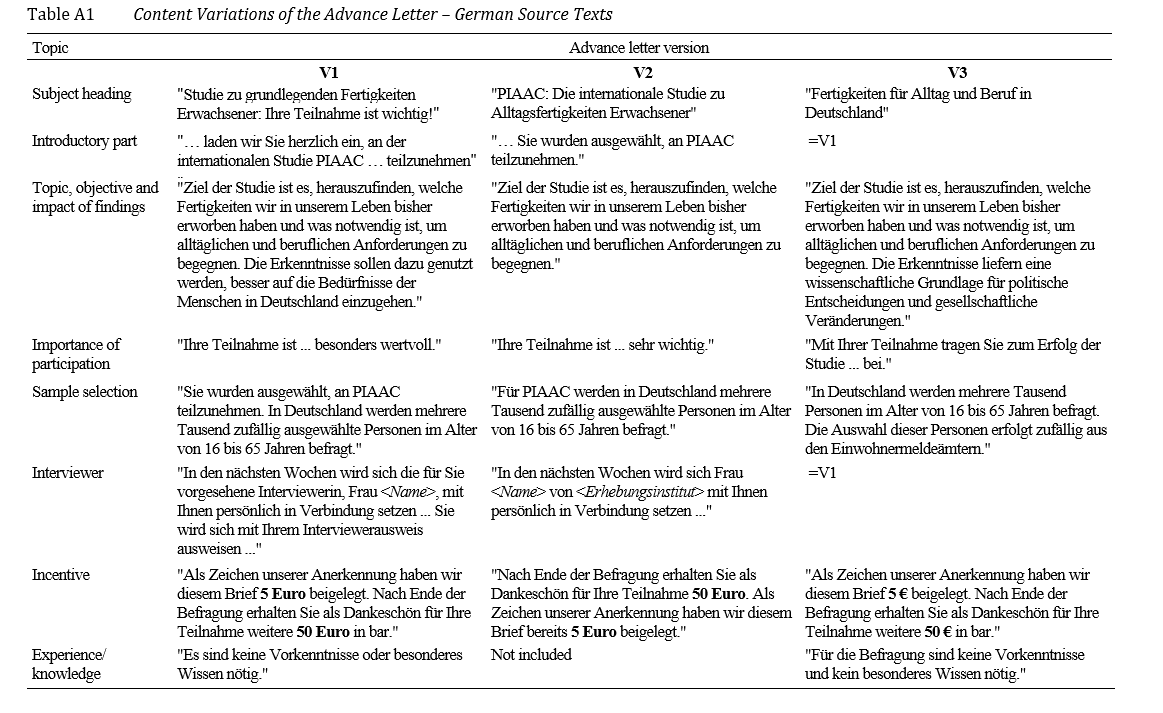

[2] Tables A1 and A2 in the appendix show German source texts and translations from German into English.

References

- Albaum, G., & Strandskov, J. (1989). Participation in a mail survey of international marketers. Journal of Global Marketing, 2(4), 7-24. https://doi.org/10.1300/J042v02n04_02

- Beatty, P. C., & Willis, G. B. (2007). Research synthesis: The practice of cognitive interviewing. Public Opinion Quarterly, 71(2), 287-311. https://doi.org/10.1093/poq/nfm006

- Bethlehem, J. G., Cobben, F., & Schouten, B. (2011). Handbook of nonresponse in household surveys. John Wiley & Sons.

- Beullens, K., Loosveldt, G., Vandenplas, C., & Stoop, I. A. L. (2018). Response rates in the European Social Survey: Increasing, decreasing, or a matter of fieldwork efforts? Survey Methods: Insights from the Field. https://doi.org/10.13094/SMIF-2018-00003

- Billiet, J., Koch, A., & Philippens, M. (2007). Understanding and improving response rates. In R. Jowell, C. Roberts, R. Fitzgerald, & E. Gillian (Eds.), Measuring attitudes cross-nationally: Lessons from the European Social Survey (Ch. 6, pp. 113-137). SAGE Publications.

- Blohm, M., & Koch, A. (2021). Monetary incentives in large-scale face-to-face surveys: Evidence from a series of experiments. International Journal of Public Opinion Research. https://doi.org/10.1093/ijpor/edab007

- Chan, A. Y., & Pan, Y. (2011). The use of cognitive interviewing to explore the effectiveness of advance supplemental materials among five language groups. Field Methods, 23(4), 342-361. https://doi.org/10.1177/1525822X11414836

- Childers, T. L., Pride, W. M., & Ferrell, O. C. (1980). A reassessment of the effects of appeals on response to mail surveys. Journal of Marketing Research, 17, 365-370. https://doi.org/10.1177/002224378001700310

- de Leeuw, E. D., Callegaro, M., Hox, J. J., Korendijk, E., & Lensvelt-Mulders, G. (2007). The influence of advance letters on response in telephone surveys: A meta-analysis. Public Opinion Quarterly, 71(3), 413-443. https://doi.org/10.1093/poq/nfm014

- Dillman, D. A., Lesser, V., Mason, B., Carlson, J., Willits, F., Robertson, R., & Burke, B. (2001). Personalization of mail surveys on general public and other populations: Results from nine experiments. JSM Proceedings. Survey Research Methods Section, American Statistical Association. http://www.asasrms.org/Proceedings/y2001/Proceed/00446.pdf

- Dillman, D. A., Smyth, J. D., & Christian, L. M. (2009). Internet, mail, and mixed-mode surveys: The tailored design method (3rd ed.). Wiley.

- Fazekas, Z., Wall, M. T., & Krouwel, A. (2014). Is it what you say, or how you say it? An experimental analysis of the effects of invitation wording for online panel surveys. International Journal of Public Opinion Research, 26(2), 235-244. https://doi.org/10.1093/ijpor/edt022

- Finch, J. E., & Thorelli, I. (1989). The effects of cover letter format and appeal on mail survey response patterns. Journal of Applied Business Research (JABR), 5(4), 63-71. https://doi.org/10.19030/jabr.v5i4.6336

- Gendall, P. (1994). Which letter worked best? Marketing Bulletin, 5(2), 53-63.

- Goldstein, K. M., & Jennings, M. K. (2002). The effect of advance letters on cooperation in a list sample telephone survey. Public Opinion Quarterly, 66(4), 608-617. https://doi.org/10.1086/343756

- Greer, T. V., & Lohtia, R. (1994). Effects of source and paper color on response rates in mail surveys. Industrial Marketing Management, 23(1), 47-54. https://doi.org/10.1016/0019-8501(94)90026-4

- Groves, R. M., Singer, E., & Corning, A. D. (2000). Leverage-saliency theory of survey participation. Public Opinion Quarterly, 64(3), 299-308. https://doi.org/10.1086/317990

- Heerwegh, D., & Loosveldt, G. (2006). An experimental study on the effects of personalization, survey length statements, progress indicators, and survey sponsor logos in web surveys. Journal of Official Statistics, 22(2), 191-210.

- Kaplowitz, M. D., Lupi, F., Couper, M. P., & Thorp, L. (2012). The effect of invitation design on web survey response rate. Social Science Computer Review, 30(3), 339-349. https://doi.org/10.1177/0894439311419084

- Kreuter, F. (2013). Facing the nonresponse challenge. The ANNALS of the American Academy of Political and Social Science, 645(1), 23-35. https://doi.org/10.1177/0002716212456815

- Landreth, A. (2001). SIPP advance letter research: Cognitive interview results, implications, & letter recommendations. Center for Survey Methods Research – U.S. Census Bureau. https://www.census.gov/content/dam/Census/library/working-papers/2001/adrm/sm2001-01.pdf

- Landreth, A. (2004). Survey letters: A respondent’s perspective. JSM Proceedings (pp. 4810-4817). Survey Research Methods Section, American Statistical Association. http://www.asasrms.org/Proceedings/y2004/files/Jsm2004-000240.pdf

- Leece, P., Bhandari, M., Sprague, S., Swiontkowski, M. F., Schemitsch, E. H., & Tornetta, P. (2006). Does flattery work? A comparison of 2 different cover letters for an international survey of orthopedic surgeons. Canadian Journal of Surgery, 49(2), 90-95.

- Lenzner, T., Hadler, P., Neuert, C., Unger, G.-M., Steins, P., Quint, F., & Reisepatt, N. (2019). PIAAC Cycle 2 – Anschreiben Feldtest. Kognitiver Pretest (GESIS Projektbericht, Version 1.0). GESIS – Pretestlabor. http://doi.org/10.17173/pretest77

- Lenzner, T., Neuert, C., & Otto, W. (2016). Cognitive pretesting (GESIS Survey Guidelines). GESIS – Leibniz Institute for the Social Sciences. https://doi.org/10.15465/gesis-sg_en_010

- Link, M. W., & Mokdad, A. (2005). Advance letters as a means of improving respondent cooperation in random digit dial studies: A multistate experiment. Public Opinion Quarterly, 69(4), 572-587. https://doi.org/10.1093/poq/nfi055

- Luiten, A. (2011). Personalisation in advance letters does not always increase response rates: Demographic correlates in a large scale experiment. Survey Research Methods, 5(1), 11-20. https://doi.org/10.18148/srm/2011.v5i1.3961

- Lynn, P. (2016). Targeted appeals for participation in letters to panel survey members. Public Opinion Quarterly, 80(3), 771-782. https://doi.org/10.1093/poq/nfw024

- Lynn, P., Turner, R., & Smith, P. (1998). Assessing the effects of an advance letter for a personal interview survey. International Journal of Market Research, 40(3), 1-6. https://doi.org/10.1177/147078539804000306

- OECD. (2014). PIAAC Technical standards and guidelines (PIAAC-NPM(2014_06)PIAAC_Technical_Standards_and_Guidelines). OECD. http://www.oecd.org/skills/piaac/PIAAC-NPM(2014_06)PIAAC_Technical_Standards_and_Guidelines.pdf

- Redline, C., Oliver, J., & Fecso, R. (2004). The effect of cover letter appeals and visual design on response rates in a government mail survey. JSM Proceedings (pp. 4873-4880). Survey Research Methods Section, American Statistical Association. http://www.asasrms.org/Proceedings/y2004/files/Jsm2004-000330.pdf

- Singer, E., van Hoewyk, J., Gebler, N., Raghunathan, T., & McGonagle, K. A. (1999). The effect of incentives on response rates in interviewer-mediated surveys. Journal of Official Statistics, 15(2), 217-230.

- Stadtmüller, S., Martin, S., & Zabal, A. (2019). Das Zielpersonen-Anschreiben in sozialwissenschaftlichen Befragungen (GESIS Survey Guidelines). GESIS – Leibniz Institute for the Social Sciences. https://doi.org/10.15465/gesis-sg_029

- Stoop, I., Koch, A., Loosveldt, G., & Kappelhof, J. (2018). Field procedures in the European Social Survey round 9: Enhancing response rates and minimising nonresponse bias. ESS ERIC Headquarters. Retrieved October 9, 2020 from https://www.europeansocialsurvey.org/docs/round9/methods/ESS9_guidelines_enhancing_response_rates_minimising_nonresponse_bias.pdf

- Sztabiński, P. B. (2011). How to prepare an advance letter? The ESS experience in Poland. ASK: Research & Methods, 20(1), 107-148.

- Vogl, S. (2019). Advance letters in a telephone survey on domestic violence: Effect on unit nonresponse and reporting. International Journal of Public Opinion Research, 31(2), 243-265. https://doi.org/10.1093/ijpor/edy006

- Vogl, S., Parsons, J. A., Owens, L. K., & Lavrakas, P. J. (2019). Experiments on the effects of advance letters in surveys. In P. J. Lavrakas, M. W. Traugott, C. Kennedy, A. L. Holbrook, E. D. de Leeuw, & B. T. West (Eds.), Experimental methods in survey research: Techniques that combine random sampling with random assignment (pp. 89-110). John Wiley & Sons.

- von der Lippe, E., Schmich, P., & Lange, C. (2011). Advance letters as a way of reducing non-response in a national health telephone survey: Differences between listed and unlisted numbers. Survey Research Methods, 5(3), 103-116. https://doi.org/10.18148/srm/2011.v5i3.4657

- White, A., Martin, J., Bennett, N., & Freeth, S. (1998). Improving advance letters for major government surveys. In A. Koch & R. Porst (Eds.), Nonresponse in survey research. Proceedings of the 8th International Workshop on Household Survey Nonresponse (pp. 151-171). Zentrum für Umfragen, Methoden und Analysen (ZUMA). https://www.ssoar.info/ssoar/handle/document/49720