Are you listening? Examining the level of multitasking and distractions and their impact on data quality in a telephone survey

Stefkovics, A. (2022). Are you listening? Examining the level of multitasking and distractions and their impact on data quality in a telephone survey. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=16622

© the authors 2022. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Earlier studies suggest that multitasking and distractions are common in telephone surveys. When respondents engage in secondary activities or are distracted, they may not be able to fully engage in the cognitive process of answering survey questions. As a result, multitasking may harm data quality. To assess the level of multitasking and distractions and their impact on data quality, I draw on self-reports from a telephone survey (N=1000) conducted in Hungary in 2021. The results show that the majority of respondents were multitasking, whereas only 7.5 percent reported being at least moderately distracted during the survey. Few factors predicted multitasking and distractions, while multitasking, distractions, and attention went hand in hand. Although multitasking was common, no strong negative effect of multitasking or distractions on data quality was found. The findings indicate that in relatively short telephone surveys with a low cognitive burden on respondents, multitasking may not be a cause for concern.

Keywords

data quality, distraction, multitasking, self-reports, telephone surveys

Introduction

In telephone surveys, researchers have less control over the response process than in face-to-face surveys. While respondents are less likely to engage in other activities in the presence of an interviewer, studies have shown that multitasking is common among telephone respondents (Aizpurua et al., 2018a; Aizpurua et al., 2018b; Heiden et al., 2017; Lavrakas et al., 2010; Park et al., 2020). The growing use of mobile phones in telephone surveys is expected to give respondents more room to engage in a survey flexibly, although previous reports show that landline respondents multitask as well. A related concern is distraction. Although distractions may also occur in face-to-face surveys, telephone respondents may answer questions in environments where distractions are simply more likely (e.g., while driving a car), and interviewers have less control over them.

Multitasking and distractions during survey completion may lead to poor data quality. A long line of studies has shown that multitasking has a negative effect on concentration, the ability to focus, and task performance (see, for instance, the meta-analysis of Clinton-Lisell, 2021, and the review of May & Elder, 2018). This is especially likely when the cognitive load is high and the two concurrent tasks require similar cognitive resources (Salvucci & Taatgen, 2011). These aspects can be important during a survey interview. Dividing one’s attention or being distracted may interfere with the response process, potentially causing problems in any of the four cognitive steps involved in answering survey questions (Tourangeau et al., 2000). In the first step, comprehension, respondents must understand the meaning of the question. In the second step, retrieval, they recall relevant information; in the third, judgment, they form an answer based on this information; and in the fourth, communication, they fit that answer to the required response format. Comprehension can be especially problematic in telephone surveys when respondents engage in other activities, are distracted, or are in a noisy environment, since they have to rely on aural cues only. Respondents experiencing a high cognitive load may satisfice (Krosnick, 1991) rather than optimize, for instance, by giving fewer substantive responses, failing to differentiate between response options, providing short answers to open questions, or spending less time on their answers.

Despite its potential negative impact on data quality, to date, the problem of multitasking has received little scientific attention. To address this gap, the aim of this study was to survey the extent of multitasking and distractions and assess their impact on data quality in telephone surveys.

Literature review

According to Threaded Cognition theory, the most widespread theory of multitasking, proposed by Salvucci & Taatgen (2011), multitasking occurs when the mind performs multiple tasks at once. Building on the ACT-R (Adaptive Control of Thought-Rational) cognitive architecture of Anderson (2008), they conceptualize multitasking behavior as a set of cognitive threads that sometimes conflict when performing tasks. They place multitasking on a continuum according to how often the mind switches between tasks. At one extreme is concurrent multitasking, where tasks are performed simultaneously. At the other extreme is sequential multitasking, where switches are less frequent and resuming a task after an interruption takes longer (minutes or even hours). The ACT-R architecture and other capacity theories (e.g., the Limited Capacity Model; Lang, 2000) suggest that human processing resources are limited. When multitasking, conflicts may emerge between similar activities performed simultaneously. In other words, certain combinations of activities may reach the limit of cognitive resources, while other types of multitasking may not. For instance, listening to radio news while answering survey questions is more likely to lead to processing conflicts than driving a car during the survey interview. The former may result in the interruption of one of the two activities, as the mental resources required by the two tasks are similar. Following Zwarun & Hall (2014), multitasking activities can be classified as environmental distractions (e.g., background music, traffic noise), non-media multitasking (e.g., using the bathroom), and media multitasking (e.g., using the media device for other activities). Environmental distractions are often concurrent with the primary activity, while non-media and media multitasking involve more intentional task switches.

Salvucci and Taatgen (2011) further highlight that task switches and interruptions are only disruptive when the task is difficult and the cognitive load is high. Cognitive capacity and working memory capacity, however, may depend on individual characteristics such as age. Research shows that multitasking performance may also depend on intelligence, polychronicity, familiarity with the task, and motivation (König et al., 2005; Wenz, 2019). Consequently, multitasking performance may vary between respondent groups. According to Kraushaar & Novak (2010), multitasking can even be productive when secondary tasks support the primary task (e.g., taking notes while watching a video).

How does this apply to telephone survey interviews? It seems plausible that during concurrent multitasking, where secondary activities require cognitive processing similar to that of answering a survey question, respondents may not be able to go through the four stages of the response process properly (Höhne et al., 2020; Kennedy, 2010; Tourangeau et al., 2000; Wenz, 2019). Any failure during the response process can push respondents to satisfice (Krosnick, 1991), that is, to settle for a satisfactory answer instead of an optimal one. Comprehension of the question can be especially problematic in telephone surveys. In self-administered modes, respondents have more opportunities, for instance, to re-read the questions, and even in face-to-face interviews respondents have more cues for understanding the questions, whereas telephone respondents depend on aural cues only. Mishearing or misunderstanding the question or the response options may introduce bias in later phases of the response process. Whether due to problems during comprehension or to a higher cognitive load, multitasking respondents may fail to recall the relevant information from memory, or recall it only partially, may be unable to integrate the retrieved information, or may fail to map it optimally onto the appropriate response option. Consequently, some respondents may not be able to provide a substantive response (higher item-nonresponse), or may seek another “easy way out”, for instance, by giving socially desirable or acquiescent answers, or by choosing a response option at random. The mapping phase is presumably particularly problematic in telephone surveys: when respondents rely on the interviewer’s oral presentation of the response options and the cognitive load is high, memory bias is likely to occur. This may lead to primacy or recency effects, choosing a typical (e.g., middle) response option, straightlining, etc.

A handful of previous studies reported high levels of multitasking in telephone surveys. Lavrakas et al. (2010) found that 51% of mobile phone respondents engaged in some form of multitasking. Similarly, the prevalence of respondent multitasking was between 45% and 55% in dual-frame telephone samples (Aizpurua et al., 2018a; Aizpurua et al., 2018b; Heiden et al., 2017; Park et al., 2020). Nevertheless, the vast majority of respondents tend to engage in only one secondary activity. Although the level of multitasking was found to be similar in mobile and landline interviews, the type of activity respondents engaged in tended to vary with the device used. As common sense suggests, landline respondents were far more likely to watch television during the interview, whereas driving or watching children were more common among mobile phone respondents (Aizpurua et al., 2018a; Aizpurua et al., 2018b). In the study of Aizpurua et al. (2018a), the prevalence of distractions was lower than the prevalence of multitasking, but based on the interviewers’ reports, one in four respondents was distracted in some way during the interview. Moreover, those engaging in multitasking were twice as likely to be distracted as those who were not. Online studies drawing on self-reports and paradata reported similar results: higher levels of distraction and lower levels of concentration and attention among multitaskers (Höhne & Schlosser, 2018; Wenz, 2019). Distractions were also more common among mobile phone respondents.

During telephone survey interviews, certain groups of respondents tended to multitask more often than others. Aizpurua et al. (2018b) found that older respondents, parents with children in the household, and individuals with lower levels of education were more likely to multitask. These findings were supported by Aizpurua et al. (2018a). However, in the latter study, younger respondents were less likely to multitask but more likely to be distracted. The findings related to age remain mixed, as some earlier studies using online samples and paradata documented higher multitasking rates among younger respondents (Ansolabehere & Schaffner, 2015; Höhne et al., 2020).

Previous studies provide inconsistent evidence on the effect of multitasking on data quality. In line with studies using online samples (Ansolabehere & Schaffner, 2015; Antoun et al., 2017), Aizpurua et al. (2018b) found no difference between multitaskers and non-multitaskers on a range of satisficing indicators in a telephone survey. In the study of Aizpurua et al. (2018a), multitaskers provided less accurate answers to a question requesting the definition of STEM education, but multitasking had no effect on other quality indicators, and completion time was likewise unrelated to multitasking. Lavrakas et al. (2010) reported higher item-nonresponse rates among multitaskers, but comparable answers to sensitive questions from multitaskers and non-multitaskers. In contrast, Park et al. (2020) found that multitasking correlated with an increase in socially undesirable responses and led to less accurate responses to knowledge questions. Kennedy (2010) found that respondents who were eating or drinking had more problems with comprehension. The only study investigating the effect of interviewer-observed distractions found no association between distractions and data quality (Aizpurua et al., 2018a).

On the one hand, previous results on respondent multitasking should come as good news: while multitasking is common among telephone respondents, it does not seem to substantially affect the quality of responses. On the other hand, some findings indicate that at least some parts of the response process (e.g., comprehension; Kennedy, 2010), some question types (e.g., sensitive questions and those imposing a high cognitive load; Aizpurua et al., 2018a; Kennedy, 2010; Park et al., 2020), and some respondent groups (e.g., older respondents; Aizpurua et al., 2018a, 2018b) may still be burdened by multitasking.

As research on multitasking in telephone surveys is scarce and previous findings are inconclusive, further research is needed. This study contributes to the existing literature in two ways. First, most previous studies were conducted in the U.S., and to the best of my knowledge, no study has investigated multitasking in telephone surveys in a European context, while cultural differences between survey climates may call the generalizability of previous findings into question. Second, this study extends the measures used in earlier studies with self-reports of distractions.

Data and methods

Data

The data are drawn from a dual-frame telephone survey conducted in Hungary by the Századvég Foundation in January 2021. The sample was drawn using a stratified sampling procedure. The response rate (AAPOR RR1) was 24 percent, and the total number of completed interviews was 1000. Only citizens aged 18 or older were eligible to participate in the survey. The Kish method[i] was used for within-household selection in the landline interviews. The data were weighted on four basic demographic variables (gender, age, settlement type, and educational level) through an iterative procedure. I used SPSS’s ‘rake weight’ function to weight the data. Raking repeatedly adjusts the weights, one variable at a time, until the weighted distributions of all the variables simultaneously match fixed target values.
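The study used SPSS for weighting. To illustrate what raking does, the sketch below is a plain Python implementation of iterative proportional fitting. It is not SPSS's implementation, which may differ in details such as convergence criteria or weight trimming. On each pass, the weights are rescaled so that the weighted distribution of one variable matches its target shares. The procedure then cycles through all the variables until every margin agrees. The function name, record layout, and target shares are hypothetical.

```python
from collections import defaultdict


def rake(records, targets, max_iter=100, tol=1e-8):
    """Rake (iterative proportional fitting) weights to marginal targets.

    records: list of dicts, e.g. {"gender": "f", "age": "young"} (hypothetical layout)
    targets: {variable: {category: target share}}; shares of each variable sum to 1
    Returns weights rescaled so that they sum to len(records).
    """
    weights = [1.0] * len(records)
    for _ in range(max_iter):
        largest_adjustment = 0.0
        for var, shares in targets.items():
            # Weighted total of each category of this variable, before adjusting
            totals = defaultdict(float)
            for w, rec in zip(weights, records):
                totals[rec[var]] += w
            grand_total = sum(totals.values())
            # One multiplicative factor per category brings this margin onto target
            factors = {cat: shares[cat] * grand_total / tot for cat, tot in totals.items()}
            weights = [w * factors[rec[var]] for w, rec in zip(weights, records)]
            largest_adjustment = max(largest_adjustment,
                                     max(abs(f - 1.0) for f in factors.values()))
        if largest_adjustment < tol:  # every margin was already on target this pass
            break
    scale = len(records) / sum(weights)
    return [w * scale for w in weights]
```

When every combination of categories (for example, every gender-by-age cell) is populated, the weighted shares converge to the targets. If some cells are empty, raking may converge slowly or not at all.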

Measures

The first part of the questionnaire covered factual data on the respondents’ position in the labor market. In the second part, we used standard questions from the European Social Survey (ESS) to measure institutional trust and attitudes towards immigrants (see, e.g., the questionnaire of Round 10). Some of the demographic questions were at the beginning of the questionnaire, while others were towards the end. At the end of the interview, we asked about the circumstances of the interview (detailed below).

Multitasking

Building on measures used in previous studies (Aizpurua et al., 2018a; Aizpurua et al., 2018b; Ansolabehere & Schaffner, 2015; Heiden et al., 2017; Park et al., 2020), multitasking was measured with a self-reported question block at the end of the interview. Respondents were asked the following question: “During the time we’ve been on the phone, what other activities were you engaged in?” Although one study has shown that providing examples may not influence responses (Aizpurua et al., 2020), we chose not to provide examples to avoid any potential bias. The question was open-ended, and answers were field-coded by the interviewers.

Distractions

Similarly, a self-reported measure of distractions during the interview was used. The question wording was as follows: “During the time we’ve been on the phone, how much have you felt that something was disturbing or distracting you?” A rating scale was used ranging from 1 (“Not at all”) to 5 (“Very much”).

Attention

Respondents’ attention was also assessed with a self-reported measure. At the end of the interview, respondents were asked to evaluate the attention they paid to answering the questions (“How much have you been able to pay attention to the questions during the interview?”). A similar 1–5 rating scale was used (from 1, “Not at all”, to 5, “Completely”).
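The results report the share of respondents who multitasked, who were "at least moderately" distracted, and who could "fully" pay attention. The sketch below shows one way to derive these indicators from the field-coded activity list and the two 1–5 ratings. The cut-offs are assumptions for illustration, not the study's documented coding: a distraction rating of 3 or higher counts as distracted, and only an attention rating of 5 counts as full attention. The activity codes and field names are also hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Response:
    # Field-coded secondary activities (hypothetical codes such as "working", "tv")
    activities: list = field(default_factory=list)
    distraction: int = 1  # 1 = "Not at all" ... 5 = "Very much"
    attention: int = 5    # 1 = "Not at all" ... 5 = "Completely"


def code_indicators(r: Response) -> dict:
    """Binary and count indicators for one respondent (assumed cut-offs)."""
    return {
        "multitasker": len(r.activities) > 0,
        "n_activities": len(r.activities),
        # Assumption: 3 ("moderately") or higher counts as distracted
        "distracted": r.distraction >= 3,
        # Assumption: only the top category counts as full attention
        "full_attention": r.attention == 5,
    }


def share(rows, key):
    """Unweighted percentage of respondents for whom an indicator is True."""
    return 100.0 * sum(1 for row in rows if row[key]) / len(rows)
```

The published percentages are presumably based on the weighted data. A weighted version would sum respondent weights instead of counting rows.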

Interview characteristics

Several characteristics of the interview were assessed, such as the setting of the interview (where the respondents were located during the interview), interview length, and the device of completion.

Respondent characteristics

Respondents’ gender, age, educational level, place of residence, household size and characteristics, and self-reported financial situation were also included in the analysis.

Outcome data quality indicators

Five data quality indicators, drawn from earlier studies, were used: (1) item-nonresponse, (2) nondifferentiation, (3) socially desirable responding, (4) length of open responses, and (5) interview duration. Item-nonresponse was calculated as the number of non-substantive answers (‘Do not know’ or ‘Refused’) given in the whole questionnaire. The mean of this variable was 0.19 (SD=0.69, Med=0, Min=0, Max=9); thus, most respondents gave a valid response to every question in the survey. Nondifferentiation was calculated as the standard deviation of the answers given to a set of questions with the same response options (see Appendix I for the exact questions), with a mean of 2.08 (SD=1.03); lower values indicate less differentiated answers. Socially desirable responding was calculated as the number of socially desirable responses given to a special set of 13 questions suggested by Roberts and Jäckle (2012) (see Appendix I for the exact questions). The mean was 4.48 (SD=2.36, Med=4). The length of open responses was measured in characters, with a mean of 12.90 (SD=16.90), ranging between 0 and 347 characters (see Appendix I for the question). Interview duration was measured in seconds. Extremely short and extremely long interviews (more than two standard deviations from the mean) were excluded. The final variable had a mean of 12.30 minutes (SD=3.70 minutes), with a minimum of 5.50 and a maximum of 23.40 minutes.[ii]
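The five indicators can be computed per respondent roughly as sketched below. Several details are assumptions, not the study's specification: the answer codes ("DK", "REF"), the item keys for socially desirable answers, the population standard deviation for nondifferentiation, dropping non-substantive answers before computing it, and the sample standard deviation for trimming durations. Appendix I lists the actual questions.

```python
import statistics

NONSUBSTANTIVE = {"DK", "REF"}  # hypothetical codes for 'Do not know' / 'Refused'


def item_nonresponse(answers):
    """Number of non-substantive answers across the whole questionnaire."""
    return sum(1 for a in answers if a in NONSUBSTANTIVE)


def nondifferentiation(battery):
    """SD of one respondent's answers to items sharing a response scale.
    Lower values mean less differentiated answers. Non-substantive answers
    are dropped first (assumption)."""
    valid = [a for a in battery if a not in NONSUBSTANTIVE]
    return statistics.pstdev(valid) if len(valid) > 1 else None


def sdb_count(answers, desirable):
    """Number of items answered in the socially desirable direction.
    `desirable` maps a (hypothetical) item id to the set of answers treated as desirable."""
    return sum(1 for item, a in answers.items() if a in desirable.get(item, set()))


def open_length(text):
    """Length of an open response in characters, ignoring surrounding whitespace."""
    return len(text.strip())


def trim_durations(seconds, k=2.0):
    """Keep interview durations within mean +/- k standard deviations."""
    m = statistics.mean(seconds)
    sd = statistics.stdev(seconds)
    return [s for s in seconds if m - k * sd <= s <= m + k * sd]
```

Trimming on the mean and standard deviation is itself sensitive to the outliers it removes. This is one reason other studies prefer median-based or log-scale cut-offs for response times.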

Analysis plan

First, descriptive statistics on the prevalence of multitasking and distractions are presented. To explore what drives multitasking and distractions, a binary logistic regression model was fitted for each of the two variables. To assess the impact of multitasking, distractions, and attention on data quality, separate regression models were fitted with each data quality indicator as the dependent variable. Poisson models were fitted for item-nonresponse, socially desirable responding (SDB), and the length of open responses, since these were count variables with skewed distributions. Linear models were fitted for nondifferentiation and for interview duration (log-transformed). Multitasking, distractions, and attention were included as predictors, together with the other variables used in the first models as controls.
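The source does not show how the models were estimated. The sketch below is not the author's code. It is a from-scratch illustration of what the two generalized linear model families in the plan estimate. The function fits a logistic or Poisson model by Newton-Raphson; with these canonical links, this is equivalent to iteratively reweighted least squares. It ignores survey weights and returns coefficients only, without standard errors. The function names are hypothetical.

```python
import math


def _solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (small systems)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_glm(X, y, family, max_iter=50, tol=1e-10):
    """Newton-Raphson fit of a GLM with canonical link.

    family: "poisson" (count outcome, log link) or "logit" (0/1 outcome, logit link)
    X: list of rows, each starting with 1.0 for the intercept
    """
    p = len(X[0])
    beta = [0.0] * p
    for _ in range(max_iter):
        eta = [sum(xj * bj for xj, bj in zip(row, beta)) for row in X]
        if family == "poisson":
            mu = [math.exp(e) for e in eta]
            w = mu[:]  # Poisson variance equals the mean
        elif family == "logit":
            mu = [1.0 / (1.0 + math.exp(-e)) for e in eta]
            w = [m * (1.0 - m) for m in mu]  # Bernoulli variance
        else:
            raise ValueError(f"unknown family: {family}")
        # Score vector X'(y - mu) and information matrix X'WX
        score = [sum(row[j] * (yi - mi) for row, yi, mi in zip(X, y, mu)) for j in range(p)]
        info = [[sum(row[j] * row[k] * wi for row, wi in zip(X, w)) for k in range(p)]
                for j in range(p)]
        step = _solve(info, score)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta
```

With a single binary predictor, the Poisson slope equals the log of the ratio of the two group means, and the logit slope equals the log of the odds ratio. The checks below use this property.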

Results

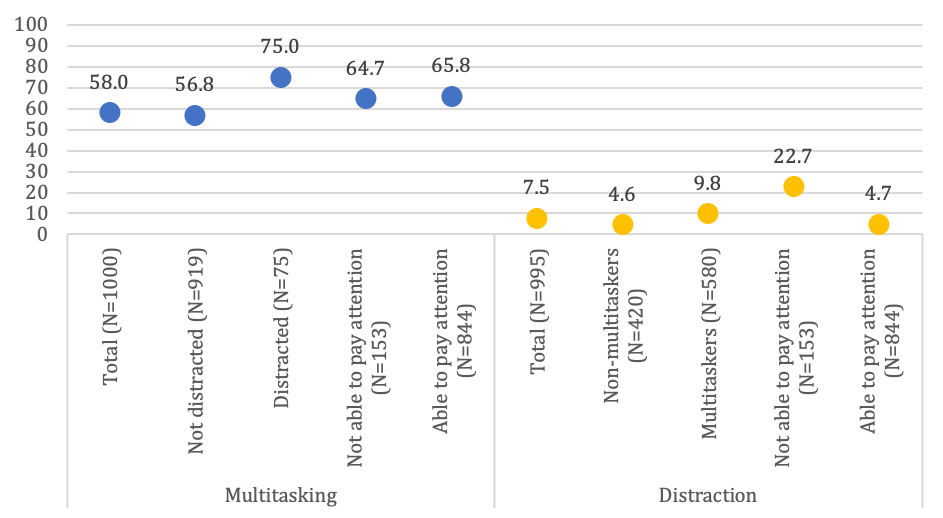

The prevalence of multitasking, distraction, and attention is presented in Figure 1. Overall, multitasking was widespread: the majority of respondents (58%) engaged in at least one activity besides answering the survey questions. Of the multitaskers, 71.5% engaged in only one additional activity. Working was the most common activity (16.9%), followed by watching television (15.1%) and housework (14.0%). Only 7.5 percent of respondents reported being at least moderately distracted during the interview, and 84.7 percent of all respondents reported being able to pay full attention to the questions. Multitaskers were about twice as likely as non-multitaskers to be distracted during the interview (9.8%) and were able to pay less attention to the questions. Similarly, only 54.1 percent of distracted respondents reported that they had been able to pay full attention to the questions, compared with 87.4 percent of those who were not distracted.
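The distraction rate is given for multitaskers (9.8%) but not for non-multitaskers. Let D denote being at least moderately distracted, M multitasking, and A full attention. Assume the published percentages share the same base; they are rounded and possibly weighted, so the result is only approximate. Under that assumption, the law of total probability gives a rough value for the missing rate and a consistency check on the attention figures:

```latex
\begin{aligned}
P(D) &= P(M)\,P(D \mid M) + \bigl(1 - P(M)\bigr)\,P(D \mid \lnot M) \\
P(D \mid \lnot M) &= \frac{0.075 - 0.58 \times 0.098}{1 - 0.58} = \frac{0.01816}{0.42} \approx 0.043 \\
\frac{P(D \mid M)}{P(D \mid \lnot M)} &\approx \frac{0.098}{0.043} \approx 2.3 \\[4pt]
P(A) &= P(D)\,P(A \mid D) + \bigl(1 - P(D)\bigr)\,P(A \mid \lnot D) = 0.075 \times 0.541 + 0.925 \times 0.874 \approx 0.849
\end{aligned}
```

The implied ratio of about 2.3 is in line with multitaskers being roughly twice as likely to be distracted. The recomputed overall attention share (about 84.9%) is close to the reported 84.7%, which supports reading that figure as a share of all respondents.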

Fig. 1. Levels of multitasking and distractions, %

In general, the variables included in the models explained little of the variance in multitasking or distractions (Nagelkerke R² = .07 and .16, respectively). Men had lower odds of multitasking than women (OR=0.73), and watching children during the interview increased the odds of multitasking by a factor of 1.75. Multitasking, distractions, and attention were strongly related. Those who were distracted had more than twice the odds of multitasking (and vice versa), and the odds of being distracted were much lower among those who could pay attention to the survey (OR=0.17). The only other variable associated with distractions was the setting of the interview: being at home during the interview decreased the odds of being distracted. No other variables (such as the type of phone) had an effect on either outcome. The absence of an effect of being in a car or on the street may be explained by the low frequency of these settings.
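Nagelkerke's R² rescales the Cox-Snell R² by its maximum attainable value, so that a perfectly fitting model reaches 1. The statistic can be computed from the log-likelihoods of the intercept-only model and the fitted model, as sketched below. The function names are illustrative, and the values in the checks are invented, not the study's.

```python
import math


def null_loglik_binary(n_events, n):
    """Log-likelihood of an intercept-only logit model for a binary outcome."""
    p = n_events / n
    return n_events * math.log(p) + (n - n_events) * math.log(1.0 - p)


def nagelkerke_r2(ll_null, ll_model, n):
    """Nagelkerke pseudo-R² from natural-log likelihoods of the null and fitted models."""
    # Cox-Snell R² = 1 - (L0 / LM)^(2/n), computed on the log scale
    cox_snell = 1.0 - math.exp(2.0 * (ll_null - ll_model) / n)
    # Its maximum, reached by a perfectly fitting model (log LM = 0)
    max_cox_snell = 1.0 - math.exp(2.0 * ll_null / n)
    return cox_snell / max_cox_snell
```

Because the statistic is bounded by the rescaling rather than by explained variance, values such as .07 and .16 are best read as a relative measure of fit, not as shares of variance explained.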

Table I. Results of the binary logistic models predicting multitasking and distractions

| Predictors | Multitasking: Odds Ratio | Multitasking: 95% CI | Distractions: Odds Ratio | Distractions: 95% CI |
|---|---|---|---|---|
| Intercept | 2.74 | 0.78 – 9.90 | 0.85 | 0.10 – 7.37 |
| Gender (ref: female) | 0.73 * | 0.55 – 0.95 | 1.18 | 0.70 – 2.01 |
| Age | 1.01 | 1.00 – 1.02 | 0.98 | 0.96 – 1.00 |
| Vocational school (ref: lowest level) | 1.00 | 0.67 – 1.49 | 1.12 | 0.51 – 2.43 |
| High school (ref: lowest level) | 0.78 | 0.51 – 1.18 | 0.62 | 0.27 – 1.43 |
| College or higher (ref: lowest level) | 0.71 | 0.46 – 1.11 | 0.90 | 0.38 – 2.11 |
| Household size | 1.00 | 0.90 – 1.12 | 0.97 | 0.78 – 1.18 |
| Watching children during the interview | 1.75 ** | 1.19 – 2.58 | 0.93 | 0.45 – 1.90 |
| Being home | 0.71 | 0.31 – 1.55 | 0.30 * | 0.11 – 0.90 |
| Working | 0.90 | 0.37 – 2.06 | 0.43 | 0.14 – 1.41 |
| Being in a car | 1.96 | 0.60 – 6.89 | 0.60 | 0.13 – 2.63 |
| Being on the street | 0.40 | 0.12 – 1.23 | 1.11 | 0.24 – 4.84 |
| Landline (ref: mobile) | 0.96 | 0.59 – 1.57 | 1.17 | 0.36 – 3.08 |
| Distracted (ref: not distracted) | 2.12 ** | 1.22 – 3.83 | | |
| Attention | 0.78 | 0.53 – 1.15 | 0.17 *** | 0.10 – 0.29 |
| Multitasker (ref: non-multitasker) | | | 2.17 ** | 1.24 – 3.96 |
| N | 992 | | 992 | |
| Nagelkerke R² | .07 | | .16 | |

Note. * p < .05, ** p < .01, *** p < .001
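The odds ratios in Table I are exponentiated logistic regression coefficients. The sketch below converts a coefficient and its standard error into an odds ratio with a Wald 95% interval. It also works backwards from a reported interval to the implied log-scale standard error. This assumes the intervals are symmetric Wald intervals on the log-odds scale; if Table I used another method (e.g., profile likelihood), the back-calculation is only approximate. The function names are illustrative.

```python
import math

Z95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def odds_ratio_ci(beta, se, z=Z95):
    """Odds ratio and Wald 95% CI from a logit coefficient and its standard error."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def se_from_ci(lower, upper, z=Z95):
    """Log-scale standard error implied by a reported odds-ratio interval,
    assuming a symmetric Wald interval on the log-odds scale."""
    return (math.log(upper) - math.log(lower)) / (2 * z)
```

For the "Watching children" row, `se_from_ci(1.19, 2.58)` gives a log-scale standard error of about 0.197. The geometric midpoint of the interval, √(1.19 × 2.58) ≈ 1.75, matches the reported odds ratio.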

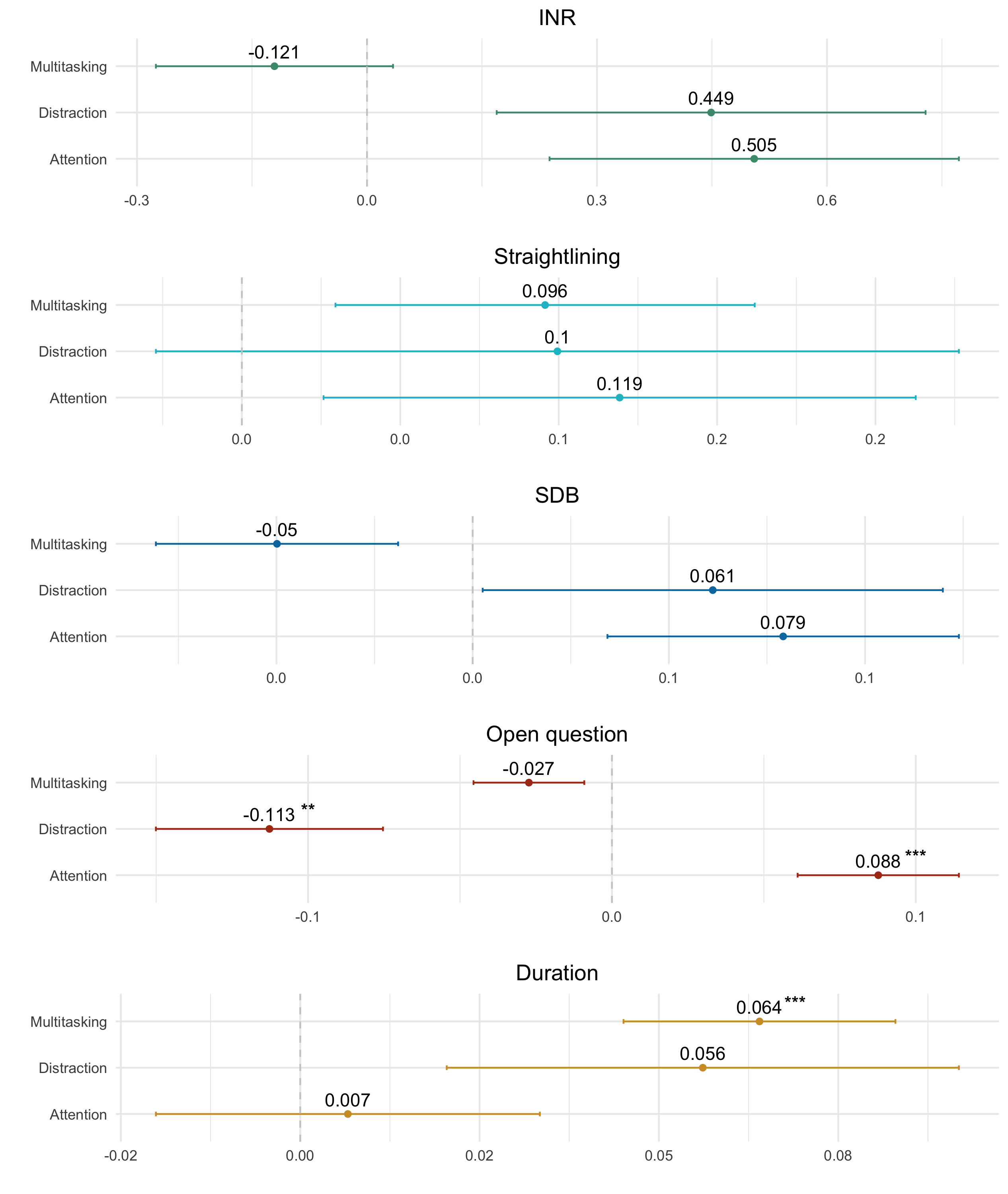

Next, I turn to whether multitasking or distractions affected data quality. As shown in Figure 2 and Appendix II, only the length of open responses and interview duration were associated with multitasking, distractions, or attention, while item-nonresponse, nondifferentiation, and socially desirable responding were not. Distractions decreased the length of open responses, while high attention increased it. Finally, multitaskers took longer to complete the interview than non-multitaskers.
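The coefficients behind Figure 2 act multiplicatively on the original scale of the outcomes. Poisson (log-link) coefficients and coefficients from a linear model fitted to log(duration) can be translated into more intuitive quantities as sketched below. The coefficient values in the note after the code are hypothetical and do not come from Appendix II.

```python
import math


def rate_ratio(beta):
    """Poisson (log-link) model: factor by which the expected count changes
    for a one-unit increase in the predictor."""
    return math.exp(beta)


def pct_change_log_outcome(beta):
    """Linear model fitted to log(y): % change in the geometric mean of y
    for a one-unit increase in the predictor."""
    return 100.0 * (math.exp(beta) - 1.0)
```

Take two hypothetical coefficients. A Poisson coefficient of -0.2 for distraction would mean open responses about 18% shorter in expected character count (exp(-0.2) ≈ 0.82). A coefficient of 0.05 for multitasking in the log-duration model would mean interviews about 5% longer.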

Fig. 2. The effect of multitasking, distractions, and attention on the five data quality indicators

Note. * p < .05, ** p < .01, *** p < .001

Conclusion/Discussion

This study aimed to assess the prevalence of multitasking and distractions, as well as their impact on data quality, in a telephone survey conducted in Hungary. In line with earlier findings (Aizpurua et al., 2018a; Aizpurua et al., 2018b; Heiden et al., 2017; Lavrakas et al., 2010; Park et al., 2020), the majority of respondents multitasked. The types of secondary activities respondents engaged in also corroborated previous findings, except that working was the most common activity during the interview. No further information was available on the type of work respondents were doing. Perhaps the high prevalence of working during the interview reflects the many people working from home during the pandemic. Multitasking was strongly associated with watching children, and distractions with the setting of the interview, while respondent characteristics were largely unrelated to multitasking or distractions. Interestingly, the level of multitasking was the same in mobile and landline interviews (as also found by Park et al., 2020). This contradicts the assumption that using a mobile phone encourages respondents to engage in more secondary activities than using a landline phone. In fact, multitasking was common at home.

In addition, only a small minority of respondents reported at least moderate distractions during the survey or being unable to pay full attention to the questions. In line with earlier studies (Aizpurua et al., 2018a; Höhne & Schlosser, 2018; Wenz, 2019), multitasking and distraction went hand in hand. Also, distractions often drew respondents’ attention away from the questions.

Multitasking had a limited effect on data quality, indicating that even when respondents engaged in other activities, they were able to divide their attention and perform both tasks successfully, either because the cognitive load was low enough or because the activities required cognitive efforts different from those of answering a survey question. In contrast to the findings of Aizpurua et al. (2018a), interviews were longer when respondents were multitasking. Similarly, distractions had little effect on data quality (except on the length of open responses). This result is consistent with earlier studies of telephone (Aizpurua et al., 2018a) and online surveys (Wenz, 2019).

These results are good news for survey practitioners, although the challenge of multitasking should not be neglected. Two explanations of the null effects seem plausible. First, the survey was relatively short. Long interviews may cause exhaustion and impose a greater cognitive burden, which may make respondents less able to multitask as efficiently as in shorter interviews. Second, earlier studies found a negative relationship between multitasking and data quality only when questions were sensitive or tested knowledge (Aizpurua et al., 2018a; Park et al., 2020); in this survey, questions were neither sensitive nor knowledge tests. Moreover, the longer interview duration associated with multitasking may be a concern in other studies. Long interview duration is not necessarily an indication of poor data quality, although earlier studies show that it can be (Draisma & Dijkstra, 2004; Heerwegh, 2003; Vandenplas et al., 2019). Slow completion may indicate comprehension problems (e.g., asking for clarification) or failures of retrieval, integration, or mapping onto response options. Long interviews are also often associated with higher drop-out rates.

One limitation of this study is that the assessment of multitasking and distractions was based on respondents’ self-reports. Self-reports may introduce bias through socially desirable responding or recall problems (Ansolabehere & Schaffner, 2015; Sendelbah et al., 2016; Wenz, 2019; Zwarun & Hall, 2014). This likely leads to an underestimation of multitasking and distractions. Using an online survey and paradata, Höhne et al. (2020) found that self-reports of distractions were far lower than the rates measured by paradata. In the study of Aizpurua et al. (2018a), interviewers assessed one quarter of the respondents as distracted, a rate more than three times the share of self-reported distraction in the present survey (7.5 percent). Although validating self-reports in telephone surveys is challenging, it would be an important direction for future studies. An additional limitation is that multitasking and distractions were measured at the survey level, i.e., we do not know whether they affected all questions or only a subset. This may mask some of the effects. Future studies could ask about multitasking and distractions more frequently, for instance, after each block of the questionnaire, to get a more nuanced picture. Another limitation relates to generalizability. Findings from Hungary cannot necessarily be generalized to other countries, as cultural differences may alter respondent behavior and its effects. For instance, general values (such as Hofstede’s individualism–collectivism, power distance, or uncertainty avoidance [Hofstede, 1980]) have been found to influence item-nonresponse rates (Lee et al., 2017; Meitinger & Johnson, 2020).
Since Hungarian society is considered individualistic[iii], it is possible that respondents in general put less effort into answering survey questions, or multitasked more frequently, because individualistic societies place greater value on privacy, whereas in collectivist societies people may be more inclined to complete response tasks conscientiously, much as they would respond to requests from, e.g., authorities.

Another promising direction for future research would be to examine secondary activities and distractions in more detail. Little is known about whether respondents engage in these activities sequentially (e.g., watching part of a TV program for a while, then switching back to answering questions with full attention) or concurrently (e.g., watching TV during the entire interview), or about the nature of the reported distractions. A more in-depth understanding of secondary activities and distractions would allow researchers to better assess their cognitive impact on the response process.

This study contributes to assessing the prevalence and impact of respondent multitasking and distractions in survey taking. The encouraging results indicate that although multitasking is very common in telephone surveys, when the questionnaire is relatively short and the questions are neither sensitive nor knowledge tests (i.e., the cognitive load is relatively low), respondents appear able to manage their multitasking without harming the quality of their responses.

Appendix

Appendix I. Questions used for data quality indicators

Appendix II. Results of the models predicting the five data quality indicators

Endnotes

[i] In the Kish selection method, the interviewer lists the eligible household members in a prescribed order, and a preassigned selection table determines which of them is interviewed, so that the selection is random rather than left to the interviewer. The method offers some advantages over the first- or last-birthday methods, since not every culture celebrates birthdays, and the birthdays of household members may not be known by the person who is contacted first.

[ii] The wide range of durations was due to the high number of conditional questions in the first part of the questionnaire.

[iii] https://www.hofstede-insights.com/country-comparison/hungary/. Accessed on 23 July, 2022.

References

- Aizpurua, E., Heiden, E. O., Park, K. H., Wittrock, J., & Losch, M. E. (2018a). Investigating Respondent Multitasking and Distraction Using Self-reports and Interviewers’ Observations in a Dual-frame Telephone Survey. Survey Methods: Insights from the Field (SMIF). https://doi.org/10.13094/SMIF-2018-00006

- Aizpurua, E., Park, K. H., Heiden, E. O., & Losch, M. E. (2020). I say, they say: Effects of providing examples in a question about multitasking. International Journal of Social Research Methodology, 1–7.

- Aizpurua, E., Park, K. H., Heiden, E. O., Wittrock, J., & Losch, M. E. (2018b). Predictors of Multitasking and its Impact on Data Quality: Lessons from a Statewide Dual-frame Telephone Survey. Survey Practice, 11(2), 3723. https://doi.org/10.29115/SP-2018-0025

- Anderson, J. R. (2008). How can the human mind occur in the physical universe? Oxford University Press.

- Ansolabehere, S., & Schaffner, B. F. (2015). Distractions: The incidence and consequences of interruptions for survey respondents. Journal of Survey Statistics and Methodology, 3(2), 216–239.

- Antoun, C., Couper, M. P., & Conrad, F. G. (2017). Effects of mobile versus PC web on survey response quality: A crossover experiment in a probability web panel. Public Opinion Quarterly, 81(S1), 280–306.

- Clinton-Lisell, V. (2021). Stop multitasking and just read: Meta-analyses of multitasking’s effects on reading performance and reading time. Journal of Research in Reading, 44(4), 787–816. https://doi.org/10.1111/1467-9817.12372

- Draisma, S., & Dijkstra, W. (2004). Response latency and (para)linguistic expressions as indicators of response error. In Methods for testing and evaluating survey questionnaires (pp. 131–147).

- Heerwegh, D. (2003). Explaining response latencies and changing answers using client-side paradata from a web survey. Social Science Computer Review, 21(3), 360–373.

- Heiden, E. O., Wittrock, J., Aizpurua, E., & Losch, M. E. (2017). The impact of multitasking on survey data quality: Observations from a statewide telephone survey. Annual Conference of the American Association for Public Opinion Research, 18–21.

- Hofstede, G. H. (1980). Culture’s consequences: International differences in work-related values. Sage.

- Höhne, J. K., & Schlosser, S. (2018). Investigating the adequacy of response time outlier definitions in computer-based web surveys using paradata SurveyFocus. Social Science Computer Review, 36(3), 369–378.

- Höhne, J. K., Schlosser, S., Couper, M. P., & Blom, A. G. (2020). Switching away: Exploring on-device media multitasking in web surveys. Computers in Human Behavior, 111, 106417. https://doi.org/10.1016/j.chb.2020.106417

- Kennedy, C. K. (2010). Nonresponse and measurement error in mobile phone surveys. https://deepblue.lib.umich.edu/bitstream/handle/2027.42/75977/ckkenned_1.pdf?sequence=1&isAllowed=y

- König, C. J., Bühner, M., & Mürling, G. (2005). Working Memory, Fluid Intelligence, and Attention Are Predictors of Multitasking Performance, but Polychronicity and Extraversion Are Not. Human Performance, 18(3), 243–266. https://doi.org/10.1207/s15327043hup1803_3

- Kraushaar, J. M., & Novak, D. C. (2010). Examining the affects of student multitasking with laptops during the lecture. Journal of Information Systems Education, 21(2), 241–252.

- Krosnick, J. A. (1991). Response strategies for coping with the cognitive demands of attitude measures in surveys. Applied Cognitive Psychology, 5(3), 213–236.

- Lang, A. (2000). The limited capacity model of mediated message processing. Journal of Communication, 50(1), 46–70.

- Lavrakas, P. J., Tompson, T. N., Benford, R., & Fleury, C. (2010). Investigating data quality in cell phone surveying. Annual American Association for Public Opinion Research conference, Chicago, Illinois, 13–16.

- Lee, S., Liu, M., & Hu, M. (2017). Relationship Between Future Time Orientation and Item Nonresponse on Subjective Probability Questions: A Cross-Cultural Analysis. Journal of Cross-Cultural Psychology, 48(5), 698–717. https://doi.org/10.1177/0022022117698572

- May, K. E., & Elder, A. D. (2018). Efficient, helpful, or distracting? A literature review of media multitasking in relation to academic performance. International Journal of Educational Technology in Higher Education, 15(1), 13. https://doi.org/10.1186/s41239-018-0096-z

- Meitinger, K. M., & Johnson, T. P. (2020). Power, Culture and Item Nonresponse in Social Surveys. In P. S. Brenner (Ed.), Understanding Survey Methodology: Sociological Theory and Applications (pp. 169–191). Springer International Publishing. https://doi.org/10.1007/978-3-030-47256-6_8

- Park, K. H., Aizpurua, E., Heiden, E. O., & Losch, M. E. (2020). Letting the cat out of the bag: The impact of respondent multitasking on disclosure of socially undesirable information and answers to knowledge questions. Survey Methods: Insights from the Field. https://doi.org/10.13094/SMIF-2020-00014

- Salvucci, D. D., & Taatgen, N. A. (2011). The multitasking mind. Oxford University Press.

- Sendelbah, A., Vehovar, V., Slavec, A., & Petrovčič, A. (2016). Investigating respondent multitasking in web surveys using paradata. Computers in Human Behavior, 55, 777–787.

- Tourangeau, R., Rips, L. J., & Rasinski, K. (2000). The psychology of survey response. Cambridge University Press.

- Vandenplas, C., Beullens, K., & Loosveldt, G. (2019). Linking interview speed and interviewer effects on target variables in face-to-face surveys. Survey Research Methods, 13(3), 249–265.

- Wenz, A. (2019). Do Distractions During Web Survey Completion Affect Data Quality? Findings From a Laboratory Experiment. Social Science Computer Review, 39(1), 148–161. https://doi.org/10.1177/0894439319851503

- Zwarun, L., & Hall, A. (2014). What’s going on? Age, distraction, and multitasking during online survey taking. Computers in Human Behavior, 41, 236–244. https://doi.org/10.1016/j.chb.2014.09.041