Targeting Incentives in Mature Probability-based Online Panels

Research note

Lipps, O., Felder, M., Lauener, L., Meisser, A., Pekari, N., Rennwald, L. & Tresch, A. (2023). Targeting Incentives in Mature Probability-based Online Panels. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=18404

The data used in this paper are available on request from the authors.

© the authors 2023. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

This paper complements earlier work on incentive effects in wave 5 of the Panel Survey of the Swiss Election Study (Selects), which examined whether incentives can be reduced for respondents with a high estimated response propensity. In the present paper, we study whether incentives can be reduced in wave 6 for the complementary group, the low-propensity respondents. In wave 5, continuing an (expensive) conditional CHF 10 cash incentive for high-propensity respondents produced only slightly higher response rates and a similar sample composition compared with an (inexpensive) lottery (5 x 300 CHF). For low-propensity respondents in wave 6, however, switching to the lottery produced an 8 percentage point lower response rate than continuing a conditional CHF 20 cash incentive, although sample compositions were similar. In addition, the more costly wave 5 incentive (CHF 10 as opposed to the lottery) continued to produce slightly higher response rates among high-propensity respondents in wave 6. Because such effects accumulate over waves, we need to qualify our earlier statements about the small effects in wave 5.

Keywords

costs, incentives, mature panel, online, probability sample


Introduction

We know little about the effects on response and costs of targeting incentives to different respondent groups in mature probability-based online panel surveys. There is literature on responsive design approaches, such as mode switching, offering incentives, or generally increasing the level of effort to raise the response rates of more difficult cases (Brick & Tourangeau 2017, Tourangeau et al. 2017), but targeted incentives for groups with differential nonresponse have mostly been tested in cross-sectional surveys (Link & Burks 2013). In one of the rare examples based on a panel survey, Kay et al. (2001) used different incentives for households with different response behavior in the previous wave, but without an experimental design. Martin & Winters (2001) tested debit cards worth 20 USD or 40 USD, or no incentive, in waves 8 and 9 among nonrespondents to wave 7 or 8 of the US Survey of Income and Program Participation (SIPP). They found that incentives improved conversion rates, but that the higher incentive did not improve them further compared with the lower one. Households in the high-poverty stratum already responded to a 20 USD incentive, unlike households in the low-poverty stratum. Based on an experiment in the Health and Retirement Study (HRS), Rodgers (2002) found the most favorable cost-benefit ratio when offering a higher incentive to households that had not responded in the previous wave.

This scarcity of experimental research on targeting incentives in probability-based online panel surveys is surprising. First, response rates are (still) considerably lower in online surveys than in interviewer-administered surveys (Daikeler et al. 2020). Second, incentive effects are more complex in panel surveys because they may be temporary, constant, delayed or cumulative over time (Nicolaas et al. 2019). Third, in a mature panel with five completed waves, much is known about sample members from their answers in previous waves, and this information can be used to identify low-propensity respondents. Therefore, targeting incentives of different values to different (response propensity) groups may be effective in terms of costs and response rates in a mature panel.

In this paper, we complement the findings from an incentive experiment on response rates and costs in wave 5 of the Panel Survey of the Swiss Election Study (Selects) with findings from an incentive experiment in wave 6. In wave 4, the sample was divided into members with a low and members with a high response propensity. In wave 5, those with a low response propensity continued to receive a conditional CHF 20 cash incentive, while those with a high response propensity were randomized into a group that continued to be offered a conditional CHF 10 cash incentive and a group that was offered a conditional lottery incentive (5 x 300 CHF). Neither response rates (82.9% in the cash incentive group vs. 80.0% in the lottery incentive group) nor sample composition differed significantly between the two groups (see Lipps et al. 2022). However, costs were much lower in the lottery incentive group than in the conditional cash incentive group. Hence, in wave 6, the whole high-propensity group was offered the cost-saving conditional lottery (5 x 300 CHF), while those with a low response propensity were randomized into a group that continued to receive a conditional CHF 20 cash incentive and a group that took part in the 5 x 300 CHF lottery. The aim of the wave 6 experiment was to find out whether high incentives are needed to motivate low-propensity respondents to continue the survey, or whether, as for high-propensity respondents, intrinsic motivation in this group is high enough to allow for a low-cost incentive. In addition, we analyze whether there are carry-over effects, that is, whether the different wave 5 incentives in the high-propensity group affect participation in wave 6.

Cost efficiency of incentives in mature panel surveys

In surveys, prepaid cash is both the most effective incentive with respect to response rates and, at the same time, the most expensive one (Singer & Ye 2013). First, according to social exchange theory, prepaid incentives work better than promised incentives because of the “norm of reciprocity”: a prepaid incentive creates an obligation to reciprocate by participating in the survey (Gouldner 1960). Second, leverage-salience theory postulates that the decision to participate depends on the subjective weight (leverage) a sample member gives to factors such as the survey topic or incentives, and on how salient these factors are made in the survey request (Groves et al. 2000). Consequently, more intrinsically motivated people (e.g., those interested in the survey topic) would participate with a small or even no incentive, while others need higher incentives to participate (Deci & Ryan 2013). Cash works better than other incentives because its value is universally understood (Becker et al. 2019).

Still, conditional cash incentives or lotteries can work surprisingly well in longitudinal web surveys compared with offline and/or cross-sectional surveys (Nicolaas et al. 2019, Wong 2020). For monetary incentives, whether conditional or prepaid, the overall survey budget imposes financial constraints (Olson et al. 2021). Fortunately, in longitudinal surveys, higher amounts do not necessarily yield higher response rates (Wong 2020). Costs can likely be saved because respondents who participate in several waves are more likely to be driven by factors other than the incentives themselves (Pforr et al. 2015; Sacchi et al. 2018), such as a higher interest in the survey, higher intrinsic motivation, or simply being used to taking the survey at each wave. In addition, panel attrition leaves a sample of loyal and interested respondents who may be less responsive to extrinsic incentives (Laurie & Lynn 2009). For these respondents, (inexpensive) conditional incentives in later waves may be sufficient motivation to continue responding. For example, Pekari (2017) found that, after a first wave with an unconditional cash incentive, adding a cost-efficient lottery of iPads was just as effective as an expensive conditional cash prize at reducing attrition in waves 2 and 3. Lipps et al. (2022) report that the response rate of high-propensity respondents is largely independent of whether they are offered a (low-cost) lottery or a (high-cost) conditional cash incentive, and that sample composition was very similar in the two designs. For more difficult respondents, however, it may be worth using more expensive incentives (Becker et al. 2019): there is evidence that incentives have a stronger effect on people with low response propensities (Wong 2020). Finally, regarding reducing incentives for high-propensity respondents, the positive effects of an incentive in a panel seem to persist even without repeated incentives (Laurie & Lynn 2009).

Data and experimental design

The Swiss Election Study (Selects) investigates the determinants of turnout and vote choice in national parliamentary elections. We use the self-administered online Panel Survey (Selects 2023), one of the main components of the Selects 2019 study. The Selects Panel Survey traditionally investigates the dynamics of opinion formation and vote intention during the election year. In 2019, it was decided for the first time to extend the Panel Survey with yearly waves until 2023, to capture changes in problem perceptions, attitudinal stances, and party choice between two successive national elections. Six waves have been conducted so far: three in 2019 (one before the election campaign, one during the campaign and one after the federal elections) and three yearly waves in 2020, 2021 and 2022.

The initial sample was drawn at random by the Swiss Federal Statistical Office from the Swiss population register and comprised 25,575 Swiss citizens aged 18 and above. Sample members received a pre-notification letter one week before the start of the online survey, together with an unconditional incentive of CHF 10 in the form of a postal check. In addition, respondents were told that if they participated in waves 1, 2 and 3, they could win one of five iPads. In wave 1, 7,939 valid interviews were conducted. All wave 1 respondents were invited to take part in wave 2 (5,577 valid interviews) and wave 3 (5,125 valid interviews). At the end of wave 3, 3,030 respondents consented to participate in yearly follow-up surveys until the next national elections (in 2023). Respondents who gave consent and did not later ask to leave the sample constitute the active panel members. In total, 2,499 valid interviews were conducted in wave 4 (out of 2,955 active panel members).

In wave 4, members with low political interest in wave 1 (“not at all” or “rather not interested”, N=536) and members who had systematically responded only after the second reminder (N=56) were classified as low response propensity respondents. In wave 5, this group (N=592) continued to receive a conditional CHF 20 cash incentive. The remaining sample (N=2,286), who had received CHF 10 (cash) upon completion of the wave 4 questionnaire, was split at random: one half was again offered this incentive, while the other half was informed that they would take part in a lottery (5×300 CHF) upon completion of the questionnaire. To determine valid responses, speeders (N=2), respondents whose reported sex or birth year was inconsistent with the sampling frame (N=41), and drop-offs (N=20) were counted as nonrespondents, leaving N=2,323 respondents in wave 5.
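The targeting rule and the validity criteria described above (together with endnotes 2 and 3) can be written down as two small predicates. This is a minimal sketch, not the authors' processing code: the function, class and field names are hypothetical; late responding is assumed to be available as a ready-made flag because the text does not say how “systematically” was operationalized; and drop-offs are assumed to be the cases with 50% or less of the questionnaire completed.

```python
from dataclasses import dataclass

# Wave 1 political-interest answers that mark low response propensity
# (wording as quoted in the text).
LOW_INTEREST_ANSWERS = {"not at all", "rather not interested"}


def is_low_propensity(interest_w1: str, always_after_second_reminder: bool) -> bool:
    """Hypothetical targeting rule: low wave 1 political interest OR a
    history of responding only after the second reminder (assumed flag)."""
    return interest_w1 in LOW_INTEREST_ANSWERS or always_after_second_reminder


@dataclass
class Completion:
    """Hypothetical record of one submitted questionnaire."""
    share_completed: float          # share of the questionnaire answered, 0..1
    duration_min: float             # time taken to answer, in minutes
    sex_matches_frame: bool         # reported sex agrees with the sampling frame
    birth_year_matches_frame: bool  # reported birth year agrees with the frame


def is_valid_response(c: Completion, median_duration_min: float) -> bool:
    """Count a completion as a response only if it passes all three checks."""
    if c.share_completed <= 0.5:                  # drop-off (assumed, endnote 2)
        return False
    if c.duration_min < median_duration_min / 3:  # speeder (endnote 3)
        return False
    return c.sex_matches_frame and c.birth_year_matches_frame
```

Keeping the rule in a single predicate makes it easy to re-apply the same classification in later waves, or to replace it with a model-based propensity score, as suggested in the Discussion.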

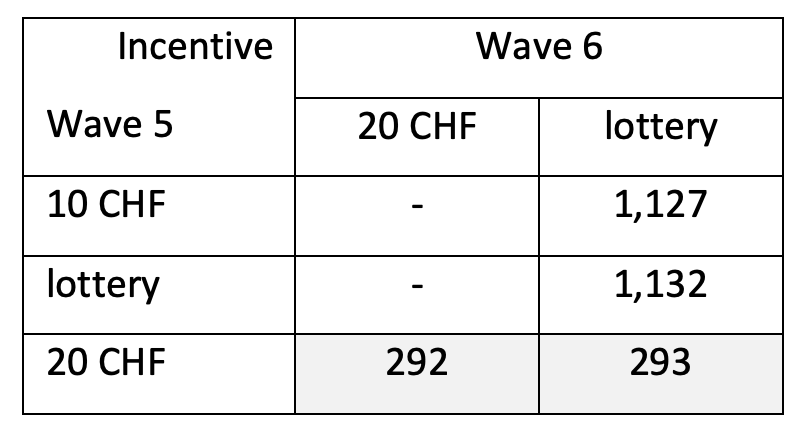

In wave 6, the still-active panel members were again invited to participate (N=2,844). The high-propensity respondents (N=2,259) took part in a lottery (5×300 CHF), while the low-propensity respondents (N=585) were randomized into two equally sized subsamples. The first subsample (N=292) was informed that they would receive CHF 20 (cash) upon completion of the questionnaire; the second subsample (N=293) was informed that they would take part in the lottery upon completion of the questionnaire. Table 1 shows the samples under the different incentive schemes of waves 5 and 6.

Table 1: Sample sizes in the different designs (wave 5 and wave 6). Shaded: low response probability group. Data: Selects 2019 Panel Survey.
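Splitting the 585 low-propensity members into two groups of 292 and 293 can be done by shuffling the member list and cutting it in half. The sketch below assumes simple, unstratified random assignment, which the text does not specify; the function name, the member IDs and the seed are hypothetical.

```python
import random


def split_in_two(member_ids, seed):
    """Randomly assign members to two groups of (almost) equal size.

    Returns (group_a, group_b); with an odd count, group_b gets the extra member.
    """
    ids = list(member_ids)
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# Example with 585 hypothetical low-propensity member IDs.
cash_group, lottery_group = split_in_two(range(585), seed=2022)
print(len(cash_group), len(lottery_group))  # 292 293
```

Fixing the seed makes the allocation reproducible, which helps when the assignment has to be documented or re-created for later waves.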

Before we investigate response rates and selection effects, we check whether the randomization of the low-propensity members into the two incentive groups (CHF 20 and lottery) was successful.

We use the following variables:

- political interest

- having voted in the 2019 national election

- education level

- whether the wave 3 questionnaire was interesting to answer

Previous research shows that these variables, which are good proxies for social inclusion and participation, are generally negatively correlated with attrition (e.g., Voorpostel 2010). We imputed 4 missing values for political interest, 17 for having voted in the 2019 national election, 2 for education level, and 17 for interest in the wave 3 questionnaire, using chained equations. As controls, we include the following variables:

- gender

- language region (Swiss-German, French, Italian)

- age group (18-30, 31-43, 44-56, 57-69, 70+)

A linear regression of the wave 6 incentive assignment on these independent variables is not statistically significant (Prob > chi2 = 0.635). This suggests that the randomization of the low response propensity members into the two wave 6 groups was successful, as was the randomization of the high response propensity members into the two wave 5 groups (Lipps et al. 2022).

Results

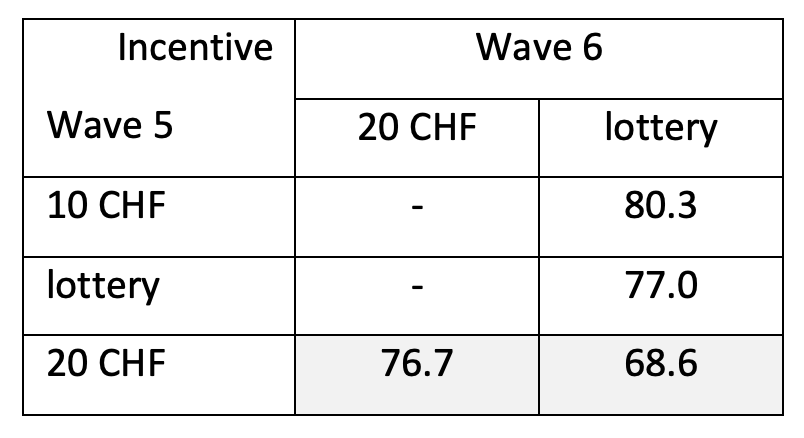

We report the AAPOR RR2 response rates in wave 6 in Table 2 for the samples from Table 1.

Table 2: Response rates in wave 6 in the different designs (wave 5 and wave 6). Shaded: low response probability group. Data: Selects 2019 Panel Survey.
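AAPOR's RR2 counts both complete and partial interviews as respondents and divides them by all eligible cases plus cases of unknown eligibility. How the study's cases map onto the AAPOR categories is not spelled out in the text; a plausible reading, assumed in this sketch, is that questionnaires more than 50% complete count as (complete or partial) interviews (endnote 2), that drop-offs, speeders and frame-inconsistent cases count as non-interviews, and that the unknown-eligibility terms are zero because all invited panel members are known to be eligible.

```python
def aapor_rr2(I, P, R, NC, O, UH=0, UO=0):
    """AAPOR Response Rate 2.

    I: complete interviews, P: partial interviews, R: refusals and break-offs,
    NC: non-contacts, O: other eligible non-interviews,
    UH, UO: cases of unknown eligibility (default 0, as assumed for a panel).
    """
    interviews = I + P
    return interviews / (interviews + (R + NC + O) + (UH + UO))
```

Because partial interviews enter the numerator, RR2 is always at least as high as the stricter RR1 computed from the same dispositions.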

First, we find that, unlike for the high-propensity group in wave 5, a higher incentive in wave 6 pays off for the low-propensity group: the response rate is about 8 percentage points higher with a conditional CHF 20 than with a 5×300 CHF lottery. Second, the wave 5 incentive seems to carry over to wave 6: high-propensity members who had received a conditional CHF 10 in wave 5 had a response rate more than 3 percentage points higher than those who had been in the wave 5 lottery group. The first difference is significant at the 3% level (Pr(|T| > |t|) = 0.028), the second only at the 6% level (Pr(|T| > |t|) = 0.058).
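The significance levels above come from t-tests (Pr(|T| > |t|)). For samples of this size, a pooled two-proportion z-test, used here as a stand-in rather than the authors' exact procedure, gives practically the same answer. The respondent counts below are our own approximations, reconstructed from the rounded response rates and sample sizes given in the Discussion (80.3% of 1,127 in high-propensity group 1 and 77.0% of 1,132 in group 2). The low-propensity comparison cannot be reproduced this way because the text does not report the response rate of the CHF 20 group.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-sample z-test for a difference between two proportions.

    Returns (difference p1 - p2, z statistic, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p1 - p2, z, p_value


# Approximate respondent counts rebuilt from the rounded rates (assumption).
x_group1 = round(0.803 * 1127)  # 905
x_group2 = round(0.770 * 1132)  # 872
diff, z, p = two_proportion_z_test(x_group1, 1127, x_group2, 1132)
print(f"difference = {diff:.3f}, z = {z:.2f}, p = {p:.3f}")
```

With these approximate counts, the test gives a two-sided p-value of about 0.058, in line with the value reported for the carry-over difference.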

Next, we analyze whether the incentive affects the composition of respondents (selection effects) in the two low-propensity groups and the two high-propensity groups in wave 6, analogous to our analysis of the two high-propensity groups in wave 5 (Lipps et al. 2022). Specifically, separately for the low- and the high-propensity groups, we test whether adding interactions of the incentive (the wave 5 incentive in the high-propensity groups) with the independent variables used to check the randomization above significantly improves a model that regresses wave 6 completion on the main effects only. Likelihood ratio tests show that these interactions are jointly significant neither in the high-propensity groups (Prob > chi2 = 0.349) nor in the low-propensity groups (Prob > chi2 = 0.482). This is in line with the absence of selection effects in the two high-propensity groups in wave 5 (Lipps et al. 2022).

Overall, this suggests that while response rates differ between the two experimental designs in wave 6, the respondent sample composition does not differ between these groups.
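The likelihood ratio tests reported above compare the log-likelihood of the main-effects model with that of the model adding incentive-by-covariate interactions: the statistic 2(ℓ_full − ℓ_restricted) is referred to a chi-square distribution with as many degrees of freedom as interaction terms. The text reports neither the log-likelihoods nor the degrees of freedom, so no numbers are plugged in here; the fitted models (for example logistic regressions of wave 6 completion, which is our assumption about the model type) would supply `loglik_restricted` and `loglik_full`. Statistical packages provide this test directly (Stata's `lrtest`, for instance); this standard-library sketch only makes the computation explicit, using the closed-form chi-square tail for integer degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df: P = exp(-y) * sum_{j=0}^{df/2 - 1} y**j / j!
        term = total = 1.0
        for j in range(1, df // 2):
            term *= y / j
            total += term
        return math.exp(-y) * total
    # Odd df: P = erfc(sqrt(y)) + exp(-y) * sum_{j=0}^{(df-3)/2} y**(j + 1/2) / Gamma(j + 3/2)
    total = math.erfc(math.sqrt(y))
    for j in range(df // 2):
        total += math.exp(-y) * y ** (j + 0.5) / math.gamma(j + 1.5)
    return total


def likelihood_ratio_test(loglik_restricted, loglik_full, df):
    """Compare nested models: restricted = main effects only,
    full = main effects plus df incentive-by-covariate interactions."""
    statistic = 2.0 * (loglik_full - loglik_restricted)
    return statistic, chi2_sf(statistic, df)
```

A p-value above conventional thresholds, as for both propensity groups here, means the interactions do not jointly improve the fit, i.e., there is no evidence that the incentive changes who responds.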

Discussion

In wave 6, response rates differed substantially between the two incentive groups in the low-propensity sample (20 CHF vs. lottery) and still measurably between the wave 5 incentive groups in the high-propensity sample (10 CHF vs. lottery), but we find no respondent selection effects in either comparison. Interestingly, the response rate difference between the two high-propensity groups is almost the same in wave 6 as in wave 5, when the incentive experiment was conducted (about 3 percentage points in both waves). It seems that respondents remember incentives quite well, so that response rate differences carry forward to later waves. These carry-over effects qualify the statement in our previous paper (Lipps et al. 2022) about the scope for cost savings in the high-propensity sample, because the effects accumulate and can considerably reduce sample sizes across multiple waves.

In the low-propensity sample, the incentive cost per respondent in wave 6 is CHF 20 in the cash group but only about CHF 0.76 in the lottery group. Because the lottery prize money is shared by all wave 6 lottery participants, the lottery cost per respondent is the CHF 1,500 prize total divided by the expected number of lottery respondents: 1,500 / (0.803 × 1,127 + 0.770 × 1,132 + 0.686 × 293) ≈ 0.76, where 0.803 and 0.770 are the response rates of high-propensity groups 1 and 2 (sample sizes 1,127 and 1,132), and 0.686 is the response rate of the low-propensity lottery group (sample size 293). The question is whether an 8 percentage point higher response rate is worth this additional money.
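The cost calculation above spreads the fixed lottery budget over the expected number of respondents in all three wave 6 lottery groups. A few lines of Python reproduce it from the rounded figures in the text; the variable names are illustrative, and the result inherits the rounding of the published response rates.

```python
# Lottery budget: 5 prizes of CHF 300, shared by all lottery participants in wave 6.
LOTTERY_BUDGET_CHF = 5 * 300

# (response rate, invited sample size) of the three wave 6 lottery groups,
# using the rounded figures given in the text.
lottery_groups = {
    "high-propensity group 1": (0.803, 1127),
    "high-propensity group 2": (0.770, 1132),
    "low-propensity lottery group": (0.686, 293),
}

expected_respondents = sum(rate * n for rate, n in lottery_groups.values())
lottery_cost_per_respondent = LOTTERY_BUDGET_CHF / expected_respondents
cash_cost_per_respondent = 20.0  # the conditional CHF 20 is paid to every respondent

print(f"{expected_respondents:.1f} expected lottery respondents")  # 1977.6
print(f"lottery: CHF {lottery_cost_per_respondent:.2f} per respondent")  # 0.76
print(f"cash:    CHF {cash_cost_per_respondent:.2f} per respondent")  # 20.00
```

Because the lottery cost is fixed, its cost per respondent falls as more members take part, whereas the conditional cash cost per respondent stays at CHF 20 regardless of the response rate.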

The limitation mentioned in our previous paper still holds: our definition of low- and high-propensity members, based on political interest and late participation in previous waves, can be contested, and more sophisticated models would likely distinguish better between low- and high-propensity members. Nevertheless, we consider the simple definition a reasonable pragmatic choice. In addition, neither experiment included a proper control group that received no incentive at all. Finally, the low-propensity sample is rather small, which limits statistical power. In sum, we need to qualify our previous statement that in a mature panel of adult citizens it is no longer appropriate to invest a lot of money in expensive (cash-like) incentives. This clearly does not hold for low-propensity members, and it holds less and less for high-propensity members if they continue to remember previous incentives and to participate as they did in the wave in which the incentive experiment was conducted.

Endnotes

[1] Becker et al. (2019) consider a panel in its seventh wave as “mature” as opposed to a panel in earlier waves, in which less is known about respondents. We adopted this terminology for our panel in its sixth wave.

[2] >50% of the questionnaire completed. Those with 50% or less completed were deemed invalid.

[3] Respondents who answered the questionnaire in less than a third of the median time.

References

- Becker, R., Möser, S. & Glauser, D. (2019). Cash vs. vouchers vs. gifts in web surveys of a mature panel study–Main effects in a long-term incentives experiment across three panel waves. Social Science Research, 81, 221-234.

- Brick, M. & Tourangeau, R. (2017). Responsive Survey Designs for Reducing Nonresponse Bias. Journal of Official Statistics, 33(3), 735-752.

- Deci, E., & Ryan, R. (2013). Intrinsic motivation and self-determination in human behavior. Springer Science & Business Media.

- Gouldner, A. (1960). The norm of reciprocity: A preliminary statement. American Sociological Review, 25(2), 161-178.

- Groves, R., Singer, E., & Corning, A. (2000). Leverage-salience theory of survey participation: Description and an illustration. Public Opinion Quarterly, 64(3), 299-308.

- Kay, W., Boggess, S., Selvavel, K., & McMahon, M. (2001). The use of targeted incentives to reluctant respondents on response rate and data quality. In Proceedings of the Survey Research Methods Section, American Statistical Association.

- Laurie, H. & Lynn, P. (2009). The Use of Respondent Incentives on Longitudinal Surveys. In Methodology of Longitudinal Surveys (ed. Lynn, P.), Chichester: Wiley.

- Link, M., & Burks, A. (2013). Leveraging auxiliary data, differential incentives, and survey mode to target hard-to-reach groups in an address-based sample design. Public Opinion Quarterly, 77(3), 696-713.

- Lipps, O., Jaquet, J., Lauener, L., Tresch, A. & Pekari, N. (2022). Cost efficiency of incentives in mature probability-based online panels. Survey Methods: Insights from the Field. DOI: 10.13094/SMIF-2022-00007.

- Martin, E., & Winters, F. (2001). Money and motive: effects of incentives on panel attrition in the survey of income and program participation. Journal of Official Statistics, 17(2), 267.

- Nicolaas, G., Corteen, E., & Davies, B. (2019). The use of incentives to recruit and retain hard-to-get populations in longitudinal studies. NatCen Social Research, London.

- Olson, K., Wagner, J. & Anderson, R. (2021). Survey costs: Where are we and what is the way forward? Journal of Survey Statistics and Methodology, 9(5), 921-942.

- Pekari, N. (2017). Effects of contact letters and incentives in offline recruitment to a short-term probability-based online panel survey. Presented at the European Survey Research Association (ESRA) Conference, Lisbon.

- Pforr, K. et al. (2015). Are incentive effects on response rates and nonresponse bias in large-scale, face-to-face surveys generalizable to Germany? Evidence from ten experiments. Public Opinion Quarterly, 79(3), 740-768.

- Rodgers, W. (2002). Size of incentive effects in a longitudinal study. Presented at the 2002 American Association for Public Opinion Research conference, mimeo, Survey Research Centre, University of Michigan, Ann Arbor.

- Sacchi, S., von Rotz, C., Müller, B., & Jann, B. (2018). Prepaid incentives and survey quality in youth surveys. Experimental evidence from the TREE panel. Presentation at the III. Midterm Conference of the ESA RN 21 Cracow, October 3–6, 2018.

- Selects (2023). Panel Survey (waves 1-6) – 2019-2022 [Dataset]. Distributed by FORS, Lausanne, 2023. https://doi.org/10.48573/4my6-rd07.

- Singer, E., & Ye, C. (2013). The use and effects of incentives in surveys. The ANNALS of the American Academy of Political and Social Science, 645(1), 112-141.

- Tourangeau, R., Brick, M., Lohr, S., & Li, J. (2017). Adaptive and responsive survey designs: A review and assessment. Journal of the Royal Statistical Society Series A: Statistics in Society, 180(1), 203-223.

- Voorpostel, M. (2010). Attrition patterns in the Swiss household panel by demographic characteristics and social involvement. Swiss Journal of Sociology, 36(2), 359-377.

- Wong, E. (2020). Incentives in longitudinal studies, CLS Working Paper 2020/1. London: UCL Centre for Longitudinal Studies.