Response Rates in the European Social Survey: Increasing, Decreasing, or a Matter of Fieldwork Efforts?

Beullens, K., Loosveldt G., Vandenplas C. & Stoop I. (2018). Response Rates in the European Social Survey: Increasing, Decreasing, or a Matter of Fieldwork Efforts? Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=9673

© the authors 2018. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Response rates are declining, increasing the risk of nonresponse error. The reasons for this decline are multiple: the rise of online surveys, mobile phones, and information requests, societal changes, greater awareness of privacy issues, etc. To combat this decline, fieldwork efforts have become increasingly intensive: widespread use of respondent incentives, advance letters, and an increased number of contact attempts. In addition, complex fieldwork strategies such as adaptive call scheduling or responsive designs have been implemented. The additional efforts to counterbalance nonresponse complicate the measurement of the increased difficulty of contacting potential respondents and convincing them to cooperate. To observe developments in response rates, we use the first seven rounds of the European Social Survey, a biennial face-to-face survey. Despite some changes to the fieldwork efforts in some countries (choice of survey agency, available sample frame, incentives, number of contact attempts), many characteristics have been stable: effective sample size, (contact and) survey mode, and questionnaire design. To control for the different country composition in different rounds, we use a multilevel model with countries as level 2 units and response rates in each country-year combination as level 1 units. The results show a declining trend, although only round 7 has a significant negative effect.

Keywords

European Social Survey, fieldwork effort, Response rate trend


1. Introduction

In recent years, survey nonresponse has received increasing attention because of the greater risk of error that goes hand-in-hand with increasing nonresponse rates. Survey researchers seem to agree that there is an international trend toward declining response rates or, equivalently, increasing nonresponse rates (Atrostic et al., 2001; de Leeuw and de Heer, 2002; Rogers et al., 2004; Curtin et al., 2005; Dixon and Tucker, 2010; Bethlehem et al., 2011; Brick and Williams, 2013; Kreuter, 2013). Williams and Brick (2017) demonstrate that in face-to-face surveys in the United States, response rates generally decreased in the period from 2000 to 2014, despite an increase in the level of effort. This increase in nonresponse rates is mainly due to the increasing difficulty of contacting sample units and convincing them to participate (Singer, 2006). Some societal developments such as new technologies (for example, the Internet, mobile phones, or tablets), changing family composition, or survey fatigue may have eroded the favorable climate in which surveys could successfully recruit respondents.

In face-to-face interviews, contactability issues may arise from an increase in barriers or impediments intended to keep unwanted visitors out. So-called gated communities (Tourangeau, 2004) contribute to this problem. Anecdotal evidence from fieldwork directors in Switzerland and France for the European Social Survey suggests that this growing problem is particularly prominent in larger cities. Further, at-home patterns affect the ease with which sampled cases can be contacted. This can be related to variables such as labor-force participation, life stage, socioeconomic status, health, or gender (Smith, 1983; Stoop, 2007). According to Goyder (1987), people who are single, have a paid job, live in an apartment or in a big city, or belong to higher socioeconomic status groups are harder to contact, while the elderly or larger families are easier to contact. Campanelli and colleagues (1997) report similar findings. Groves and Couper (1998) observe that families with young children and elderly people are more likely to be at home and are therefore easier to contact. Contactability may also simply be a function of the number of household members. The larger the household, the more likely it is that someone will be at home to answer the call or the door (Stoop, 2005). Therefore, Dixon and Tucker (2010) argue that as the average household size declines, additional fieldwork efforts may be required to successfully contact the target people. Similarly, from the observations of Tucker and Lepkowski (2008), one can conclude that the increase of labor-market participation among women may have had a detrimental effect on contactability.

The main source of nonresponse in face-to-face surveys is in fact not noncontact, but the inability to gain cooperation from the sample units once they have been contacted. Survey reluctance may be caused by an increasing number of survey requests and growing awareness of privacy or confidentiality issues (Singer and Presser, 2008). However, Williams and Brick (2017) note that although both nonresponse and refusal rates are increasing, the proportion of nonresponse due to refusal remained relatively stable in the surveys that they examined.

Another reason for nonresponse that may partly explain the rise in nonresponse rates is “inability”: some people are not mentally or physically able to participate, and others may not participate because of language barriers. In ageing societies and countries with increasing levels of immigration, this source of nonresponse may become more important.

Face-to-face surveys usually have the highest response rates compared to other modes (de Heer, 1999; Hox and de Leeuw, 2004; Bretschneider and Schumacher, 1996). These surveys of course rely heavily on the interviewers collecting the data. Dixon and Tucker (2010) and Schaeffer, Dykema and Maynard (2010) state that survey researchers experience increased difficulties in finding capable interviewers at a reasonable cost. Interviewer tasks include, among other things, contacting the households or individuals who have been selected, convincing them to participate, and conducting the interview in a standardized way. Interviewers are nowadays also responsible for the collection of paradata through so-called contact forms for each contact attempt, or through an interviewer questionnaire to assess each interview. These tasks may have become more complicated due to technological innovations and changing societal aspects, such as the diversity of household composition, including language barriers or cultural differences. This means that the demands on interviewers are increasing, and that these should be compensated for by rigorous interviewer selection, training, and remuneration.

This paper seeks to provide evidence as to whether the European Social Survey shows decreasing trends of response rates, contact rates, and cooperation rates. As this survey has now been conducted in 36 countries over 12 years, the potentially declining response rate trend should be apparent. Nonetheless, fieldwork strategies have been altered over the years in order to anticipate an unfavorable evolution. Therefore, we also analyze the trends in fieldwork efforts.

2. Data and Methods

From 2002 to 2014, the European Social Survey (ESS) collected seven rounds of data in 36 countries. Not all the countries participated in all rounds, with the result that only 181 country-round combinations are available. The survey has been repeated biennially and is relatively stable in terms of its implementation. The management is divided into a national level and a cross-national level (e.g., Koch et al. 2009). The ESS European Research Infrastructure Consortium (ESS ERIC) is responsible for the design and conduct of the survey. The ESS ERIC is governed by a General Assembly which appoints the Director, who is supported by the Core Scientific Team (CST)[i].

For each round, Survey Specifications are drafted that outline in detail, among other things, how the fieldwork has to be conducted, including sampling, data collection, and data processing.[ii] These tasks are the responsibility of the national management, i.e. the National Coordinator (NC) and their team. The Survey Specifications are rather stable from one round to another but draw on lessons learned in previous rounds.[iii] The CST is also responsible for the development of the source questionnaire (in British English), translation guidelines, and quality assessment. It also oversees the sampling designs of each participating country and produces design and nonresponse weights. The CST supports the NCs in the planning of the data collection through guidelines, training materials, or individual feedback and closely monitors the progress of fieldwork.

Stable survey characteristics across rounds are the face-to-face recruitment and survey mode (although in some cases telephone recruitment is allowed), the major part of the questionnaire, requirements in call scheduling (a minimum of four attempts, one in the evening, one at the weekend, and spread out over two weeks), some refusal conversion, and maximum assignment sizes for individual interviewers. The target response rate of 70% and the maximum noncontact rate of 3% have also been maintained, emphasizing the aim of a high response rate. In practice, a feasible national target response rate is set in discussions between the CST and the NCs, aiming at increasing response rates compared to the previous round.
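The call-scheduling requirements can be expressed as a simple check on the visit times recorded for a sampled case. The following is a minimal sketch, not the ESS implementation: the function name, the 18:00 evening threshold, and the reading of "spread out over two weeks" as "visits in at least two different calendar weeks" are all assumptions made for illustration.

```python
from datetime import datetime

EVENING_START_HOUR = 18  # assumption: "evening" means 18:00 or later


def meets_call_schedule(attempts, min_attempts=4):
    """Check a list of visit datetimes against the minimum contact rules."""
    if len(attempts) < min_attempts:
        return False
    has_evening = any(a.hour >= EVENING_START_HOUR for a in attempts)
    has_weekend = any(a.weekday() >= 5 for a in attempts)  # 5 = Sat, 6 = Sun
    # Assumption: "spread over two weeks" = at least two distinct ISO weeks.
    weeks = {tuple(a.isocalendar())[:2] for a in attempts}
    return has_evening and has_weekend and len(weeks) >= 2


# Hypothetical visit times for one sampled address.
visits = [
    datetime(2014, 9, 1, 10, 0),   # Monday morning
    datetime(2014, 9, 3, 19, 30),  # Wednesday evening
    datetime(2014, 9, 6, 14, 0),   # Saturday afternoon
    datetime(2014, 9, 9, 11, 0),   # Tuesday of the following week
]
compliant = meets_call_schedule(visits)
too_few = meets_call_schedule(visits[:3])
```

In this hypothetical case the four visits satisfy all conditions, whereas the first three alone do not reach the minimum number of attempts.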

The ESS response rates may therefore be considered reasonably comparable across rounds. Nevertheless, over the different rounds, some countries may have altered some elements in their fieldwork approach, such as respondent incentives, interviewer bonuses, refusal conversion procedures and efforts, interviewer training, or the use of advance letters. These changes may have had a direct effect on response rates.

A large number of factors that influence response rates can be cultural and societal, hence country dependent. Therefore, we can consider the response rates as clustered within countries, although we are interested in the effect of rounds (time) on the response rate in general. To examine the effect of time on response rates, we use a multilevel model with the response rates as dependent variables at level 1 (country-year combinations) nested in countries, which are the level 2 units. This model allows us to separate round effects and country effects, and to calculate the general trend in response rates controlling for differences in the participating countries in each round. The intercept is random and can vary from country to country, but we consider the effect of the rounds as a fixed effect, as we are only interested in the general trend of the response rate. Since the effects of the rounds are not necessarily linear, we treat the rounds as a categorical variable and estimate the effect of each subsequent round compared with the first. In order to model the effect of time (rounds) on response rates, the following multilevel model can be used:

$$Y_{ij} = \beta_0 + \beta_i R_i + u_j + \varepsilon_{ij} \qquad \text{(Model 1)}$$

where $Y_{ij}$ represents the response rate for ESS round $i$ and for country $j$, $\beta_0$ is the overall intercept of the model, $\beta_i$ represents the effect of the round $i$ (indicated by $R_i$) compared with round 1 ($i$ = 2 to 7), and $\varepsilon_{ij}$ is the residual error term. The random effect $u_j$ accommodates the country differences in the level of response rates. In particular, this is necessary because not all countries participated in all seven rounds. Indeed, the selectivity of countries per round (for example particularly lower response rate countries participated in round $i$ while higher response rate countries participated in round $i'$) would otherwise bias the time effect, measured by $\beta_i$. By introducing the random effect $u_j$, the effect of $R_i$ ($\beta_i$) is calculated as the weighted mean of the time effect in each country. The time effect as estimated by $\beta_i$ can consequently be interpreted as an overall effect of time, regardless of the specific countries that participated in the various rounds. Second, ignoring this term means that clustering at the country level is not taken into account, which would otherwise possibly lead to invalid standard errors.

Out of the 181 country-round combinations, nine combinations had missing or incomplete contact form information (Austria Round 4, Estonia Round 3, France Round 1, Iceland Round 2 and Round 6, Latvia Round 3, Romania Round 3, Sweden Round 1, and Turkey Round 2). To link response rates (noncontact and refusal rates) to fieldwork efforts, the paradata from the contact form files are necessary. Hence, Model 1 is estimated for 35 countries and 172 country-round combinations.
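The composition problem described above can be illustrated with a toy calculation. The sketch below does not fit the multilevel model; it swaps in a much simpler within-country comparison, on hypothetical figures, purely to show how a change in the set of participating countries can mimic a time effect when country levels are ignored. All names and numbers are invented for illustration.

```python
from statistics import mean

# Hypothetical response rates (%) keyed by (country, round); not ESS data.
rates = {
    ("A", 1): 70.0,
    ("A", 2): 70.0,  # country A takes part in both rounds, unchanged
    ("B", 2): 50.0,  # country B, with a lower level, joins in round 2 only
}


def round_mean(rates, rnd):
    """Raw mean over all countries that took part in the given round."""
    return mean(v for (_, r), v in rates.items() if r == rnd)


def naive_round_effect(rates, rnd, ref=1):
    """Difference between raw round means, ignoring country composition."""
    return round_mean(rates, rnd) - round_mean(rates, ref)


def within_country_effect(rates, rnd, ref=1):
    """Mean change within countries observed in both rounds."""
    diffs = [
        rates[(c, rnd)] - rates[(c, ref)]
        for (c, r) in rates
        if r == ref and (c, rnd) in rates
    ]
    return mean(diffs)


naive = naive_round_effect(rates, 2)
within = within_country_effect(rates, 2)
```

Here the naive comparison suggests a drop of 10 percentage points, although no country changed its response rate; the within-country comparison correctly returns zero. The random country intercept in Model 1 addresses the same issue in a more general way.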

The response rate used here closely follows Response Rate 1 (RR1) as defined in the AAPOR 2016 standard guidelines for calculating response rates (AAPOR 2016, p. 61). It expresses the number of valid interviews (records in the data file) relative to the total number of eligible cases (The ESS Data Archive 2014, p. 24):

$$\text{Response rate} = \frac{\text{number of valid interviews}}{\text{total number of eligible cases}}$$

Ineligible cases include:

- “address is not residential (institution, business/industrial purpose)/respondent resides in an institution,”

- “address is not occupied (not occupied, demolished, not yet built)/address occupied but no resident household (weekend or second home),”

- “other ineligible address,”

- “respondent emigrated/left the country for more than 6 months,” and

- “respondent is deceased.”

In some country-round combinations, a substantial number of cases were sampled but contact was never attempted (Round 1: Czech Republic (319) and Slovenia (47); Round 2: Belgium (24) and Czech Republic (1196); Round 4: Greece (165), Israel (283), and Latvia (407); Round 5: Hungary (603); Round 6: Lithuania (1431)). The reasons for these unapproached cases vary: the fieldwork budget ran out, no interviewers were available anymore, or other fieldwork issues arose. However, these unapproached cases are not included in the denominator when calculating the response rate. The response rates calculated and used in the analyses of this paper are therefore a measure of the success rate during the fieldwork. As a result, these rates are higher than the official response rates, for which the unapproached cases are included in the denominator. This could result in slightly different round effects than if the published ESS response rates had been used. However, we believe that these ‘success’ rates are more informative.
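The difference between the 'success' rate used here and a rate that keeps unapproached cases in the denominator can be made concrete with a small calculation. This is a sketch under stated assumptions: the function and argument names are invented, and the counts are hypothetical rather than taken from any ESS country.

```python
def response_rate(interviews, sampled, ineligible, unapproached=0,
                  exclude_unapproached=True):
    """Valid interviews relative to eligible cases, as a percentage."""
    eligible = sampled - ineligible
    if exclude_unapproached:
        # 'Success' rate: cases never approached are dropped from the base.
        eligible -= unapproached
    return 100.0 * interviews / eligible


# Hypothetical counts for one country-round combination (not ESS data).
counts = dict(interviews=1500, sampled=3000, ineligible=200, unapproached=300)

success_rate = response_rate(**counts)
official_style = response_rate(**counts, exclude_unapproached=False)
```

With these invented counts the success rate is 60%, while the rate that retains the 300 unapproached cases in the denominator is about 53.6%, which shows why the rates analyzed in this paper are higher than the published ones for the affected country-round combinations.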

As nonresponse is mainly due to noncontacts and refusals, we also consider the refusal rates (refusals relative to all refusals and interviews) and the noncontact rates (all noncontacted cases relative to all eligible cases) in addition to final response rates. Model 1, which was previously presented for estimating the progress in the response rates over the rounds, can also be applied to estimate the time effect on noncontact and refusal rates.
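The two additional outcome rates use different denominators, which is easy to overlook. A minimal sketch of both definitions as given above, with hypothetical counts and invented function names:

```python
def refusal_rate(refusals, interviews):
    """Refusals relative to all refusals and interviews (percentage)."""
    return 100.0 * refusals / (refusals + interviews)


def noncontact_rate(noncontacts, eligible):
    """Noncontacted cases relative to all eligible cases (percentage)."""
    return 100.0 * noncontacts / eligible


# Hypothetical counts for one country-round combination (not ESS data).
refusal_pct = refusal_rate(refusals=500, interviews=1500)
noncontact_pct = noncontact_rate(noncontacts=125, eligible=2500)
```

In this example the refusal rate is 25% of contacted-and-resolved cases (refusals plus interviews), while the noncontact rate is 5% of all eligible cases, so the two percentages are not shares of the same total and do not add up with the response rate to 100%.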

Lastly, we consider the changes in fieldwork efforts: the average number of contact attempts per case (natural logarithm), the percentage of initial refusals subsequently re-approached, whether an incentive was offered to respondents or nonrespondents, the percentage of experienced interviewers, whether interviewers received refusal conversion training, whether there was an interviewer bonus, and whether there was an advance letter. The same model as for response rates, refusal rates, and noncontact rates can be applied to these fieldwork variables to prevent the country composition in a specific round from biasing the result. In cases where the fieldwork variable is not continuous (for example, the binary variable indicating whether or not an advance letter was used in the fieldwork), the multilevel model is transformed into its logit-link counterpart, for which there is no error term.
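For a binary fieldwork variable, the logit-link counterpart can be written out as follows. The notation is an assumption made for illustration: $Y_{ij}$ equals 1 if the fieldwork feature (for example, an advance letter) was used in round $i$ in country $j$, $\beta_0$ is the intercept, $\beta_i$ is the effect of round $i$ (indicated by $R_i$) relative to round 1, and $u_j$ is the random country effect.

$$\operatorname{logit} P(Y_{ij} = 1) = \ln \frac{P(Y_{ij} = 1)}{1 - P(Y_{ij} = 1)} = \beta_0 + \beta_i R_i + u_j$$

Compared with the linear model, there is no separate level 1 residual: the variability of the binary outcome is determined by the modeled probability itself.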

3. Trends in Response Rates, Noncontact Rates and Refusal Rates

The general trend

In the first step, we study the development of the response rates in the European Social Survey over the first seven rounds, as well as the noncontact and refusal rates. Table 1 shows the overall intercept ($\beta_0$), the effects of the rounds ($\beta_i$), the variance at the country level, and the residual variance.

Table 1. Round effects on response rates, noncontact rates, and refusal rates among 35 countries, European Social Survey round 1 to 7

| | Response (%) | Noncontact (%) | Refusal (%) |
|---|---|---|---|
| Intercept ($\beta_0$) | 61.86*** | 6.06*** | 27.58*** |
| Round effect R2 | 2.44 | -0.90 | -3.11 |
| Round effect R3 | 0.47 | -0.00 | -1.07 |
| Round effect R4 | -0.37 | -0.77 | 0.06 |
| Round effect R5 | -2.13 | -0.03 | 1.58 |
| Round effect R6 | -0.65 | -1.21 | -0.45 |
| Round effect R7 | -4.48* | -1.33 | 1.69 |
| Country-level variance | 67.77*** | 7.63*** | 78.47*** |
| Residual variance | 45.39*** | 1.08*** | 39.66*** |

Notes: The reference group in the categorical analysis is R1. *p<.05; **p<.01; ***p<.001

As expected, the effects of the rounds on the response rates, noncontact rates, and refusal rates are not linear. Round 2 and round 3 both have a positive effect on the response rates (although not significant), which is reflected by a negative effect on both noncontact rates and refusal rates. This is most probably due to an improvement of contact and refusal conversion procedures based on experience in the first round. The four following rounds (rounds 4 through 7) have a negative effect on the response rates, particularly round 7, which is the only round with a significant effect. In parallel, these rounds have a negative effect on the noncontact rate but a positive effect on the refusal rate in rounds 4, 5, and 7. These results are a first indication that although the noncontact rate is reduced overall (probably through fieldwork efforts), obtaining cooperation once contact is established becomes increasingly difficult. Moreover, looking at the size of the effect on response rates, a progressive decline can be observed: from a positive effect in round 2 to a smaller positive effect in round 3, to increasingly larger negative effects from round 4 to 7 (with the exception of round 6). A similar pattern (in the opposite direction) can be observed for the refusal rates. The difference in effects on the response rates from one round to another (again with the exception of round 6) is around 1 to 1.5 percentage points, supporting the results from de Leeuw and de Heer (2002) and some of the results from Williams and Brick (2017).

Finally, the variance between countries for the response rates, the noncontact rates, and the refusal rates is greater than the variance between rounds within a country.
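One way to quantify this comparison is the intraclass correlation, the share of the total variance that lies between countries. The sketch below is an illustration rather than part of the original analysis; it assumes that the first variance row reported in Table 1 is the country-level variance and the second is the residual variance, following the order in which the text lists them.

```python
# Variance components as reported in Table 1: (country-level, residual).
variances = {
    "response": (67.77, 45.39),
    "noncontact": (7.63, 1.08),
    "refusal": (78.47, 39.66),
}


def intraclass_correlation(between, within):
    """Share of the total variance located between countries."""
    return between / (between + within)


icc = {
    outcome: intraclass_correlation(between, within)
    for outcome, (between, within) in variances.items()
}

for outcome, value in icc.items():
    print(f"{outcome}: {value:.2f}")
```

Under that assumption the shares are roughly 0.60 for response rates, 0.88 for noncontact rates, and 0.66 for refusal rates, so in all three cases more than half of the variation is attributable to differences between countries.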

All the analyses were repeated excluding the countries that participated only once, and excluding those that participated only once or twice. The results were very similar and led to the same conclusions.

Country-specific profile

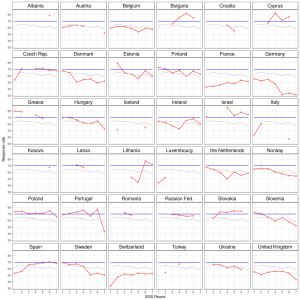

While the general trend is a decrease in response rates, this tendency is not uniform among the participating countries, as can also be seen in Figure 1, where the red lines represent the response rate in each round for each particular country. The blue and gray lines are identical for every country. The blue line represents the general response rate target of 70%. As can be seen, many countries have never or have rarely achieved this objective. In fact, the actual response rate only exceeds the objective in 43 out of 181 country-round combinations. The gray line is the trend line over all countries, based on Model 1, the results of which are presented in Table 1. For each round, the round-specific parameter is added to the intercept (for example 61.86 + 2.44 = 64.30 for round 2), thereby obtaining an average response rate over all countries. The general decreasing trend in response rates found in Table 1, from round 2 to 7 with the exception of round 6, can be visually confirmed when observing the gray line.

Figure 1. Development of the response rates over the rounds in each country participating in the European Social Survey (red line), 70% target response rate (blue line), and general trend (grey line)
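The points of the general trend line can be recomputed from the response-rate column of Table 1 by adding each round effect to the intercept, with round 1 as the reference (effect 0). A short sketch of that arithmetic; the variable names are illustrative only:

```python
# Response-rate estimates from Table 1 (percentage points).
intercept = 61.86
round_effects = {1: 0.0, 2: 2.44, 3: 0.47, 4: -0.37, 5: -2.13, 6: -0.65, 7: -4.48}

# Model-based average response rate per round: intercept + round effect.
trend = {rnd: round(intercept + effect, 2) for rnd, effect in round_effects.items()}

for rnd, value in trend.items():
    print(f"Round {rnd}: {value:.2f}%")
```

This yields 61.86, 64.30, 62.33, 61.49, 59.73, 61.21, and 57.38 for rounds 1 to 7, reproducing the pattern of a peak in round 2 followed by a decline that is interrupted only in round 6.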

Turning to the country-specific red lines, a decline in the response rates can be observed in Denmark, Estonia, Finland, Germany, Greece, Hungary, Norway, Slovenia, Sweden, and Ukraine, although the decline is never consistent. Conversely, a remarkable increase in response rates can be observed in some countries: France, Spain, and Switzerland. In other countries, the response rates appear to be relatively stable (Austria, Belgium, Czech Republic, Poland, the Russian Federation, Slovakia, and the United Kingdom) or sometimes quite erratic (Bulgaria, Cyprus, Ireland, Israel, Lithuania, The Netherlands, and Portugal).

These country differences in the evolution of the response rates might be due to differences in the fieldwork efforts and strategies, and in the way these evolved over the rounds. Some countries may have altered fieldwork efforts (introduction of incentives, advance letters, etc.) over the years in order to attain better response rates or in order to deal with anticipated response deterioration. In other countries, for some rounds, unfortunate circumstances may have occurred (running out of money, no interviewers available), leading to a premature end of the fieldwork.

4. Trends in Fieldwork Efforts

Fieldwork efforts are difficult to define and to quantify in a cross-country context: the cost of one contact attempt in one country may be very different from that in another, or the amount of an incentive may have a different impact in different countries, depending on the cost of living. However, we are interested in the overall changes in fieldwork strategies and not in the differences between countries. We concentrate on a number of fieldwork strategies that are well documented for all rounds of the ESS. The strategies examined can be split into efforts directed at reducing noncontact rates (number of contact attempts per case), at reducing refusal rates (respondent incentives, refusal conversion attempts, advance letters or brochures, or refusal conversion training), or both (bonuses for the interviewers). Moreover, the characteristics of the interviewer group conducting the surveys, especially their level of experience, might also have an influence on the response rate. To accommodate country differences and differences in participating countries in different rounds, the fieldwork effort evolutions are modeled using Model 1.

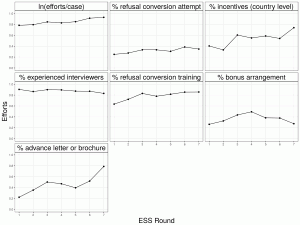

Figure 2 illustrates the evolution of the fieldwork effort in terms of the number of contact attempts (effort) per case (ln), refusal conversion (expressed as the average re-approach probability after a first refusal), the percentage of countries providing an incentive (any type of incentive: monetary as well as non-monetary, conditional as well as unconditional), the percentage of experienced interviewers (any previous experience of survey interviewing), the percentage of countries with a bonus arrangement for their interviewers, and the percentage of countries that use advance letters or brochures. The points are derived from Model 1 in the same way as the overall response rates (gray line), by adding the round-specific effect to the overall intercept.

Figure 2. The changes in fieldwork efforts from round 1 to round 7.
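For the binary fieldwork indicators, a sum of intercept and round effect on the logit scale has to be converted back before it can be read as a percentage. The paper does not spell out this step, so the sketch below is an assumption about how such points could be obtained; the coefficients are hypothetical and the function names are invented.

```python
import math


def inv_logit(x):
    """Inverse of the logit link: maps a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def predicted_percentage(intercept, round_effect=0.0):
    """Percentage implied by intercept + round effect on the logit scale."""
    return 100.0 * inv_logit(intercept + round_effect)


# Hypothetical logit-scale estimates (not taken from the paper).
reference_round = predicted_percentage(intercept=0.0)
later_round = predicted_percentage(intercept=0.0, round_effect=1.0)
```

With these invented values the reference round corresponds to 50% and the later round to about 73%. In a random-intercept model this back-transformation describes a country with a random effect of zero, which is not necessarily identical to the average percentage over all countries.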

In general, the fieldwork efforts increased. In particular, the number of contact attempts (ln(effort/case)) in rounds 6 and 7, the percentage of refusal conversion in round 6, the percentage of countries offering an incentive in round 7, the percentage of countries offering refusal conversion training in rounds 6 and 7, and the use of an advance letter or brochure in rounds 3, 4, 6, and 7 are significantly higher than in round 1. However, the first two indicators, ln(effort/case) and % refusal conversion attempts, are measured directly from the contact forms. There is a risk that these forms have been filled out more meticulously over the rounds, meaning that the first rounds of the ESS have more underreporting. Therefore, these indicators should be interpreted with care. One exception to the increasing fieldwork trend is the percentage of experienced interviewers (not significant), which is in line with the findings of Dixon and Tucker (2010) and Schaeffer et al. (2010) that “good” interviewers are harder to find. The other exception is the percentage of countries that have a bonus arrangement for interviewers, which appears to decrease (also not significant). These results suggest that increasing effort is being directed at respondents, but that less is invested in interviewers. Importantly, the response rate trend is negative despite a general increase in fieldwork efforts.

5. Discussion

Similarly to the results of many recent papers (Atrostic et al., 2001; de Leeuw and de Heer, 2002; Rogers et al., 2004; Curtin et al., 2005; Dixon and Tucker, 2010; Bethlehem et al., 2011; Brick and Williams, 2013; Kreuter, 2013; Williams and Brick, 2017), we find a generally decreasing trend in response rates over the rounds in the European Social Survey, especially from round 2 to 7 with the exception of round 6. The topic of the rotating modules (personal and social well-being and democracy) and the shorter interview duration in round 6 compared to other rounds could have caused the deviation from the declining response rate in this round. The higher percentage of refusal conversion observed in that round is another possible explanation for a higher response rate in round 6.

In line with the observation of Williams and Brick (2017), a small decline between rounds seems to have a significant cumulative effect. In the case of the European Social Survey, round 7 is the first round for which this decline is significant compared with round 1. This decline in response rates seems to be due more to increasing refusal rates (although these effects are not significant) than to noncontact, which is more or less stable.

Several societal changes (e.g., smaller households, another work/life balance, privacy concerns), technological innovations (e.g., mobile phones and surveys), decreasing trust in surveys or increasing survey burden may affect individual response propensities, explaining the gradual decline in response rates.

At the same time, there seem to be indications that many participating countries have increased their fieldwork efforts in order to prevent individual response propensities from decreasing and, in turn, the response rates from falling. These efforts seem to have had an effect on noncontact rates, but have been less effective in reducing refusal rates.

It should also be noted that greater fieldwork efforts are sometimes the result of low response rates. Stoop, Billiet, Koch and Fitzgerald (2010) found that the response rates of the ESS round 3 are related to the length of the fieldwork period and a “fieldwork efforts index,” consisting of interviewer experience, interviewer payment, interviewer briefings, the use of advance letters or brochures, and respondent incentives. They found that more fieldwork efforts were deployed in countries with low response rates. This finding is a paradox: the lower the response rate, the more efforts the survey agency has to deploy. Stoop (2009) found similar results for Dutch social surveys in particular, as response rates could at least temporarily be maintained by increased fieldwork efforts, and the researcher states that “extended field efforts may have held off the decrease in response rates, but they may not be able to counteract the continuing downward trend” (p. 3).

This paper has some limitations. Response rates are the result of at least two interacting factors: the overall survey climate of a country (the average propensity of its inhabitants to participate in a survey) and the effort the survey agency is willing (and paid) to invest in the fieldwork in order to attain a certain response rate. The latter factor can be indicated by the various fieldwork input factors discussed in this paper, such as refusal conversion programs, incentives, or advance letters. Other factors such as survey cost or cost per case can also be considered. For the European Social Survey, this information is unfortunately difficult to obtain and to analyze in a comparative way across countries. We are therefore unable to provide the relevant analysis. Moreover, we did not consider the effect of the potentially increasing “inability” rate (people unable to participate in the survey because of physical/mental incapacity or language problems), nor the potential implications of a possible decline in interviewers’ capacity. Lastly, we chose to use only the sample units that were used/activated during the fieldwork in the few countries for which some cases were not approached. This implies that the reported response rates are slightly higher than the published ESS response rates. The impact of this choice on the observed trend seems negligible.

Notwithstanding these limitations, our findings are consistent with recent literature and point to a decline in response rates despite an increase in fieldwork effort. This decline is worrying, as it poses a threat to data quality through nonresponse bias, although high response rates are not always related to low nonresponse bias (Groves and Peytcheva, 2008). Koch et al. (2014) demonstrated that countries with a non-individual sampling frame (household or area sampling frames) usually obtain higher response rates than countries with an individual sampling frame. Countries with a non-individual sampling frame also obtain their interviews faster in the fieldwork period (Vandenplas et al., 2017). This runs against expectations, as non-individual sampling frames require a supplementary sampling step that should lower response rates and do not allow for a tailored approach to the respondents (an advance brochure addressed by name, for instance). Moreover, using external and internal criteria to assess the quality of the responding samples in different ESS countries, Koch et al. (2014) found a positive correlation between bias and response rates. This points to the need to focus on nonresponse bias as well as on high response rates. Finally, some of the observed decline in response rates could be the consequence of initiatives taken in order to increase data quality.

The general decline in survey response rates and the deteriorating survey climate may encourage some researchers to question the usefulness of general (cross-national) surveys, especially face-to-face surveys. The growing number of online panels (based on a probability sample or not) may seem like an attractive alternative source of data. Up to now, however, response rates for online surveys remain lower than those for face-to-face surveys, and online surveys may be better suited to specific target populations. Moreover, probability-based surveys still display less bias than non-probability surveys (Yeager et al., 2011; Langer, 2018).

[i] http://www.europeansocialsurvey.org/about/structure_and_governance.html

[ii] http://www.europeansocialsurvey.org/methodology/ess_methodology/survey_specifications.html

[iii] http://www.europeansocialsurvey.org/docs/round7/methods/ESS7_quality_matrix.pdf

References

- The American Association for Public Opinion Research. (2016). Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys, 9th edition. AAPOR. Retrieved from http://www.aapor.org/AAPOR_Main/media/publications/Standard-Definitions20169theditionfinal.pdf.

- Atrostic, B. K., Bates, N., Burt, G., & Silberstein, A. (2001). Nonresponse in U.S. Government Household Surveys: Consistent Measures, Recent Trends, and New Insights. Journal of Official Statistics, 17(2), 209–226.

- Bethlehem, J. G., Cobben, F., & Schouten, B. (2011). Handbook of Nonresponse in Household Surveys. Hoboken, N.J.: John Wiley.

- Bretschneider, M. (1996). DEMOS-Eine Datenbank zum Nachweis Kommunaler Umfragen auf dem Weg zum Analyseinstrument. ZA-Information/Zentralarchiv für Empirische Sozialforschung, (38), 59–75.

- Brick, J. M., & Williams, D. (2013). Explaining Rising Nonresponse Rates in Cross-Sectional Surveys. The ANNALS of the American Academy of Political and Social Science, 645(1), 36–59. http://doi.org/10.1177/0002716212456834

- Campanelli, P., Sturgis, P., & Purdon, S. (1997). Can you hear me knocking? An investigation into the impact of interviewers on survey response rates. London: GB National Centre for Social Research.

- Curtin, R., Presser, S., & Singer, E. (2005). Changes in telephone survey nonresponse over the past quarter century. Public Opinion Quarterly, 69(1), 87–98. http://doi.org/10.1093/poq/nfi002

- De Heer, W. (1999). International response trends: results of an international survey. Journal of Official Statistics, 15(2), 129.

- De Leeuw, E. D., & De Heer, W. (2002). Trends in Households Survey Nonresponse: A Longitudinal and International Comparison. In R. M. Groves, D. A. Dillman, E. Eltinge, & R. J. A. Little (Eds.), Survey Nonresponse (pp. 41–54). New York: Wiley.

- Dixon, J., & Tucker, C. (2010). Survey Nonresponse. In P. Marsden & J. D. Wright (Eds.), Handbook of Survey Research. Bingley: Emerald Group Publishing Limited.

- The ESS Data Archive. (2014). ESS7 - 2014 Documentation Report. London: European Social Survey. Retrieved from http://www.europeansocialsurvey.org/docs/round7/survey/ESS7_data_documentation_report_e03_1.pdf

- Goyder, J. (1987). The silent minority: Nonrespondents on sample surveys. Boulder: Westview Press.

- Groves, R. M. (2004). Survey errors and survey costs (Vol. 536). New Jersey: John Wiley & Sons.

- Groves, R. M., & Peytcheva, E. (2008). The Impact of Nonresponse Rates on Nonresponse Bias. A Meta-Analysis. Public Opinion Quarterly, 72(2), 167–189. http://doi.org/10.1093/poq/nfn011

- Hox, J. J., & De Leeuw, E. D. (1994). A comparison of nonresponse in mail, telephone, and face-to-face surveys. Quality and Quantity, 28(4), 329–344.

- Koch, A., Blom, A. G., Stoop, I., & Kappelhof, J. (2009). Data Collection Quality Assurance in Cross-National Surveys: The Example of the ESS. Methoden Daten Analysen. Zeitschrift für Empirische Sozialforschung, Jahrgang 3, Heft 2, 219–247.

- Koch, A., Halbherr, V., Stoop, I. A. L., & Kappelhof, J. W. S. (2014). Assessing ESS Sample Quality by using External and Internal Criteria. Mannheim, Germany: European Social Survey, GESIS. Retrieved from https://www.europeansocialsurvey.org/docs/round5/methods/ESS5_sample_composition_assessment.pdf

- Kreuter, F. (2013). Facing the Nonresponse Challenge. The Annals of the American Academy of Political and Social Science, 645(1), 23–35. http://doi.org/10.1177/0002716212456815

- Langer, G. (2018). Probability versus non-probability methods. In D. L. Vannette & J. A. Krosnick (Eds.), The Palgrave Handbook of Survey Research (pp. 393–403). Cham: Springer.

- Rogers, A., Murtaugh, M. A., Edwards, S., & Slattery, M. L. (2004). Contacting controls: Are we working harder for similar response rates, and does it make a difference? American Journal of Epidemiology, 160(1), 85–90. http://doi.org/10.1093/aje/kwh176

- Schaeffer, N. C., Dykema, J., & Maynard, D. W. (2010). Interviewers and interviewing. In P. Marsden & J. D. Wright (Eds.), Handbook of Survey Research (pp. 437–470). Bingley: Emerald Group Publishing Limited.

- Singer, E. (2006). Nonresponse Bias in Household Surveys. Public Opinion Quarterly, 70(5), 637–645.

- Singer, E., & Presser, S. (2008). Privacy, confidentiality, and respondent burden as factors in telephone survey nonresponse. In J. M. Lepkowski, N. C. Tucker, J. M. Brick, E. de Leeuw, L. Japec, P. J. Lavrakas, & R. L. Sangster (Eds.), Advances in telephone survey methodology (pp. 447–470). New Jersey: John Wiley & Sons.

- Smith, T. W. (1995). Trends in non-response rates. International Journal of Public Opinion Research, 7(2), 157–171.

- Smith, T. W. (1983). The Hidden 25 Percent: An Analysis of Nonresponse on the 1980 General Social Survey. Public Opinion Quarterly, 47(3), 386–404. Retrieved from http://dx.doi.org/10.1086/268797

- Stoop, I. (2013). A few questions about nonresponse in the Netherlands. Survey Practice, 2(4).

- Stoop, I. (2007). No time, too busy. Time strain and survey cooperation. In G. Loosveldt, M. Swyngedouw, & B. Cambré (Eds.), Measuring Meaningful Data in Social Research (pp. 301–314). Leuven: Acco.

- Stoop, I. A. L. (2005). The hunt for the last respondent: Nonresponse in sample surveys. The Hague: Sociaal en Cultureel Planbureau.

- Stoop, I., Billiet, J., Koch, A., & Fitzgerald, R. (2010). Improving Survey Response. Lessons Learned from the European Social Survey. Chichester: John Wiley & Sons, Ltd.

- Tourangeau, R. (2004). Survey research and societal change. Annual Review of Psychology, 55, 775–801.

- Tucker, C., & Lepkowski, J. M. (2008). Telephone survey methods: Adapting to change. Advances in Telephone Survey Methodology, 1–26.

- Vandenplas, C., & Loosveldt, G. (2017). Modeling the weekly data collection efficiency for face-to-face surveys: six rounds of the European Social Survey. Journal of Survey Statistics and Methodology, 5(2), 212–232. https://doi.org/10.1093/jssam/smw034

- Williams, D., & Brick, J. M. (2017). Trends in U.S. Face-to-Face Household Survey Nonresponse and Level of Effort. Journal of Survey Statistics and Methodology, (September), 1–26. http://doi.org/10.1093/jssam/smx019

- Yeager, D. S., Krosnick, J. A., Chang, L., Javitz, H. S., Levendusky, M. S., Simpser, A., & Wang, R. (2011). Comparing the accuracy of RDD telephone surveys and Internet surveys conducted with probability and non-probability samples. Public Opinion Quarterly, 75(4), 709–747.