Letting the cat out of the bag: The impact of respondent multitasking on disclosure of socially undesirable information and answers to knowledge questions

Park, K. H., Aizpurua, E., Heiden, E. O. & Losch, M. E. (2020). Letting the cat out of the bag: The impact of respondent multitasking on disclosure of socially undesirable information and answers to knowledge questions. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=14420

© the authors 2020. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

Previous research shows that a high proportion of respondents engage in other activities while answering surveys. In this study, we examine the effect of multitasking in reporting sensitive information and socially undesirable behavior (e.g., substance use, mental health, gambling) along with reporting of knowledge/awareness of publicly funded programs. The dataset comes from a dual-frame random digit dial telephone survey of adults in a Midwestern state (N = 1,761) who were asked about their attitudes and behaviors toward gambling and health-related behaviors. The results of the study reveal that nearly half of the respondents engaged in multitasking activities (46.9%). In addition, it was found that multitaskers disclosed more socially undesirable information and reported lower levels of knowledge than non-multitaskers. The implications of these findings and how they fit in with previous work are discussed.

Keywords

data quality, multitasking, social desirability, telephone surveys

Acknowledgement

The 2018 Iowa Gambling Attitudes and Behaviors study was funded by the Office of Gambling Treatment and Prevention of the Iowa Department of Public Health. We thank them for allowing CSBR to conduct additional methodological research using this project. The opinions, findings, and conclusions expressed in this presentation are those of the authors and not necessarily those of the Office of Gambling Treatment and Prevention of the Iowa Department of Public Health, or the University of Northern Iowa.

The replication data can be obtained through the funding agency in the state, and the authors can share the syntax upon request.


Introduction

The increased use of cell-phones to participate in telephone and online surveys has broadened the opportunities for multitasking. The mobility offered by these devices allows respondents to engage in survey research both within and outside the home, while performing other activities (e.g., shopping, exercising). These activities can be simultaneous (e.g., answering a questionnaire while listening to music) or sequential (e.g., discontinuing the survey to answer a phone call) (Zwarun and Hall 2014). Previous research has measured multitasking using two strategies: (1) asking respondents about the activities that they have engaged in while answering questionnaires and (2) using paradata to detect window and browser tab switching behaviors (Höhne et al. 2020). While the former is prone to recall errors and social desirability bias, the latter is limited to online surveys and only identifies on-device multitasking, leaving out common forms of respondent multitasking such as speaking with others or watching TV (Ansolabehere and Schaffner 2015). Unlike self-reports, which provide information at the survey level (i.e., whether the respondent has multitasked while responding to the questionnaire), paradata offers information at the question, page, and survey level (Diedenhofen and Much 2017). Although paradata accurately identifies cases where respondents abandon the survey page, it does not provide information about the type of activities taking place, nor does it identify activities carried out on other devices (e.g., respondents texting on their cell-phones while completing a survey on a PC).

The prevalence of multitasking varies depending on how it is operationalized, but there is agreement that respondent multitasking is frequent. Studies using self-reported measures of multitasking in telephone surveys show multitasking rates ranging from 45% to 55% (Aizpurua et al. 2018a; Aizpurua et al. 2018b; Lavrakas, Tompson, and Benford 2010). Findings from dual-frame telephone surveys indicate that landline respondents report multitasking as often as cell-phone respondents, although the activities they engage in vary by device (Aizpurua et al. 2018a; Kennedy 2010). For example, outdoor activities such as walking or driving are exclusive to cell-phone respondents while others (e.g., watching TV) are more common among landline respondents (Aizpurua et al. 2018a). Studies using online surveys have found similar rates of self-reported multitasking, indicating that between one in three and one in two respondents engage in other activities while answering surveys (Antoun, Couper and Conrad 2017; Zwarun and Hall 2014).

The high prevalence of multitasking has raised questions about careless responding and potential harm to data quality, based on the premise that combining multiple activities may affect the cognitive processes involved in answering survey questions. These processes encompass the stages of comprehension, recall, judgment, and response (Tourangeau 2018). The combination of a cognitively demanding task, such as answering a questionnaire, with other activities may increase the risk of question misunderstanding, retrieval failure, difficulties integrating the information into judgments, or reporting inaccurate responses. As a result, multitaskers might be less able to complete the process optimally, taking cognitive shortcuts that lead to satisficing behaviors (Krosnick 1991). In her study, Kennedy (2010) found that respondents who reported eating and drinking during the survey displayed more comprehension problems. Specifically, they were less likely to account for an important exclusion mentioned in the question when compared to those not eating or drinking. In addition, findings from a nationwide online survey in the Netherlands showed that multitasking was associated with lower self-reported concentration levels (de Bruijne and Oudejans 2015). More recently, another study conducted in the US found that respondents who reported multitasking were described by interviewers as distracted twice as often as non-multitaskers (Aizpurua et al. 2018a).

Despite this, the evidence suggests that multitasking has a limited effect on data quality indicators. No differences have been found between multitaskers and non-multitaskers in non-differentiation scores (Aizpurua et al. 2018a, 2018b; Kennedy 2010; Lavrakas et al. 2010; Sendelbah et al. 2016), non-substantive responses (Aizpurua et al. 2018a, 2018b; Höhne et al. 2020; Kennedy 2010), or acquiescent and rounded responses (Aizpurua et al. 2018a, 2018b). The effect of multitasking on response time seems to be moderated by mode, with online surveys indicating that multitasking increases completion times by 20% to 25% (Ansolabehere and Schaffner 2015; León, Aizpurua and Díaz de Rada, in press) while telephone surveys have shown no differences based on multitasking status (Aizpurua et al. 2018a, 2018b).

Other data quality indicators have turned up mixed evidence, calling for further investigation. This is the case for social desirability as well as correct answers to knowledge questions. While the study conducted by Lavrakas and colleagues (2010) with cell-phone samples found that refusals to sensitive questions were comparable across multitaskers and non-multitaskers, a later online survey revealed that multitaskers were more likely to provide socially undesirable responses than non-multitaskers (León et al. in press). This was true for the dichotomous indicator of multitasking (yes-no), as well as for three of the individual activities. Specifically, respondents who were eating or drinking, doing chores, or speaking on the phone provided more socially undesirable answers. The authors explained these findings by suggesting that multitasking may have affected the last stage of the response process, such that respondents may have failed to censor their responses to sensitive questions.

The other indicator that has yielded inconclusive results is answers to factual questions. While Ansolabehere and Schaffner (2015) found that multitaskers were as likely as non-multitaskers to answer factual questions about politics correctly, later studies suggest otherwise. In a statewide telephone survey, Aizpurua and colleagues (2018a) found that multitaskers provided less accurate responses than non-multitaskers to a question requesting the definition of Science, Technology, Engineering, and Math (STEM) education. This finding is consistent with another statewide survey in which multitaskers reported lower awareness of STEM education than non-multitaskers (Heiden et al. 2017). The increased likelihood of satisficing behaviors as task difficulty rises might explain why knowledge questions, which impose greater cognitive burden (Gummer and Kunz 2019), are more affected by multitasking than less demanding questions.

This study contributes to previous research by examining the impact of respondent multitasking on two indicators for which the evidence is inconclusive: socially desirable answers and correct responses to factual questions. Based on previous research, we anticipate that multitaskers will disclose more socially undesirable information and report a lower level of knowledge/awareness than non-multitaskers.

Data

This study was reviewed and approved by the University of Northern Iowa Institutional Review Board (IRB). Data were collected between September 12, 2018, and January 16, 2019, as part of a dual-frame random digit dial telephone survey of adults in a US Midwestern state (N = 1,761) who were asked about their attitudes and behaviors toward gambling and health-related behaviors. Within-household selection in the landline sample entailed a modified Kish procedure to randomly select an adult living within the household. In the cell-phone sample, the cell-phone users were the respondents. The overall response rate (RR3, AAPOR 2016) was 26.3% (29.9% for the cell-phone sample and 13.1% for the landline sample). The overall cooperation rate (COOP3, AAPOR 2016) was 70.2% (76.4% for the cell-phone sample and 42.0% for the landline sample). The sample was provided by Marketing Systems Group (MSG), and the survey was administered in English (n = 1,729) and Spanish (n = 32). The interview averaged 26.07 minutes in length (Mdn = 25.0, SD = 5.95), and no incentives were offered for participation.

Measures

Multitasking. Self-reported multitasking was assessed at the end of the interview. Specifically, respondents were asked whether they had engaged in any other activities while completing the survey (“During the time we have been on the phone, in what other activities, if any, were you engaged?”). The question was field coded – presented as open-ended for respondents, although pre-coded responses were used by interviewers to match respondents’ answers. These pre-coded responses included working, watching kids, watching TV, cooking, driving, and Internet surfing or social media. For other answers, interviewers recorded verbatim what respondents indicated. Respondents could indicate as many activities as applied. Two coders classified all answers into 17 categories (e.g., doing housework, shopping) and respondents were later identified as multitaskers (when they reported one or more activities), or non-multitaskers.

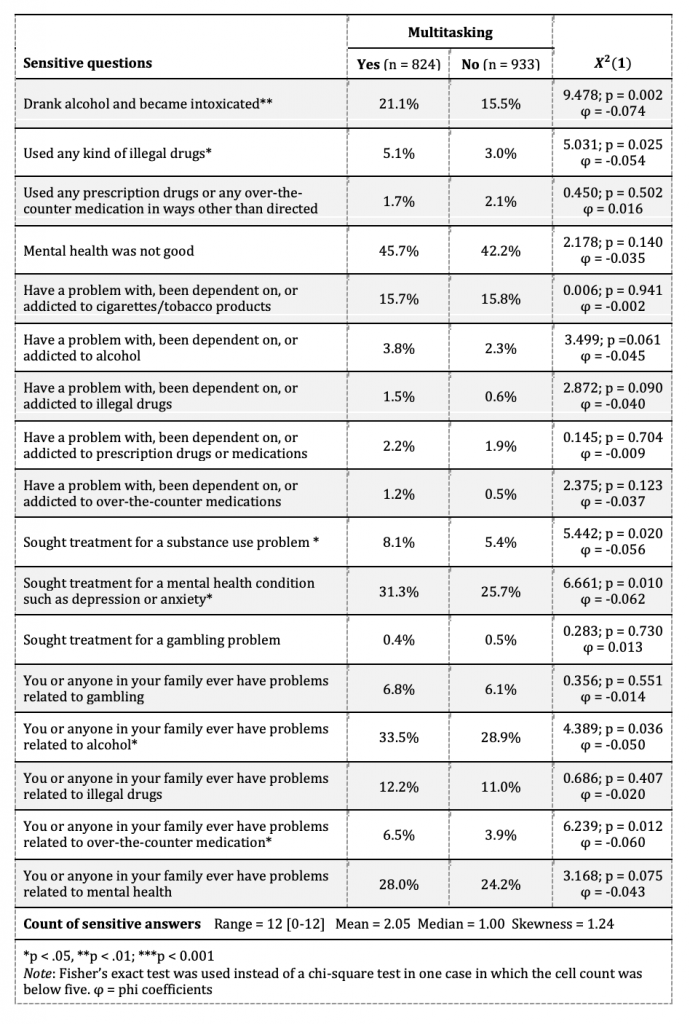

Socially undesirable responses. The questionnaire included a series of questions that may be considered sensitive, as they trigger social desirability concerns (Tourangeau and Yan 2007). These included four questions asking respondents if, over the last 30 days, they had consumed alcohol and become intoxicated, used illegal drugs, used medications in ways other than directed, or had mental health problems. In addition, five questions asked respondents if they were dependent on or addicted to tobacco, alcohol, illegal drugs, prescription medications, or over-the-counter medications. Three other questions asked whether respondents had ever sought treatment for substance use, mental health, or gambling problems (items are presented in Table 3). Finally, respondents were asked if anyone in their family ever had problems related to alcohol, illegal drugs, over-the-counter medications, mental health issues, or gambling. All items were dichotomous (yes, no), except for the question on alcohol consumption, which had three response options (did not consume alcohol; consumed alcohol but did not become intoxicated; consumed alcohol and became intoxicated). Positive answers were coded as 1, and a total of 17 questions were used to count how many positive responses were provided by each respondent throughout the questionnaire.
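As a rough illustration of the scoring described above, the following Python sketch counts positive answers for one respondent. The item names are hypothetical placeholders rather than the questionnaire's variable names, and treating only "consumed alcohol and became intoxicated" as the positive answer on the three-option alcohol item is an assumption of this sketch; the text does not spell out how that item was recoded.

```python
# Hypothetical item keys; the real questionnaire variable names are not given in the text.
ALCOHOL_ITEM = "past30_alcohol"
INTOXICATED = "consumed_and_intoxicated"  # assumed to be the only positive alcohol answer


def count_undesirable(responses):
    """Count positive (socially undesirable) answers for one respondent.

    `responses` maps item name -> answer. Dichotomous items hold "yes" or "no";
    the alcohol item holds one of its three response options.
    Items missing from the mapping are not counted (an assumption of this sketch).
    """
    total = 0
    for item, answer in responses.items():
        if item == ALCOHOL_ITEM:
            total += int(answer == INTOXICATED)
        else:
            total += int(answer == "yes")
    return total


# Illustrative respondent with four of the 17 items shown.
example = {
    "past30_alcohol": "consumed_not_intoxicated",
    "past30_illegal_drugs": "no",
    "ever_treatment_mental_health": "yes",
    "family_problem_alcohol": "yes",
}
score = count_undesirable(example)
print(score)
```

With all 17 items supplied, the resulting score ranges from 0 to 17 and corresponds to the count outcome used in the analyses.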

Knowledge questions. Two questions assessed awareness and knowledge of the state voluntary gambling self-exclusion program (SEP) that has a lifetime and a 5-year ban to casinos in the state. The first question asked: “Before participating in this study, were you aware of Statewide Self-Exclusion Program?”. Respondents who answered affirmatively were then asked about the term of the SEP (either 5 years, the correct response, or a lifetime ban).

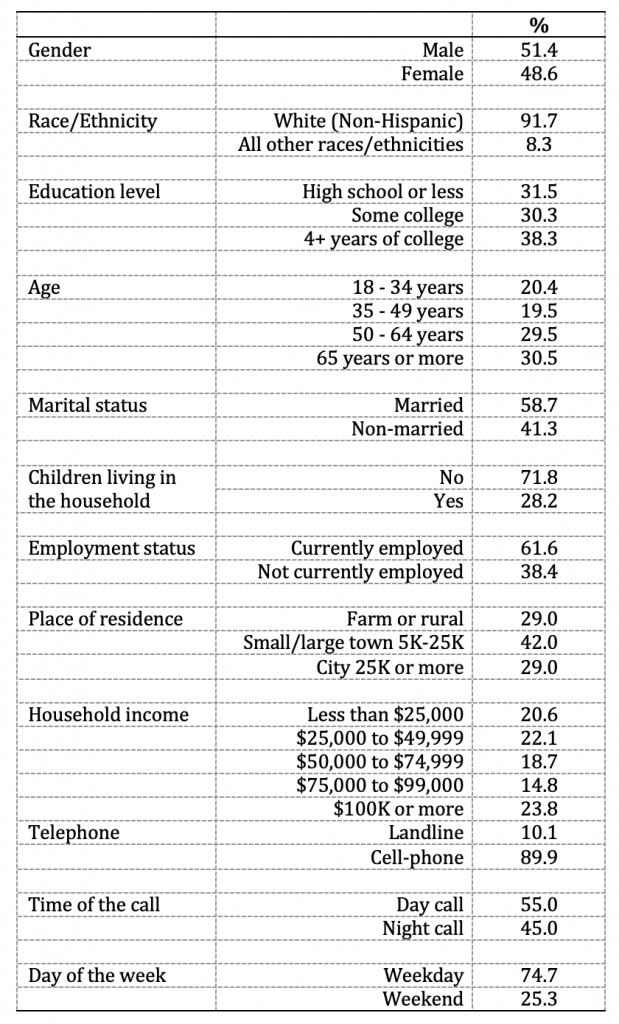

Respondent and survey characteristics. In the multivariate analyses, several characteristics of the respondents and interviews were included as control variables. Respondent-level variables included gender (male, female), race (White non-Hispanic, other), education level (from high school or less to college graduate), age, marital status, children living in the household (yes, no), employment status, place of residence (from farm to city of 25,000 inhabitants and over), and income (from less than $25,000 to $100,000 and over). Interview characteristics included type of telephone (landline, cell-phone), time of the call (daytime, evening), and day of the week (weekday, weekend). Descriptive statistics for all study variables can be found in Table 1. These variables were included in the models because previous research has shown their association with multitasking behaviors (Aizpurua et al. 2018a, 2018b; Ansolabehere and Schaffner 2015; León et al. in press) and they might be linked to disclosure and knowledge.

Analytical Strategy

First, the proportion of multitaskers was estimated for the full sample as well as by telephone type. Potential differences in multitasking rates between cell-phone and landline respondents were assessed using chi-square tests. To examine differences in social desirability based on multitasking status, responses to each of the sensitive questions were compared using proportions and chi-square tests. Student’s t-test was used to compare the mean number of socially undesirable responses between the groups. To further analyze the effects of multitasking on socially undesirable responses, a negative binomial regression model was estimated. The negative binomial regression was selected to account for the over-dispersion and excess of zeros of the outcome variable (Hilbe 2011). To examine the relationship between multitasking and answers to the knowledge questions, chi-square tests were used. Finally, a logistic regression model was estimated using correct answers as the outcome variable and multitasking as the main predictor while controlling for sociodemographic and survey-related variables. These analyses were conducted using IBM SPSS v.24.

The loss of information from missing data was small. Missing information ranged from 0% to 9.5% among the covariates, and from 0% to 3.7% among the outcome variables. Missing covariate information was imputed using a weighted sequential hot deck method, which uses survey responses to substitute missing information. This initial procedure was performed using SUDAAN (for additional information see Cox 1980; Iannacchione 1982).

Results

Sample Composition

As shown in Table 1, approximately half of the respondents identified themselves as females (48.6%). Most participants were White (91.7%) and used cell-phones to complete the interview (89.9%). Almost four in ten respondents were college graduates (38.3%), and a similar proportion were below 50 years of age (39.9%) and reported having children in the household (38.4%). About four in ten respondents lived in towns (42.0%) and had annual incomes of $75,000 and over (38.6%). Nearly half of the surveys were completed during the daytime (9 AM to 6 PM) and roughly one in four respondents were interviewed during the weekend (25.3%).

Table 1. Respondents and survey characteristics (n = 1,761)

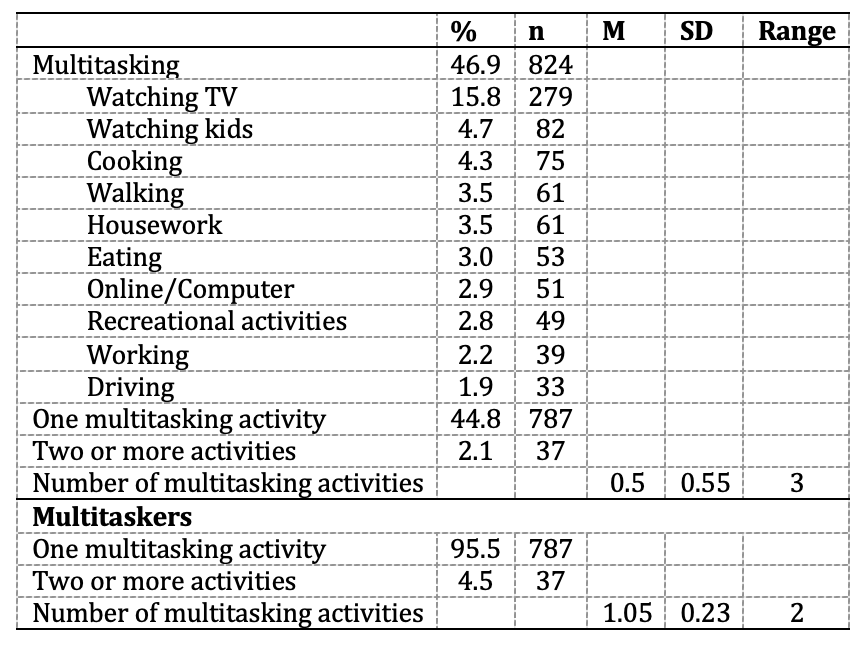

Multitasking rates

Slightly less than half of respondents (46.9%) indicated that they had engaged in at least one other activity while completing the 26-minute survey. The difference between landlines and cell-phones was not significant (42.1% versus 47.4%, χ2(1) = 1.805, p = .179, φ = 0.032). The activities reported by respondents included online and offline tasks, with the most common being watching TV (15.8%), watching children (4.7%), cooking (4.3%), walking (3.5%), and surfing the Internet or engaging in gaming activities with electronic devices (2.9%) (see Table 2).
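The reported test statistics can be cross-checked with a few lines of Python. The sketch below recovers the p-value and φ from the reported χ2 and the full sample size, and then recomputes χ2 from cell counts. Those counts are reconstructed here from the rounded percentages in the text (they are not the authors' actual table), so the recomputed statistic only approximates the reported 1.805.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def p_value_df1(chi2):
    """Upper-tail probability of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))


def phi_coefficient(chi2, n):
    """Effect size for a 2x2 table: phi = sqrt(chi2 / n)."""
    return math.sqrt(chi2 / n)


# Values reported in the text.
reported_chi2 = 1.805
n_total = 1761
p = p_value_df1(reported_chi2)
phi = phi_coefficient(reported_chi2, n_total)
print(round(p, 3), round(phi, 3))

# Counts reconstructed from rounded percentages (an approximation, not the real table):
# about 178 landline respondents (10.1%), of whom about 75 multitasked (42.1%),
# and about 1,583 cell-phone respondents, of whom about 750 multitasked (47.4%).
approx_chi2 = chi_square_2x2(75, 103, 750, 833)
print(round(approx_chi2, 2))
```

The first two values agree with the reported p = .179 and φ = 0.032. The reconstructed table gives a χ2 of roughly 1.77, with the gap to 1.805 attributable to rounding in the percentages.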

Table 2. Multitasking status and activities

Social desirability

Responses to sensitive questions by multitasking status are presented in Table 3. In general, multitaskers were slightly more likely to disclose socially undesirable information when compared to non-multitaskers. These differences were significant in six of the 17 questions (35.3%), with multitaskers reporting significantly higher rates of alcohol intoxication, illegal drug use, substance use treatment, mental health treatment, personal or in-family alcohol problems, and personal or in-family problems with over-the-counter medication. Although these differences were observed, effect sizes were small in all cases (0.07 ≥ φ ≥ -0.05). When examining the overall count of undesirable answers, the results were consistent. On average, multitaskers reported more socially undesirable answers (M = 2.24; SD = 2.15) than non-multitaskers (M = 1.89; SD = 1.93). This difference was statistically significant (t(1,757) = -3.581, p < .001) and represented a small-sized effect (Cohen’s d = 0.17). Because the variance in the count of socially undesirable responses for multitaskers was significantly different from that for non-multitaskers (F = 7.30, p < .01), the t-test was estimated taking into account the unequal variances in the two groups.
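The effect size can be reproduced from the reported means and standard deviations. The following is a minimal sketch that standardizes the mean difference by the root mean square of the two SDs; this equal-weight pooling ignores group sizes and is an assumption made here, since the text does not state which pooling the authors used.

```python
import math


def cohens_d(mean_1, sd_1, mean_2, sd_2):
    """Cohen's d using the root mean square of the two SDs as the standardizer.

    Equal-weight pooling is an assumption of this sketch; a version weighted
    by group size would give a very similar value for groups of similar size.
    """
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2)
    return (mean_1 - mean_2) / pooled_sd


# Means and SDs as reported in the text (multitaskers first).
d = cohens_d(2.24, 2.15, 1.89, 1.93)
print(round(d, 2))
```

The result rounds to 0.17, matching the reported value and falling below the conventional 0.2 threshold for a small effect.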

Table 3. Responses to sensitive questions by multitasking status

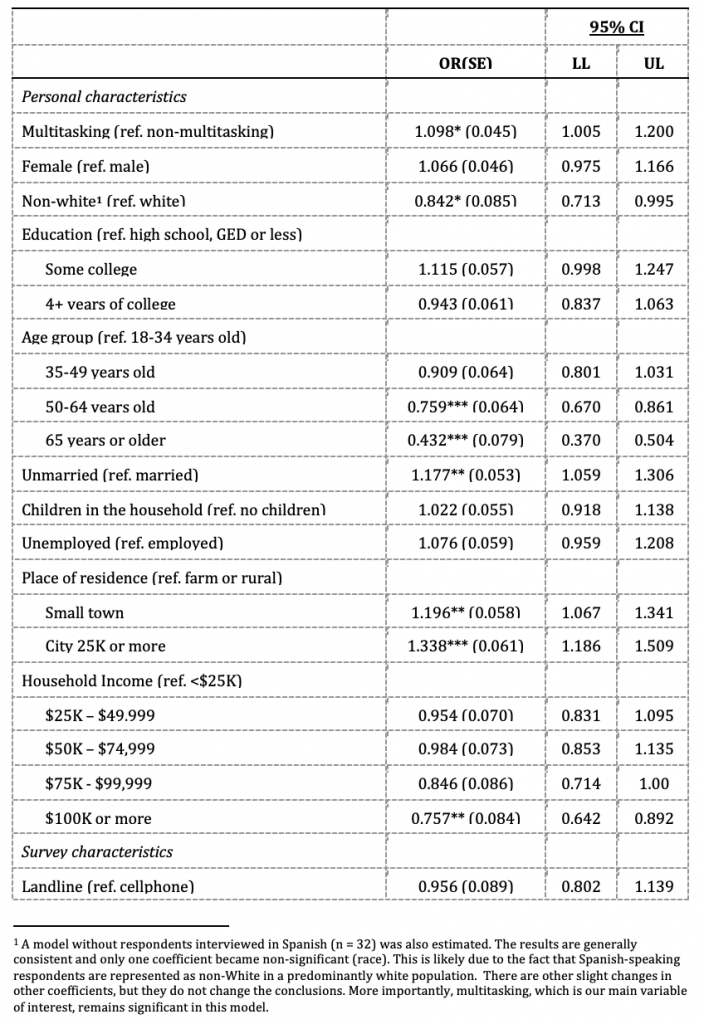

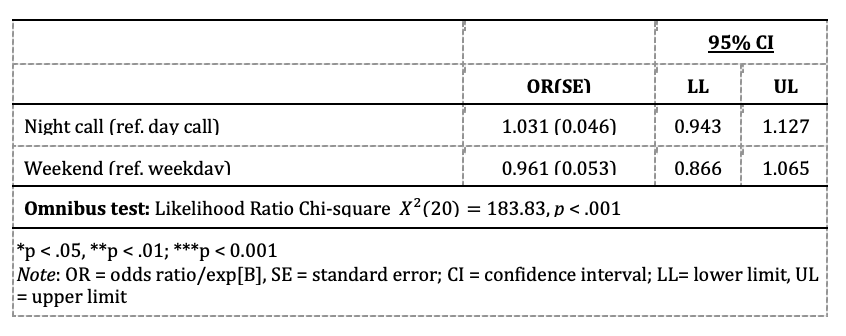

To further explore the relationship between responses to sensitive questions and multitasking, a negative binomial model was estimated using the count of socially undesirable responses as the outcome variable and multitasking as the predictor, while controlling for a series of demographic and survey-related variables. A Poisson model was also estimated, but the overdispersion of the dependent variable (χ2 = 2.03) favored the negative binomial regression. Table 4 presents the results of this model, indicating that undesirable responses were higher among multitaskers (OR = 1.10, 95% CI = 1.01, 1.20), and providing support to the hypothesis that multitasking is associated with greater disclosure. In addition, several demographic variables, including race, age, marital status, income, and place of residence emerged as related to responses to sensitive questions (see Table 4).
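A quick descriptive check illustrates why a Poisson model fits these counts poorly: under a Poisson distribution the variance equals the mean, so a variance-to-mean ratio well above 1 signals overdispersion. The sketch below applies this to the group means and SDs reported earlier. It is a marginal check that ignores the covariates, not the model-based statistic the authors report.

```python
def dispersion_index(mean, sd):
    """Variance-to-mean ratio; equals 1 under a Poisson distribution."""
    return sd ** 2 / mean


# Group-level (mean, SD) of the count of socially undesirable responses, from the text.
groups = {
    "multitaskers": (2.24, 2.15),
    "non_multitaskers": (1.89, 1.93),
}
ratios = {name: dispersion_index(m, s) for name, (m, s) in groups.items()}
for name, ratio in ratios.items():
    print(name, round(ratio, 2))
```

In both groups the variance is roughly twice the mean, which is in line with the choice of a negative binomial specification over a Poisson one.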

Table 4. Results of the regression model (odds ratios) predicting the number of socially undesirable responses (n = 1,757)

Correct answers to knowledge questions

The second data quality indicator examined in this study was answers to knowledge questions. This included a first question assessing awareness of a state gambling self-exclusion program (SEP), and a contingency question asking about the length of this program. Individuals in both groups provided similar responses to the first question, indicating that there were no differences in awareness of SEP between multitaskers and non-multitaskers (26.5% versus 25.9%; χ2(1) = 0.10, p = .76). However, in the second question, which asked whether the state’s SEP had a 5-year or lifetime ban to casinos, multitaskers were less likely to correctly identify the ban length when compared with non-multitaskers (40.3% versus 50.8%; χ2(1) = 5.08, p < .05), although the effect size was small (φ = 0.11).

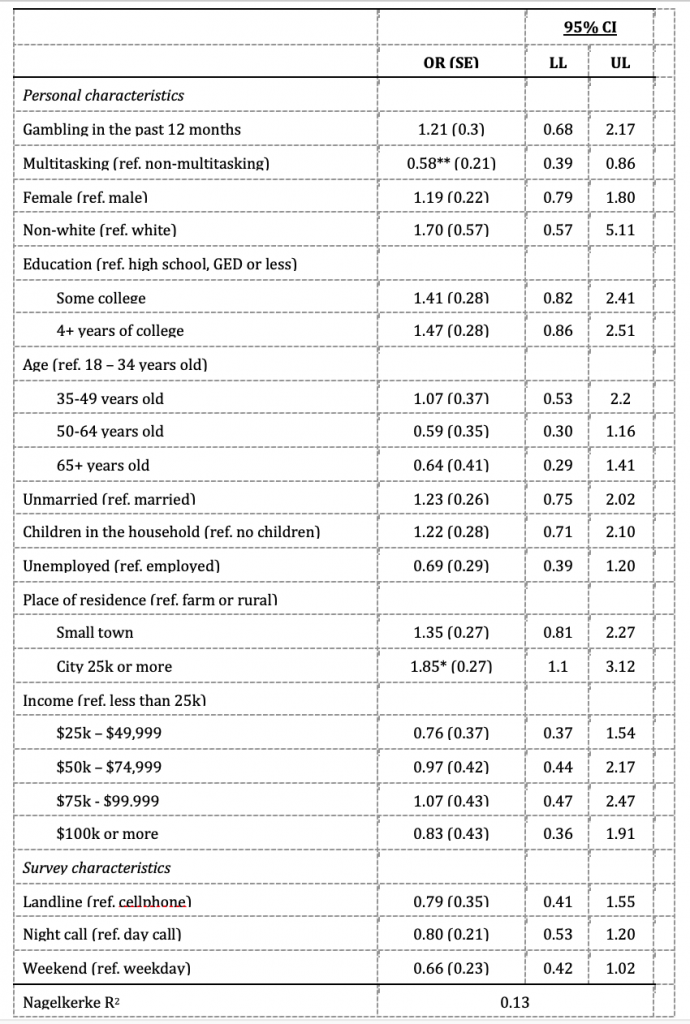

To further analyze the relationship between multitasking and correct answers, while controlling for other variables, a logistic regression model was estimated. Both odds ratios (OR) and 95% confidence intervals (CIs) are presented in Table 5. Consistent with the bivariate findings, multitasking was associated with knowledge of SEP terms, and multitaskers were less likely to know the term of SEP when compared to non-multitaskers (OR = 0.58, 95% CI = 0.39, 0.86). The only other significant predictor was place of residence, with respondents living in urban areas being more likely to know the SEP term (OR = 1.85, 95% CI = 1.10, 3.12).
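For orientation, the unadjusted odds ratio implied by the bivariate percentages can be computed directly. This is only a sketch based on the rounded proportions reported above (40.3% versus 50.8% correct); it is not the authors' model, which additionally conditions on the control variables.

```python
def odds(p):
    """Convert a proportion to odds."""
    return p / (1 - p)


# Proportions answering correctly, as reported in the bivariate comparison.
p_multitaskers = 0.403
p_non_multitaskers = 0.508

unadjusted_or = odds(p_multitaskers) / odds(p_non_multitaskers)
print(round(unadjusted_or, 2))
```

The unadjusted value (about 0.65) points in the same direction as the adjusted estimate of 0.58 in Table 5; the two differ because the logistic model controls for sociodemographic and survey-related variables.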

Table 5. Logistic regression results for knowing the program term (n = 452)

Discussion

This study aimed to examine the prevalence of multitasking and its impact on two data quality indicators for which the evidence was unclear: social desirability and answers to knowledge questions. We did so by using data from an RDD dual-frame telephone survey and examining responses to a wide range of sensitive questions. Consistent with previous research (Aizpurua et al. 2018a, 2018b; Lavrakas et al. 2010), this study found that approximately one in two respondents (46.9%) indicated engaging in additional activities while answering the survey. Despite the already high rate, it is likely that multitasking is being underestimated. A recent online study compared self-reported measures of on-device multitasking with paradata (browser tab and window switching) and found that self-reported estimates were between 1.6 and 2.4 times lower than those derived from paradata (Höhne et al. 2020). Recall errors and social desirability bias help explain why multitasking might suffer from underreporting when estimated using self-reports. In relatively long surveys, where respondents are asked about their engagement in other activities at the end of the questionnaire, it is plausible that brief tasks and/or those occurring early during the interview are not remembered. Social desirability is also a potential explanation, as respondents might be reluctant to disclose other activities if they anticipate that this might be seen as disrespectful or portray them as careless respondents (Höhne et al. 2020).

The range of activities reported by respondents in this study varies widely, with watching television being the most frequent activity. This finding is also consistent with previous research conducted using telephone (Aizpurua et al. 2018a, 2018b; Lavrakas et al. 2010) and online surveys (Ansolabehere and Schaffner 2015), which points to television as the primary environmental distraction among respondents. In addition to examining the prevalence of multitasking, this study investigated its relationship with responses to sensitive and factual questions. Our results provide evidence that multitasking is associated with greater disclosure of socially undesirable responses, even after controlling for sociodemographic factors. This finding is consistent with a previous study exploring the impact of multitasking on self-reported intimate partner violence (León et al. in press). However, the effect sizes were small and the exact mechanism by which multitasking relates to disclosure calls for further research. Since multitaskers are more likely to report higher levels of distraction (Wenz 2019) and lower levels of concentration and attention (de Bruijne and Oudejans 2015; Wenz 2019), these ramifications might help explain the relationship between multitasking and data quality indicators. The added cognitive burden caused by multitasking might amplify task difficulty, increasing the risk of satisficing behaviors (Holbrook et al. 2003). In the presence of competing activities, individuals have to divide their attention, which might result in a decreased ability to adjust their responses to social norms. In any case, greater disclosure is interpreted as evidence of more honest reporting and, thus, a sign of better, rather than worse, data quality. These findings are consistent with previous research suggesting that low-level multitasking (e.g., snacking, watching television) may actually improve respondent performance (Kennedy 2010).

Another interpretation, if multitasking behaviors are considered sensitive, is that the relationship between multitasking and other socially undesirable responses might be explained by other factors which account for the tendency to disclose sensitive information more generally (e.g., personality traits, perceptions of privacy). Future studies using paradata and interviewer observations could test whether this hypothesis finds empirical support.

This paper also analyzed differences in responses to a knowledge question based on multitasking status. The results provide support for the hypothesis that multitaskers give fewer correct responses than non-multitaskers, although the effect size was small. This finding is consistent with previous research (Aizpurua et al. 2018a) and may indicate that the effect of multitasking on data quality indicators is moderated by question type. When compared with attitudinal items, factual questions assessing respondents’ knowledge require greater cognitive effort, particularly at the retrieval stage of the response process (Nadeau and Niemi 1995; Tourangeau, Rips and Rasinski 2000). This demand may result in knowledge questions being more affected by the reduced availability of resources stemming from multitasking. Future research could explore the relationship among question type, multitasking, and data quality in more depth, particularly given that recent studies suggest that multitasking increases with burdensome questions (Baier, Metzler and Fuchs 2019).

While our study contributes to the growing literature on respondent multitasking and data quality, limitations of our research should be noted. Consistent with previous studies, validation data was not available, and we assumed that higher reports of the sensitive behaviors represented more accurate responses. Although this approach is commonly adopted and the assumption behind it is often plausible, it is still an assumption (Tourangeau and Yan 2007). Despite covering different sensitive topics in this study (mental health issues, substance use, and gambling), future studies could replicate these findings using a wider range or a different set of topics with varying levels of sensitivity. In addition, since the effects of multitasking on the response process might differ depending on the frequency or the intensity of multitasking activities, examining the associations between different forms of multitasking and disclosure is a potential avenue for future research. Finally, knowledge was assessed by using a single question about a specific state-wide program. The specificity of the question limits the generalizability of our findings to factual questions in other topics. Future research should replicate these findings using multiple questions of a more generalist nature.

While respondent multitasking was widespread and this behavior was linked to more incorrect responses, the size of this effect was small. Multitaskers also indicated more sensitive behaviors, which was interpreted as a sign of more honest reporting. These findings are encouraging for survey practitioners, suggesting that engaging in additional activities while answering surveys has a limited effect on data quality.

References

1. American Association for Public Opinion Research (AAPOR). (2016). Standard definitions: Final dispositions of case codes and outcome rates for surveys. https://www.aapor.org/Standards-Ethics/Standard-Definitions-(1).aspx (accessed September 1, 2019).

2. Aizpurua, E., Heiden, E. O., Park, K. H., Wittrock, J., & Losch, M. E. (2018a). Investigating respondent multitasking and distraction using self-reports and interviewers’ observations in a dual-frame telephone survey. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=10945

3. Aizpurua, E., Heiden, E. O., Park, K. H., Wittrock, J., & Losch, M. E. (2018b). Predictors of multitasking and its impact on data quality: Lessons from a statewide dual-frame telephone survey. Survey Practice, 11.

4. Ansolabehere, S. & Schaffner, B. F. (2015). Distractions: The incidence and consequences of interruptions for survey respondents. Journal of Survey Statistics and Methodology, 3, 216–239.

5. Antoun, C., Couper, M. P., & Conrad, F. G. (2017). Effects of mobile versus PC web on survey response quality. Public Opinion Quarterly, 81, 280–306.

6. Baier, T., Metzler, A., & Fuchs, M. (2019). The effect of page and question characteristics on page defocusing. Paper presented at the Conference of the European Survey Research Association, July 15-19, Zagreb, Croatia.

7. Cox, B. G. (1980). The Weighted Sequential Hot Deck Imputation Procedure. Conference Proceedings at the Annual Conference of American Statistical Association. USA, 721-726. http://www.asasrms.org/Proceedings/papers/1980_152.pdf

8. de Bruijne, M. & Oudejans, M. (2015). Online surveys and the burden of mobile responding. In U. Engel (Ed.), Survey measurements: Techniques, data quality and sources of error (pp. 108-123). Frankfurt: Campus Verlag.

9. Diedenhofen, B. & Much, J. (2017). PageFocus: Using paradata to detect and prevent cheating on online achievement tests. Behavior Research Methods, 49, 1444–1459.

10. Gummer, T., & Kunz, T. (2019). Relying on external information sources when answering knowledge questions in web surveys. Sociological Methods & Research, 1-19.

11. Heiden, E. O., Wittrock, J., Aizpurua, E., Park, K. H., & Losch, M. E. (2017). The impact of multitasking on survey data quality: Observations from a statewide telephone survey. Paper presented at the Annual Conference of the American Association for Public Opinion Research, May 18-21, New Orleans, LA.

12. Hilbe, J. M. (2011). Negative binomial regression. 2nd Edition. Cambridge, UK: Cambridge University Press.

13. Höhne, J. K., Schlosser, S., Couper, M. P., & Blom, A. (2020). Switching away: Exploring on-device media multitasking in web surveys. Computers in Human Behavior, 111, 1-11.

14. Holbrook, A. L., Green, M., & Krosnick, J. (2003). Telephone versus face-to-face interviewing of national probability samples with long questionnaires: Comparisons of respondent satisficing and social desirability response bias. Public Opinion Quarterly, 67, 79–125.

15. Iannacchione, V. G. (1982). Weighted Sequential Hot Deck Imputation Macros. Seventh Annual SAS User’s Group International Conference, San Francisco, CA, February 1982.

16. Kennedy, C. (2010). Nonresponse and measurement error in mobile phone surveys. Doctoral Dissertation. University of Michigan.

17. Krosnick, J. A. (1991). Response strategies for coping with the cognitive demands of attitude measures in surveys. Applied Cognitive Psychology, 5, 213–36.

18. Lavrakas, P. J., Tompson, T. N., & Benford, R. (2010). Investigating data quality in cell-phone surveying. Paper presented at the Annual Conference of the American Association of Public Opinion Research, May 13-16, Chicago, IL.

19. León, C. M., Aizpurua, E., & Díaz de Rada, V. (in press). Multitarea en una encuesta online: Prevalencia, predictores e impacto en la calidad de los datos. Revista Española de Investigaciones Sociológicas, 173, 27-46.

20. Nadeau, R. & Niemi, R. G. (1995). Educated guesses: The process of answering factual knowledge questions in surveys. Public Opinion Quarterly, 59, 323-346.

21. Sendelbah, A., Vehovar, V., Slavec, A., & Petrovcic, A. (2016). Investigating respondent multitasking in web surveys using paradata. Computers in Human Behavior, 55, 777-787.

22. Tourangeau, R. (2018). The survey response process from a cognitive viewpoint. Quality Assurance in Education, 26, 169-181.

23. Tourangeau, R., Rips, L. J., & Rasinski, K. (2000). The psychology of survey response. New York: Cambridge University Press.

24. Tourangeau, R., & Yan, T. (2007). Sensitive questions in surveys. Psychological Bulletin, 133, 859–883.

25. Wenz, A. (2019). Do distractions during web survey completion affect data quality? Findings from a laboratory experiment. Social Science Computer Review, 1-14.

26. Zwarun, L., & Hall, A. (2014). What’s going on? Age, distraction, and multitasking during online survey taking. Computers in Human Behavior, 41, 236–244.