The effect of advance letters on survey participation: The case of Ireland and the European Social Survey

Capistrano, D. & Creighton, M. (2022). The effect of advance letters on survey participation: The case of Ireland and the European Social Survey. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=15979. The data used in this article is available for reuse from http://data.aussda.at/dataverse/smif at AUSSDA – The Austrian Social Science Data Archive. The data is published under the Creative Commons Attribution-ShareAlike 4.0 International License and can be cited as: “Replication Data for: The effect of advance letters on survey participation: The case of Ireland and the European Social Survey” https://doi.org/10.11587/VQU4BW.

© the authors 2022. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

This study examined the effects of advance letters on individual participation in the 2018 round of the European Social Survey in Ireland. As participation rates in household surveys have been in decline in many countries, understanding the impact of engagement strategies, such as prior contact, is crucial for fieldwork planning and the overall quality of data collection. Based on a natural experiment, we assessed the likelihood of individuals taking part in the survey, comparing those who received an advance letter with those who did not. Contrary to previous evidence on the effectiveness of prior contact, our results indicate that individuals in the sample who received an advance letter were less likely to take part in the survey. We discuss these results in light of increasing public scepticism regarding social surveys and data privacy.

Keywords

advance letters, European Social Survey, survey methods, survey participation

Acknowledgement

This study was funded by the Irish Research Council. We are grateful to Dr. Philip J. O'Connell for his support during the conceptual phase of this research.


Introduction

Participation rates in household surveys have been declining in many developed countries over the last four decades (Beullens et al., 2018; de Leeuw et al., 2018). As this lack of coverage may affect survey quality, researchers have discussed the effectiveness of strategies to increase survey participation, ranging from providing monetary incentives to participants (Chromy and Horvitz 1978; Kibuchi et al. 2019; Singer and Ye 2013), to offering different modes of participation (Heijmans, van Lieshout, and Wensing 2015; Olson, Smyth, and Wood 2012).

In this article, we present the results of a natural experiment conducted in a national household survey in Ireland. The survey was administered from October 2017 to April 2018. Although the survey procedures included sending advance letters to all sampled addresses, recent changes in the country’s postal system led to a substantial number of letters not being delivered. We assessed the impact of receiving or not receiving an advance letter on the likelihood of participating in the survey. After a brief discussion of previous studies on the effects of advance letters, we provide more details about this natural experiment and discuss the impact on survey participation assessed by this investigation.

The Effects of Advance Letters

For household surveys in which the address is known in advance, one common strategy is to send an advance letter introducing the study and requesting cooperation. Many studies investigating the effects of this strategy have shown a positive effect of advance letters on cooperation (Dillman et al., 1976; de Leeuw et al., 2018; Stoop et al., 2010; Traugott et al., 1987) as well as on cost-effectiveness (Link & Mokdad, 2005; Schell et al., 2018). Experimental evidence from Schell et al. (2018), for instance, indicates that although the effects on participation rates are not significant, advance letters reduce call attempts and costs per group.

However, the scope of this evidence is almost entirely focused on telephone or mail surveys (de Leeuw et al., 2007; Goldstein & Jennings, 2002) and these studies are generally located in the United States. In a recently published review of the available experimental evidence, Vogl et al. (2019, p. 89) concur that there is a knowledge gap in relation to the impact across different modes with “little published research examining the effect of advance letters on the outcomes in face-to-face studies”.

An indication of the impact of advance letters on face-to-face surveys comes from an experiment carried out in the United Kingdom. Lynn et al. (1998) tested three different types of advance letter in a national household survey and found that only one type (a simple informal letter) had a statistically significant impact on cooperation compared to not sending any letter. This conclusion highlights the importance of the format and content of those letters (Brunner and Carroll, 1969; Luiten, 2011).

Based on the examination of a panel study, Vogl et al. (2019) also provide an indication that advance letters may increase participation rates, with a higher impact on contact and cooperation at the screening phase of the study, noting that “there is a clear tendency toward a positive impact of advance letters on response rates”. Despite the scarce evidence in relation to face-to-face surveys, Dillman et al. (2014, p. 421) suggest that prior notification can help to improve response rates across different modes, as they help to “build trust that the survey is legitimate, and help communicate that the results may be beneficial to individuals or groups with whom they identify in a positive way”. Similarly, Vogl et al. (2019, p. 92) argue that “in face-to-face interviews, the cost for interviewers and contacting a potential respondent is much higher and therefore it seems reasonable to assume that advance mailing would be even more cost-effective”.

In a different direction, it is also reasonable to suspect that prior notification may discourage participation. In a context of increasing survey fatigue, receiving an advance letter can anticipate refusal due to the impersonal nature of this first contact. In this sense, we could expect a more positive effect of an unexpected visit from an interviewer compared to the negative effect of a “surprise” telephone call in telephone surveys, as suggested by Dillman et al. (1976).

The ESS in Ireland and Advance Letters

The survey analysed in this paper was part of the administration of Round 9 of the European Social Survey (ESS) in Ireland. The ESS is a cross-national survey conducted every two years in more than twenty European countries since 2002. It aims to collect data on public attitudes, values and perceptions related to various topics such as religion, politics, migration, and well-being. The ESS adopts a rigorous method of data collection and survey design. All participating countries are required to follow standard procedures that apply to all stages of the survey administration. Among them, countries need to follow strict probability sampling methods to select participants among the resident population aged 15 and over (European Social Survey 2018).

A practice across ESS countries is to provide prior information about the survey using advance letters posted to addresses selected in the sample (Beullens et al., 2018). In 2018, Ireland had a distinctive context in relation to its postal services. Ireland was the last country among the Organisation for Economic Co-operation and Development (OECD) members to implement a national post code system, introducing it in 2015. Up until then, as a substantial portion of the addresses were not unique, the Irish national postal service (An Post) relied primarily on a mechanised process for national sorting combined with local knowledge from its delivery employees (Ferguson 2016). The new national post code (Eircode) was launched in July 2015, but it was not fully implemented in 2018 and faced concerns on two fronts. First, residents were unaccustomed to using the Eircode. Second, some An Post employees saw the Eircode system as benefiting competitors and did not use it. As discussed by Berger, Keenan and Miscione (2015, p. 2), An Post employees were concerned that “…a postcode might be more useful to rival courier and delivery companies without both the sophisticated equipment and local knowledge, than An Post itself”.

The European Social Survey requires the delivery of advance letters to selected households or participants. As the Irish sample was selected from the full register of the Eircode postal system, these letters were sent to householders using only the Eircode and omitted additional identifying information. The fieldwork team identified which letters were returned as a result of “insufficient address”, which would only occur when the Eircode was not in use by An Post at the local office. Returned letters did not restrict interviewers, who succeeded in locating all sampled addresses via the use of GIS. Although the capital city had more coverage of the Eircode, the failure to deliver these letters was not restricted to a specific region of the country. We therefore investigated whether selected residents who did not receive the advance letters were less likely to take part in the survey.

The letter: Content and format

In relation to the content and format of the letter, the ESS provides participating countries with templates of the text to be sent out to selected addresses. The Irish coordinator team adapted this template after internal discussion and suggestions from experienced interviewers. One of the most recurrent suggestions was to emphasise the academic aspect of the research.

In addition, these interviewers recommended increasing the size of the logo of the academic institution responsible for the survey, as it is a recognised academic institution in the country and its prestige would support interviewers in their work engaging participants. Previous evidence also indicates the positive impact of using a university letterhead (Brunner & Carroll, 1969). The letter also mentioned the gift incentive for participation (10 EUR). These changes in the content and format of the advance letter were all expected to stimulate interest and increase the likelihood of cooperation. A copy of the advance letter used in the survey can be found in Appendix A of this paper.

As a requirement for the administration of the ESS in Round 9, a leaflet with information on data privacy was also sent with the advance letter. This leaflet provided details on how the data would be used exclusively for academic purposes and on the participants’ rights under the European Union General Data Protection Regulation (GDPR).

Research Questions

Considering the presence of undelivered advance letters, the Irish ESS National Coordinators saw an opportunity to consider two research questions. First, given that the cost of administering advance letters is non-trivial, what is the impact of not receiving an advance letter on the likelihood of cooperating with the survey? Notwithstanding the evidence supporting a positive impact of advance letters, are there contexts in which the outcome would limit their utility? For reasons outlined above, the extent to which postal delivery was successful is sensitive to the way in which households (and by extension individuals) were identified. In the Irish case, the use of an Eircode offered a clear population from which to draw the sample, but was imperfectly integrated into the postal service (i.e., An Post). The result is a context in which the receipt of an advance letter is, in some cases, plausibly limited for reasons other than individual and household characteristics.

Second, is there evidence that, in some contexts, advance letters can reduce participation? In a context increasingly subject to concerns over survey participation and privacy, the advance letter could present an opportunity to reflect and increase reluctance to engage upon follow up. In the absence of an advance letter, the initial contact would be relatively faster in terms of converting to a scheduled interview. Distinct from the infeasibility (or limited feasibility) concern addressed in our first question, we find variation in the receipt of advance letters for reasons outside of the household’s control to be useful in considering if an initial direct contact has positive outcomes in terms of completed interviews.

Data and methods

In total, 3,768 households were selected to take part in the survey. This sample resulted from a multistage cluster design, with the first stage consisting of a Probability Proportional to Size (PPS) selection of 628 geographical clusters and the second stage consisting of a random selection of six Eircodes within each cluster. At the final stage, one resident of each address aged 15 or older was selected using the last-birthday criterion.
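The first two stages of this design can be sketched in code. The example below is a minimal illustration, not the procedure used by the ESS team: it assumes systematic PPS selection (the text does not say which PPS method was applied), a made-up sampling frame of 2,000 clusters, and hypothetical function names (`pps_systematic`, `draw_sample`). The final-stage last-birthday selection takes place at the doorstep and is not simulated.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def pps_systematic(sizes, n_clusters, rng):
    """Pick cluster indices with probability proportional to size.

    Assumption: systematic PPS with a random start; the article only
    states that the first stage was a PPS selection.
    """
    cum = list(accumulate(sizes))      # running total of addresses
    step = cum[-1] / n_clusters        # sampling interval
    start = rng.random() * step        # random start within the first interval
    points = (start + k * step for k in range(n_clusters))
    # A point p belongs to the first cluster whose running total exceeds p.
    return [min(bisect_right(cum, p), len(sizes) - 1) for p in points]


def draw_sample(sizes, n_clusters=628, per_cluster=6, seed=0):
    """Stage 1: PPS clusters. Stage 2: simple random sample of addresses."""
    rng = random.Random(seed)
    clusters = pps_systematic(sizes, n_clusters, rng)
    return [
        (c, address)
        for c in clusters
        for address in rng.sample(range(sizes[c]), per_cluster)
    ]


# Hypothetical frame: 2,000 clusters holding 50 to 500 addresses each.
frame_rng = random.Random(1)
frame = [frame_rng.randint(50, 500) for _ in range(2000)]

sample = draw_sample(frame)
print(len(sample))  # 628 clusters x 6 addresses = 3768
```

Because every cluster in this toy frame is smaller than the sampling interval, no cluster can be selected twice; a real frame with very large clusters would need them handled separately.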

In our sample, we have kept only the sample units in which the interviewer succeeded in contacting one person at the selected household. Consequently, we have removed all invalid addresses (e.g. derelict, vacant, commercial) as well as those cases in which interviewers could not contact the respondent after at least four visits over the period of two weeks. Those sample units were not considered in this analysis as the information regarding the household that was essential for our analysis was not collected among all those addresses. This resulted in a final sample of 3,047 cases in which either the interview was conducted, or the householder refused to take part in the survey.

Among these cases, 405 (13%) advance letters were not delivered and were returned by the postal service citing “insufficient address”. There is no evidence on whether all the remaining letters were received or, if received, whether they were read by the potential respondents. However, as standard procedure in the first visit to the household, interviewers were expected to refer to the advance letter, and no interviewers reported issues with participants not having received the letter.

As part of the survey administration, interviewers were required to fill out a contact form for each sample unit detailing each of their visits to the household as well as providing some information regarding the household, the vicinity and, in the case of refusals, the gender and apparent age of the person who refused to participate.

Based on this information, we have conducted a multivariate analysis to predict the effect of having received an advance letter on the likelihood to cooperate and complete an interview in the survey. The main outcome is a binary variable on having a completed interview or having refused to participate. The main independent variable is also binary assuming the values of 1 for those sample units in which the advance letter was not returned (treatment) for which we assume the letter was received and 0 for sample units in which the letter was returned on the grounds of “insufficient address” (control). The covariates are described below:

Social variables

Household condition: This is a subjective assessment from the interviewer regarding the overall physical condition of the selected household. The response categories were “Very bad (1), Bad (2), Satisfactory (3), Good (4), Very good (5)”. During the training sessions for interviewers, pictures with different households were shown to provide parameters for this assessment. The interviewer’s manual also contained descriptions of these categories. We expect this to be a proxy for socio-economic status of the householder, which has shown to be a relevant factor for survey cooperation with higher SES associated with higher cooperation rates (Goyder, Warriner, and Miller 2002). In Table 1, the proportions are presented within aggregated categories for convenience. However, for the analysis, the variable was used as a 5-point scale with higher values denoting better household conditions.

Vicinity condition: This is another subjective assessment from the interviewer regarding the amount of litter and rubbish in the immediate vicinity. The response categories were “Very large amount (1), Large amount (2), Small amount (3), None or almost none (4)”. We have used this variable as a proxy for vicinity infrastructure, which is also related to socio-economic status. In the analysis, the variable was used in its original format as a 4-point scale with higher values meaning a lower amount of litter and rubbish.

Access: This variable indicates if there is an entry phone system and/or a locked gate or door before reaching the sampled household’s door. As highlighted by Tourangeau (2004), societal shifts such as increasing concerns about safety have led to changes in the configuration of access to households, such as the rise of gated communities, which have created more challenges for survey organisations and response rates.

Geo-demographic variables

Population density: Previous studies have found lower cooperation rates in densely populated areas (Couper and Groves 1996). To control for this, we have included the variable on population density that was used to select the sample. The variable represents the population density of the sampled address cluster based on the 2016 Census. In Table 1, the variable was dichotomised into “Low” and “Medium/High” using a cutoff point based on the proportion of the population living in rural (low) and urban (medium/high) areas according to the Central Statistics Office.

Region: We expect that households located in more urbanised regions such as Dublin, South-West and South-East will be likely to have a higher refusal rate as, in addition to being densely populated, these regions have residents who may also be more likely to suffer from respondent fatigue caused by frequent requests for participation in surveys. In Table 1, we provide descriptive statistics for Dublin and other regions aggregated. However, we controlled for the regional effect by using the categorical variable with the eight NUTS 3 regions of Ireland (Border, West, Mid-West, South-East, South-West, Dublin, Mid-East, and Midlands).

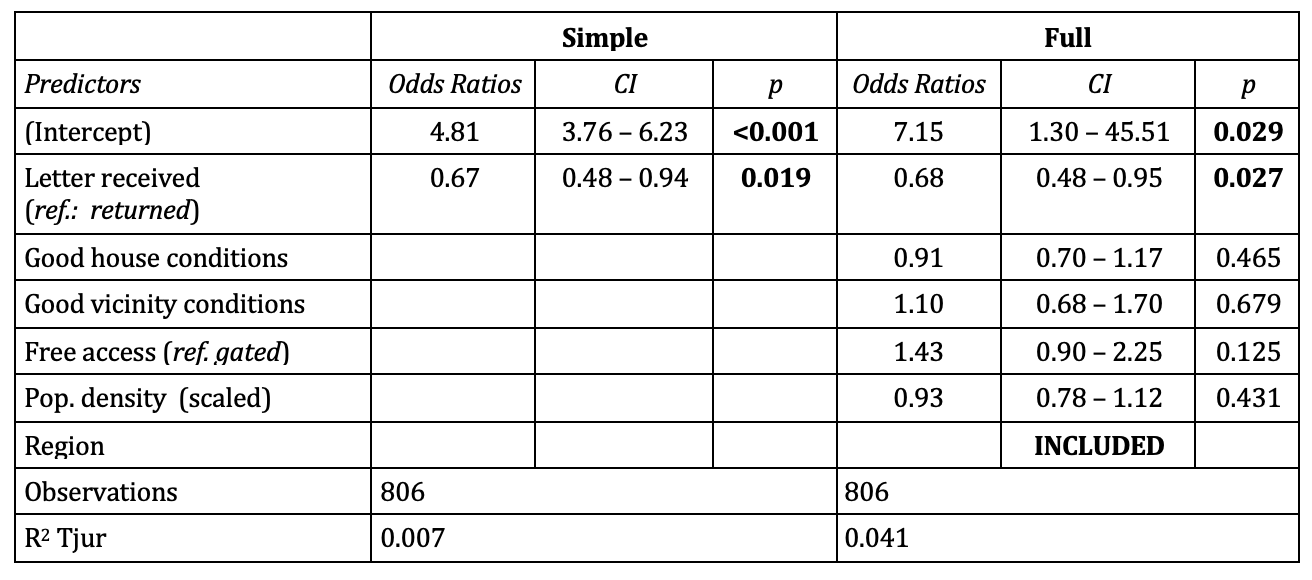

Table 1 shows the incidence of letters that were not returned (received) and completed interviews (as opposed to refusal) according to the observed variables of the household used as covariates in the analysis. The variable “region” denotes the eight NUTS 3 regions in Ireland. For the sake of clarity, seven regions were grouped into one in Table 1, but the original format was used for all analyses.

Table 1. Percentage of advance letters received and completed interviews according to different covariates

In relation to completed interviews, Table 1 suggests a higher response rate for households in better conditions, with free access to the address (as opposed to gated) and within vicinities with some litter (as opposed to no litter). In addition, response rates are higher in areas with lower population density and outside Dublin.

As also shown in Table 1, the proxy variables for socio-economic conditions of the household suggest a balanced distribution of received letters across socio-economic characteristics, all around the total proportion of 87%. However, the variables derived from population density and region indicate that households in more densely populated areas and in Dublin were disproportionately more likely to receive the advance letters (94% and 99%, respectively) compared to those in less densely populated areas (68%) and in other regions (82%). A further analysis shows that the population density is significantly higher for addresses that received the letter, t(1,118) = -23, p < 0.001. This indicates that the return of letters by the postal service was not completely random: returns were concentrated among sample units in less densely populated areas and outside Dublin.

To deal with unbalanced samples in non-randomised experiments, many researchers resort to propensity score matching (Rosenbaum & Rubin, 1983) to approximate a randomised experiment. However, recent developments have shown that adopting this approach may actually increase imbalance, model dependence and bias (King & Nielsen, 2019). For this analysis, we opted to use coarsened exact matching (Iacus, King and Porro, 2011) to construct a balanced sub-sample. The variables used for this matching were “population density” and “region” as, among the observed variables, they are the only ones that simultaneously influence the outcome and the treatment (Caliendo & Kopeinig, 2008). This method entails coarsening the variables “population density” and “region” by creating bins, and then matching each observation in the control group (letter returned) with an observation in the intervention group (letter received) within these bins. The resulting matched sample contains 806 observations.
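The matching logic described above can be illustrated with a short sketch. It is a simplified, assumption-laden example rather than the authors’ implementation: the density cutpoints, the toy records, the one-to-one random pairing within each bin, and the names `coarsen` and `cem_match` are all hypothetical, and survey weights are ignored.

```python
import random
from collections import defaultdict


def coarsen(density, cutpoints):
    """Return the index of the bin a population density falls into."""
    return sum(density >= c for c in cutpoints)


def cem_match(units, cutpoints, seed=0):
    """Keep equal numbers of control and treated units in each stratum.

    A stratum is a (density bin, region) pair. Units in strata lacking
    either group are discarded, which is the information loss that
    coarsened exact matching accepts in exchange for balance.
    """
    rng = random.Random(seed)
    strata = defaultdict(lambda: {0: [], 1: []})
    for u in units:
        key = (coarsen(u["density"], cutpoints), u["region"])
        strata[key][u["received"]].append(u)

    matched = []
    for groups in strata.values():
        controls, treated = groups[0], groups[1]
        k = min(len(controls), len(treated))  # pairs available in this bin
        matched += rng.sample(controls, k) + rng.sample(treated, k)
    return matched


# Toy records (made up): received = 1 means the letter was not returned.
units = [
    {"id": 1, "density": 20, "region": "West", "received": 0},
    {"id": 2, "density": 35, "region": "West", "received": 1},
    {"id": 3, "density": 40, "region": "West", "received": 1},
    {"id": 4, "density": 900, "region": "Dublin", "received": 1},
    {"id": 5, "density": 1200, "region": "Dublin", "received": 1},
    {"id": 6, "density": 30, "region": "Border", "received": 0},
]

matched = cem_match(units, cutpoints=[100, 1000])
print(sorted(u["id"] for u in matched))
```

In this toy data only the low-density West stratum contains both groups, so one control and one treated unit survive; the Dublin and Border units are dropped because they have no counterpart in their bin.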

The downside of this approach is the information loss caused by discarding non-matched observations (Black et al., 2020). However, the results presented in the next section using coarsened exact matching are very similar to those obtained from the non-matched sample. The tables with the results using the full sample are presented in Appendix B of this paper. The descriptive statistics also indicate that other control variables have a similar distribution across matched and full samples. All models were built using the survey design weight to correct for differing probabilities of inclusion in the sample due to the complex sampling design (Kaminska, 2020).

Results

Here we describe the results of our analysis for each outcome. First, we fit a logistic regression model to predict the likelihood of the sample unit having a completed interview as its final outcome. After that, we test whether the advance letter is related to the timely achievement of an interview. Considering that 28% of all interviews were finalised in the first visit to the household, it is also relevant to explore to what extent the advance letter could be influential in this regard. For that, we build a logistic regression model with a different response variable: the completion of an interview in the first visit.

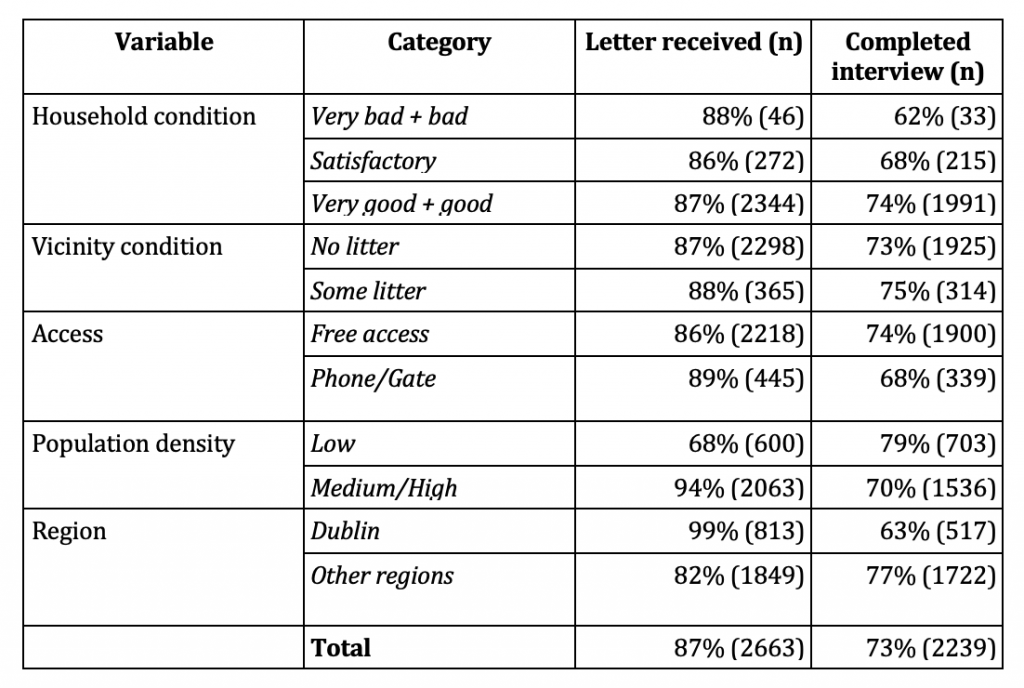

Table 2. Logistic Regression Coefficients for completed interview as opposed to refusal to participate

As shown in Table 2, two different models were tested to explain whether or not an interview took place. The first one includes only the variable related to the receipt of the letter. The second model includes proxy variables for the socioeconomic status of the householder as well as population density and geographical location.

Tjur’s R squared coefficient increases as we add the social and geo-demographic variables, indicating that they are relevant to explain interview completion. Although none of the covariates show statistical significance, the estimates follow the expected direction, with good vicinity conditions and free access being associated with higher odds of interview completion. On the other hand, sampled houses in better conditions are less likely to have a resident who will complete an interview.
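For readers unfamiliar with the statistic, Tjur’s R squared (the coefficient of discrimination) is the mean predicted probability among observed successes minus the mean predicted probability among observed failures. The sketch below computes it for made-up predictions; the function name `tjur_r2` and the values are illustrative only and do not come from the models in Table 2.

```python
def tjur_r2(outcomes, predicted):
    """Mean predicted probability for y = 1 minus that for y = 0."""
    ones = [p for y, p in zip(outcomes, predicted) if y == 1]
    zeros = [p for y, p in zip(outcomes, predicted) if y == 0]
    return sum(ones) / len(ones) - sum(zeros) / len(zeros)


# Made-up example: 1 = completed interview, 0 = refusal.
y = [1, 1, 0, 0]
p_hat = [0.8, 0.6, 0.3, 0.1]
r2 = tjur_r2(y, p_hat)  # mean(0.8, 0.6) - mean(0.3, 0.1)
```

A value near zero means the model assigns similar probabilities to completers and refusers, whereas values closer to one indicate sharper separation between the two groups.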

In terms of the role of the advance letter, both models indicate a statistically significant effect on interview completion. The simple model indicates that residents in selected households that received the advance letter have 33% lower odds of taking part in the interview compared to those who did not receive it (OR = 0.67, 95% CI: 0.48,0.94). Even controlling for social and geo-demographic variables, the odds of participation for those who received the advance letter are 32% lower than for those households where the letter was returned (OR = 0.68, 95% CI: 0.48, 0.95).
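The step from an odds ratio to a statement such as “33% lower odds” can be made explicit. The sketch below converts the odds ratios reported above into percentage changes and, separately, shows how an unadjusted odds ratio and a Wald-type 95% interval arise from a 2×2 table. The counts in that table are invented for illustration and are not the study data; the helper names are hypothetical; matching and design weights are ignored.

```python
import math


def pct_change_in_odds(odds_ratio):
    """Percentage change in the odds implied by an odds ratio."""
    return (odds_ratio - 1) * 100


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio with a Wald (Woolf) confidence interval.

    a, b: completed / refused among units that received the letter
    c, d: completed / refused among units whose letter was returned
    """
    estimate = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(estimate) - z * se_log)
    upper = math.exp(math.log(estimate) + z * se_log)
    return estimate, lower, upper


# Odds ratios reported in the text for the simple and the full model.
simple_model = round(pct_change_in_odds(0.67))  # -33, i.e. 33% lower odds
full_model = round(pct_change_in_odds(0.68))    # -32, i.e. 32% lower odds

# Invented counts, for illustration only: 40/60 versus 50/50.
estimate, lower, upper = odds_ratio_ci(40, 60, 50, 50)
```

An interval that excludes 1, as both reported intervals do, corresponds to a statistically significant association at the 5% level.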

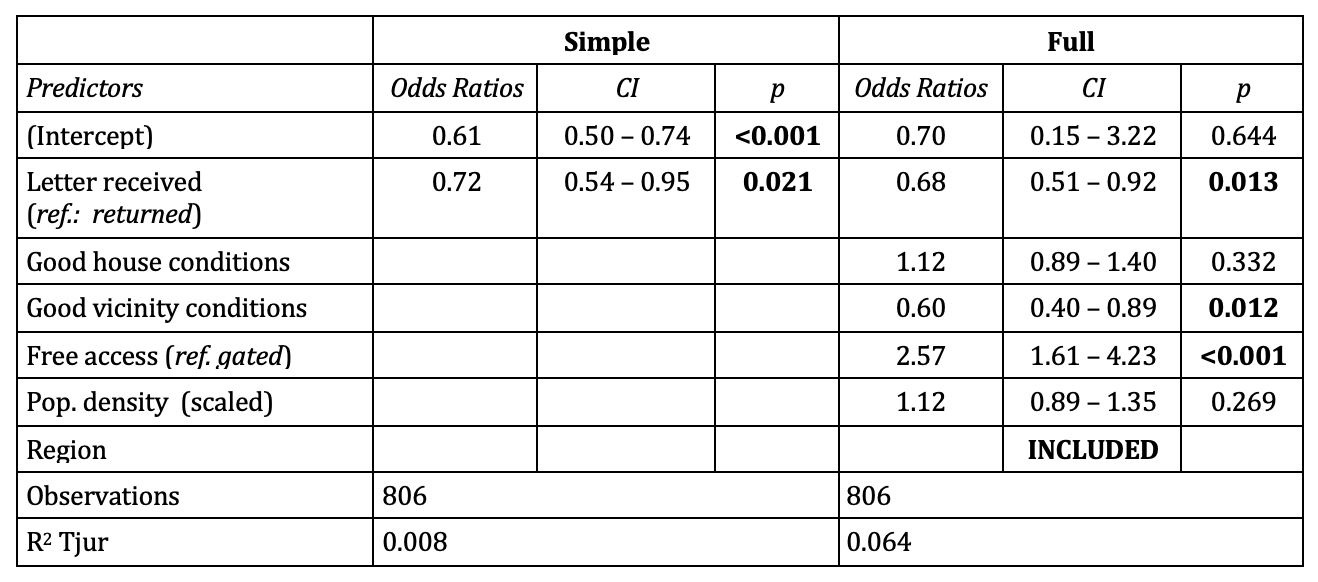

Table 3. Logistic Regression Coefficients for interview completed in the first visit

In addition to helping with survey participation, the advance letter could facilitate the contact with interviewers and encourage a timely participation. However, the data for Ireland indicates that receiving an advance letter is negatively associated with an interview in the first visit. The first model shows that residents who received the prenotice letter have 28% lower odds of completing the interview in the first visit as opposed to completing in subsequent visits or refusing to participate (OR = 0.72, 95% CI: 0.54, 0.95). The full model, including socio-economic and geo-demographic variables, suggests that the odds of a timely interview are 32% lower among those who received the advance letter (OR = 0.68, 95% CI: 0.51,0.92).

In addition, these models seem to better explain the variation in completion in the first visit than completion at any visit. Two covariates are shown to be significant in explaining this new outcome of completion in the first visit. Having a vicinity with no or only a small amount of rubbish or litter seems to reduce the likelihood of completing an interview in the first visit. At the same time, residents with free access to their front door (as opposed to having an entry phone system or locked gate before it) have 157% higher odds of being interviewed in the first visit to the address (OR = 2.57, CI: 1.61, 4.23).

Discussion

To sum up, we find evidence that receiving an advance letter is not conducive to a higher likelihood of taking part in the survey. Independent of the implications for completed interviews, the implementation of the advance letters confronted a delivery agency that was only partially cooperative. The result was a non-trivial proportion of the sample failing to receive the letter despite the costs of sending and the time used to facilitate the distribution remaining relatively fixed. In relation to our first research question, we find evidence that a one-size-fits-all approach to the use of advance letters would not apply in the Irish context.

In relation to our second question, our results suggest that in-person contact for the first contact can significantly improve the relative odds of completing a survey. Building upon earlier evidence (e.g., Morton-Williams, 1993, and Debell et al., 2020) that letters generically addressed to “The Householder” may diminish the positive effect of advance letters, our results suggest that the advance letter may have prevented the positive effect of the in-person initial contact by the interviewer at the household door. This could also explain why in those households in which the advance letter was returned there were also fewer visits. Receiving the letter may have encouraged the sampled individuals to consider their participation more carefully, resulting in a longer period for deciding to refuse or participate.

Further work should consider that the social and institutional landscape is increasingly sensitive to concerns about invasive data collection. Notably, the advance letter was accompanied by a data protection leaflet in which several aspects of data handling, privacy and confidentiality were addressed under the recently established European Union General Data Protection Regulation (GDPR). In other words, what might have laid the foundation for a successful conversion to an interview in the past might have unintended consequences in the present – at least in some contexts.

Of note, this work is suggestive, but better data are needed to draw firm conclusions. The proxy variables used for socio-economic status were based on subjective assessments from the interviewer. Variables such as population density and region were not optimal controls for the potential effect of hard-to-reach communities, where we would expect letter returns to be higher but where we could also find higher participation rates. In addition, there may be unobserved variables that are correlated with the receipt of advance letters and better explain the likelihood of taking part in the survey. Finally, lower participation rates do not necessarily have a negative impact on the quality of the survey (Vogl et al. 2019). In this sense, sending advance letters may contribute to higher quality through the participation of respondents who planned the timing of their interview and are better informed and conscious of their role as a participant (Link and Mokdad, 2005).

Overall, the results suggest that blanket assumptions about the utility of advance letters for improving response rates should be tailored to the context in which the fieldwork will be undertaken. If there is a barrier to the letter’s delivery or, more troubling, a negative interaction with general concerns over privacy, initial direct contact might be preferable – at least in terms of achieving a completed interview.

Appendices

APPENDIX A – Advance Letter – European Social Survey in Ireland, 2018

APPENDIX B – Logistic regression tables for the non-matched sample

Table B2. Logistic Regression Coefficients for completed in the first visit (non-matched sample)

References

- Berger, A., Keenan, P., & Miscione, G. (2015). A Postcode not for Post. AMCIS 2015 Proceedings. https://aisel.aisnet.org/amcis2015/BizAnalytics/GeneralPresentations/5

- Beullens, Koen, Geert Loosveldt, Caroline Vandenplas, and Ineke Stoop. 2018. ‘Response Rates in the European Social Survey: Increasing, Decreasing, or a Matter of Fieldwork Efforts?’ Survey Methods: Insights from the Field (SMIF), April. https://doi.org/10.13094/SMIF-2018-00003 .

- Black, B. S., Lalkiya, P., & Lerner, J. Y. (2020). The Trouble with Coarsened Exact Matching (SSRN Scholarly Paper ID 3694749). Social Science Research Network. https://doi.org/10.2139/ssrn.3694749

- Brunner, G. A., & Carroll, Jr., Stephen J. (1969). The Effect of Prior Notification on the Refusal Rate in Fixed Address Surveys. Journal of Advertising Research, 9(1), 42–44.

- Caliendo, M., & Kopeinig, S. (2008). Some Practical Guidance for the Implementation of Propensity Score Matching. Journal of Economic Surveys, 22(1), 31–72. https://doi.org/10.1111/j.1467-6419.2007.00527.x

- Chromy, James R., and Daniel G. Horvitz. 1978. ‘The Use of Monetary Incentives in National Assessment Household Surveys’. Journal of the American Statistical Association 73 (363): 473–78. https://doi.org/10.1080/01621459.1978.10480037 .

- Couper, Mick P., and Robert M. Groves. 1996. ‘Social Environmental Impacts on Survey Cooperation’. Quality and Quantity 30 (2): 173–88. https://doi.org/10.1007/BF00153986 .

- Debell, M., Maisel, N., Edwards, B., Amsbary, M., & Meldener, V. (2020). Improving Survey Response Rates with Visible Money. Journal of Survey Statistics and Methodology, 8(5), 821–831. https://doi.org/10.1093/jssam/smz038

- Dillman, Don A., Jean Gorton Gallegos, and James H. Frey (1976). ‘Reducing Refusal Rates for Telephone Interviews’. Public Opinion Quarterly 40 (1): 66–78. https://doi.org/10.1086/268268 .

- Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method (4th edition). Wiley.

- European Social Survey. 2018. ‘European Social Survey Round 9 Sampling Guidelines: Principles and Implementation’. https://www.europeansocialsurvey.org/docs/round9/methods/ESS9_sampling_guidelines.pdf .

- Ferguson, Stephen. 2016. The Post Office in Ireland: An Illustrated History. Newbridge, Co. Kildare, Ireland: Irish Academic Press.

- Goldstein, Kenneth M., and M. Kent Jennings. 2002. ‘The Effect of Advance Letters on Cooperation in a List Sample Telephone Survey’. The Public Opinion Quarterly 66 (4): 608–17.

- Goyder, John, Keith Warriner, and Susan Miller. 2002. ‘Evaluating Socio-Economic Status (SES) Bias in Survey Nonresponse’. Journal of Official Statistics 18 (1): 1–12.

- Heijmans, Naomi, Jan van Lieshout, and Michel Wensing. 2015. ‘Improving Participation Rates by Providing Choice of Participation Mode: Two Randomized Controlled Trials’. BMC Medical Research Methodology 15 (1): 29. https://doi.org/10.1186/s12874-015-0021-2

- Iacus, Stefano M., Gary King, and Giuseppe Porro. 2011. “Multivariate Matching Methods That Are Monotonic Imbalance Bounding.” Journal of the American Statistical Association 106 (493): 345–61. https://doi.org/10.1198/jasa.2011.tm09599.

- Kibuchi, Eliud, Patrick Sturgis, Gabriele B. Durrant, and Olga Maslovskaya. 2019. ‘Do Interviewers Moderate the Effect of Monetary Incentives on Response Rates in Household Interview Surveys?’ Journal of Survey Statistics and Methodology. https://doi.org/10.1093/jssam/smy026

- Ho, D. E., Imai, K., King, G., & Stuart, E. A. (2007). Matching as Nonparametric Preprocessing for Reducing Model Dependence in Parametric Causal Inference. Political Analysis, 15(3), 199–236. https://doi.org/10.1093/pan/mpl013

- Kaminska, O. (2020). Guide to Using Weights and Sample Design Indicators with ESS Data. https://www.europeansocialsurvey.org/docs/methodology/ESS_weighting_data_1_1.pdf

- King, Gary, and Richard Nielsen. 2019. “Why Propensity Scores Should Not Be Used for Matching.” Political Analysis 27 (4): 435–54. https://doi.org/10.1017/pan.2019.11

- Leeuw, Edith de, Mario Callegaro, Joop Hox, Elly Korendijk, and Gerty Lensvelt-Mulders. 2007. ‘The Influence of Advance Letters on Response in Telephone Surveys: A Meta-Analysis’. Public Opinion Quarterly 71 (3): 413–43. https://doi.org/10.1093/poq/nfm014

- Leeuw, Edith de, Joop Hox, and Annemieke Luiten. 2018. ‘International Nonresponse Trends across Countries and Years: An Analysis of 36 Years of Labour Force Survey Data’. Survey Methods: Insights from the Field (SMIF), December. https://doi.org/10.13094/SMIF-2018-00008

- Link, Michael W., and Ali Mokdad. 2005. ‘Advance Letters as a Means of Improving Respondent Cooperation in Random Digit Dial Studies: A Multistate Experiment’. The Public Opinion Quarterly 69 (4): 572–87.

- Luiten, A. (2011). Personalisation in advance letters does not always increase response rates. Demographic correlates in a large scale experiment. Survey Research Methods, 5(1), 11–20. https://doi.org/10.18148/SRM/2011.V5I1.3961

- Lynn, P., Turner, R., & Smith, P. (1998). Assessing the Effects of an Advance Letter for a Personal Interview Survey. Journal of the Market Research Society, 40(3). https://doi.org/10.1177/147078539804000306

- Morton-Williams, Jean. 1993. Interviewer Approaches. Aldershot, England; Brookfield, Vt., USA: Dartmouth; SCPR.

- Olson, Kristen, Jolene D. Smyth, and Heather M. Wood. 2012. ‘Does Giving People Their Preferred Survey Mode Actually Increase Survey Participation Rates? An Experimental Examination’. Public Opinion Quarterly 76 (4): 611–35. https://doi.org/10.1093/poq/nfs024

- Rosenbaum, P. R., & Rubin, D. B. (1983). The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1), 41–55. https://doi.org/10.1093/biomet/70.1.41

- Schell, C., Godinho, A., Kushnir, V., & Cunningham, J. A. (2018). To send or not to send: Weighing the costs and benefits of mailing an advance letter to participants before a telephone survey. BMC Research Notes, 11(1), 813. https://doi.org/10.1186/s13104-018-3920-6

- Singer, Eleanor, and Cong Ye. 2013. ‘The Use and Effects of Incentives in Surveys’. Annals of the American Academy of Political and Social Science 645: 112–41.

- Stoop, Ineke A. L., Jaak Billiet, Achim Koch, and Rory Fitzgerald, eds. 2010. Improving Survey Response: Lessons Learned from the European Social Survey. Wiley Series in Survey Methodology. Chichester: Wiley.

- Tourangeau, R. (2004). Survey Research and Societal Change. Annual Review of Psychology, 55(1), 775–801. https://doi.org/10.1146/annurev.psych.55.090902.142040

- Traugott, Michael W., Robert M. Groves, and James M. Lepkowski. 1987. ‘Using Dual Frame Designs to Reduce Nonresponse in Telephone Surveys’. Public Opinion Quarterly 51 (4): 522–39. https://doi.org/10.1086/269055

- Vogl, S., Parsons, J. A., Owens, L. K., & Lavrakas, P. J. (2019). Experiments on the Effects of Advance Letters in Surveys. In P. Lavrakas, M. Traugott, C. Kennedy, A. Holbrook, E. de Leeuw, & B. West (Eds.), Experimental Methods in Survey Research (1st ed., pp. 89–110). Wiley. https://doi.org/10.1002/9781119083771.ch5