A Multimode Strategy to Contact Participants and Collect Responses in a Supplement to a Longitudinal Household Survey

Field report

Kistler, A., Decker, S., Steiger, D., Novik, J. 2023. “A Multimode Strategy to Contact Participants and Collect Responses in a Supplement to a Longitudinal Household Survey”. Survey Methods: Insights From the Field. Retrieved from https://surveyinsights.org/?p=18357

© the authors 2024. This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0)

Abstract

The Agency for Healthcare Research and Quality’s (AHRQ’s) annual Medical Expenditure Panel Survey (MEPS) collects data on Americans’ health care expenditures and use. To understand connections between these topics and social determinants of health, AHRQ and Westat conducted a new MEPS supplemental study in 2021 using a multimode (web and paper) instrument; all previous MEPS supplements had been administered only as paper questionnaires. Because some questions were sensitive, participants were encouraged to complete the web survey but had the option of responding on paper. Response was encouraged through a multimode contact strategy that used text messages, emails, and/or mailings, depending on whether householders’ email addresses and mobile phone numbers (with permission to send texts) were collected during the core MEPS interview. This paper reviews the protocol for encouraging web response and the response rates achieved with the various contact modes. The overall unweighted response rate was 74.2%, with 69.3% of responses submitted via web. Response rates were highest among adults for whom both an email address and a mobile phone number were provided (85.5%).

Keywords

contact protocol, longitudinal research, MEPS, multimode, self-administered questionnaire, social determinants of health, web survey

Acknowledgement

This work was supported with partial funding from the Assistant Secretary for Planning and Evaluation (ASPE).

Disclaimer: The views expressed in this manuscript are those of the authors and do not necessarily reflect the views of the Agency for Healthcare Research and Quality or the U.S. Department of Health and Human Services.

Introduction

The Agency for Healthcare Research and Quality’s (AHRQ’s) Medical Expenditure Panel Survey (MEPS) has provided information about the United States’ health care system since 1996[1]. Data are collected through in-person contact with overlapping panels of households. Panels of approximately 10,000 households are interviewed five times over 2.5 years; however, during the COVID-19 pandemic, participation for certain panels was extended to nine interviews over 4.5 years. One household respondent (HHR) responds for all members of the household. MEPS also routinely asks other adult householders (non-HHRs) to complete additional self-administered questionnaires (SAQs) designed to broaden the scope of information collected while minimizing HHR burden.

MEPS regularly asks about many individual characteristics considered to be correlated with social determinants of health (SDoH), including measures of education, income, and race/ethnicity. However, AHRQ recently developed a new supplemental SDoH SAQ addressing social and behavioral factors that have been found to be significantly related to health (Knighton et al. 2018) but are not covered by the core MEPS survey. These topics include variables measuring housing quality, neighborhood characteristics, financial strain, social connectedness, experiences of discrimination or physical or social violence, and adverse childhood experiences[2].

Some of these factors are sensitive, so one challenge was maintaining data privacy for respondents in multi-person households, where other household members could easily review a completed paper survey before it was mailed back or picked up by the field interviewer. Another key challenge was to deploy a data collection protocol that would maximize response without jeopardizing the household’s future participation in MEPS. To address these challenges, the new SAQ used both a multimode contact strategy and a multimode instrument, both novel approaches for MEPS. This paper reviews the multimode contact protocol used to encourage web response and the response rates for the various contact modes.

Background

SAQs are often the preferred mode for collecting data on sensitive topics. There is growing evidence that web-based questionnaires may increase responses to sensitive questions and decrease bias (De Leeuw and Hox 2011; Burkill et al. 2016; Kays et al. 2012). Paper-based surveys may introduce response bias through “bystander effects” (concerns that others will see survey responses if the paper questionnaire is not collected or mailed immediately). Web-based surveys can produce greater self-disclosure on sensitive topics than paper surveys because they are perceived as more anonymous, creating a greater illusion of privacy (Gnambs and Kaspar 2015).

Despite these advantages, research suggests that paper-based surveys still yield higher response rates than web-based surveys (Shih and Fan 2008; Ebert et al. 2018). However, a multimode approach, in which both paper and web are available as modes of response, can provide a balance of cost effectiveness, response, and disclosure of sensitive information (Biemer et al. 2018).

In a multimode study, a sequential design is often used: initial mailings encourage web participation, and only nonrespondents are later provided with a paper version of the survey. There is evidence that, compared with a single-mode approach, a multimode sequential approach can produce relatively high response rates, improve representativeness, and lower data collection costs (Messer and Dillman 2011).

Many sample lists may only include a single type of contact information (such as a mailing or email address). However, if more than one type of contact information is available, inviting participation using multiple modes increases the chances that sample cases receive and attend to the request for participation (De Leeuw 2018; Tourangeau 2017; Olson et al. 2021; Dillman, et al. 2014).

Methods

Traditionally, the MEPS annual Adult SAQ and other periodic MEPS SAQs on special topics have been administered as paper questionnaires for the HHR and other adults within the household to complete independently. The SAQs are either completed while the field interviewer (FI) is in the household, or the FI leaves them behind for individuals to complete at their convenience. Because of the sensitive nature of some questions in the SDoH SAQ, especially measures of physical and social violence and adverse childhood experiences, web completion was selected as the primary mode of data collection for this supplement. A paper copy was provided to those with barriers to internet access, as well as to web nonrespondents[3]. To encourage web response, the methods of inviting and reminding potential respondents to participate in the SDoH SAQ had to be adjusted from standard MEPS procedures. To make the survey easier to access, we preferred to send respondents an electronic link to the survey website by email or text message.

The SDoH SAQ was administered in English and Spanish to eligible MEPS adults[4]. The multimode phase of the study was fielded during the first half of 2021, with an additional phase of paper nonresponse follow-up during the second half of 2021. Respondents were promised a $20 incentive upon completion in either survey mode, to encourage participation and to help offset any perceived burden of being asked to respond in a different mode (web) from other MEPS tasks.

At the time the SDoH SAQ was administered, MEPS was fielding four panels of households (Panels 23-26), each at a different stage of participation. For example, Panel 26 was participating in its first interview, while Panel 23 was participating in its seventh interview. Because of the COVID-19 pandemic, MEPS allowed a much larger proportion of interviews to be conducted by telephone rather than in person[5]. During the core MEPS interview, contact information was requested for adults eligible to complete the SDoH SAQ using the procedures described below.

Determination of Respondent Contact Mode and Method of Inviting Response

To send electronic links, we asked the MEPS HHR to provide an email address and/or mobile phone number (and, if a mobile number was provided, permission to text) for each adult in the household. For adults for whom no email address or mobile number was provided, HHRs were asked whether that adult had internet access, to determine whether the person would be able to access the web version of the survey.

Adults for whom an email address or mobile number was provided were sent an electronic link to the survey via email or text message. If no email address or mobile number had been provided but the HHR indicated that the adult had internet access, the website address and a unique access code were printed in a letter mailed to that adult. For all others, the FI left a paper copy of the survey. These adults were not given the website address or an access code, so they did not have the opportunity to complete a web survey. In effect, the SDoH SAQ employed a sequential design: each person was initially offered either the web or the paper mode, depending on the information available, and paper follow-up was used in the event of nonresponse in either mode.
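The routing rules above amount to a small decision procedure. The Python sketch below is illustrative only and is not MEPS production code: `ContactCategory` and `classify_adult` are hypothetical names, the five categories follow the classification summarized in Table 1, and the sketch assumes that a mobile number counts as a text contact only when the HHR also gave permission to text.

```python
from enum import Enum
from typing import Optional


class ContactCategory(Enum):
    """Five contact/response categories, numbered as in Table 1."""
    EMAIL_AND_MOBILE = 1   # web response planned
    EMAIL_ONLY = 2         # web response planned
    MOBILE_ONLY = 3        # web response planned
    PUSH_TO_WEB = 4        # letter with website address and access code
    PAPER_ONLY = 5         # paper questionnaire left by the FI


def classify_adult(email: Optional[str],
                   mobile: Optional[str],
                   text_permission: bool,
                   has_internet: Optional[bool]) -> ContactCategory:
    """Assign an eligible adult to a contact category from HHR-reported data.

    Assumption (hypothetical rule): a mobile number is usable only with
    permission to text. has_internet is None when refused or unknown,
    which routes the adult to paper.
    """
    has_email = bool(email)
    has_mobile = bool(mobile) and text_permission
    if has_email and has_mobile:
        return ContactCategory.EMAIL_AND_MOBILE
    if has_email:
        return ContactCategory.EMAIL_ONLY
    if has_mobile:
        return ContactCategory.MOBILE_ONLY
    # No electronic contact: the internet-access question decides the route.
    if has_internet:
        return ContactCategory.PUSH_TO_WEB
    return ContactCategory.PAPER_ONLY
```

Checking email and mobile together first keeps the "both" category from being swallowed by the single-mode branches.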

Communicating with Respondents to Maximize Both Overall and Web Response

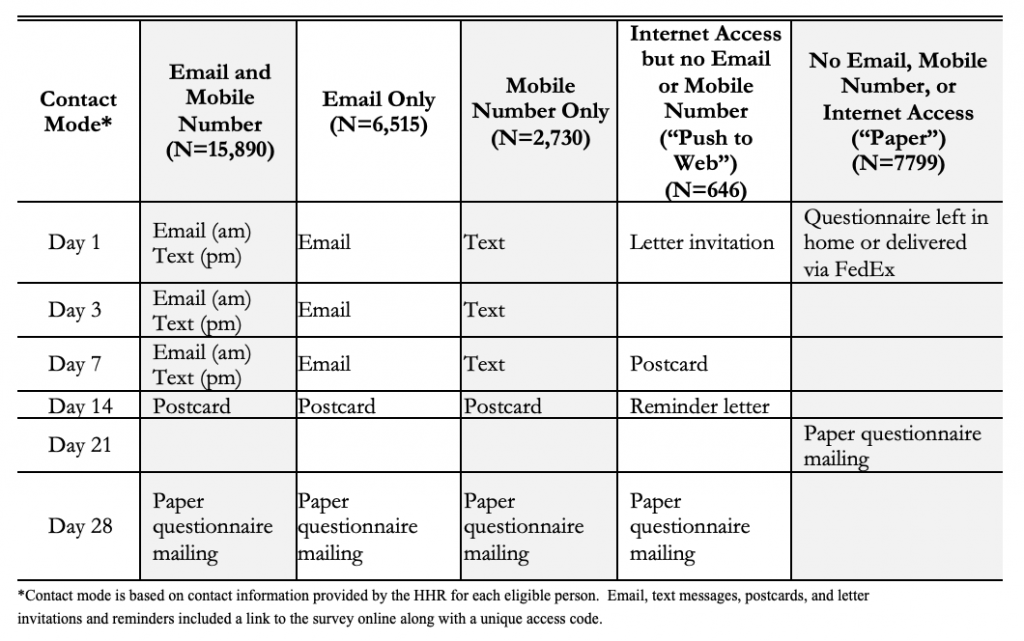

Each person received their survey invitation and reminders through the mode or combination of modes for which contact information was provided. For example, if both an email address and a mobile number with permission to text were provided, that person received both an email and a text message at each prompt. Table 1 summarizes the communication protocol, which classified adults into five categories of contact mode and possible response mode based on information provided by the HHR: (1) both email address and mobile phone number provided (web response planned); (2) only email address provided (web response planned); (3) only mobile phone number provided (web response planned); (4) internet access available, although no email address or mobile phone number was provided (“push to web”: web response sought); and (5) no email address or mobile phone number provided and internet access unavailable or refused (“paper only”). Adults with a reported email address and/or mobile phone number received an electronic invitation one day after the HHR completed the MEPS core interview, followed by nonresponse reminders on days 3 and 7. Nonrespondents were mailed a postcard reminder on day 14; if there was still no response by day 28, a paper version of the questionnaire was mailed.

Table 1. SDoH Communication Protocol (Spring Cycle)

For adults with no email address or mobile number recorded but with internet access, we mailed an invitation letter with the survey website address and a unique access code, followed by a reminder postcard on day 7, a reminder letter on day 14, and a final mailing with the paper questionnaire on day 28. Adults who initially received a paper copy of the questionnaire and did not respond were mailed a replacement paper questionnaire after 21 days.

Finally, any adult who did not respond to the survey during the Spring cycle (January through June of 2021) was provided a final opportunity to respond using a paper questionnaire distributed by the FI during the core MEPS interview conducted as part of the Fall data collection cycle (July through December of 2021).
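The Spring-cycle timing can be written down as a schedule table. In this minimal sketch, days are counted from the HHR's completion of the core interview; the report does not state exactly when push-to-web invitation letters were mailed, so day 0 is an assumed placeholder for that track (and for the paper copy left by the FI). `SPRING_SCHEDULE` and `due_contacts` are hypothetical names, and the Fall follow-up by the FI is not modeled.

```python
from typing import Dict, List, Tuple

# (day, action) pairs; day 0 = HHR completes the core MEPS interview.
SPRING_SCHEDULE: Dict[str, List[Tuple[int, str]]] = {
    "electronic": [            # categories 1-3: email and/or text at each prompt
        (1, "electronic invitation"),
        (3, "electronic reminder"),
        (7, "electronic reminder"),
        (14, "postcard reminder"),
        (28, "paper questionnaire mailed"),
    ],
    "push_to_web": [           # category 4
        (0, "invitation letter with website address and access code"),  # day assumed
        (7, "reminder postcard"),
        (14, "reminder letter"),
        (28, "paper questionnaire mailed"),
    ],
    "paper_only": [            # category 5
        (0, "paper questionnaire left by FI"),
        (21, "replacement paper questionnaire mailed"),
    ],
}


def due_contacts(track: str, day: int, responded: bool) -> List[str]:
    """Actions scheduled for `day`; follow-ups go only to nonrespondents."""
    steps = SPRING_SCHEDULE[track]
    first_day = steps[0][0]
    return [action for d, action in steps
            if d == day and (d == first_day or not responded)]
```

For example, `due_contacts("electronic", 14, responded=False)` returns the postcard reminder, while the same call with `responded=True` returns an empty list.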

The remainder of this paper reports the number of eligible adults contacted by each contact mode, the number responding in each survey mode, and the time until survey response. Associations between respondent characteristics and both the overall response rate and the probability of responding via web were modeled using a generalized linear model (GLM) with a logit link function. Respondent characteristics included mode of contact, whether the respondent was the HHR, age group, gender, marital status, race/ethnicity, region, and round.
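Tables 2 and 3 report average marginal effects (AMEs) from these logit models. The report does not say which software or marginal-effects convention was used; the sketch below assumes the common discrete-change definition for 0/1 indicators, in which each adult's predicted probability is computed with the indicator switched on and off and the differences are averaged. It takes already-estimated coefficients as input (model fitting is not shown), and all coefficient names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def logistic(z: float) -> float:
    """Inverse of the logit link: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(coefs: Dict[str, float], row: Dict[str, float]) -> float:
    """Predicted probability; 'const' is the intercept, absent covariates count as 0."""
    z = coefs["const"] + sum(
        b * row.get(name, 0.0) for name, b in coefs.items() if name != "const"
    )
    return logistic(z)


def average_marginal_effect(coefs: Dict[str, float],
                            rows: List[Dict[str, float]],
                            dummy: str,
                            siblings: Sequence[str] = ()) -> float:
    """Discrete-change AME of a 0/1 indicator, in probability units.

    `siblings` lists the other indicators of the same categorical variable
    (e.g., other contact modes); they are zeroed so that `dummy` is compared
    with the reference level.
    """
    diffs = []
    for row in rows:
        base = {**row, **{s: 0.0 for s in siblings}}
        p1 = predict(coefs, {**base, dummy: 1.0})
        p0 = predict(coefs, {**base, dummy: 0.0})
        diffs.append(p1 - p0)
    return sum(diffs) / len(diffs)
```

Multiplying the result by 100 expresses it in percentage points, the unit used in Tables 2 and 3.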

Findings

During the Spring 2021 cycle, 33,580 adult householders from 19,393 households were invited to participate in the SDoH SAQ. A total of 24,924 survey responses were received across the initial Spring invitation cycle (88.0% of responses) and the Fall follow-up cycle (12.0% of responses). During the Spring cycle, 17,260 web responses (69.3% of the total) and 4,663 paper questionnaires (18.7% of the total) were received. An additional 3,001 paper questionnaires (12.0% of the total) were collected during the Fall nonresponse follow-up phase.
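The counts above can be checked directly. This short sketch reproduces the percentages in this section and the overall unweighted response rate, treating all 24,924 responses as completed or partial questionnaires and all 33,580 invited adults as eligible; under that assumption, the AAPOR RR2 calculation cited in the endnotes reduces to responses divided by invited eligible adults.

```python
# Counts reported for the SDoH SAQ (Spring and Fall 2021 combined).
INVITED_ADULTS = 33_580
WEB_SPRING = 17_260
PAPER_SPRING = 4_663
PAPER_FALL = 3_001


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * part / whole, 1)


responses = WEB_SPRING + PAPER_SPRING + PAPER_FALL   # 24,924
# Completes plus partials over all invited eligible adults (unweighted).
response_rate = pct(responses, INVITED_ADULTS)
shares = {
    "web (Spring)": pct(WEB_SPRING, responses),
    "paper (Spring)": pct(PAPER_SPRING, responses),
    "paper (Fall)": pct(PAPER_FALL, responses),
    "Spring cycle": pct(WEB_SPRING + PAPER_SPRING, responses),
}
print(responses, response_rate, shares)
```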

Provision of Contact Information

HHRs provided both a mobile number and an email address for nearly half[6] of eligible adults across all households (47.3%). The HHR provided only an email address for 19.4% of eligible adults and only a mobile number for 8.1%. For fewer than 2% of eligible adults, the HHR reported internet access but provided no email or mobile contact information; these adults were sent letters with the website address and a unique access code. Finally, nearly one-quarter (23.2%) of eligible adults received only a paper questionnaire because no electronic contact information or internet access was reported.

Provision of contact information was consistent across most of the longitudinal panels of MEPS. However, HHRs participating in MEPS for the first time (Panel 26) were significantly less likely to provide both a mobile number and an email address for eligible adults (42.9%) than those in their third (48.3%), fifth (49.2%), or seventh (50.8%) round. This is likely because we had not yet built enough trust with these new households for HHRs to be willing to share contact information for other adults in the household.

Response Rates and Mode of Response

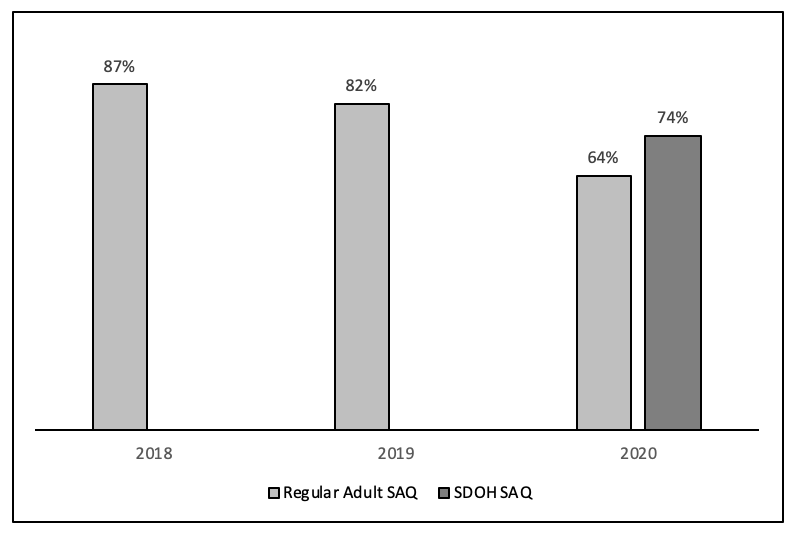

The overall unweighted response rate for the SDoH SAQ was 74.2%, calculated by adding completed and partial interviews and dividing by the total sample size of eligible adults who were invited to participate in the study[7]. Figure 1 depicts recent response rates for the regular MEPS SAQ (response rates computed by the authors) compared to the MEPS SDoH SAQ.

Figure 1. Trends in MEPS SAQ Supplement Unweighted Response Rates, 2018-2020[8]

Despite the sensitive nature of some questions in the SDoH SAQ, its 74.2% response rate, although lower than the rates for the regular Adult SAQ in pre-COVID years, was considerably higher than the 64% Adult SAQ response rate in 2020. This may reflect several factors, including the $20 incentive provided upon completion, the multimode contact strategy, and the offer of multiple response modes. As shown in Figure 2, offering the web option also yielded faster response: during the Spring cycle, approximately 90% of web responses were collected within 14 days, compared with about 18% of paper responses. During the Fall data collection cycle, when only paper nonresponse follow-up took place, about 40% of SAQs were completed by day 14.

Figure 2. Number of days to complete the web or paper SDoH SAQ

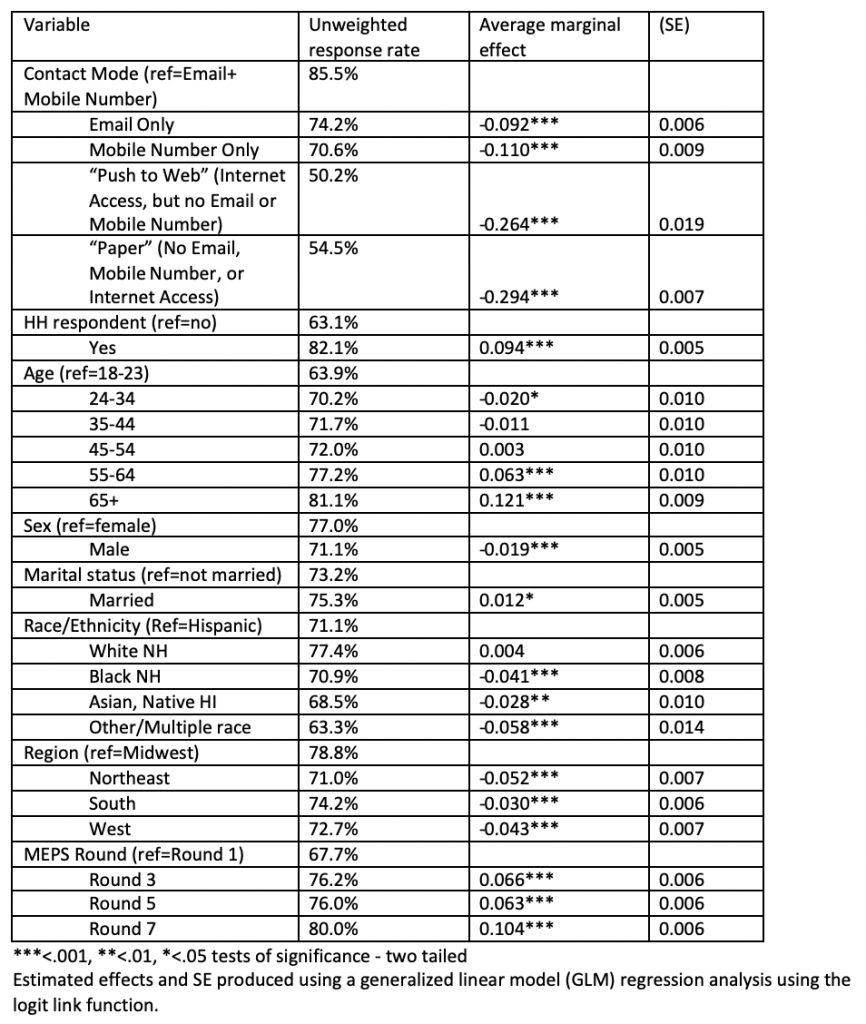
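Figure 2 is essentially a cumulative distribution of days to completion by mode. The report does not publish respondent-level completion times, so the sketch below uses made-up values purely to show the calculation; `share_within` and `cumulative_curve` are hypothetical helper names.

```python
from bisect import bisect_right
from typing import List, Sequence


def share_within(days_to_complete: Sequence[int], cutoff: int) -> float:
    """Fraction of responses completed on or before `cutoff` days."""
    ordered = sorted(days_to_complete)
    return bisect_right(ordered, cutoff) / len(ordered)


def cumulative_curve(days_to_complete: Sequence[int], max_day: int) -> List[float]:
    """Cumulative share of responses completed by each day 0..max_day."""
    ordered = sorted(days_to_complete)
    n = len(ordered)
    return [bisect_right(ordered, day) / n for day in range(max_day + 1)]


# Made-up completion times (days after invitation), for illustration only.
web_days = [1, 1, 2, 3, 5, 8, 13, 20]
print(share_within(web_days, 14))
```

Sorting once and using binary search keeps each cutoff lookup cheap when a full curve is plotted for each mode.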

Table 2 presents both mean response rates by respondent characteristic (first column) and the results of a regression analysis modeling the association between each characteristic and the response rate, controlling for the other characteristics. Looking at the means, HHRs appeared more likely to respond (82.1%) than other household adults (63.1%), and the regression results uphold this finding: adjusting for other characteristics, HHRs were 9.4 percentage points more likely to respond to the survey than other adults. This is a 14.9% increase relative to the mean response rate of 63.1% for non-HHR adults.

Response rates also varied significantly by mode(s) of contact (p<.001). Relative to adults who could be contacted by both email and mobile phone, those contacted via email only, mobile phone only, push to web, or paper only were 9.2, 11.0, 26.4, and 29.4 percentage points less likely to respond to the survey, respectively. These are reductions of 10.8%, 12.9%, 30.9%, and 34.4%, respectively, relative to the mean response rate (85.5%) for adults with both an email address and a mobile phone number.

Older adults were significantly more likely to respond than younger adults: adults aged 55-64 and 65+ were 6.3 and 12.1 percentage points (9.9% and 18.9%) more likely to respond to the SAQ than adults aged 18-23. Males were less likely to respond than females, but the marginal effect was relatively small (1.9 percentage points, or 2.5%). Married adults were significantly more likely to respond than adults who were not married (including never married, widowed, divorced, and separated), but the marginal effect was also small (1.2 percentage points, or 1.6%). White and Hispanic adults were equally likely to respond, but adults of other racial/ethnic backgrounds were less likely to respond than white and Hispanic adults. Those living in the Midwest were significantly more likely to respond than those living elsewhere. Finally, adults in later rounds of MEPS were significantly more likely to respond than those in households in their first MEPS round. Relative to round 1, adults in rounds 3, 5, and 7 were 6.6, 6.3, and 10.4 percentage points more likely to respond, respectively; compared with the round 1 mean response rate of 67.7%, these are increases of 9.7%, 9.3%, and 15.4%. This finding likely underscores the importance of establishing trust with respondents over time.
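The relative percentages quoted alongside each marginal effect divide the effect (in percentage points) by the reference group's mean rate. The small helper below (a hypothetical name) reproduces several of the reported figures from values given in the text.

```python
def relative_change(ame_points: float, reference_rate: float) -> float:
    """Marginal effect in percentage points, as a percent of the reference group's mean rate."""
    return round(100 * ame_points / reference_rate, 1)


# HHR vs. non-HHR adults (reference mean 63.1%).
print(relative_change(9.4, 63.1))  # 14.9
# Contact modes vs. the email-and-mobile group (reference mean 85.5%).
print([relative_change(-pp, 85.5) for pp in (9.2, 11.0, 26.4, 29.4)])
# Rounds 3, 5, and 7 vs. round 1 (reference mean 67.7%).
print([relative_change(pp, 67.7) for pp in (6.6, 6.3, 10.4)])
```

Because each ratio uses its own reference group, relative changes for different variables are not directly comparable even when the percentage-point effects are similar.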

Table 2. Estimated average marginal effects and standard errors from model to predict unweighted response to SDoH

Overall, 69.3% of responses were submitted via web. Among respondents with internet access, who therefore had the opportunity to respond via web, 83.7% responded via web. For these adults, Table 3 reports the mean percent responding via web by respondent characteristic (first column) and the adjusted association between each characteristic and the probability of responding via web, again estimated with a logit GLM. Web response was significantly higher when both an email address and a mobile phone number were used for contact than when only email, only a mobile phone number, or only “push to web” invitation letters were used. The estimated associations were substantial. For example, respondents for whom only a mobile number was provided were 21.8 percentage points less likely to respond via web than those for whom both a mobile number and an email address were provided, a 24.5% reduction relative to the mean percent responding via web in that reference group (89.1%). Those with internet access but for whom no mobile number or email address was provided were 30.5 percentage points (34.2%) less likely to respond via web than those for whom both were provided.

Young adults aged 18-23 were significantly more likely to respond by web than any other age group; adults aged 65+ were 9.6 percentage points (11.5%) less likely to respond by web than those aged 18-23. Females were significantly more likely than males to respond by web (by 3.3 percentage points, or 3.9%). Black and Hispanic adults were less likely than others to respond via web; for example, non-Hispanic white adults were 8.8 percentage points (11.3%) more likely than Hispanic adults to respond via web. Those living in the Northeast were significantly more likely to respond by web than those in the Midwest. Finally, adults in later rounds of their MEPS panels were significantly more likely than those in their first round to respond via web.

Table 3. Estimated average marginal effects and standard errors of model to predict web as mode of response to SDoH

Discussion

The MEPS SDoH SAQ was the first MEPS supplement to offer multiple modes for respondent contact, communication, and response, obtaining an overall response rate of 74.2%. This response rate was higher than those for other MEPS SAQs conducted during the pandemic period (without an incentive), despite the inclusion of some potentially sensitive questions in the SDoH SAQ. The results suggest that adults receiving survey communications by both email and text were more likely to respond than adults who could be contacted by only one method. We observed that HHRs participating in early rounds of MEPS were less willing to provide email and mobile contact information for other adults in their household. It is possible that increased trust in MEPS over successive rounds, in addition to a promised incentive and multiple contact attempts, contributed to the propensity to respond. Because there was no control group for comparison, it is difficult to know how much of the response rate is attributable to offering multiple modes for completion, multiple reminders, or an incentive paid upon completion. However, the results suggest that offering both web and paper response options with a promised incentive could help to encourage response, perhaps especially during periods that are otherwise challenging for data collection. The finding that older adults were less likely to complete the SDoH SAQ via web is consistent with prior research (Braekman et al. 2019; Lynn 2020). More generally, the finding that response, and especially web response, varied significantly by personal characteristics such as age and race/ethnicity points to the continued need to offer a paper response option.

Although AHRQ originally planned to field the SDoH SAQ only in 2021, a small subset of the questions was fielded as part of the MEPS Adult SAQ in 2022 and it is hoped that more SDoH items will be re-fielded eventually, perhaps rotating subsets of the questions in different years. The opportunity to observe how responses to the SDoH questions differ in pandemic and post-pandemic periods may help researchers study the effects of the pandemic. Future research could also explore the possibility of mode effects in substantive responses between paper and web response. The responses to questions in the SDoH SAQ will also allow researchers, policy makers, and providers to explore the relationships between the many social and behavioral factors in the SDoH instrument and measures of health status, the use of health care, and health spending in MEPS both during and after the pandemic.

Further, due to the success of the multimode SDoH SAQ, MEPS switched to a multimode approach for the annual Adult SAQ in Fall 2023. This supplemental data collection will be modeled after the SDoH multimode approach, but will not offer an incentive. This protocol may help clarify to what extent the higher response rate for the SDoH compared to recent response rates for the annual SAQ was due to the incentive.

Endnotes

[1] See additional information at https://meps.ahrq.gov/survey_comp/survey.jsp

[2] See Appendix 1 for the full set of SDoH measures and Appendix 2 for sources.

[3] Hotline assistance numbers were provided at the end of the survey due to the inclusion of some sensitive topics.

[4] MEPS has complex rules for who is considered an eligible adult for inclusion in survey supplements. For more details, see Methodology Report #33: Sample Designs of the Medical Expenditure Panel Survey Household Component, 1996-2006 and 2007-2016 (available at ahrq.gov).

[5] For more details on the impact of COVID on the conduct of MEPS, see https://ajph.aphapublications.org/doi/full/10.2105/AJPH.2021.306534.

[6] All estimates in this paper are unweighted.

[7] Weights will eventually be created by AHRQ to adjust for differential response rates by observed characteristics.

[8] Response rates calculated using AAPOR Response Rate 2 (RR2).

Appendix 1. MEPS Social and Health Experiences Self-Administered Questionnaire (SAQ): Item #, Question Text, and Response Categories

Appendix 2. Sources for Questions in the MEPS Social and Health Experiences Self-Administered Questionnaire (SAQ)

References

- Biemer, Paul P., Joe Murphy, Stephanie Zimmer, Chip Berry, Grace Deng, and Katie Lewis. 2018. “Using Bonus Monetary Incentives to Encourage Web Response in Mixed-Mode Household Surveys,” Journal of Survey Statistics and Methodology, 6: 240-261. https://doi.org/10.1093/jssam/smx015

- Braekman, Elise, Sabine Drieskens, Rana Charafeddine, Stefaan Demarest, Finaba Berete, Lydia Gisle, Jean Tafforeau, Johan Van der Heyden, and Guido Van Hal. 2019. “Mixing Mixed-Mode Designs in a National Health Interview Survey: A Pilot Study to Assess the Impact on the Self-Administered Questionnaire Non-Response,” BMC Medical Research Methodology, 19: 1-10. https://doi.org/10.1186/s12874-019-0860-3

- Burkill, Sarah, Andrew Copas, Mick P. Couper, Soazig Clifton, Philip Prah, Jessica Datta, Frederick Conrad, Kaye Wellings, Anne M. Johnson, and Bob Erens. 2016. “Using the Web to Collect Data on Sensitive Behaviours: A Study Looking at Mode Effects on the British National Survey of Sexual Attitudes and Lifestyles,” PLoS One, 11(2). https://doi.org/10.1371/journal.pone.0147983

- De Leeuw, Edith D. 2018. “Mixed Mode: Past, Present, and Future,” Survey Research Methods 12(2): 75-89. https://doi.org/10.18148/srm/2018.v12i2.7402

- De Leeuw, Edith D. and Joop J. Hox. 2011. “Internet Surveys as Part of a Mixed-Mode Design” In Das, M., P. Ester, and L. Kaczmirek, editors. Social and Behavioral Research and the Internet: Advances in Applied Methods and New Research Strategies. New York: Routledge, Taylor and Francis Group, 45-76. http://dx.doi.org/10.4324/9780203844922-3

- Dillman, Don A., Jolene D. Smyth, and Leah Melani Christian. 2014. “Internet, phone, mail, and mixed-mode surveys: The tailored design method.” John Wiley & Sons. https://www.wiley.com/en-us/Internet,+Phone,+Mail,+and+Mixed+Mode+Surveys:+The+Tailored+Design+Method,+4th+Edition-p-9781118456149

- Ebert, Jonas Fynboe, Linda Huibers, Bo Christensen, and Morten Bondo Christensen. 2018. “Paper- or Web-Based Questionnaire Invitations as a Method for Data Collection: Cross-Sectional Comparative Study of Differences in Response Rate, Completeness of Data, and Financial Cost,” Journal of Medical Internet Research, 20(1): e24. https://doi.org/10.2196/jmir.8353

- Gnambs, Timo and Kai Kaspar. 2015. “Disclosure of Sensitive Behaviors Across Self-Administered Survey Modes: A Meta-Analysis,” Behavior Research Methods, 47(4): 1237-1259. https://doi.org/10.3758/s13428-014-0533-4

- Kays, Kristina, Kathleen Gathercoal, and William Buhrow. 2012. “Does survey format influence self-disclosure on sensitive question items?” Computers in Human Behavior, 28(1): 251-256. https://doi.org/10.1016/j.chb.2011.09.007

- Knighton, Andrew J., Brad Stephenson, and Lucy A. Savitz. 2018. “Measuring the Effect of Social Determinants on Patient Outcomes: A Systematic Literature Review,” Journal of Health Care for the Poor and Underserved, 29(1): 81-106. https://doi.org/10.1353/hpu.2018.0009

- Lynn, Peter. 2020. “Evaluating Push-to-Web Methodology for Mixed-Mode Surveys Using Address-Based Samples,” Survey Research Methods, 14(1): 19-30. https://doi.org/10.18148/srm/2020.v14i1.7591

- Messer, Benjamin L. and Don A. Dillman. 2011. “Surveying the General Public over the Internet Using Address-Based Sampling and Mail Contact Procedures,” Public Opinion Quarterly, 75(3): 429-457. https://doi.org/10.1093/poq/nfr021

- Olson, Kristen, Jolene D. Smyth, Rachel Horwitz, Scott Keeter, Virginia Lesser, Stephanie Marken, Nancy A. Mathiowetz, Jackie S. McCarthy, Eileen O’Brien, Jean D. Opsomer, and Darby Steiger. 2021. “Transitions from telephone surveys to self-administered and mixed-mode surveys: AAPOR task force report.” Journal of Survey Statistics and Methodology, 9(3): 381-411. https://doi.org/10.1093/jssam/smz062

- Shih, Tse-Hua and Xitao Fan. 2008. “Comparing Response Rates from Web and Mail Surveys: A Meta-Analysis,” Field Methods, 20(3): 249-271. https://doi.org/10.1177/1525822X08317085

- Tourangeau, Roger. 2017. “Mixing Modes.” In Total Survey Error in Practice, 115-132. John Wiley & Sons. https://doi.org/10.1002/9781119041702.ch6